Lloyd-Lipow: Difference between revisions

| (32 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

{{template:RGA BOOK|5|Lloyd-Lipow}} | {{template:RGA BOOK|3.5|Lloyd-Lipow}} | ||

Lloyd and Lipow (1962) considered a situation in which a test program is conducted in | Lloyd and Lipow (1962) considered a situation in which a test program is conducted in <math>N\,\!</math> stages. Each stage consists of a certain number of trials of an item undergoing testing, and the data set is recorded as successes or failures. All tests in a given stage of testing involve similar items. The results of each stage of testing are used to improve the item for further testing in the next stage. For the <math>{{k}^{th}}\,\!</math> group of data, taken in chronological order, there are <math>{{n}_{k}}\,\!</math> tests with <math>{{S}_{k}}\,\!</math> observed successes. The reliability growth function is then given in Lloyd and Lipow [[RGA_References|[6]]]: | ||

:<math>{{R}_{k}}={{R}_{\infty }}-\frac{\alpha }{k}\,\!</math> | |||

where: | where: | ||

*<math>R_k =\,\!</math> the actual reliability during the <math>k^{th}\,\!</math> stage of testing | |||

*<math>R_{\infty} =\,\!</math> the ultimate reliability attained if <math>k\to{\infty}\,\!</math> | |||

*<math>\alpha>0 =\,\!</math> modifies the rate of growth | |||

Note that essentially, <math>{{R}_{k}}=\tfrac{{{S}_{k}}}{{{n}_{k}}}\,\!</math>. If the data set consists of reliability data, then <math>{{S}_{k}}\,\!</math> is assumed to be the observed reliability given and <math>{{n}_{k}}\,\!</math> is considered 1. | |||

Note that essentially, | |||

==Parameter Estimation== | ==Parameter Estimation== | ||

When analyzing reliability data in the RGA software, you have the option to enter the reliability values in percent or in decimal format. However, <math>{{\hat{R}}_{\infty }}\,\!</math> will always be returned in decimal format and not in percent. The estimated parameters in the RGA software are unitless. | |||

===Maximum Likelihood Estimators===<!-- THIS SECTION HEADER IS LINKED FROM ANOTHER SECTION IN THIS PAGE. IF YOU RENAME THE SECTION, YOU MUST UPDATE THE LINK(S). --> | |||

For the <math>{{k}^{th}}\,\!</math> stage: | |||

===Maximum Likelihood Estimators=== | |||

For the | |||

:<math>{{L}_{k}}=const.\text{ }R_{k}^{{{S}_{k}}}{{(1-{{R}_{k}})}^{{{n}_{k}}-{{S}_{k}}}}\,\!</math> | |||

And assuming that the results are independent between stages: | And assuming that the results are independent between stages: | ||

:<math>L=\underset{k=1}{\overset{N}{\mathop \prod }}\,R_{k}^{{{S}_{k}}}{{(1-{{R}_{k}})}^{{{n}_{k}}-{{S}_{k}}}}\,\!</math> | |||

Then taking the natural log gives: | Then taking the natural log gives: | ||

::<math>\Lambda =\underset{k=1}{\overset{N}{\mathop \sum }}\,{{S}_{k}}\ln \left( {{R}_{\infty }}-\frac{\alpha }{k} \right)+\underset{k=1}{\overset{N}{\mathop \sum }}\,({{n}_{k}}-{{S}_{k}})\ln \left( 1-{{R}_{\infty }}+\frac{\alpha }{k} \right)</math> | ::<math>\Lambda =\underset{k=1}{\overset{N}{\mathop \sum }}\,{{S}_{k}}\ln \left( {{R}_{\infty }}-\frac{\alpha }{k} \right)+\underset{k=1}{\overset{N}{\mathop \sum }}\,({{n}_{k}}-{{S}_{k}})\ln \left( 1-{{R}_{\infty }}+\frac{\alpha }{k} \right)\,\!</math> | ||

Differentiating with respect to | Differentiating with respect to <math>{{R}_{\infty }}\,\!</math> and <math>\alpha ,\,\!</math> yields: | ||

:<math>\frac{\partial \Lambda }{\partial {{R}_{\infty }}}=\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{{{S}_{k}}}{{{R}_{\infty }}-\tfrac{\alpha }{k}}-\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{{{n}_{k}}-{{S}_{k}}}{1-{{R}_{\infty }}+\tfrac{\alpha }{k}}\,\!</math> | |||

:<math>\frac{\partial \Lambda }{\partial \alpha }=-\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{\tfrac{{{S}_{k}}}{k}}{{{R}_{\infty }}-\tfrac{\alpha }{k}}+\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{\tfrac{{{n}_{k}}-{{S}_{k}}}{k}}{1-{{R}_{\infty }}+\tfrac{\alpha }{k}}\,\!</math> | |||

: | Rearranging the equations and setting them equal to zero gives: | ||

:<math>\frac{\partial \Lambda }{\partial {{R}_{\infty }}}=\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{\tfrac{{{S}_{k}}}{{{n}_{k}}}-\left( {{R}_{\infty }}-\tfrac{\alpha }{k} \right)}{\tfrac{1}{{{n}_{k}}}\left( {{R}_{\infty }}-\tfrac{\alpha }{k} \right)\left( 1-{{R}_{\infty }}+\tfrac{\alpha }{k} \right)}=0\,\!</math> | |||

:<math>\frac{\partial \Lambda }{\partial \alpha }=-\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{\tfrac{1}{k}\tfrac{{{S}_{k}}}{{{n}_{k}}}-\left( {{R}_{\infty }}-\tfrac{\alpha }{k} \right)\tfrac{1}{k}}{\tfrac{1}{{{n}_{k}}}\left( {{R}_{\infty }}-\tfrac{\alpha }{k} \right)\left( 1-{{R}_{\infty }}+\tfrac{\alpha }{k} \right)}=0\,\!</math> | |||

The resulting equations can be solved simultaneously for <math>\widehat{\alpha }\,\!</math> and <math>{{\hat{R}}_{\infty }}\,\!</math>. It should be noted that a closed form solution does not exist for either of the parameters; thus, they must be estimated numerically. | |||

===Least Squares Estimators=== | ===Least Squares Estimators=== | ||

To obtain least squares estimators for <math>{{R}_{\infty }}\,\!</math> and <math>\alpha \,\!</math>, the sum of squares, <math>Q\,\!</math>, of the deviations of the observed success-ratio, <math>{{S}_{k}}/{{n}_{k}}\,\!</math>, is minimized from its expected value, <math>{{R}_{\infty }}-\tfrac{\alpha }{k}\,\!</math>, with respect to the parameters <math>{{R}_{\infty }}\,\!</math> and <math>\alpha .\,\!</math> Therefore, <math>Q\,\!</math> is expressed as: | |||

:<math>Q=\underset{k=1}{\overset{N}{\mathop \sum }}\,{{\left( \frac{{{S}_{k}}}{{{n}_{k}}}-{{R}_{\infty }}+\frac{\alpha }{k} \right)}^{2}}\,\!</math> | |||

Taking the derivatives with respect to <math>{{R}_{\infty }}\,\!</math> and <math>\alpha \,\!</math> and setting equal to zero yields: | |||

:<math>\begin{align} | |||

\frac{\partial Q}{\partial {{R}_{\infty }}} = -2\underset{k=1}{\overset{N}{\mathop \sum }}\,\left( \frac{{{S}_{k}}}{{{n}_{k}}}-{{R}_{\infty }}+\frac{\alpha }{k} \right)=0 \\ | |||

\frac{\partial Q}{\partial \alpha } = 2\underset{k=1}{\overset{N}{\mathop \sum }}\,\left( \frac{{{S}_{k}}}{{{n}_{k}}}-{{R}_{\infty }}+\frac{\alpha }{k} \right)\frac{1}{k}=0 | |||

\end{align}\,\!</math> | |||

Solving the equations simultaneously, the least squares estimates of <math>{{R}_{\infty }}\,\!</math> and <math>\alpha \,\!</math> are: | |||

:<math>{{\hat{R}}_{\infty }}=\frac{\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{{{k}^{2}}}\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{{{S}_{k}}}{{{n}_{k}}}-\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k}\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{{{S}_{k}}}{k{{n}_{k}}}}{N\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{{{k}^{2}}}-{{\left( \underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k} \right)}^{2}}}\,\!</math> | |||

or: | |||

:<math>\text{ }{{\hat{R}}_{\infty }}=\frac{\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{{{k}^{2}}}\underset{k=1}{\overset{N}{\mathop{\sum }}}\,{{R}_{k}}-\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k}\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{{{R}_{k}}}{k}}{N\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{{{k}^{2}}}-{{\left( \underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k} \right)}^{2}}}\,\!</math> | |||

and: | |||

:<math>\hat{\alpha }=\frac{\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k}\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{{{S}_{k}}}{{{n}_{k}}}-N\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{{{S}_{k}}}{k{{n}_{k}}}}{N\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{{{k}^{2}}}-{{\left( \underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k} \right)}^{2}}}\,\!</math> | |||

or: | |||

:<math>\hat{\alpha }=\frac{\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k}\underset{k=1}{\overset{N}{\mathop{\sum }}}\,{{R}_{k}}-N\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{{{R}_{k}}}{k}}{N\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{{{k}^{2}}}-{{\left( \underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k} \right)}^{2}}}\,\!</math> | |||

====Example - Least Squares====<!-- THIS SECTION HEADER IS LINKED FROM ANOTHER SECTION IN THIS PAGE. IF YOU RENAME THE SECTION, YOU MUST UPDATE THE LINK(S). --> | |||

{{:Lloyd-Lipow_Least_Squares_Example}} | |||

! | |||

==Confidence Bounds== | ==Confidence Bounds== | ||

This section presents the methods used in the RGA software to estimate the confidence bounds under the Lloyd-Lipow model. One of the properties of maximum likelihood estimators is that they are asymptotically normal. This indicates that they are normally distributed for large samples [[RGA_References|[6]], [[RGA_References|7]]]. Additionally, since the parameter <math>\alpha \,\!</math> must be positive, <math>\ln \alpha \,\!</math> is treated as being normally distributed as well. The parameter <math>{{R}_{\infty }}\,\!</math> represents the ultimate reliability that would be attained if <math>k\to \infty \,\!</math>. <math>{{R}_{k}}\,\!</math> is the actual reliability during the <math>{{k}^{th}}\,\!</math> stage of testing. Therefore, <math>{{R}_{\infty }}\,\!</math> and <math>{{R}_{k}}\,\!</math> will be between 0 and 1. Consequently, the endpoints of the confidence intervals of the parameters <math>{{R}_{\infty }}\,\!</math> and <math>{{R}_{k}}\,\!</math> also will be between 0 and 1. To obtain the confidence interval, it is common practice to use the logit transformation. | |||

The confidence bounds on the parameters | The confidence bounds on the parameters <math>\alpha \,\!</math> and <math>{{R}_{\infty }}\,\!</math> are given by: | ||

:<math>C{{B}_{\alpha }}=\hat{\alpha }{{e}^{\pm {{z}_{\alpha /2}}\sqrt{Var(\hat{\alpha })}/\hat{\alpha }}}\,\!</math> | |||

:<math>C{{B}_{{{R}_{\infty }}}}=\frac{{{{\hat{R}}}_{\infty }}}{{{{\hat{R}}}_{\infty }}+(1-{{{\hat{R}}}_{\infty }}){{e}^{\pm {{z}_{\alpha /2}}\sqrt{Var({{{\hat{R}}}_{\infty }})}/\left[ {{{\hat{R}}}_{\infty }}(1-{{{\hat{R}}}_{\infty }}) \right]}}}\,\!</math> | |||

where | where <math>{{z}_{\alpha /2}}\,\!</math> represents the percentage points of the <math>N(0,1)\,\!</math> distribution such that <math>P\{z\ge {{z}_{\alpha /2}}\}=\alpha /2\,\!</math>. | ||

The confidence bounds on reliability are given by: | The confidence bounds on reliability are given by: | ||

:<math>CB=\frac{{{{\hat{R}}}_{k}}}{{{{\hat{R}}}_{k}}+(1-{{{\hat{R}}}_{k}}){{e}^{\pm {{z}_{\alpha /2}}\sqrt{Var({{{\hat{R}}}_{k}})}/\left[ {{{\hat{R}}}_{k}}(1-{{{\hat{R}}}_{k}}) \right]}}}\,\!</math> | |||

where: | |||

:<math>Var({{\widehat{R}}_{k}})=Var({{\widehat{R}}_{\infty }})+\frac{1}{{{k}^{2}}}\cdot Var(\widehat{\alpha })-\frac{2}{k}\cdot Cov({{\widehat{R}}_{\infty }},\widehat{\alpha })\,\!</math> | |||

All the variances can be calculated using the Fisher Matrix: | All the variances can be calculated using the Fisher Matrix: | ||

:<math> | |||

\begin{bmatrix} | |||

-\tfrac{{{\partial }^{2}}\Lambda }{\partial R_{\infty }^{2}} & -\tfrac{{{\partial }^{2}}\Lambda }{\partial \alpha \partial {{R}_{\infty }}} \\ | -\tfrac{{{\partial }^{2}}\Lambda }{\partial R_{\infty }^{2}} & -\tfrac{{{\partial }^{2}}\Lambda }{\partial \alpha \partial {{R}_{\infty }}} \\ | ||

-\tfrac{{{\partial }^{2}}\Lambda }{\partial \alpha \partial {{R}_{\infty }}} & -\tfrac{{{\partial }^{2}}\Lambda }{\partial {{\alpha }^{2}}} \\ | -\tfrac{{{\partial }^{2}}\Lambda }{\partial \alpha \partial {{R}_{\infty }}} & -\tfrac{{{\partial }^{2}}\Lambda }{\partial {{\alpha }^{2}}} \\ | ||

\end{ | \end{bmatrix}^{-1}= \begin{bmatrix} | ||

Var({{\widehat{R}}_{\infty }}) & Cov({{\widehat{R}}_{\infty }},\widehat{\alpha }) \\ | Var({{\widehat{R}}_{\infty }}) & Cov({{\widehat{R}}_{\infty }},\widehat{\alpha }) \\ | ||

Cov({{\widehat{R}}_{\infty }},\widehat{\alpha }) & Var(\widehat{\alpha }) \\ | Cov({{\widehat{R}}_{\infty }},\widehat{\alpha }) & Var(\widehat{\alpha }) \\ | ||

\end{ | \end{bmatrix}\,\!</math> | ||

From | From the [[Lloyd-Lipow#Maximum_Likelihood_Estimators|ML estimators of the Lloyd-Lipow model]], taking the second partial derivatives yields: | ||

:<math>\frac{{{\partial }^{2}}\Lambda }{\partial R_{\infty }^{2}}=-\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{{{S}_{k}}}{{{\left( {{R}_{\infty }}-\tfrac{\alpha }{k} \right)}^{2}}}-\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{{{n}_{k}}-{{S}_{k}}}{{{\left( 1-{{R}_{\infty }}+\tfrac{\alpha }{k} \right)}^{2}}}\,\!</math> | |||

:<math>\frac{{{\partial }^{2}}\Lambda }{\partial {{\alpha }^{2}}}=-\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{\tfrac{{{S}_{k}}}{{{k}^{2}}}}{{{\left( {{R}_{\infty }}-\tfrac{\alpha }{k} \right)}^{2}}}-\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{\tfrac{{{n}_{k}}-{{S}_{k}}}{{{k}^{2}}}}{{{\left( 1-{{R}_{\infty }}+\tfrac{\alpha }{k} \right)}^{2}}}\,\!</math> | |||

and: | |||

:<math>\frac{{{\partial }^{2}}\Lambda }{\partial {{R}_{\infty }}\partial \alpha }=\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{\tfrac{{{S}_{k}}}{k}}{{{\left( {{R}_{\infty }}-\tfrac{\alpha }{k} \right)}^{2}}}-\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{\tfrac{{{n}_{k}}-{{S}_{k}}}{k}}{{{\left( 1-{{R}_{\infty }}+\tfrac{\alpha }{k} \right)}^{2}}}\,\!</math> | |||

The confidence bounds can be obtained by solving for the three equations shown above and the equation for <math>Var(\hat{R}_{k})\,\!</math>, and then substituting the values into the Fisher Matrix. | |||

As an example, you can calculate and plot the confidence bounds for the data set given above in the [[Lloyd-Lipow# | As an example, you can calculate and plot the confidence bounds for the data set given above in the [[Lloyd-Lipow#Example_-_Least_Squares|Least Squares example]] as: | ||

:<math>\begin{align} | |||

\frac{{{\partial }^{2}}\Lambda }{\partial R_{\infty }^{2}} | \frac{{{\partial }^{2}}\Lambda }{\partial R_{\infty }^{2}} = & -255.3835-937.2902=-1192.6737 \\ | ||

\frac{{{\partial }^{2}}\Lambda }{\partial {{\alpha }^{2}}} | \frac{{{\partial }^{2}}\Lambda }{\partial {{\alpha }^{2}}} = & -24.4575-43.3930=-67.8505 \\ | ||

\frac{{{\partial }^{2}}\Lambda }{\partial {{R}_{\infty }}\partial \alpha } | \frac{{{\partial }^{2}}\Lambda }{\partial {{R}_{\infty }}\partial \alpha } = & 48.6606-140.7518=-92.0912 | ||

\end{align}</math> | \end{align}\,\!</math> | ||

The variances can be calculated using the Fisher Matrix: | The variances can be calculated using the Fisher Matrix: | ||

:<math>\begin{align} | |||

{{\left[ \begin{matrix} | |||

1192.6737 & 92.0912 \\ | 1192.6737 & 92.0912 \\ | ||

92.0912 & 67.8505 \\ | 92.0912 & 67.8505 \\ | ||

| Line 235: | Line 133: | ||

Cov({{\widehat{R}}_{\infty }},\widehat{\alpha }) & Var(\widehat{\alpha }) \\ | Cov({{\widehat{R}}_{\infty }},\widehat{\alpha }) & Var(\widehat{\alpha }) \\ | ||

\end{matrix} \right] \\ | \end{matrix} \right] \\ | ||

= & \left[ \begin{matrix} | |||

0.00093661 & -0.00127123 \\ | 0.00093661 & -0.00127123 \\ | ||

-0.00127123 & 0.01646371 \\ | -0.00127123 & 0.01646371 \\ | ||

\end{matrix} \right] | \end{matrix} \right] | ||

\end{align | \end{align}\,\!</math> | ||

The variance of <math>{{R}_{k}}\,\!</math> is obtained such that: | |||

:<math>\begin{align} | |||

Var({{\widehat{R}}_{k}})& =Var({{\widehat{R}}_{\infty }})+\frac{1}{{{k}^{2}}}\cdot Var(\widehat{\alpha })-\frac{2}{k}\cdot Cov({{\widehat{R}}_{\infty }},\widehat{\alpha })\\ | |||

Var({{\widehat{R}}_{k}})& =0.00093661+\frac{1}{{{k}^{2}}}\cdot 0.01646371+\frac{2}{k}\cdot 0.00127123 | |||

\end{align}</math> | \end{align}\,\!</math> | ||

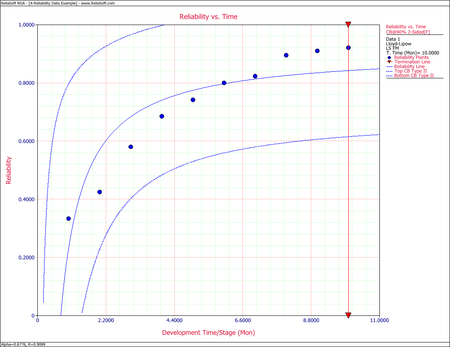

The confidence bounds on reliability can now be calculated. The associated confidence bounds at the 90% confidence level are plotted in the figure below with the predicted reliability, <math>{{R}_{k}}\,\!</math>. | |||

: | [[Image:rga6.2.png|center|450px|Predicted reliability with 90% confidence bounds.]] | ||

===Example - Lloyd-Lipow Confidence Bounds=== | |||

{{:Lloyd-Lipow_Confidence_Bounds_Example}} | |||

==More Examples== | ==More Examples== | ||

===Grouped per Configuration Example=== | ===Grouped per Configuration Example=== | ||

{{:Grouped_per_Configuration_-_Lloyd-Lipow_Model}} | |||

{ | |||

===Reliability Data Example=== | ===Reliability Data Example=== | ||

{{:Reliability_Data_-_Lloyd-Lipow_Model}} | |||

{ | |||

===Sequential Data Example=== | ===Sequential Data Example=== | ||

{{:Sequential_Data_-_Lloyd-Lipow_Model}} | |||

{ | |||

===Sequential Data with Failure Modes Example=== | ===Sequential Data with Failure Modes Example=== | ||

{{:Sequential_Data_with_Modes_-_Lloyd-Lipow_Model}} | |||

{ | |||

Latest revision as of 22:55, 3 June 2014

Lloyd and Lipow (1962) considered a situation in which a test program is conducted in [math]\displaystyle{ N\,\! }[/math] stages. Each stage consists of a certain number of trials of an item undergoing testing, and the data set is recorded as successes or failures. All tests in a given stage of testing involve similar items. The results of each stage of testing are used to improve the item for further testing in the next stage. For the [math]\displaystyle{ {{k}^{th}}\,\! }[/math] group of data, taken in chronological order, there are [math]\displaystyle{ {{n}_{k}}\,\! }[/math] tests with [math]\displaystyle{ {{S}_{k}}\,\! }[/math] observed successes. The reliability growth function is then given in Lloyd and Lipow [6]:

- [math]\displaystyle{ {{R}_{k}}={{R}_{\infty }}-\frac{\alpha }{k}\,\! }[/math]

where:

- [math]\displaystyle{ R_k =\,\! }[/math] the actual reliability during the [math]\displaystyle{ k^{th}\,\! }[/math] stage of testing

- [math]\displaystyle{ R_{\infty} =\,\! }[/math] the ultimate reliability attained if [math]\displaystyle{ k\to{\infty}\,\! }[/math]

- [math]\displaystyle{ \alpha =\,\! }[/math] a positive parameter ([math]\displaystyle{ \alpha \gt 0\,\! }[/math]) that modifies the rate of growth

Note that, in essence, the observed reliability at stage [math]\displaystyle{ k\,\! }[/math] is [math]\displaystyle{ {{R}_{k}}=\tfrac{{{S}_{k}}}{{{n}_{k}}}\,\! }[/math]. If the data set consists of reliability values rather than success/failure counts, [math]\displaystyle{ {{S}_{k}}\,\! }[/math] is taken to be the observed reliability and [math]\displaystyle{ {{n}_{k}}\,\! }[/math] is set to 1.
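The growth function above can be evaluated directly. The following is a minimal Python sketch, not the RGA software's implementation; the function names and the parameter values 0.90 and 0.30 are assumptions chosen only for illustration.

```python
def lloyd_lipow_reliability(k, r_inf, alpha):
    """Reliability at stage k under the Lloyd-Lipow model, R_k = R_inf - alpha / k."""
    if k < 1:
        raise ValueError("stage number k must be 1 or greater")
    return r_inf - alpha / k


def observed_reliability(successes, trials=1):
    """Observed success ratio S_k / n_k; for reliability data leave trials at 1."""
    return successes / trials


# Hypothetical parameter values, chosen only for illustration.
r_inf, alpha = 0.90, 0.30
curve = [lloyd_lipow_reliability(k, r_inf, alpha) for k in (1, 2, 5, 10, 100)]
```

With a positive `alpha`, the values in `curve` increase with the stage number and approach `r_inf` from below, which is the growth behavior the model describes.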

Parameter Estimation

When analyzing reliability data in the RGA software, you have the option to enter the reliability values in percent or in decimal format. However, [math]\displaystyle{ {{\hat{R}}_{\infty }}\,\! }[/math] will always be returned in decimal format and not in percent. The estimated parameters in the RGA software are unitless.

Maximum Likelihood Estimators

For the [math]\displaystyle{ {{k}^{th}}\,\! }[/math] stage:

- [math]\displaystyle{ {{L}_{k}}=const.\text{ }R_{k}^{{{S}_{k}}}{{(1-{{R}_{k}})}^{{{n}_{k}}-{{S}_{k}}}}\,\! }[/math]

And assuming that the results are independent between stages:

- [math]\displaystyle{ L=\underset{k=1}{\overset{N}{\mathop \prod }}\,R_{k}^{{{S}_{k}}}{{(1-{{R}_{k}})}^{{{n}_{k}}-{{S}_{k}}}}\,\! }[/math]

Then taking the natural log gives:

- [math]\displaystyle{ \Lambda =\underset{k=1}{\overset{N}{\mathop \sum }}\,{{S}_{k}}\ln \left( {{R}_{\infty }}-\frac{\alpha }{k} \right)+\underset{k=1}{\overset{N}{\mathop \sum }}\,({{n}_{k}}-{{S}_{k}})\ln \left( 1-{{R}_{\infty }}+\frac{\alpha }{k} \right)\,\! }[/math]

Differentiating with respect to [math]\displaystyle{ {{R}_{\infty }}\,\! }[/math] and [math]\displaystyle{ \alpha ,\,\! }[/math] yields:

- [math]\displaystyle{ \frac{\partial \Lambda }{\partial {{R}_{\infty }}}=\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{{{S}_{k}}}{{{R}_{\infty }}-\tfrac{\alpha }{k}}-\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{{{n}_{k}}-{{S}_{k}}}{1-{{R}_{\infty }}+\tfrac{\alpha }{k}}\,\! }[/math]

- [math]\displaystyle{ \frac{\partial \Lambda }{\partial \alpha }=-\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{\tfrac{{{S}_{k}}}{k}}{{{R}_{\infty }}-\tfrac{\alpha }{k}}+\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{\tfrac{{{n}_{k}}-{{S}_{k}}}{k}}{1-{{R}_{\infty }}+\tfrac{\alpha }{k}}\,\! }[/math]

Rearranging the equations and setting them equal to zero gives:

- [math]\displaystyle{ \frac{\partial \Lambda }{\partial {{R}_{\infty }}}=\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{\tfrac{{{S}_{k}}}{{{n}_{k}}}-\left( {{R}_{\infty }}-\tfrac{\alpha }{k} \right)}{\tfrac{1}{{{n}_{k}}}\left( {{R}_{\infty }}-\tfrac{\alpha }{k} \right)\left( 1-{{R}_{\infty }}+\tfrac{\alpha }{k} \right)}=0\,\! }[/math]

- [math]\displaystyle{ \frac{\partial \Lambda }{\partial \alpha }=-\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{\tfrac{1}{k}\tfrac{{{S}_{k}}}{{{n}_{k}}}-\left( {{R}_{\infty }}-\tfrac{\alpha }{k} \right)\tfrac{1}{k}}{\tfrac{1}{{{n}_{k}}}\left( {{R}_{\infty }}-\tfrac{\alpha }{k} \right)\left( 1-{{R}_{\infty }}+\tfrac{\alpha }{k} \right)}=0\,\! }[/math]

The resulting equations can be solved simultaneously for [math]\displaystyle{ \widehat{\alpha }\,\! }[/math] and [math]\displaystyle{ {{\hat{R}}_{\infty }}\,\! }[/math]. Neither parameter has a closed-form solution, so both must be estimated numerically.
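One way to carry out the numerical solution is a damped Newton iteration on the log-likelihood. The sketch below is an illustration under stated assumptions, not the algorithm used by the RGA software: the function names, starting values, tolerances and step-halving rule are all choices made here. The second derivatives are obtained by applying the chain rule through [math]\displaystyle{ {{R}_{k}}={{R}_{\infty }}-\tfrac{\alpha }{k}\,\! }[/math], and the data are the counts from the 20-stage Least Squares example on this page.

```python
import math


def log_likelihood(n, s, r_inf, alpha):
    """Lloyd-Lipow log-likelihood (constant term dropped)."""
    total = 0.0
    for k, (nk, sk) in enumerate(zip(n, s), start=1):
        r = r_inf - alpha / k
        total += sk * math.log(r) + (nk - sk) * math.log(1.0 - r)
    return total


def gradient(n, s, r_inf, alpha):
    """Partial derivatives of the log-likelihood w.r.t. R_inf and alpha."""
    g_r = g_a = 0.0
    for k, (nk, sk) in enumerate(zip(n, s), start=1):
        r = r_inf - alpha / k
        score = sk / r - (nk - sk) / (1.0 - r)  # derivative w.r.t. R_k
        g_r += score       # dR_k/dR_inf = 1
        g_a -= score / k   # dR_k/dalpha = -1/k
    return g_r, g_a


def is_feasible(n, r_inf, alpha):
    """Every stage reliability must lie strictly between 0 and 1."""
    return all(0.0 < r_inf - alpha / k < 1.0 for k in range(1, len(n) + 1))


def fit_mle(n, s, r_inf, alpha, tol=1e-8, max_iter=100):
    """Damped Newton iteration on (R_inf, alpha) from a feasible starting point."""
    for _ in range(max_iter):
        g_r, g_a = gradient(n, s, r_inf, alpha)
        if max(abs(g_r), abs(g_a)) < tol:
            break
        # Second derivatives via the chain rule through R_k.
        h_rr = h_aa = h_ra = 0.0
        for k, (nk, sk) in enumerate(zip(n, s), start=1):
            r = r_inf - alpha / k
            w = sk / r**2 + (nk - sk) / (1.0 - r) ** 2
            h_rr -= w
            h_aa -= w / k**2
            h_ra += w / k
        det = h_rr * h_aa - h_ra**2
        # Newton step d = -H^{-1} g for the 2x2 Hessian H.
        d_r = -(h_aa * g_r - h_ra * g_a) / det
        d_a = -(h_rr * g_a - h_ra * g_r) / det
        # Halve the step until it stays feasible and does not lower the likelihood.
        step, current = 1.0, log_likelihood(n, s, r_inf, alpha)
        while step > 1e-12:
            new_r, new_a = r_inf + step * d_r, alpha + step * d_a
            accepted = is_feasible(n, new_r, new_a) and (
                log_likelihood(n, s, new_r, new_a) >= current - 1e-12
            )
            if accepted:
                r_inf, alpha = new_r, new_a
                break
            step /= 2.0
        else:
            break  # no acceptable step found
    return r_inf, alpha


# Counts from the 20-stage Least Squares example on this page.
n = [9, 9, 8, 10, 9, 10, 10, 10, 11, 11, 9, 12, 12, 11, 10, 10, 11, 10, 9, 8]
s = [6, 5, 7, 6, 7, 8, 7, 6, 7, 9, 9, 10, 9, 8, 7, 8, 10, 9, 8, 7]
r_inf_hat, alpha_hat = fit_mle(n, s, r_inf=0.81, alpha=0.22)
```

Starting the iteration from least squares estimates, as done here, is a convenient choice because they are available in closed form and are already feasible for this data set.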

Least Squares Estimators

To obtain least squares estimators for [math]\displaystyle{ {{R}_{\infty }}\,\! }[/math] and [math]\displaystyle{ \alpha \,\! }[/math], the sum of squares, [math]\displaystyle{ Q\,\! }[/math], of the deviations of the observed success ratio, [math]\displaystyle{ {{S}_{k}}/{{n}_{k}}\,\! }[/math], from its expected value, [math]\displaystyle{ {{R}_{\infty }}-\tfrac{\alpha }{k}\,\! }[/math], is minimized with respect to the parameters [math]\displaystyle{ {{R}_{\infty }}\,\! }[/math] and [math]\displaystyle{ \alpha .\,\! }[/math] Therefore, [math]\displaystyle{ Q\,\! }[/math] is expressed as:

- [math]\displaystyle{ Q=\underset{k=1}{\overset{N}{\mathop \sum }}\,{{\left( \frac{{{S}_{k}}}{{{n}_{k}}}-{{R}_{\infty }}+\frac{\alpha }{k} \right)}^{2}}\,\! }[/math]

Taking the derivatives with respect to [math]\displaystyle{ {{R}_{\infty }}\,\! }[/math] and [math]\displaystyle{ \alpha \,\! }[/math] and setting equal to zero yields:

- [math]\displaystyle{ \begin{align} \frac{\partial Q}{\partial {{R}_{\infty }}} = -2\underset{k=1}{\overset{N}{\mathop \sum }}\,\left( \frac{{{S}_{k}}}{{{n}_{k}}}-{{R}_{\infty }}+\frac{\alpha }{k} \right)=0 \\ \frac{\partial Q}{\partial \alpha } = 2\underset{k=1}{\overset{N}{\mathop \sum }}\,\left( \frac{{{S}_{k}}}{{{n}_{k}}}-{{R}_{\infty }}+\frac{\alpha }{k} \right)\frac{1}{k}=0 \end{align}\,\! }[/math]

Solving the equations simultaneously, the least squares estimates of [math]\displaystyle{ {{R}_{\infty }}\,\! }[/math] and [math]\displaystyle{ \alpha \,\! }[/math] are:

- [math]\displaystyle{ {{\hat{R}}_{\infty }}=\frac{\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{{{k}^{2}}}\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{{{S}_{k}}}{{{n}_{k}}}-\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k}\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{{{S}_{k}}}{k{{n}_{k}}}}{N\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{{{k}^{2}}}-{{\left( \underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k} \right)}^{2}}}\,\! }[/math]

or:

- [math]\displaystyle{ \text{ }{{\hat{R}}_{\infty }}=\frac{\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{{{k}^{2}}}\underset{k=1}{\overset{N}{\mathop{\sum }}}\,{{R}_{k}}-\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k}\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{{{R}_{k}}}{k}}{N\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{{{k}^{2}}}-{{\left( \underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k} \right)}^{2}}}\,\! }[/math]

and:

- [math]\displaystyle{ \hat{\alpha }=\frac{\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k}\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{{{S}_{k}}}{{{n}_{k}}}-N\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{{{S}_{k}}}{k{{n}_{k}}}}{N\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{{{k}^{2}}}-{{\left( \underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k} \right)}^{2}}}\,\! }[/math]

or:

- [math]\displaystyle{ \hat{\alpha }=\frac{\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k}\underset{k=1}{\overset{N}{\mathop{\sum }}}\,{{R}_{k}}-N\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{{{R}_{k}}}{k}}{N\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{{{k}^{2}}}-{{\left( \underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k} \right)}^{2}}}\,\! }[/math]
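The closed-form least squares estimates can be computed in a few lines. This is a minimal Python sketch of the formulas above; the function name and the minimum-stage check are assumptions made here, and the counts are those of the 20-stage example on this page.

```python
def lloyd_lipow_least_squares(n, s):
    """Closed-form least squares estimates (R_inf, alpha) from per-stage counts."""
    if len(n) != len(s) or len(n) < 2:
        raise ValueError("need matching lists covering at least two stages")
    stages = range(1, len(n) + 1)
    ratios = [sk / nk for sk, nk in zip(s, n)]   # observed S_k / n_k
    sum_inv_k = sum(1.0 / k for k in stages)
    sum_inv_k2 = sum(1.0 / k**2 for k in stages)
    sum_r = sum(ratios)
    sum_r_over_k = sum(r / k for r, k in zip(ratios, stages))
    denom = len(n) * sum_inv_k2 - sum_inv_k**2
    r_inf = (sum_inv_k2 * sum_r - sum_inv_k * sum_r_over_k) / denom
    alpha = (sum_inv_k * sum_r - len(n) * sum_r_over_k) / denom
    return r_inf, alpha


# Counts from the 20-stage example on this page.
n = [9, 9, 8, 10, 9, 10, 10, 10, 11, 11, 9, 12, 12, 11, 10, 10, 11, 10, 9, 8]
s = [6, 5, 7, 6, 7, 8, 7, 6, 7, 9, 9, 10, 9, 8, 7, 8, 10, 9, 8, 7]
r_inf_ls, alpha_ls = lloyd_lipow_least_squares(n, s)
```

For reliability data, pass the observed reliabilities as `s` and a list of ones as `n`, which matches the convention that [math]\displaystyle{ {{n}_{k}}\,\! }[/math] is set to 1.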

Example - Least Squares

After a 20-stage reliability development test program, 20 groups of success/failure data were obtained and are given in the table below. Do the following:

- Fit the Lloyd-Lipow model to the data using least squares.

- Plot the reliabilities predicted by the Lloyd-Lipow model along with the observed reliabilities, and compare the results.

| Test Stage Number ([math]\displaystyle{ k\,\! }[/math]) | Number of Tests in Stage ([math]\displaystyle{ n_k\,\! }[/math]) | Number of Successful Tests ([math]\displaystyle{ S_k\,\! }[/math]) | Raw Data Reliability | Lloyd-Lipow Reliability |
|---|---|---|---|---|
| 1 | 9 | 6 | 0.667 | 0.7002 |
| 2 | 9 | 5 | 0.556 | 0.7369 |
| 3 | 8 | 7 | 0.875 | 0.7552 |
| 4 | 10 | 6 | 0.600 | 0.7662 |
| 5 | 9 | 7 | 0.778 | 0.7736 |
| 6 | 10 | 8 | 0.800 | 0.7788 |
| 7 | 10 | 7 | 0.700 | 0.7827 |
| 8 | 10 | 6 | 0.600 | 0.7858 |
| 9 | 11 | 7 | 0.636 | 0.7882 |
| 10 | 11 | 9 | 0.818 | 0.7902 |
| 11 | 9 | 9 | 1.000 | 0.7919 |
| 12 | 12 | 10 | 0.833 | 0.7933 |
| 13 | 12 | 9 | 0.750 | 0.7945 |
| 14 | 11 | 8 | 0.727 | 0.7956 |
| 15 | 10 | 7 | 0.700 | 0.7965 |
| 16 | 10 | 8 | 0.800 | 0.7973 |
| 17 | 11 | 10 | 0.909 | 0.7980 |
| 18 | 10 | 9 | 0.900 | 0.7987 |
| 19 | 9 | 8 | 0.889 | 0.7992 |
| 20 | 8 | 7 | 0.875 | 0.7998 |

Solution

- The least squares estimates are:

- [math]\displaystyle{ \begin{align} \underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{1}{k}= & \underset{k=1}{\overset{20}{\mathop \sum }}\,\frac{1}{k}=3.5977 \\ \underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{1}{{{k}^{2}}}= & \underset{k=1}{\overset{20}{\mathop \sum }}\,\frac{1}{{{k}^{2}}}=1.5962 \\ \underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{{{S}_{k}}}{{{n}_{k}}}= & \underset{k=1}{\overset{20}{\mathop \sum }}\,\frac{{{S}_{k}}}{{{n}_{k}}}=15.4131 \end{align}\,\! }[/math]

- [math]\displaystyle{ \underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{{{S}_{k}}}{k\cdot {{n}_{k}}}=\underset{k=1}{\overset{20}{\mathop \sum }}\,\frac{{{S}_{k}}}{k\cdot {{n}_{k}}}=2.5632\,\! }[/math]

- [math]\displaystyle{ \begin{align} \text{ }{{\hat{R}}_{\infty }}= &\frac{\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{{{k}^{2}}}\underset{k=1}{\overset{N}{\mathop{\sum }}}\,{{R}_{k}}-\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k}\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{{{R}_{k}}}{k}}{N\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{{{k}^{2}}}-{{\left( \underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k} \right)}^{2}}} \\ = & \frac{(1.5962)(15.4131)-(3.5977)(2.5632)}{(20)(1.5962)-{{(3.5977)}^{2}}} \\ = & 0.8104 \end{align}\,\! }[/math]

- [math]\displaystyle{ \begin{align} \hat{\alpha }= &\frac{\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k}\underset{k=1}{\overset{N}{\mathop{\sum }}}\,{{R}_{k}}-N\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{{{R}_{k}}}{k}}{N\underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{{{k}^{2}}}-{{\left( \underset{k=1}{\overset{N}{\mathop{\sum }}}\,\tfrac{1}{k} \right)}^{2}}}\\ = & \frac{(3.5977)(15.4131)-(20)(2.5632)}{(20)(1.5962)-{{(3.5977)}^{2}}} \\ = & 0.2207 \end{align}\,\! }[/math]

- [math]\displaystyle{ {{R}_{k}}=0.8104-\frac{0.2207}{k}\,\! }[/math]

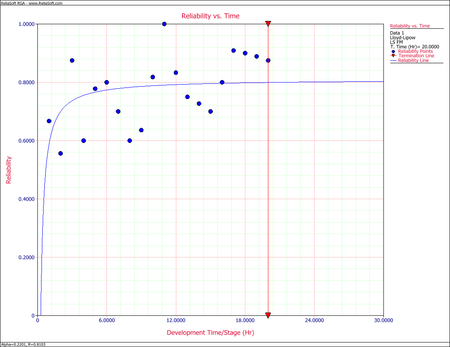

- The reliabilities from the raw data and the reliabilities predicted by the Lloyd-Lipow reliability growth model are given in the last two columns of the table, and the figure above plots both. Given the scatter in the data, the model can do little more than fit a line through the middle of the points.

Confidence Bounds

This section presents the methods used in the RGA software to estimate the confidence bounds under the Lloyd-Lipow model. Maximum likelihood estimators are asymptotically normal, meaning that they are approximately normally distributed for large samples [6, 7]. Additionally, since the parameter [math]\displaystyle{ \alpha \,\! }[/math] must be positive, [math]\displaystyle{ \ln \alpha \,\! }[/math] is treated as being normally distributed as well. The parameter [math]\displaystyle{ {{R}_{\infty }}\,\! }[/math] represents the ultimate reliability that would be attained if [math]\displaystyle{ k\to \infty \,\! }[/math], and [math]\displaystyle{ {{R}_{k}}\,\! }[/math] is the actual reliability during the [math]\displaystyle{ {{k}^{th}}\,\! }[/math] stage of testing. Both [math]\displaystyle{ {{R}_{\infty }}\,\! }[/math] and [math]\displaystyle{ {{R}_{k}}\,\! }[/math] therefore lie between 0 and 1, and so must the endpoints of their confidence intervals. To keep the bounds within this range, it is common practice to use the logit transformation.

The confidence bounds on the parameters [math]\displaystyle{ \alpha \,\! }[/math] and [math]\displaystyle{ {{R}_{\infty }}\,\! }[/math] are given by:

- [math]\displaystyle{ C{{B}_{\alpha }}=\hat{\alpha }{{e}^{\pm {{z}_{\alpha /2}}\sqrt{Var(\hat{\alpha })}/\hat{\alpha }}}\,\! }[/math]

- [math]\displaystyle{ C{{B}_{{{R}_{\infty }}}}=\frac{{{{\hat{R}}}_{\infty }}}{{{{\hat{R}}}_{\infty }}+(1-{{{\hat{R}}}_{\infty }}){{e}^{\pm {{z}_{\alpha /2}}\sqrt{Var({{{\hat{R}}}_{\infty }})}/\left[ {{{\hat{R}}}_{\infty }}(1-{{{\hat{R}}}_{\infty }}) \right]}}}\,\! }[/math]

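where [math]\displaystyle{ {{z}_{\alpha /2}}\,\! }[/math] represents the percentage points of the [math]\displaystyle{ N(0,1)\,\! }[/math] distribution such that [math]\displaystyle{ P\{z\ge {{z}_{\alpha /2}}\}=\alpha /2\,\! }[/math]. In the subscript of [math]\displaystyle{ {{z}_{\alpha /2}}\,\! }[/math], [math]\displaystyle{ \alpha \,\! }[/math] denotes the significance level (one minus the confidence level), not the growth parameter of the model.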

The confidence bounds on reliability are given by:

- [math]\displaystyle{ CB=\frac{{{{\hat{R}}}_{k}}}{{{{\hat{R}}}_{k}}+(1-{{{\hat{R}}}_{k}}){{e}^{\pm {{z}_{\alpha /2}}\sqrt{Var({{{\hat{R}}}_{k}})}/\left[ {{{\hat{R}}}_{k}}(1-{{{\hat{R}}}_{k}}) \right]}}}\,\! }[/math]

where:

- [math]\displaystyle{ Var({{\widehat{R}}_{k}})=Var({{\widehat{R}}_{\infty }})+\frac{1}{{{k}^{2}}}\cdot Var(\widehat{\alpha })-\frac{2}{k}\cdot Cov({{\widehat{R}}_{\infty }},\widehat{\alpha })\,\! }[/math]
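The bound formulas above translate directly into code. The sketch below is a minimal Python illustration, not the RGA software's implementation; the function names are assumptions, and the numerical inputs in the usage lines are hypothetical values rather than results from this page. The standard normal percentage point comes from `statistics.NormalDist` in the Python standard library.

```python
import math
from statistics import NormalDist


def z_value(confidence_level):
    """Two-sided standard normal percentage point, about 1.645 for 0.90."""
    return NormalDist().inv_cdf(1.0 - (1.0 - confidence_level) / 2.0)


def alpha_bounds(alpha_hat, var_alpha, confidence_level=0.90):
    """Bounds on alpha, treating ln(alpha) as normally distributed."""
    z = z_value(confidence_level)
    factor = math.exp(z * math.sqrt(var_alpha) / alpha_hat)
    return alpha_hat / factor, alpha_hat * factor


def logit_bounds(r_hat, var_r, confidence_level=0.90):
    """Bounds on a reliability (R_inf or R_k) via the logit transformation."""
    z = z_value(confidence_level)
    w = z * math.sqrt(var_r) / (r_hat * (1.0 - r_hat))
    lower = r_hat / (r_hat + (1.0 - r_hat) * math.exp(w))
    upper = r_hat / (r_hat + (1.0 - r_hat) * math.exp(-w))
    return lower, upper


def var_r_k(k, var_r_inf, var_alpha, cov):
    """Variance of the stage-k reliability estimate."""
    return var_r_inf + var_alpha / k**2 - 2.0 * cov / k


# Hypothetical inputs, chosen only to exercise the functions.
variance_at_5 = var_r_k(5, var_r_inf=0.001, var_alpha=0.02, cov=0.002)
low, high = logit_bounds(0.80, variance_at_5)
a_low, a_high = alpha_bounds(0.25, 0.02)
```

Because the bounds are built on the logit scale, `low` and `high` always stay inside the interval from 0 to 1, and the bounds on [math]\displaystyle{ \alpha \,\! }[/math] always stay positive.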

All the variances can be calculated using the Fisher Matrix:

- [math]\displaystyle{ \begin{bmatrix} -\tfrac{{{\partial }^{2}}\Lambda }{\partial R_{\infty }^{2}} & -\tfrac{{{\partial }^{2}}\Lambda }{\partial \alpha \partial {{R}_{\infty }}} \\ -\tfrac{{{\partial }^{2}}\Lambda }{\partial \alpha \partial {{R}_{\infty }}} & -\tfrac{{{\partial }^{2}}\Lambda }{\partial {{\alpha }^{2}}} \\ \end{bmatrix}^{-1}= \begin{bmatrix} Var({{\widehat{R}}_{\infty }}) & Cov({{\widehat{R}}_{\infty }},\widehat{\alpha }) \\ Cov({{\widehat{R}}_{\infty }},\widehat{\alpha }) & Var(\widehat{\alpha }) \\ \end{bmatrix}\,\! }[/math]
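Since the Fisher Matrix here is only 2 by 2, its inverse can be written out explicitly. The following Python sketch assumes the three second partial derivatives have already been evaluated at the parameter estimates; the function name and the sample inputs are hypothetical and serve only to illustrate the arithmetic.

```python
def covariance_from_second_partials(d2_rr, d2_aa, d2_ra):
    """Invert the 2x2 Fisher Matrix built from the negated second partials.

    d2_rr, d2_aa and d2_ra are the second partial derivatives of the
    log-likelihood with respect to (R_inf, R_inf), (alpha, alpha) and
    (R_inf, alpha). Returns (Var(R_inf), Var(alpha), Cov(R_inf, alpha)).
    """
    f_rr, f_aa, f_ra = -d2_rr, -d2_aa, -d2_ra
    det = f_rr * f_aa - f_ra**2
    if det <= 0.0:
        raise ValueError("Fisher Matrix is not positive definite")
    var_r_inf = f_aa / det
    var_alpha = f_rr / det
    cov = -f_ra / det
    return var_r_inf, var_alpha, cov


# Hypothetical second partials: the Fisher Matrix is [[4, -1], [-1, 2]].
var_r_inf, var_alpha, cov = covariance_from_second_partials(-4.0, -2.0, 1.0)
```

The determinant check guards against inputs that do not correspond to a maximum of the log-likelihood, in which case the variances would not be meaningful.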

From the ML estimators of the Lloyd-Lipow model, taking the second partial derivatives yields:

- [math]\displaystyle{ \frac{{{\partial }^{2}}\Lambda }{\partial R_{\infty }^{2}}=-\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{{{S}_{k}}}{{{\left( {{R}_{\infty }}-\tfrac{\alpha }{k} \right)}^{2}}}-\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{{{n}_{k}}-{{S}_{k}}}{{{\left( 1-{{R}_{\infty }}+\tfrac{\alpha }{k} \right)}^{2}}}\,\! }[/math]

- [math]\displaystyle{ \frac{{{\partial }^{2}}\Lambda }{\partial {{\alpha }^{2}}}=-\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{\tfrac{{{S}_{k}}}{{{k}^{2}}}}{{{\left( {{R}_{\infty }}-\tfrac{\alpha }{k} \right)}^{2}}}-\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{\tfrac{{{n}_{k}}-{{S}_{k}}}{{{k}^{2}}}}{{{\left( 1-{{R}_{\infty }}+\tfrac{\alpha }{k} \right)}^{2}}}\,\! }[/math]

and:

- [math]\displaystyle{ \frac{{{\partial }^{2}}\Lambda }{\partial {{R}_{\infty }}\partial \alpha }=\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{\tfrac{{{S}_{k}}}{k}}{{{\left( {{R}_{\infty }}-\tfrac{\alpha }{k} \right)}^{2}}}-\underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{\tfrac{{{n}_{k}}-{{S}_{k}}}{k}}{{{\left( 1-{{R}_{\infty }}+\tfrac{\alpha }{k} \right)}^{2}}}\,\! }[/math]

The confidence bounds can be obtained by evaluating the three second partial derivatives shown above at the estimated parameters, substituting the values into the Fisher Matrix, inverting it to get the variances and the covariance, and then using the equation for [math]\displaystyle{ Var(\hat{R}_{k})\,\! }[/math].
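
The inversion step can be sketched in a few lines of Python. This is a minimal illustration, not the RGA implementation: the function names `invert_fisher` and `var_rk` are hypothetical, and the three second partial derivatives are assumed to have already been evaluated at the estimated parameters. The sample inputs are the values quoted in the example that follows.

```python
def invert_fisher(d2_rr, d2_aa, d2_ra):
    """Return (Var(R_inf), Var(alpha), Cov(R_inf, alpha)).

    Inputs are the second partial derivatives of the log-likelihood at the
    estimates; the Fisher matrix holds their negatives.
    """
    f11, f22, f12 = -d2_rr, -d2_aa, -d2_ra
    det = f11 * f22 - f12 * f12
    if f11 <= 0 or det <= 0:
        raise ValueError("Fisher matrix is not positive definite")
    # Closed-form inverse of a symmetric 2x2 matrix.
    return f22 / det, f11 / det, -f12 / det


def var_rk(k, var_r, var_a, cov_ra):
    """Variance of the estimated reliability at stage k."""
    return var_r + var_a / k**2 - 2.0 * cov_ra / k


# Second partial derivatives taken from the worked example in the text.
var_r, var_a, cov_ra = invert_fisher(-1192.6737, -67.8505, -92.0912)
print(var_r, var_a, cov_ra)
print(var_rk(5, var_r, var_a, cov_ra))
```

Because the matrix is only 2x2, the closed-form inverse avoids any dependency on a linear algebra library.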

As an example, you can calculate and plot the confidence bounds for the data set given above in the Least Squares example as:

- [math]\displaystyle{ \begin{align} \frac{{{\partial }^{2}}\Lambda }{\partial R_{\infty }^{2}} = & -255.3835-937.2902=-1192.6737 \\ \frac{{{\partial }^{2}}\Lambda }{\partial {{\alpha }^{2}}} = & -24.4575-43.3930=-67.8505 \\ \frac{{{\partial }^{2}}\Lambda }{\partial {{R}_{\infty }}\partial \alpha } = & 48.6606-140.7518=-92.0912 \end{align}\,\! }[/math]

The variances can be calculated using the Fisher Matrix:

- [math]\displaystyle{ \begin{align} {{\left[ \begin{matrix} 1192.6737 & 92.0912 \\ 92.0912 & 67.8505 \\ \end{matrix} \right]}^{-1}}= & \left[ \begin{matrix} Var({{\widehat{R}}_{\infty }}) & Cov({{\widehat{R}}_{\infty }},\widehat{\alpha }) \\ Cov({{\widehat{R}}_{\infty }},\widehat{\alpha }) & Var(\widehat{\alpha }) \\ \end{matrix} \right] \\ = & \left[ \begin{matrix} 0.00093661 & -0.00127123 \\ -0.00127123 & 0.01646371 \\ \end{matrix} \right] \end{align}\,\! }[/math]

The variance of [math]\displaystyle{ {{R}_{k}}\,\! }[/math] is obtained such that:

- [math]\displaystyle{ \begin{align} Var({{\widehat{R}}_{k}})& =Var({{\widehat{R}}_{\infty }})+\frac{1}{{{k}^{2}}}\cdot Var(\widehat{\alpha })-\frac{2}{k}\cdot Cov({{\widehat{R}}_{\infty }},\widehat{\alpha })\\ Var({{\widehat{R}}_{k}})& =0.00093661+\frac{1}{{{k}^{2}}}\cdot 0.01646371+\frac{2}{k}\cdot 0.00127123 \end{align}\,\! }[/math]

The confidence bounds on reliability can now be calculated. The associated confidence bounds at the 90% confidence level are plotted in the figure below with the predicted reliability, [math]\displaystyle{ {{R}_{k}}\,\! }[/math].

Example - Lloyd-Lipow Confidence Bounds

Consider the success/failure data given in the following table. Solve for the Lloyd-Lipow parameters using least squares analysis, and plot the Lloyd-Lipow reliability with 2-sided confidence bounds at the 90% confidence level.

| Test Stage Number ([math]\displaystyle{ k\,\! }[/math]) | Result | Number of Tests ([math]\displaystyle{ n_k\,\! }[/math]) | Successful Tests ([math]\displaystyle{ S_k=R_i\,\! }[/math]) |

|---|---|---|---|

| 1 | F | 1 | 0 |

| 2 | F | 1 | 0 |

| 3 | F | 1 | 0 |

| 4 | S | 1 | 0.2500 |

| 5 | F | 1 | 0.2000 |

| 6 | F | 1 | 0.1667 |

| 7 | S | 1 | 0.2857 |

| 8 | S | 1 | 0.3750 |

| 9 | S | 1 | 0.4444 |

| 10 | S | 1 | 0.5000 |

| 11 | S | 1 | 0.5455 |

| 12 | S | 1 | 0.5833 |

| 13 | S | 1 | 0.6154 |

| 14 | S | 1 | 0.6429 |

| 15 | S | 1 | 0.6667 |

| 16 | S | 1 | 0.6875 |

| 17 | F | 1 | 0.6471 |

| 18 | S | 1 | 0.6667 |

| 19 | F | 1 | 0.6316 |

| 20 | S | 1 | 0.6500 |

| 21 | S | 1 | 0.6667 |

| 22 | S | 1 | 0.6818 |

Solution

Note that the data set contains three consecutive failures at the beginning of the test. These failures are ignored in the analysis because the test is considered to start when the observed reliability is no longer equal to zero or one. The number of data points is therefore reduced to 19. The first three failures are used only to calculate the observed reliability. For example, given this data set, the observed reliability at stage 4 is [math]\displaystyle{ 1/4=0.25\,\! }[/math]. This is considered to be the reliability at stage 1.
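
The conversion from success/failure results to observed reliabilities can be sketched as follows. This is one reading of the rule described above, not the RGA code: the function name `observed_reliability` is hypothetical, and leading trials are skipped while the cumulative reliability is still exactly 0 or 1.

```python
def observed_reliability(results):
    """Cumulative observed reliability per trial.

    Leading trials are skipped while the cumulative ratio is exactly 0 or 1,
    but they still count toward the cumulative totals.
    """
    observed = []
    successes = 0
    for trial, outcome in enumerate(results, start=1):
        if outcome == "S":
            successes += 1
        ratio = successes / trial
        if not observed and ratio in (0.0, 1.0):
            continue  # the test is not considered to have started yet
        observed.append(ratio)
    return observed


# Results for stages 1 to 22 from the table above.
results = "FFFSFF" + "S" * 10 + "FSFSSS"
observed = observed_reliability(results)
print(len(observed), [round(r, 4) for r in observed])
```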

From the table, the least squares estimates can be calculated as follows:

- [math]\displaystyle{ \begin{align} \underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{1}{k}= & \underset{k=1}{\overset{19}{\mathop \sum }}\,\frac{1}{k}=3.54774 \\ \underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{1}{{{k}^{2}}}= & \underset{k=1}{\overset{19}{\mathop \sum }}\,\frac{1}{{{k}^{2}}}=1.5936 \\ \underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{{{S}_{k}}}{{{n}_{k}}}= & \underset{k=1}{\overset{19}{\mathop \sum }}\,\frac{{{S}_{k}}}{{{n}_{k}}}=9.907 \end{align}\,\! }[/math]

and:

- [math]\displaystyle{ \underset{k=1}{\overset{N}{\mathop \sum }}\,\frac{{{S}_{k}}}{k\cdot {{n}_{k}}}=\underset{k=1}{\overset{19}{\mathop \sum }}\,\frac{{{S}_{k}}}{k\cdot {{n}_{k}}}=1.3002\,\! }[/math]

Substituting these sums into the least squares estimators for [math]\displaystyle{ \hat{R}_{\infty}\,\! }[/math] and [math]\displaystyle{ \hat{\alpha}\,\! }[/math] yields:

- [math]\displaystyle{ \begin{align} {{{\hat{R}}}_{\infty }} = & \frac{(1.5936)(9.907)-(3.5477)(1.3002)}{(19)(1.5936)-{{(3.5477)}^{2}}} \\ = & 0.6316 \end{align}\,\! }[/math]

and:

- [math]\displaystyle{ \begin{align} \hat{\alpha } = & \frac{(3.5477)(9.907)-(19)(1.3002)}{(19)(1.5936)-{{(3.5477)}^{2}}} \\ = & 0.5902 \end{align}\,\! }[/math]

Therefore, the Lloyd-Lipow reliability growth model is as follows, where [math]\displaystyle{ k\,\! }[/math] is the number of the test stage.

- [math]\displaystyle{ {{R}_{k}}=0.6316-\frac{0.5902}{k}\,\! }[/math]
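
The arithmetic above can be reproduced with a short Python sketch. The function name `lloyd_lipow_least_squares` is hypothetical; the formulas are the same ratios of sums used in this example, with [math]\displaystyle{ n_k=1\,\! }[/math] and the 19 observed reliabilities copied from the table (stages 4 to 22).

```python
def lloyd_lipow_least_squares(reliabilities):
    """Least squares estimates (R_inf, alpha) for R_k = R_inf - alpha / k."""
    n = len(reliabilities)
    sum_inv_k = sum(1.0 / k for k in range(1, n + 1))
    sum_inv_k2 = sum(1.0 / k**2 for k in range(1, n + 1))
    sum_r = sum(reliabilities)
    sum_r_over_k = sum(r / k for k, r in enumerate(reliabilities, start=1))
    denom = n * sum_inv_k2 - sum_inv_k**2
    r_inf = (sum_inv_k2 * sum_r - sum_inv_k * sum_r_over_k) / denom
    alpha = (sum_inv_k * sum_r - n * sum_r_over_k) / denom
    return r_inf, alpha


# Observed reliabilities for the 19 retained stages, as listed in the table.
observed = [
    0.2500, 0.2000, 0.1667, 0.2857, 0.3750, 0.4444, 0.5000, 0.5455, 0.5833,
    0.6154, 0.6429, 0.6667, 0.6875, 0.6471, 0.6667, 0.6316, 0.6500, 0.6667,
    0.6818,
]
r_inf, alpha = lloyd_lipow_least_squares(observed)
print(round(r_inf, 4), round(alpha, 4))
```

Small differences in the last digit relative to the hand calculation can come from rounding of the intermediate sums.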

Using the data from the table:

- [math]\displaystyle{ \begin{align} \frac{{{\partial }^{2}}\Lambda }{\partial R_{\infty }^{2}} = & -176.847-40.500=-217.347 \\ \frac{{{\partial }^{2}}\Lambda }{\partial {{\alpha }^{2}}} = & -146.763-2.1274=-148.891 \\ \frac{{{\partial }^{2}}\Lambda }{\partial {{R}_{\infty }}\partial \alpha } = & 149.909-6.5660=143.343 \end{align}\,\! }[/math]

The variances can be calculated using the Fisher Matrix:

- [math]\displaystyle{ \begin{align} {{\left[ \begin{matrix} 217.347 & -143.343 \\ -143.343 & 148.891 \\ \end{matrix} \right]}^{-1}}= & \left[ \begin{matrix} Var({{\widehat{R}}_{\infty }}) & Cov({{\widehat{R}}_{\infty }},\widehat{\alpha }) \\ Cov({{\widehat{R}}_{\infty }},\widehat{\alpha }) & Var(\widehat{\alpha }) \\ \end{matrix} \right] \\ = & \left[ \begin{matrix} 0.0126033 & 0.0121335 \\ 0.0121335 & 0.0183977 \\ \end{matrix} \right] \end{align}\,\! }[/math]

The variance of [math]\displaystyle{ {{R}_{k}}\,\! }[/math] is therefore:

- [math]\displaystyle{ Var({{\widehat{R}}_{k}})=0.0126033+\frac{1}{{{k}^{2}}}\cdot 0.0183977-\frac{2}{k}\cdot 0.0121335\,\! }[/math]

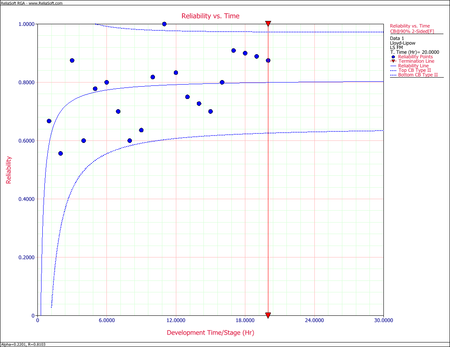

The confidence bounds on reliability can now be calculated. The associated confidence bounds on reliability at the 90% confidence level are plotted in the following figure, with the predicted reliability, [math]\displaystyle{ {{R}_{k}}\,\! }[/math].
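
As a rough numerical check, the bounds can be evaluated stage by stage in Python. The sketch below assumes the normal-approximation form [math]\displaystyle{ {{\widehat{R}}_{k}}\pm {{z}_{\alpha }}\sqrt{Var({{\widehat{R}}_{k}})}\,\! }[/math], which is not restated in this example, and clips the result to the interval from 0 to 1. Both choices are assumptions of this sketch, as are the function names. The parameter estimates and variances are the values computed above.

```python
from math import sqrt
from statistics import NormalDist

# Estimates and Fisher-matrix results from this example.
R_INF, ALPHA = 0.6316, 0.5902
VAR_R, VAR_A, COV_RA = 0.0126033, 0.0183977, 0.0121335


def reliability(k):
    """Predicted reliability at stage k."""
    return R_INF - ALPHA / k


def variance(k):
    """Variance of the predicted reliability at stage k."""
    return VAR_R + VAR_A / k**2 - 2.0 * COV_RA / k


def bounds(k, confidence=0.90):
    """Two-sided bounds, assuming a normal approximation (an assumption here)."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    half_width = z * sqrt(variance(k))
    r = reliability(k)
    # Clipping to [0, 1] is a convenience of this sketch.
    return max(0.0, r - half_width), min(1.0, r + half_width)


for k in (1, 5, 10, 19):
    lower, upper = bounds(k)
    print(k, round(lower, 4), round(reliability(k), 4), round(upper, 4))
```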

More Examples

Grouped per Configuration Example

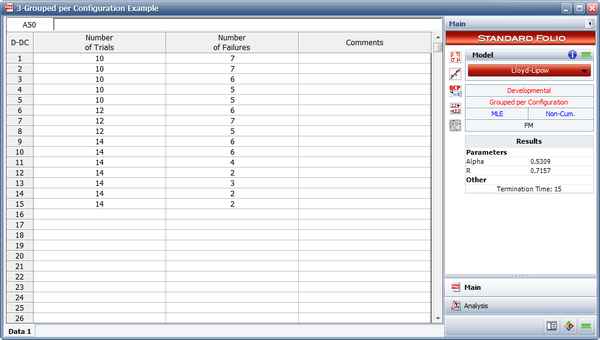

A 15-stage reliability development test program was performed. The grouped per configuration data set is shown in the following table. Do the following:

- Fit the Lloyd-Lipow model to the data using MLE.

- What is the maximum reliability attained as the number of test stages approaches infinity?

- What is the maximum achievable reliability with a 90% confidence level?

| Stage, [math]\displaystyle{ k\,\! }[/math] | Number of Tests ([math]\displaystyle{ n_k\,\! }[/math]) | Number of Successes ([math]\displaystyle{ S_k\,\! }[/math]) |

|---|---|---|

| 1 | 10 | 3 |

| 2 | 10 | 3 |

| 3 | 10 | 4 |

| 4 | 10 | 5 |

| 5 | 10 | 5 |

| 6 | 12 | 6 |

| 7 | 12 | 5 |

| 8 | 12 | 7 |

| 9 | 14 | 8 |

| 10 | 14 | 8 |

| 11 | 14 | 10 |

| 12 | 14 | 12 |

| 13 | 14 | 11 |

| 14 | 14 | 12 |

| 15 | 14 | 12 |

Solution

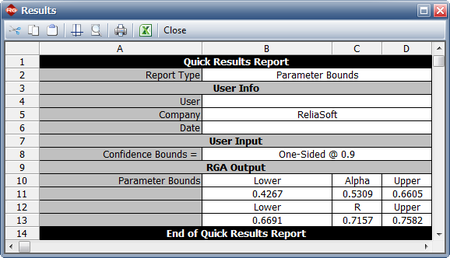

- The figure below displays the entered data and the estimated Lloyd-Lipow parameters.

- The maximum achievable reliability as the number of test stages approaches infinity is equal to the value of [math]\displaystyle{ R\,\! }[/math]. Therefore, [math]\displaystyle{ R=0.7157\,\! }[/math].

- The maximum achievable reliability with a 90% confidence level can be estimated by viewing the confidence bounds on the parameters in the QCP, as shown in the figure below. The lower bound on the value of [math]\displaystyle{ R\,\! }[/math] is equal to 0.6691.

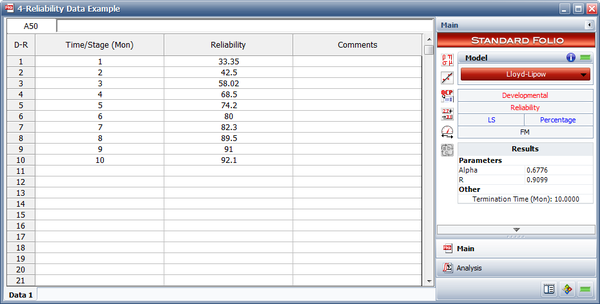
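
For readers without access to the software, the MLE fit can be approximated with a small pure-Python sketch. It is not the algorithm used by RGA: it maximizes the binomial log-likelihood [math]\displaystyle{ \Lambda =\sum [{{S}_{k}}\ln {{R}_{k}}+({{n}_{k}}-{{S}_{k}})\ln (1-{{R}_{k}})]\,\! }[/math] with a nested ternary search, which relies on the log-likelihood being concave in both parameters. The function names and search limits are assumptions of this sketch.

```python
import math


def log_likelihood(r_inf, alpha, tests, successes):
    """Binomial log-likelihood (constants dropped) for R_k = r_inf - alpha / k."""
    total = 0.0
    for k, (n, s) in enumerate(zip(tests, successes), start=1):
        p = r_inf - alpha / k
        total += s * math.log(p) + (n - s) * math.log(1.0 - p)
    return total


def ternary_max(func, lo, hi, iterations=60):
    """Locate the maximizer of a concave function on [lo, hi]."""
    for _ in range(iterations):
        left = lo + (hi - lo) / 3.0
        right = hi - (hi - lo) / 3.0
        if func(left) < func(right):
            lo = left
        else:
            hi = right
    return 0.5 * (lo + hi)


def fit_mle(tests, successes, eps=1e-9):
    """Return (r_inf, alpha) maximizing the log-likelihood."""
    def best_alpha(r_inf):
        # 0 < alpha < r_inf keeps every stage reliability inside (0, 1).
        return ternary_max(
            lambda a: log_likelihood(r_inf, a, tests, successes),
            eps, r_inf - eps)

    def profile(r_inf):
        return log_likelihood(r_inf, best_alpha(r_inf), tests, successes)

    r_inf = ternary_max(profile, 0.01, 1.0 - 1e-6)
    return r_inf, best_alpha(r_inf)


# Grouped per configuration data from the table above.
tests = [10, 10, 10, 10, 10, 12, 12, 12, 14, 14, 14, 14, 14, 14, 14]
successes = [3, 3, 4, 5, 5, 6, 5, 7, 8, 8, 10, 12, 11, 12, 12]
r_inf, alpha = fit_mle(tests, successes)
print(round(r_inf, 4), round(alpha, 4))
```

The nested search is slower than a Newton iteration but needs no derivatives, so it stays independent of the second partial derivative formulas.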

Reliability Data Example

Given the reliability data in the table below, do the following:

- Fit the Lloyd-Lipow model to the data using least squares analysis.

- Plot the Lloyd-Lipow reliability with 90% 2-sided confidence bounds.

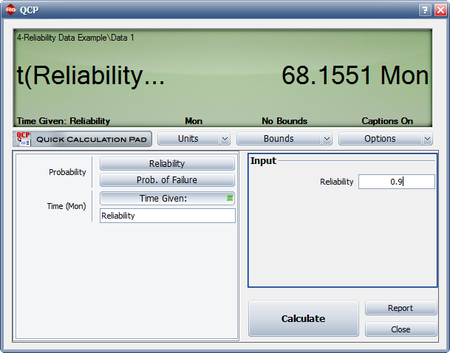

- How many months of testing are required to achieve a reliability goal of 90%?

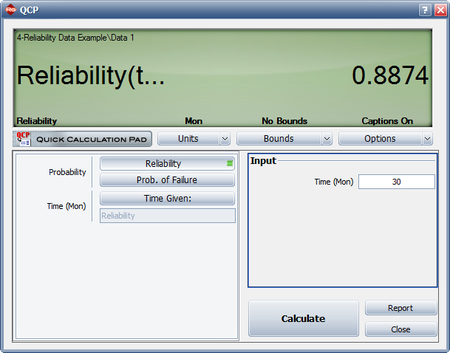

- What is the attainable reliability if the maximum duration of testing is 30 months?

| Time (months) | Reliability (%) |

|---|---|

| 1 | 33.35 |

| 2 | 42.50 |

| 3 | 58.02 |

| 4 | 68.50 |

| 5 | 74.20 |

| 6 | 80.00 |

| 7 | 82.30 |

| 8 | 89.50 |

| 9 | 91.00 |

| 10 | 92.10 |

Solution

- The next figure displays the estimated parameters.

- The figure below displays the Reliability vs. Time plot with 90% 2-sided confidence bounds.

- The next figure shows the number of months of testing required to achieve a reliability goal of 90%.

- The figure below displays the reliability achieved after 30 months of testing.
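
The last two questions follow from rearranging the model: [math]\displaystyle{ {{R}_{k}}={{R}_{\infty }}-\tfrac{\alpha }{k}\,\! }[/math] gives [math]\displaystyle{ k=\tfrac{\alpha }{{{R}_{\infty }}-{{R}_{k}}}\,\! }[/math] whenever the goal is below [math]\displaystyle{ {{R}_{\infty }}\,\! }[/math]. The Python sketch below is a minimal illustration with hypothetical function names. It fits the model by least squares to the table data converted to decimals (with [math]\displaystyle{ n_k=1\,\! }[/math]) and then evaluates both quantities. Its output may differ slightly from the software figures, and the required time is very sensitive to rounding because the goal is close to [math]\displaystyle{ {{R}_{\infty }}\,\! }[/math].

```python
def fit_least_squares(reliabilities):
    """Least squares (R_inf, alpha) for R_k = R_inf - alpha / k."""
    n = len(reliabilities)
    x = [1.0 / k for k in range(1, n + 1)]
    sx, sxx = sum(x), sum(v * v for v in x)
    sr = sum(reliabilities)
    sxr = sum(v * r for v, r in zip(x, reliabilities))
    denom = n * sxx - sx * sx
    return (sxx * sr - sx * sxr) / denom, (sx * sr - n * sxr) / denom


def stages_to_reach(goal, r_inf, alpha):
    """Stage at which the model reaches `goal`, or None if unattainable."""
    if goal >= r_inf:
        return None  # the model never exceeds R_inf
    return alpha / (r_inf - goal)


# Reliability data from the table, converted from percent to decimals.
data = [0.3335, 0.4250, 0.5802, 0.6850, 0.7420,
        0.8000, 0.8230, 0.8950, 0.9100, 0.9210]
r_inf, alpha = fit_least_squares(data)
months_for_goal = stages_to_reach(0.90, r_inf, alpha)
reliability_at_30 = r_inf - alpha / 30
print(r_inf, alpha, months_for_goal, reliability_at_30)
```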

Sequential Data Example

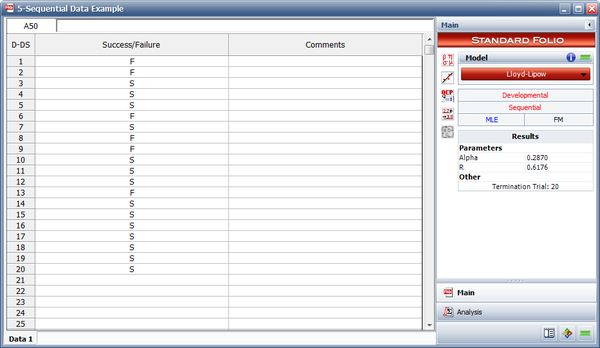

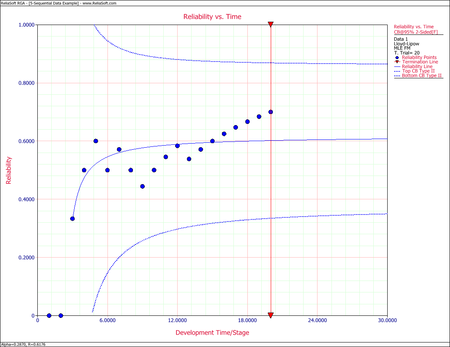

Use MLE to find the Lloyd-Lipow model that represents the data in the following table, and plot it along with the 95% 2-sided confidence bounds. Does the model follow the data?

| Run Number | Result |

|---|---|

| 1 | F |

| 2 | F |

| 3 | S |

| 4 | S |

| 5 | S |

| 6 | F |

| 7 | S |

| 8 | F |

| 9 | F |

| 10 | S |

| 11 | S |

| 12 | S |

| 13 | F |

| 14 | S |

| 15 | S |

| 16 | S |

| 17 | S |

| 18 | S |

| 19 | S |

| 20 | S |

Solution

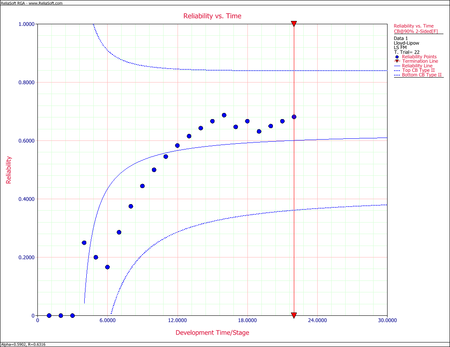

The two figures below demonstrate the solution. As can be seen from the Reliability vs. Time plot with 95% 2-sided confidence bounds, the model does not seem to follow the data. You may want to consider another model for this data set.

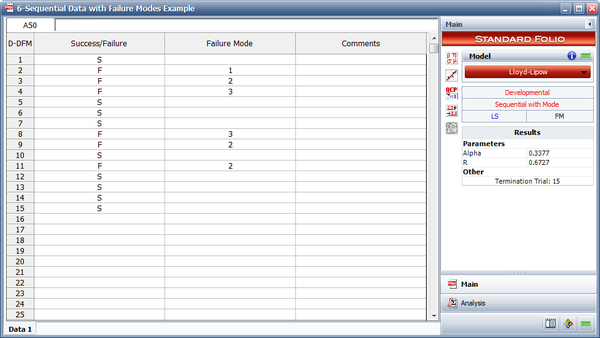

Sequential Data with Failure Modes Example

Use least squares to find the Lloyd-Lipow model that represents the data in the following table. This data set includes information about the failure mode that was responsible for each failure, so that the probability of each failure mode recurring is taken into account in the analysis.

| Run Number | Result | Mode |

|---|---|---|

| 1 | S | |

| 2 | F | 1 |

| 3 | F | 2 |

| 4 | F | 3 |

| 5 | S | |

| 6 | S | |

| 7 | S | |

| 8 | F | 3 |

| 9 | F | 2 |

| 10 | S | |

| 11 | F | 2 |

| 12 | S | |

| 13 | S | |

| 14 | S | |

| 15 | S | |

Solution

The following figure shows the analysis.