Multiple Linear Regression Analysis


Chuck Smith


{{Template:Doebook|4}}

This chapter expands on the analysis of simple linear regression models and discusses the analysis of multiple linear regression models. Many of the results displayed in [https://koi-3QN72QORVC.marketingautomation.services/net/m?md=Rw01CJDOxn%2FabhkPlZsy6DwBQ%2BaCXsGR Weibull++] DOE folios are explained in this chapter because these results are based on multiple linear regression. One application of multiple linear regression models is Response Surface Methodology (RSM). RSM is a method used to locate the optimum value of the response and is one of the final stages of experimentation. It is discussed in [[Response_Surface_Methods_for_Optimization| Response Surface Methods]]. Towards the end of this chapter, the use of indicator variables in regression models is explained. Indicator variables are used to represent qualitative factors in regression models. Understanding indicator variables is important for understanding ANOVA models, which are the models used to analyze data obtained from experiments. These models can be thought of as first order multiple linear regression models in which all the factors are treated as qualitative factors. ANOVA models are discussed in the [[One Factor Designs]] and [[General Full Factorial Designs]] chapters.

==Multiple Linear Regression Model==

A linear regression model that contains more than one predictor variable is called a ''multiple linear regression model''. The following model is a multiple linear regression model with two predictor variables, <math>{{x}_{1}}\,\!</math> and <math>{{x}_{2}}\,\!</math>.

::<math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+{{\beta }_{2}}{{x}_{2}}+\epsilon\,\!</math>

The model is linear because it is linear in the parameters <math>{{\beta }_{0}}\,\!</math>, <math>{{\beta }_{1}}\,\!</math> and <math>{{\beta }_{2}}\,\!</math>. The model describes a plane in the three-dimensional space of <math>Y\,\!</math>, <math>{{x}_{1}}\,\!</math> and <math>{{x}_{2}}\,\!</math>. The parameter <math>{{\beta }_{0}}\,\!</math> is the intercept of this plane. Parameters <math>{{\beta }_{1}}\,\!</math> and <math>{{\beta }_{2}}\,\!</math> are referred to as ''partial regression coefficients''. Parameter <math>{{\beta }_{1}}\,\!</math> represents the change in the mean response corresponding to a unit change in <math>{{x}_{1}}\,\!</math> when <math>{{x}_{2}}\,\!</math> is held constant. Parameter <math>{{\beta }_{2}}\,\!</math> represents the change in the mean response corresponding to a unit change in <math>{{x}_{2}}\,\!</math> when <math>{{x}_{1}}\,\!</math> is held constant.

Consider the following example of a multiple linear regression model with two predictor variables, <math>{{x}_{1}}\,\!</math> and <math>{{x}_{2}}\,\!</math>:

::<math>Y=30+5{{x}_{1}}+7{{x}_{2}}+\epsilon \,\!</math>

This regression model is a first order multiple linear regression model because the maximum power of the variables in the model is 1. The regression plane corresponding to this model is shown in the figure below, together with an observed data point and its corresponding random error, <math>\epsilon\,\!</math>. The true regression model is almost never known (and therefore the values of the random error terms corresponding to observed data points remain unknown). However, the regression model can be estimated by calculating the parameters of the model for an observed data set. This is explained in [[Multiple_Linear_Regression_Analysis#Estimating_Regression_Models_Using_Least_Squares| Estimating Regression Models Using Least Squares]].

The contour plot for the regression model in the above equation is also shown below. It shows lines of constant mean response as a function of <math>{{x}_{1}}\,\!</math> and <math>{{x}_{2}}\,\!</math>. The contour lines for this model are straight lines, as is always the case for first order regression models with no interaction terms.

A linear regression model may also take the following form:

::<math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+{{\beta }_{2}}{{x}_{2}}+{{\beta }_{12}}{{x}_{1}}{{x}_{2}}+\epsilon\,\!</math>

A cross-product term, <math>{{x}_{1}}{{x}_{2}}\,\!</math>, is included in the model. This term represents an interaction effect between the two variables <math>{{x}_{1}}\,\!</math> and <math>{{x}_{2}}\,\!</math>. Interaction means that the effect on the response of a change in one predictor variable depends on the level of the other predictor variable(s). As an example of a linear regression model with interaction, consider the model given by the equation <math>Y=30+5{{x}_{1}}+7{{x}_{2}}+3{{x}_{1}}{{x}_{2}}+\epsilon\,\!</math>. The regression surface and contour plot for this model are shown in two of the figures below.
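As a quick numerical check of the interaction idea, the following Python sketch evaluates the mean responses of the two example models (the error term is omitted). The function names are hypothetical and chosen only for this illustration. Without the cross-product term, the change in mean response for a unit increase in <math>{{x}_{1}}\,\!</math> is always 5; with the <math>3{{x}_{1}}{{x}_{2}}\,\!</math> term it becomes <math>5+3{{x}_{2}}\,\!</math>, so it depends on the level of <math>{{x}_{2}}\,\!</math>.

```python
def mean_response(x1: float, x2: float, b12: float = 0.0) -> float:
    """Mean of Y = 30 + 5*x1 + 7*x2 + b12*x1*x2 (the error term is omitted)."""
    return 30 + 5 * x1 + 7 * x2 + b12 * x1 * x2


def effect_of_unit_x1_change(x2: float, b12: float = 0.0) -> float:
    """Change in mean response when x1 increases from 0 to 1 with x2 held fixed."""
    return mean_response(1.0, x2, b12) - mean_response(0.0, x2, b12)


for x2 in (0.0, 1.0, 2.0):
    no_interaction = effect_of_unit_x1_change(x2)             # beta_1 = 5 at every x2
    with_interaction = effect_of_unit_x1_change(x2, b12=3.0)  # 5 + 3*x2
    print(f"x2 = {x2}: without interaction {no_interaction}, with interaction {with_interaction}")
```

The "without interaction" value stays at 5.0 for every <math>{{x}_{2}}\,\!</math>, while the "with interaction" value grows as 5.0, 8.0, 11.0.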

[[Image:doe5.1.png|center|437px|Regression plane for the model <math>Y=30+5 x_1+7 x_2+\epsilon\,\!</math>]]

[[Image:doe5.2.png|center|337px|Contour plot for the model <math>Y=30+5 x_1+7 x_2+\epsilon\,\!</math>]]

Now consider the regression model shown next:

::<math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+{{\beta }_{2}}x_{1}^{2}+{{\beta }_{3}}x_{1}^{3}+\epsilon\,\!</math>

This model is also a linear regression model and is referred to as a ''polynomial regression model''. Polynomial regression models contain squared and higher order terms of the predictor variables, making the response surface curvilinear. As an example of a polynomial regression model with an interaction term, consider the following equation:

::<math>Y=500+5{{x}_{1}}+7{{x}_{2}}-3x_{1}^{2}-5x_{2}^{2}+3{{x}_{1}}{{x}_{2}}+\epsilon\,\!</math>

This model is a ''second order'' model because the maximum power of the terms in the model is two. The regression surface for this model is shown in the following figure. Such regression models are used in RSM to find the optimum value of the response, <math>Y\,\!</math> (for details see [[Response_Surface_Methods_for_Optimization| Response Surface Methods for Optimization]]). Notice that, although the shape of the regression surface is curvilinear, the regression model is still linear because the model is linear in the parameters. The contour plot for this model is shown in the second of the following two figures.

[[Image:doe5.3.png|center|437px|Regression plane for the model <math>Y=30+5 x_1+7 x_2+3 x_1 x_2+\epsilon\,\!</math>]]

[[Image:doe5.4.png|center|337px|Contour plot for the model <math>Y=30+5 x_1+7 x_2+3 x_1 x_2+\epsilon\,\!</math>]]

All multiple linear regression models can be expressed in the following general form:

::<math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+{{\beta }_{2}}{{x}_{2}}+...+{{\beta }_{k}}{{x}_{k}}+\epsilon\,\!</math>

where <math>k\,\!</math> denotes the number of terms in the model. For example, the second order model given above can be written in the general form using <math>{{x}_{3}}=x_{1}^{2}\,\!</math>, <math>{{x}_{4}}=x_{2}^{2}\,\!</math> and <math>{{x}_{5}}={{x}_{1}}{{x}_{2}}\,\!</math> as follows:

::<math>Y=500+5{{x}_{1}}+7{{x}_{2}}-3{{x}_{3}}-5{{x}_{4}}+3{{x}_{5}}+\epsilon\,\!</math>
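The relabeling above can be sketched in Python with NumPy (assumed to be available). The helper `second_order_columns` is a hypothetical name; it stacks <math>{{x}_{1}}\,\!</math>, <math>{{x}_{2}}\,\!</math>, <math>{{x}_{3}}=x_{1}^{2}\,\!</math>, <math>{{x}_{4}}=x_{2}^{2}\,\!</math> and <math>{{x}_{5}}={{x}_{1}}{{x}_{2}}\,\!</math> as columns, after which the mean response is a linear combination of those columns. The sample settings are arbitrary and only show that both forms of the model agree.

```python
import numpy as np


def second_order_columns(x1: np.ndarray, x2: np.ndarray) -> np.ndarray:
    """Stack x1, x2, x3 = x1**2, x4 = x2**2 and x5 = x1*x2 as columns."""
    return np.column_stack([x1, x2, x1**2, x2**2, x1 * x2])


# Coefficients of x1..x5 in Y = 500 + 5x1 + 7x2 - 3x3 - 5x4 + 3x5 + epsilon.
beta = np.array([5.0, 7.0, -3.0, -5.0, 3.0])

# Arbitrary illustrative settings of the two original predictors.
x1 = np.array([0.0, 1.0, 2.0])
x2 = np.array([1.0, 1.0, 3.0])

Z = second_order_columns(x1, x2)
mean_y = 500 + Z @ beta  # a linear combination of the columns, i.e. linear in beta
direct = 500 + 5 * x1 + 7 * x2 - 3 * x1**2 - 5 * x2**2 + 3 * x1 * x2

print(mean_y)                       # [502. 507. 492.]
print(np.allclose(mean_y, direct))  # True
```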

==Estimating Regression Models Using Least Squares==

Consider a multiple linear regression model with <math>k\,\!</math> predictor variables:

::<math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+{{\beta }_{2}}{{x}_{2}}+...+{{\beta }_{k}}{{x}_{k}}+\epsilon\,\!</math>

Let each of the <math>k\,\!</math> predictor variables, <math>{{x}_{1}}\,\!</math>, <math>{{x}_{2}}\,\!</math>... <math>{{x}_{k}}\,\!</math>, have <math>n\,\!</math> levels. Then <math>{{x}_{ij}}\,\!</math> represents the <math>i\,\!</math>th level of the <math>j\,\!</math>th predictor variable <math>{{x}_{j}}\,\!</math>. For example, <math>{{x}_{51}}\,\!</math> represents the fifth level of the first predictor variable <math>{{x}_{1}}\,\!</math>, while <math>{{x}_{19}}\,\!</math> represents the first level of the ninth predictor variable, <math>{{x}_{9}}\,\!</math>. Observations, <math>{{y}_{1}}\,\!</math>, <math>{{y}_{2}}\,\!</math>... <math>{{y}_{n}}\,\!</math>, recorded for each of these <math>n\,\!</math> levels can be expressed in the following way:

::<math>\begin{align}
{{y}_{1}}= & {{\beta }_{0}}+{{\beta }_{1}}{{x}_{11}}+{{\beta }_{2}}{{x}_{12}}+...+{{\beta }_{k}}{{x}_{1k}}+{{\epsilon }_{1}} \\
{{y}_{2}}= & {{\beta }_{0}}+{{\beta }_{1}}{{x}_{21}}+{{\beta }_{2}}{{x}_{22}}+...+{{\beta }_{k}}{{x}_{2k}}+{{\epsilon }_{2}} \\
 & .. \\
{{y}_{i}}= & {{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}}+...+{{\beta }_{k}}{{x}_{ik}}+{{\epsilon }_{i}} \\
 & .. \\
{{y}_{n}}= & {{\beta }_{0}}+{{\beta }_{1}}{{x}_{n1}}+{{\beta }_{2}}{{x}_{n2}}+...+{{\beta }_{k}}{{x}_{nk}}+{{\epsilon }_{n}}
\end{align}\,\!</math>

The system of <math>n\,\!</math> equations shown previously can be represented in matrix notation as follows:

::<math>y=X\beta +\epsilon\,\!</math>

:where

::<math>y=\left[ \begin{matrix}
   {{y}_{1}}  \\
   {{y}_{2}}  \\
   .  \\
   .  \\
   .  \\
   {{y}_{n}}  \\
\end{matrix} \right]\,\!</math>

::<math>X=\left[ \begin{matrix}
   1 & {{x}_{11}} & {{x}_{12}} & . & . & . & {{x}_{1k}}  \\
   1 & {{x}_{21}} & {{x}_{22}} & . & . & . & {{x}_{2k}}  \\
   . & . & . & {} & {} & {} & .  \\
   . & . & . & {} & {} & {} & .  \\
   . & . & . & {} & {} & {} & .  \\
   1 & {{x}_{n1}} & {{x}_{n2}} & . & . & . & {{x}_{nk}}  \\
\end{matrix} \right]\,\!</math>

::<math>\beta =\left[ \begin{matrix}
   {{\beta }_{0}}  \\
   {{\beta }_{1}}  \\
   .  \\
   .  \\
   .  \\
   {{\beta }_{k}}  \\
\end{matrix} \right]\,\!</math>

::<math>\epsilon =\left[ \begin{matrix}
   {{\epsilon }_{1}}  \\
   {{\epsilon }_{2}}  \\
   .  \\
   .  \\
   .  \\
   {{\epsilon }_{n}}  \\
\end{matrix} \right]\,\!</math>

The matrix <math>X\,\!</math> is referred to as the ''design matrix''. It contains information about the levels of the predictor variables at which the observations are obtained. The vector <math>\beta\,\!</math> contains all the regression coefficients. To obtain the regression model, <math>\beta\,\!</math> must be estimated. The least squares estimate of <math>\beta\,\!</math> is obtained using the following equation:

::<math>\hat{\beta }={{({{X}^{\prime }}X)}^{-1}}{{X}^{\prime }}y\,\!</math>

where <math>^{\prime }\,\!</math> denotes the transpose of a matrix and <math>^{-1}\,\!</math> denotes the matrix inverse. Knowing the estimates, <math>\hat{\beta }\,\!</math>, the multiple linear regression model can now be estimated as:

::<math>\hat{y}=X\hat{\beta }\,\!</math>

The estimated regression model is also referred to as the ''fitted model''. The observations, <math>{{y}_{i}}\,\!</math>, may be different from the fitted values <math>{{\hat{y}}_{i}}\,\!</math> obtained from this model. The difference between these two values is the residual, <math>{{e}_{i}}\,\!</math>. The vector of residuals, <math>e\,\!</math>, is obtained as:

::<math>e=y-\hat{y}\,\!</math>
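A minimal NumPy sketch of the least squares steps described so far is shown below, assuming a small made-up data set (it is not the yield data used later in this chapter). It builds the design matrix with a leading column of ones, then computes <math>\hat{\beta }\,\!</math>, the fitted values and the residuals. Instead of forming <math>{{({{X}^{\prime }}X)}^{-1}}\,\!</math> explicitly, it solves the normal equations <math>({{X}^{\prime }}X)b={{X}^{\prime }}y\,\!</math> with `numpy.linalg.solve`; this gives the same estimate as the formula above and is generally more accurate numerically.

```python
import numpy as np

# Hypothetical data for illustration only: n = 6 runs, k = 2 predictors.
x1 = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
x2 = np.array([2.0, 1.0, 4.0, 3.0, 6.0, 5.0])
y = np.array([9.1, 8.9, 17.2, 15.8, 26.1, 23.9])

# Design matrix X: a column of ones for beta_0, then one column per predictor.
X = np.column_stack([np.ones_like(x1), x1, x2])

# beta_hat = (X'X)^{-1} X'y, computed by solving (X'X) b = X'y.
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)

y_hat = X @ beta_hat  # fitted values
e = y - y_hat         # residuals

print("beta_hat:", beta_hat)
print("residuals:", e)
```

A useful property to check is that the residual vector is orthogonal to every column of <math>X\,\!</math>, so <math>{{X}^{\prime }}e\,\!</math> is zero up to rounding.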

The fitted model can also be written as follows, using <math>\hat{\beta }={{({{X}^{\prime }}X)}^{-1}}{{X}^{\prime }}y\,\!</math>:

::<math>\begin{align}
\hat{y} & = & X\hat{\beta } \\
 & = & X{{({{X}^{\prime }}X)}^{-1}}{{X}^{\prime }}y \\
 & = & Hy
\end{align}\,\!</math>

where <math>H=X{{({{X}^{\prime }}X)}^{-1}}{{X}^{\prime }}\,\!</math>. The matrix <math>H\,\!</math> is referred to as the ''hat matrix''. It transforms the vector of observed response values, <math>y\,\!</math>, into the vector of fitted values, <math>\hat{y}\,\!</math>.
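The hat matrix can be formed directly in NumPy, as in the sketch below. The data are hypothetical (not from the example table). The checks confirm that <math>Hy\,\!</math> reproduces the fitted values <math>X\hat{\beta }\,\!</math> and that <math>H\,\!</math> is symmetric.

```python
import numpy as np

# Hypothetical data for illustration only (not the yield data from the example table).
x1 = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
x2 = np.array([2.0, 1.0, 4.0, 3.0, 6.0, 5.0])
y = np.array([9.1, 8.9, 17.2, 15.8, 26.1, 23.9])
X = np.column_stack([np.ones_like(x1), x1, x2])

# Hat matrix H = X (X'X)^{-1} X', an n x n matrix.
H = X @ np.linalg.inv(X.T @ X) @ X.T

# Fitted values computed from the least squares coefficients, for comparison.
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)
y_hat = X @ beta_hat

print(H.shape)                    # (6, 6)
print(np.allclose(H @ y, y_hat))  # True: H maps y onto y_hat
print(np.allclose(H, H.T))        # True: H is symmetric
```

Forming the full <math>n\times n\,\!</math> matrix is convenient for small examples like this one but becomes costly for large data sets.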

===Example===

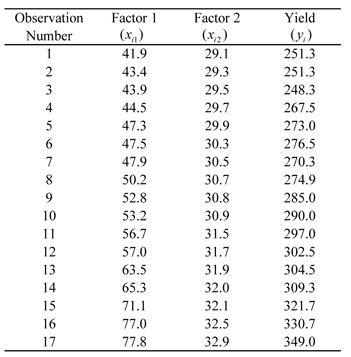

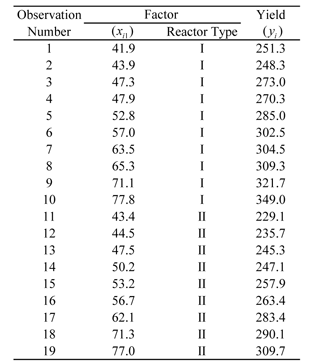

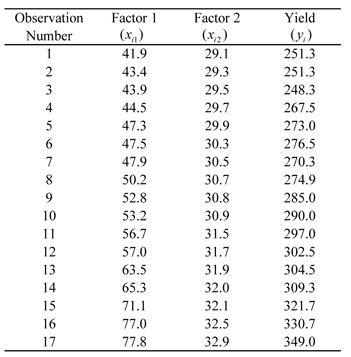

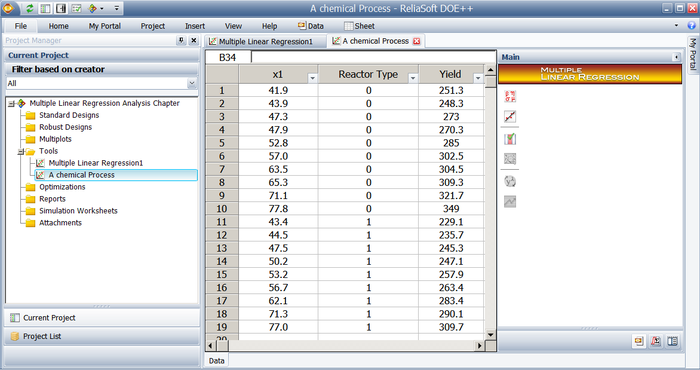

An analyst studying a chemical process expects the yield to be affected by the levels of two factors, <math>{{x}_{1}}\,\!</math> and <math>{{x}_{2}}\,\!</math>. Observations recorded for various levels of the two factors are shown in the following table. The analyst wants to fit a first order regression model to the data. Interaction between <math>{{x}_{1}}\,\!</math> and <math>{{x}_{2}}\,\!</math> is not expected based on knowledge of similar processes. Units of the factor levels and the yield are ignored for the analysis.

[[Image:doet5.1.png|center|351px|Observed yield data for various levels of two factors.|link=]]


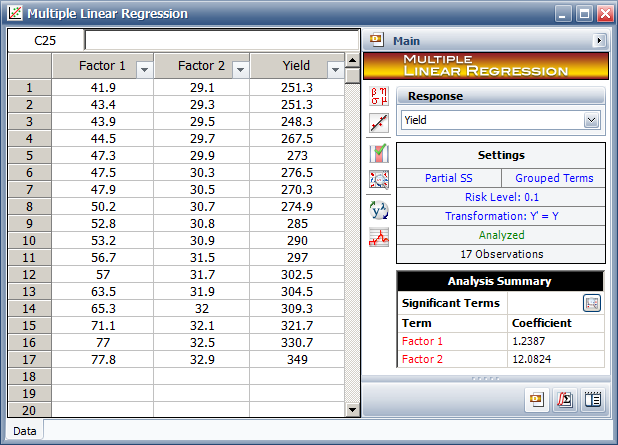

The data of the above table can be entered into the Weibull++ DOE folio using the multiple linear regression folio tool, as shown in the following figure.

[[Image:doe5_7.png|center|618px|Multiple Regression tool in Weibull++ with the data in the table.|link=]]

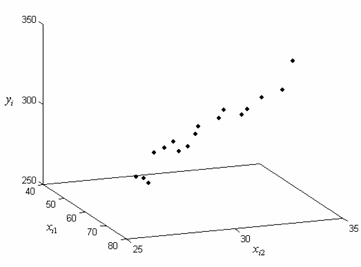

A scatter plot for the data is shown next.

[[Image:doe5.8.png|center|361px|Three-dimensional scatter plot for the observed data in the table.|link=]]

The first order regression model for this data set, which has two predictor variables, is:

::<math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+{{\beta }_{2}}{{x}_{2}}+\epsilon\,\!</math>

where the dependent variable, <math>Y\,\!</math>, represents the yield and the predictor variables, <math>{{x}_{1}}\,\!</math> and <math>{{x}_{2}}\,\!</math>, represent the two factors respectively. The <math>X\,\!</math> and <math>y\,\!</math> matrices for the data can be obtained as:

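::<math>X=\left[ \begin{matrix}
   1 & {{x}_{11}} & {{x}_{12}}  \\
   1 & {{x}_{21}} & {{x}_{22}}  \\
   . & . & .  \\
   . & . & .  \\
   . & . & .  \\
   1 & {{x}_{17,1}} & {{x}_{17,2}}  \\
\end{matrix} \right]\text{ and }y=\left[ \begin{matrix}
   {{y}_{1}}  \\
   {{y}_{2}}  \\
   .  \\
   .  \\
   .  \\
   {{y}_{17}}  \\
\end{matrix} \right]\,\!</math>

:where the entries are the factor levels and yields from the table above; for example, the last observed yield is <math>{{y}_{17}}=349.0\,\!</math>.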

The least square estimates, <math>\hat{\beta }\,\!</math>, can now be obtained:

::<math>\begin{align}
\hat{\beta } & = & {{({{X}^{\prime }}X)}^{-1}}{{X}^{\prime }}y \\
 & = & {{\left[ \begin{matrix}
   17 & 941 & 525.3  \\
   941 & . & .  \\
   525.3 & . & .  \\
\end{matrix} \right]}^{-1}}{{X}^{\prime }}y \\
 & = & \left[ \begin{matrix}
   -153.51  \\
   1.24  \\
   12.08  \\
\end{matrix} \right]
\end{align}\,\!</math>

:Thus:

::<math>\hat{\beta }=\left[ \begin{matrix}
   -153.51  \\
   1.24  \\
   12.08  \\
\end{matrix} \right]\,\!</math>

and the estimated regression coefficients are <math>{{\hat{\beta }}_{0}}=-153.51\,\!</math>, <math>{{\hat{\beta }}_{1}}=1.24\,\!</math> and <math>{{\hat{\beta }}_{2}}=12.08\,\!</math>. The fitted regression model is:

::<math>\begin{align}
\hat{y} & = & {{{\hat{\beta }}}_{0}}+{{{\hat{\beta }}}_{1}}{{x}_{1}}+{{{\hat{\beta }}}_{2}}{{x}_{2}} \\
 & = & -153.5+1.24{{x}_{1}}+12.08{{x}_{2}}
\end{align}\,\!</math>

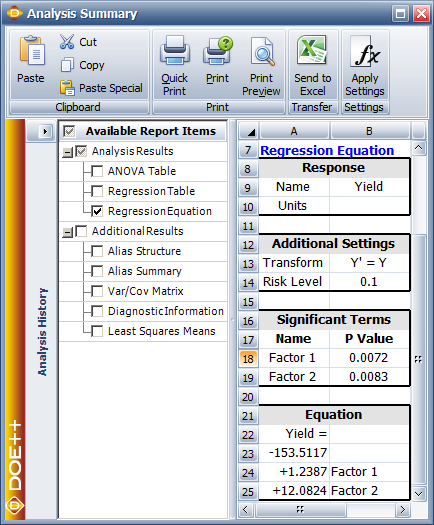

The fitted regression model can be viewed in the Weibull++ DOE folio, as shown next.

[[Image:doe5_9.png|center|434px|Equation of the fitted regression model for the data from the table.|link=]]

A plot of the fitted regression plane is shown in the following figure.

[[Image:doe5.10.png|center|362px|Fitted regression plane <math>\hat{y}=-153.5+1.24 x_1+12.08 x_2\,\!</math> for the data from the table.|link=]]

The fitted regression model can be used to obtain fitted values, <math>{{\hat{y}}_{i}}\,\!</math>, corresponding to an observed response value, <math>{{y}_{i}}\,\!</math>. For example, the fitted value corresponding to the fifth observation is:

::<math>\begin{align}
{{{\hat{y}}}_{i}} & = & -153.5+1.24{{x}_{i1}}+12.08{{x}_{i2}} \\
{{{\hat{y}}}_{5}} & = & -153.5+1.24{{x}_{51}}+12.08{{x}_{52}} \\
 & = & -153.5+1.24(47.3)+12.08(29.9) \\
 & = & 266.3
\end{align}\,\!</math>

The observed fifth response value is <math>{{y}_{5}}=273.0\,\!</math>. The residual corresponding to this value is:

::<math>\begin{align}
{{e}_{i}} & = & {{y}_{i}}-{{{\hat{y}}}_{i}} \\
{{e}_{5}} & = & {{y}_{5}}-{{{\hat{y}}}_{5}} \\
 & = & 273.0-266.3 \\
 & = & 6.7
\end{align}\,\!</math>

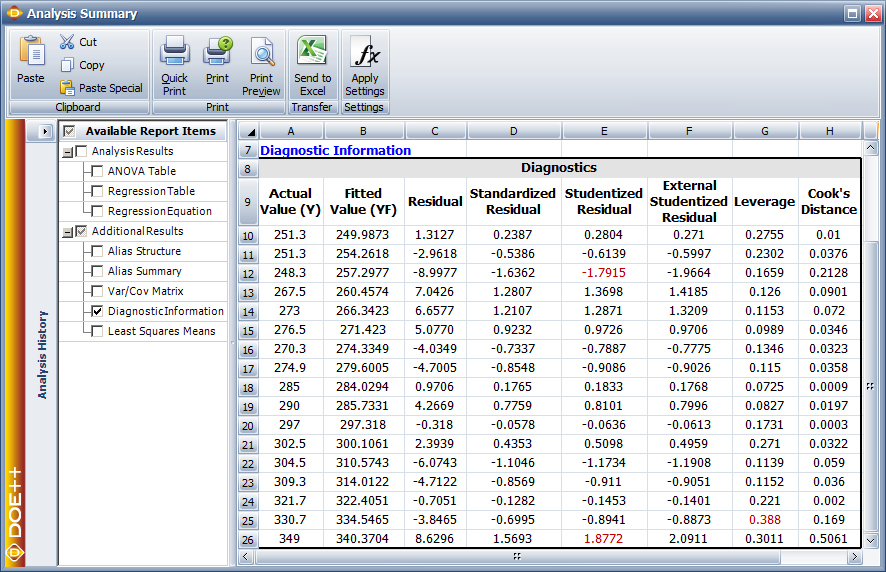

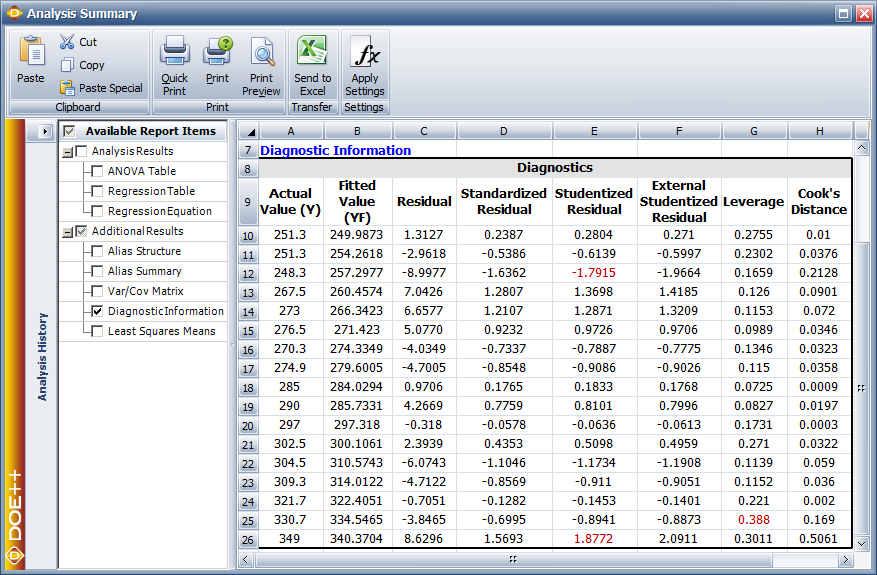

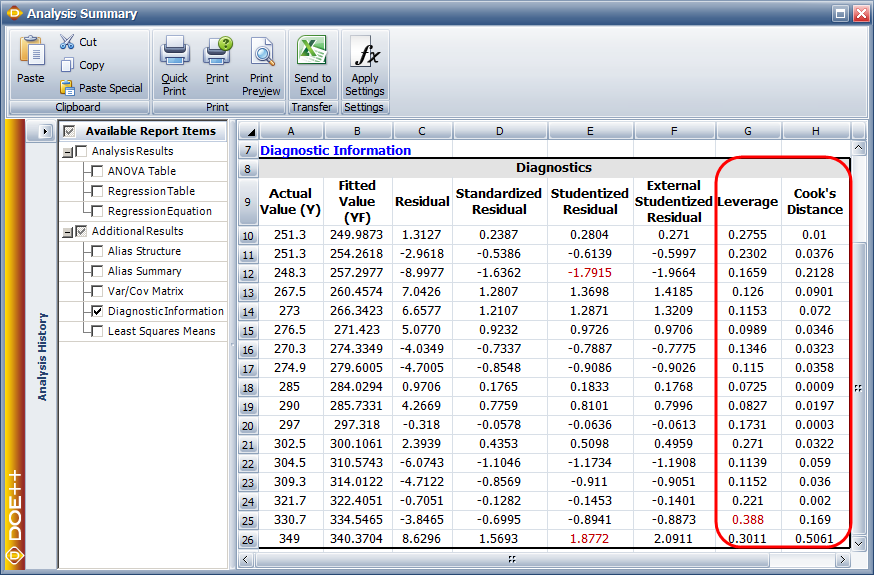

In Weibull++ DOE folios, fitted values and residuals are shown in the Diagnostic Information table of the detailed summary of results. The values are shown in the following figure.

[[Image:doe5_11.png|center|886px|Fitted values and residuals for the data in the table.|link=]]

The fitted regression model can also be used to predict response values. For example, to obtain the response value for a new observation corresponding to 47 units of <math>{{x}_{1}}\,\!</math> and 31 units of <math>{{x}_{2}}\,\!</math>, the value is calculated using:

::<math>\begin{align}
\hat{y}(47,31) & = & -153.5+1.24(47)+12.08(31) \\
 & = & 279.26
\end{align}\,\!</math>

===Properties of the Least Square Estimators for Beta===

The least square estimates, <math>{{\hat{\beta }}_{0}}\,\!</math>, <math>{{\hat{\beta }}_{1}}\,\!</math>, <math>{{\hat{\beta }}_{2}}\,\!</math>... <math>{{\hat{\beta }}_{k}}\,\!</math>, are unbiased estimators of <math>{{\beta }_{0}}\,\!</math>, <math>{{\beta }_{1}}\,\!</math>, <math>{{\beta }_{2}}\,\!</math>... <math>{{\beta }_{k}}\,\!</math>, provided that the random error terms, <math>{{\epsilon }_{i}}\,\!</math>, are normally and independently distributed with a mean of zero. The variances of the <math>{{\hat{\beta }}_{j}}\,\!</math> are obtained using the <math>{{({{X}^{\prime }}X)}^{-1}}\,\!</math> matrix. The variance-covariance matrix of the estimated regression coefficients is obtained as follows:


::<math>C={{\hat{\sigma }}^{2}}{{({{X}^{\prime }}X)}^{-1}}\,\!</math>

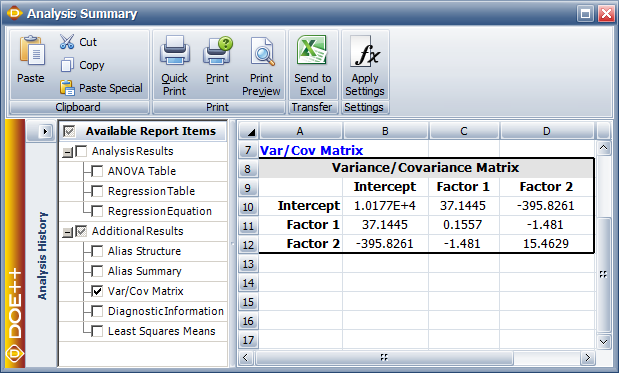

<math>C\,\!</math> is a symmetric matrix whose diagonal elements, <math>{{C}_{jj}}\,\!</math>, represent the variance of the estimated <math>j\,\!</math>th regression coefficient, <math>{{\hat{\beta }}_{j}}\,\!</math>. The off-diagonal elements, <math>{{C}_{ij}}\,\!</math>, represent the covariance between the <math>i\,\!</math>th and <math>j\,\!</math>th estimated regression coefficients, <math>{{\hat{\beta }}_{i}}\,\!</math> and <math>{{\hat{\beta }}_{j}}\,\!</math>. The value of <math>{{\hat{\sigma }}^{2}}\,\!</math> is the error mean square, <math>M{{S}_{E}}\,\!</math>. The variance-covariance matrix for the data in the table (see [[Multiple_Linear_Regression_Analysis#Estimating_Regression_Models_Using_Least_Squares| Estimating Regression Models Using Least Squares]]) can be viewed in the DOE folio, as shown next.

[[Image:doe5_12.png|center|619px|The variance-covariance matrix for the data in the table.|link=]]

Calculations to obtain the matrix are given in this [[Multiple_Linear_Regression_Analysis#Example| example]]. The positive square root of <math>{{C}_{jj}}\,\!</math> represents the estimated standard deviation of the <math>j\,\!</math>th regression coefficient, <math>{{\hat{\beta }}_{j}}\,\!</math>, and is called the estimated standard error of <math>{{\hat{\beta }}_{j}}\,\!</math> (abbreviated <math>se({{\hat{\beta }}_{j}})\,\!</math>):

::<math>se({{\hat{\beta }}_{j}})=\sqrt{{{C}_{jj}}}\,\!</math>
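The variance-covariance matrix and the standard errors can be sketched in NumPy as follows. The data are hypothetical (not the yield data from the table), and <math>{{\hat{\sigma }}^{2}}\,\!</math> is computed as the error mean square <math>S{{S}_{E}}/(n-(k+1))\,\!</math>, the definition used in the hypothesis-test section of this chapter.

```python
import numpy as np

# Hypothetical data for illustration only: n = 6 runs, k = 2 predictors.
x1 = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
x2 = np.array([2.0, 1.0, 4.0, 3.0, 6.0, 5.0])
y = np.array([9.1, 8.9, 17.2, 15.8, 26.1, 23.9])
X = np.column_stack([np.ones_like(x1), x1, x2])
n, p = X.shape  # p = k + 1 estimated coefficients

XtX_inv = np.linalg.inv(X.T @ X)
beta_hat = XtX_inv @ X.T @ y
e = y - X @ beta_hat

sigma2_hat = (e @ e) / (n - p)  # MS_E = SS_E / (n - (k + 1))
C = sigma2_hat * XtX_inv        # variance-covariance matrix of beta_hat
se = np.sqrt(np.diag(C))        # se(beta_hat_j) = sqrt(C_jj)

print("C =\n", C)
print("standard errors:", se)
```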

==Hypothesis Tests in Multiple Linear Regression==

This section discusses hypothesis tests on the regression coefficients in multiple linear regression. As in the case of simple linear regression, these tests can only be carried out if it can be assumed that the random error terms, <math>{{\epsilon }_{i}}\,\!</math>, are normally and independently distributed with a mean of zero and variance of <math>{{\sigma }^{2}}\,\!</math>.

Three types of hypothesis tests can be carried out for multiple linear regression models:

#Test for significance of regression: This test checks the significance of the whole regression model.

#<math>t\,\!</math> test: This test checks the significance of individual regression coefficients.

#<math>F\,\!</math> test: This test can be used to simultaneously check the significance of a number of regression coefficients. It can also be used to test individual coefficients.

===Test for Significance of Regression===

The test for significance of regression in the case of multiple linear regression analysis is carried out using the analysis of variance. The test is used to check if a linear statistical relationship exists between the response variable and at least one of the predictor variables. The statements for the hypotheses are:

::<math>\begin{align}
& {{H}_{0}}: & {{\beta }_{1}}={{\beta }_{2}}=...={{\beta }_{k}}=0 \\
& {{H}_{1}}: & {{\beta }_{j}}\ne 0\text{ for at least one }j
\end{align}\,\!</math>

The test for <math>{{H}_{0}}\,\!</math> is carried out using the following statistic:

::<math>{{F}_{0}}=\frac{M{{S}_{R}}}{M{{S}_{E}}}\,\!</math>

where <math>M{{S}_{R}}\,\!</math> is the regression mean square and <math>M{{S}_{E}}\,\!</math> is the error mean square. If the null hypothesis, <math>{{H}_{0}}\,\!</math>, is true, then the statistic <math>{{F}_{0}}\,\!</math> follows the <math>F\,\!</math> distribution with <math>k\,\!</math> degrees of freedom in the numerator and <math>n-(k+1)\,\!</math> degrees of freedom in the denominator. The null hypothesis, <math>{{H}_{0}}\,\!</math>, is rejected if the calculated statistic, <math>{{F}_{0}}\,\!</math>, is such that:

::<math>{{F}_{0}}>{{f}_{\alpha ,k,n-(k+1)}}\,\!</math>

====Calculation of the Statistic <math>{{F}_{0}}\,\!</math>====

To calculate the statistic <math>{{F}_{0}}\,\!</math>, the mean squares <math>M{{S}_{R}}\,\!</math> and <math>M{{S}_{E}}\,\!</math> must be known. As explained in [http://reliawiki.com/index.php/Simple_Linear_Regression_Analysis Simple Linear Regression Analysis], the mean squares are obtained by dividing the sums of squares by their degrees of freedom. For example, the total mean square, <math>M{{S}_{T}}\,\!</math>, is obtained as follows:

::<math>M{{S}_{T}}=\frac{S{{S}_{T}}}{dof(S{{S}_{T}})}\,\!</math>

where <math>S{{S}_{T}}\,\!</math> is the total sum of squares and <math>dof(S{{S}_{T}})\,\!</math> is the number of degrees of freedom associated with <math>S{{S}_{T}}\,\!</math>. In multiple linear regression, the following equation is used to calculate <math>S{{S}_{T}}\,\!</math>:

::<math>S{{S}_{T}}={{y}^{\prime }}\left[ I-(\frac{1}{n})J \right]y\,\!</math>

where <math>n\,\!</math> is the total number of observations, <math>y\,\!</math> is the vector of observations (defined in [http://reliawiki.com/index.php/Multiple_Linear_Regression_Analysis#Estimating_Regression_Models_Using_Least_Squares Estimating Regression Models Using Least Squares]), <math>I\,\!</math> is the identity matrix of order <math>n\,\!</math> and <math>J\,\!</math> represents an <math>n\times n\,\!</math> square matrix of ones. The number of degrees of freedom associated with <math>S{{S}_{T}}\,\!</math>, <math>dof(S{{S}_{T}})\,\!</math>, is <math>n-1\,\!</math>. Knowing <math>S{{S}_{T}}\,\!</math> and <math>dof(S{{S}_{T}})\,\!</math>, the total mean square, <math>M{{S}_{T}}\,\!</math>, can be calculated.

The regression mean square, <math>M{{S}_{R}}\,\!</math>, is obtained by dividing the regression sum of squares, <math>S{{S}_{R}}\,\!</math>, by the respective degrees of freedom, <math>dof(S{{S}_{R}})\,\!</math>, as follows:

::<math>M{{S}_{R}}=\frac{S{{S}_{R}}}{dof(S{{S}_{R}})}\,\!</math>

The regression sum of squares, <math>S{{S}_{R}}\,\!</math>, is calculated using the following equation:

::<math>S{{S}_{R}}={{y}^{\prime }}\left[ H-(\frac{1}{n})J \right]y\,\!</math>

where <math>n\,\!</math> is the total number of observations, <math>y\,\!</math> is the vector of observations, <math>H\,\!</math> is the hat matrix and <math>J\,\!</math> represents an <math>n\times n\,\!</math> square matrix of ones. The number of degrees of freedom associated with <math>S{{S}_{R}}\,\!</math>, <math>dof(S{{S}_{R}})\,\!</math>, is <math>k\,\!</math>, where <math>k\,\!</math> is the number of predictor variables in the model. Knowing <math>S{{S}_{R}}\,\!</math> and <math>dof(S{{S}_{R}})\,\!</math>, the regression mean square, <math>M{{S}_{R}}\,\!</math>, can be calculated.

The error mean square, <math>M{{S}_{E}}\,\!</math>, is obtained by dividing the error sum of squares, <math>S{{S}_{E}}\,\!</math>, by the respective degrees of freedom, <math>dof(S{{S}_{E}})\,\!</math>, as follows:

::<math>M{{S}_{E}}=\frac{S{{S}_{E}}}{dof(S{{S}_{E}})}\,\!</math>

The error sum of squares, <math>S{{S}_{E}}\,\!</math>, is calculated using the following equation:

::<math>S{{S}_{E}}={{y}^{\prime }}(I-H)y\,\!</math>

where <math>y\,\!</math> is the vector of observations, <math>I\,\!</math> is the identity matrix of order <math>n\,\!</math> and <math>H\,\!</math> is the hat matrix. The number of degrees of freedom associated with <math>S{{S}_{E}}\,\!</math>, <math>dof(S{{S}_{E}})\,\!</math>, is <math>n-(k+1)\,\!</math>, where <math>n\,\!</math> is the total number of observations and <math>k\,\!</math> is the number of predictor variables in the model. Knowing <math>S{{S}_{E}}\,\!</math> and <math>dof(S{{S}_{E}})\,\!</math>, the error mean square, <math>M{{S}_{E}}\,\!</math>, can be calculated. The error mean square is an estimate of the variance, <math>{{\sigma }^{2}}\,\!</math>, of the random error terms, <math>{{\epsilon }_{i}}\,\!</math>.

::<math>{{\hat{\sigma }}^{2}}=M{{S}_{E}}\,\!</math>
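The matrix formulas for the sums of squares translate directly into NumPy. The sketch below uses hypothetical data, builds <math>I\,\!</math>, <math>J\,\!</math> and <math>H\,\!</math> explicitly (fine for small <math>n\,\!</math>, wasteful for large data sets), and checks that <math>S{{S}_{T}}=S{{S}_{R}}+S{{S}_{E}}\,\!</math> before forming the mean squares and <math>{{F}_{0}}\,\!</math>.

```python
import numpy as np

# Hypothetical data for illustration only (not the yield data from the example table).
x1 = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
x2 = np.array([2.0, 1.0, 4.0, 3.0, 6.0, 5.0])
y = np.array([9.1, 8.9, 17.2, 15.8, 26.1, 23.9])
X = np.column_stack([np.ones_like(x1), x1, x2])
n, p = X.shape
k = p - 1                              # number of predictor variables

H = X @ np.linalg.inv(X.T @ X) @ X.T  # hat matrix
I = np.eye(n)                          # identity matrix of order n
J = np.ones((n, n))                    # n x n matrix of ones

SS_T = y @ (I - J / n) @ y             # total sum of squares, n - 1 dof
SS_R = y @ (H - J / n) @ y             # regression sum of squares, k dof
SS_E = y @ (I - H) @ y                 # error sum of squares, n - (k + 1) dof

MS_R = SS_R / k
MS_E = SS_E / (n - (k + 1))            # also the estimate of sigma^2
F0 = MS_R / MS_E
print(f"SS_T = {SS_T:.4f}, SS_R + SS_E = {SS_R + SS_E:.4f}, F0 = {F0:.3f}")
```

The identity <math>S{{S}_{T}}=S{{S}_{R}}+S{{S}_{E}}\,\!</math> holds because <math>(H-\tfrac{1}{n}J)+(I-H)=I-\tfrac{1}{n}J\,\!</math>.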

=====Example=====

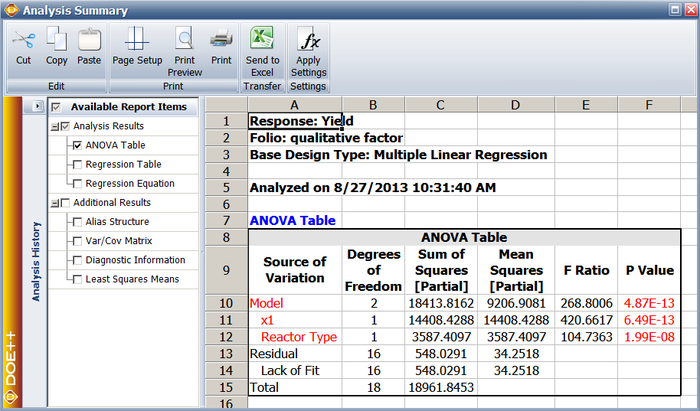

The test for the significance of regression, for the regression model obtained for the data in the table (see [[Multiple_Linear_Regression_Analysis#Estimating_Regression_Models_Using_Least_Squares| Estimating Regression Models Using Least Squares]]), is illustrated in this example. The null hypothesis for the model is:

::<math>{{H}_{0}}: {{\beta }_{1}}={{\beta }_{2}}=0\,\!</math>

The statistic to test <math>{{H}_{0}}\,\!</math> is:

::<math>{{F}_{0}}=\frac{M{{S}_{R}}}{M{{S}_{E}}}\,\!</math>

To calculate <math>{{F}_{0}}\,\!</math>, the sums of squares are calculated first so that the mean squares can be obtained. The mean squares are then used to calculate the statistic <math>{{F}_{0}}\,\!</math> to carry out the significance test.

The regression sum of squares, <math>S{{S}_{R}}\,\!</math>, can be obtained as:

::<math>S{{S}_{R}}={{y}^{\prime }}\left[ H-(\frac{1}{n})J \right]y\,\!</math>

The hat matrix, <math>H\,\!</math>, is calculated as follows using the design matrix <math>X\,\!</math> from the previous [[Multiple_Linear_Regression_Analysis#Example| example]]:

::<math>\begin{align}
H & = & X{{({{X}^{\prime }}X)}^{-1}}{{X}^{\prime }} \\
 & = & \left[ \begin{matrix}
   0.27552 & 0.25154 & . & . & -0.04030  \\
   . & . & . & . & .  \\
   . & . & . & . & .  \\
   . & . & . & . & .  \\
   -0.04030 & -0.02920 & . & . & 0.30115  \\
\end{matrix} \right]
\end{align}\,\!</math>

Knowing <math>y\,\!</math>, <math>H\,\!</math> and <math>J\,\!</math>, the regression sum of squares, <math>S{{S}_{R}}\,\!</math>, can be calculated:

::<math>\begin{align}
S{{S}_{R}} & = & {{y}^{\prime }}\left[ H-(\frac{1}{n})J \right]y \\
 & = & 12816.35
\end{align}\,\!</math>

The number of degrees of freedom associated with <math>S{{S}_{R}}\,\!</math> is <math>k\,\!</math>, which equals two since there are two predictor variables in the data in the table (see [[Multiple_Linear_Regression_Analysis#Estimating_Regression_Models_Using_Least_Squares| Estimating Regression Models Using Least Squares]]). Therefore, the regression mean square is:

::<math>\begin{align}
M{{S}_{R}} & = & \frac{S{{S}_{R}}}{dof(S{{S}_{R}})} \\
 & = & \frac{12816.35}{2} \\
 & = & 6408.17
\end{align}\,\!</math>

Similarly, to calculate the error mean square, <math>M{{S}_{E}}\,\!</math>, the error sum of squares, <math>S{{S}_{E}}\,\!</math>, can be obtained as:

::<math>\begin{align}
S{{S}_{E}} & = & {{y}^{\prime }}\left[ I-H \right]y \\
 & = & 423.37
\end{align}\,\!</math>

The number of degrees of freedom associated with <math>S{{S}_{E}}\,\!</math> is <math>n-(k+1)\,\!</math>. Therefore, the error mean square, <math>M{{S}_{E}}\,\!</math>, is:

::<math>\begin{align}
M{{S}_{E}} & = & \frac{S{{S}_{E}}}{dof(S{{S}_{E}})} \\
 & = & \frac{S{{S}_{E}}}{(n-(k+1))} \\
 & = & \frac{423.37}{(17-(2+1))} \\
 & = & 30.24
\end{align}\,\!</math>

The statistic to test the significance of regression can now be calculated as:

::<math>\begin{align}
{{f}_{0}} & = & \frac{M{{S}_{R}}}{M{{S}_{E}}} \\
 & = & \frac{6408.17}{423.37/(17-3)} \\
 & = & 211.9
\end{align}\,\!</math>

The critical value for this test, corresponding to a significance level of 0.1, is:

::<math>\begin{align}
{{f}_{\alpha ,k,n-(k+1)}} & = & {{f}_{0.1,2,14}} \\
 & = & 2.726
\end{align}\,\!</math>

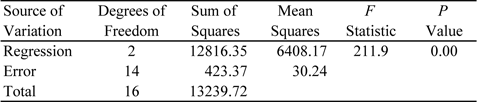

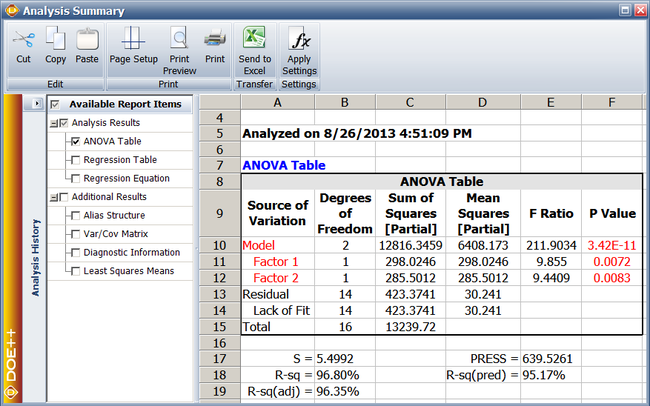

Since <math>{{f}_{0}}>{{f}_{0.1,2,14}}\,\!</math>, <math>{{H}_{0}}:\,\!</math> <math>{{\beta }_{1}}={{\beta }_{2}}=0\,\!</math> is rejected and it is concluded that at least one of the coefficients <math>{{\beta }_{1}}\,\!</math> and <math>{{\beta }_{2}}\,\!</math> is significant. In other words, a linear relationship exists between the yield and at least one of the two factors in the table. The analysis of variance is summarized in the following table.

===Test on Individual Regression Coefficients ( | [[Image:doet5.2.png|center|477px|ANOVA table for the significance of regression test.|link=]] | ||


===Test on Individual Regression Coefficients (''t'' Test)===

The <math>t\,\!</math> test is used to check the significance of individual regression coefficients in the multiple linear regression model. Adding a significant variable to a regression model makes the model more effective, while adding an unimportant variable may make the model worse. The hypothesis statements to test the significance of a particular regression coefficient, <math>{{\beta }_{j}}\,\!</math>, are:

::<math>\begin{align}
& {{H}_{0}}: & {{\beta }_{j}}=0 \\
& {{H}_{1}}: & {{\beta }_{j}}\ne 0
\end{align}\,\!</math>

The test statistic for this test is based on the <math>t\,\!</math> distribution (and is similar to the one used in the case of simple linear regression models in [[Simple_Linear_Regression_Analysis| Simple Linear Regression Analysis]]):

::<math>{{T}_{0}}=\frac{{{{\hat{\beta }}}_{j}}}{se({{{\hat{\beta }}}_{j}})}\,\!</math>

where the standard error, <math>se({{\hat{\beta }}_{j}})\,\!</math>, is the square root of the diagonal element of the variance-covariance matrix of the estimated coefficients, <math>C={{\hat{\sigma }}^{2}}{{({{X}^{\prime }}X)}^{-1}}\,\!</math>, that corresponds to <math>{{\hat{\beta }}_{j}}\,\!</math>. The analyst would fail to reject the null hypothesis if the test statistic lies in the acceptance region:

::<math>-{{t}_{\alpha /2,n-(k+1)}}<{{T}_{0}}<{{t}_{\alpha /2,n-(k+1)}}\,\!</math>

This test measures the contribution of a variable while the remaining variables are included in the model. For the model <math>\hat{y}={{\hat{\beta }}_{0}}+{{\hat{\beta }}_{1}}{{x}_{1}}+{{\hat{\beta }}_{2}}{{x}_{2}}+{{\hat{\beta }}_{3}}{{x}_{3}}\,\!</math>, if the test is carried out for <math>{{\beta }_{1}}\,\!</math>, then the test will check the significance of including the variable <math>{{x}_{1}}\,\!</math> in the model that contains <math>{{x}_{2}}\,\!</math> and <math>{{x}_{3}}\,\!</math> (i.e., the model <math>\hat{y}={{\hat{\beta }}_{0}}+{{\hat{\beta }}_{2}}{{x}_{2}}+{{\hat{\beta }}_{3}}{{x}_{3}}\,\!</math>). Hence the test is also referred to as a partial or marginal test. In DOE folios, this test is displayed in the Regression Information table.


====Example====

The test to check the significance of the estimated regression coefficients for the data is illustrated in this example. The null hypothesis to test the coefficient <math>{{\beta }_{2}}\,\!</math> is:

::<math>{{H}_{0}}:{{\beta }_{2}}=0\,\!</math>

The null hypothesis to test <math>{{\beta }_{1}}\,\!</math> can be obtained in a similar manner. To calculate the test statistic, <math>{{T}_{0}}\,\!</math>, we need to calculate the standard error.

In the [[Multiple_Linear_Regression_Analysis#Example_2|example]], the value of the error mean square, <math>M{{S}_{E}}\,\!</math>, was obtained as 30.24. The error mean square is an estimate of the variance, <math>{{\sigma }^{2}}\,\!</math>.

:Therefore:

::<math>\begin{align}
{{{\hat{\sigma }}}^{2}} &= & M{{S}_{E}} \\
& = & 30.24
\end{align}\,\!</math>

The variance-covariance matrix of the estimated regression coefficients is:

::<math>\begin{align}
C &= & {{{\hat{\sigma }}}^{2}}{{({{X}^{\prime }}X)}^{-1}} \\
& = & 30.24\left[ \begin{matrix}
336.5 & 1.2 & -13.1 \\
. & . & . \\
. & . & . \\
\end{matrix} \right] \\
& = & \left[ \begin{matrix}
. & . & . \\
. & . & . \\
-395.83 & -1.481 & 15.463 \\
\end{matrix} \right]
\end{align}\,\!</math>

From the diagonal elements of <math>C\,\!</math>, the estimated standard errors for <math>{{\hat{\beta }}_{1}}\,\!</math> and <math>{{\hat{\beta }}_{2}}\,\!</math> are:

::<math>\begin{align}
se({{{\hat{\beta }}}_{1}}) &= & \sqrt{0.1557}=0.3946 \\
se({{{\hat{\beta }}}_{2}})& = & \sqrt{15.463}=3.93
\end{align}\,\!</math>

The corresponding test statistics for these coefficients are:

::<math>\begin{align}
{{({{t}_{0}})}_{{{{\hat{\beta }}}_{1}}}} &= & \frac{{{{\hat{\beta }}}_{1}}}{se({{{\hat{\beta }}}_{1}})}=\frac{1.24}{0.3946}=3.1393 \\
{{({{t}_{0}})}_{{{{\hat{\beta }}}_{2}}}} &= & \frac{{{{\hat{\beta }}}_{2}}}{se({{{\hat{\beta }}}_{2}})}=\frac{12.08}{3.93}=3.0726
\end{align}\,\!</math>

The critical values for the present <math>t\,\!</math> test at a significance level of 0.1 are:

::<math>\begin{align}
{{t}_{\alpha /2,n-(k+1)}} &= & {{t}_{0.05,14}}=1.761 \\
-{{t}_{\alpha /2,n-(k+1)}} & = & -{{t}_{0.05,14}}=-1.761
\end{align}\,\!</math>
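The standard errors, test statistics and acceptance-region check above can be reproduced with the following sketch. It assumes only the rounded values quoted in this example (the coefficient estimates 1.24 and 12.08, and the diagonal elements 0.1557 and 15.463 of <math>C\,\!</math>), so the last digits of the computed <math>{{t}_{0}}\,\!</math> values differ slightly from the software output.

```python
import math

# Rounded values quoted in this example.
estimates = {"beta_1": 1.24, "beta_2": 12.08}     # coefficient estimates
c_diag = {"beta_1": 0.1557, "beta_2": 15.463}     # diagonal elements of C
t_crit = 1.761                                    # t_{0.05,14}: alpha = 0.1, n-(k+1) = 14

results = {}
for name, beta_hat in estimates.items():
    se = math.sqrt(c_diag[name])            # se(beta_j) = sqrt(C_jj)
    t0 = beta_hat / se                      # T_0 = beta_j / se(beta_j)
    in_acceptance = -t_crit < t0 < t_crit   # H0 is not rejected inside this region
    results[name] = (se, t0, not in_acceptance)
    print(f"{name}: se = {se:.4f}, t0 = {t0:.4f}, reject H0: {not in_acceptance}")
```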

Considering <math>{{\hat{\beta }}_{2}}\,\!</math>, it can be seen that <math>{{({{t}_{0}})}_{{{{\hat{\beta }}}_{2}}}}\,\!</math> does not lie in the acceptance region of <math>-{{t}_{0.05,14}}<{{t}_{0}}<{{t}_{0.05,14}}\,\!</math>. The null hypothesis, <math>{{H}_{0}}:{{\beta }_{2}}=0\,\!</math>, is rejected and it is concluded that <math>{{\beta }_{2}}\,\!</math> is significant at <math>\alpha =0.1\,\!</math>. This conclusion can also be arrived at using the <math>p\,\!</math> value, noting that the hypothesis is two-sided. The <math>p\,\!</math> value corresponding to the test statistic, <math>{{({{t}_{0}})}_{{{{\hat{\beta }}}_{2}}}} = 3.0726\,\!</math>, based on the <math>t\,\!</math> distribution with 14 degrees of freedom is:

::<math>\begin{align}
p\text{ }value & = & 2\times (1-P(T\le |{{t}_{0}}|)) \\
& = & 2\times (1-0.9959) \\
& = & 0.0083
\end{align}\,\!</math>

Since the <math>p\,\!</math> value is less than the significance level, <math>\alpha =0.1\,\!</math>, it is concluded that <math>{{\beta }_{2}}\,\!</math> is significant. The hypothesis test on <math>{{\beta }_{1}}\,\!</math> can be carried out in a similar manner.
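The <math>p\,\!</math> value requires the cumulative distribution function of the <math>t\,\!</math> distribution. In practice a statistics library would be used (for example, SciPy's <code>scipy.stats.t.sf</code>); the following standard-library sketch instead integrates the <math>t\,\!</math> density numerically with Simpson's rule, which is enough to reproduce the two-sided <math>p\,\!</math> value of about 0.0083 for <math>{{t}_{0}}=3.0726\,\!</math> with 14 degrees of freedom. Because an <math>F\,\!</math> statistic with one numerator degree of freedom is the square of a <math>t\,\!</math> statistic, the same helper also gives the partial <math>F\,\!</math> test <math>p\,\!</math> value of about 0.0072 used later in this chapter. The function names are hypothetical.

```python
import math


def t_pdf(x: float, nu: int) -> float:
    """Density of Student's t distribution with nu degrees of freedom."""
    log_c = math.lgamma((nu + 1) / 2) - math.lgamma(nu / 2) - 0.5 * math.log(nu * math.pi)
    return math.exp(log_c) * (1 + x * x / nu) ** (-(nu + 1) / 2)


def two_sided_p(t0: float, nu: int, steps: int = 2000) -> float:
    """Two-sided p value 2*(1 - P(T <= |t0|)) via Simpson's rule on [0, |t0|].

    steps must be even for Simpson's rule.
    """
    b = abs(t0)
    h = b / steps
    total = t_pdf(0.0, nu) + t_pdf(b, nu)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, nu)
    area = total * h / 3          # P(0 <= T <= |t0|)
    return 1 - 2 * area           # uses the symmetry of the t density about 0


# t test on beta_2: t0 = 3.0726 with n-(k+1) = 14 degrees of freedom.
p_beta2 = two_sided_p(3.0726, 14)

# Partial F test on beta_1: F with (1, 14) dof is T^2, so P(F > f0) = P(|T| > sqrt(f0)).
p_beta1_f = two_sided_p(math.sqrt(9.855), 14)

print(f"p value (t test, beta_2): {p_beta2:.4f}")
print(f"p value (partial F test, beta_1): {p_beta1_f:.4f}")
```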

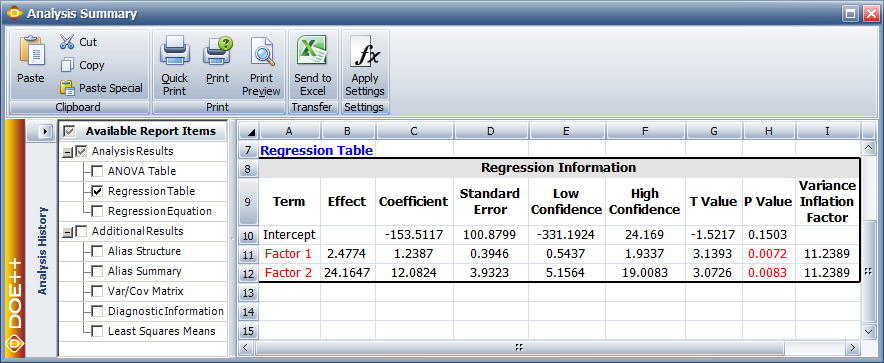

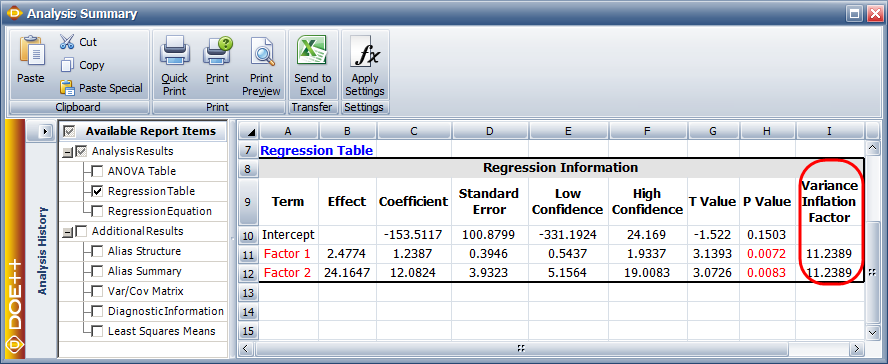

As explained in [[Simple_Linear_Regression_Analysis| Simple Linear Regression Analysis]], in DOE folios, the information related to the <math>t\,\!</math> test is displayed in the Regression Information table as shown in the figure below.

[[Image:doe5_13.png|center|884px|Regression results for the data.|link=]]

In this table, the <math>t\,\!</math> test for <math>{{\beta }_{2}}\,\!</math> is displayed in the row for the term Factor 2 because <math>{{\beta }_{2}}\,\!</math> is the coefficient that represents this factor in the regression model. Columns labeled Standard Error, T Value and P Value represent the standard error, the test statistic for the <math>t\,\!</math> test and the <math>p\,\!</math> value for the <math>t\,\!</math> test, respectively. These values have been calculated for <math>{{\beta }_{2}}\,\!</math> in this example. The Coefficient column represents the estimates of the regression coefficients. These values are calculated as shown in [[Multiple_Linear_Regression_Analysis#Example|this]] example. The Effect column represents values obtained by multiplying the coefficients by a factor of 2. This value is useful in the case of two-level factorial experiments and is explained in [[Two_Level_Factorial_Experiments| Two-Level Factorial Experiments]]. Columns labeled Low Confidence and High Confidence represent the limits of the confidence intervals for the regression coefficients and are explained in [[Multiple_Linear_Regression_Analysis#Confidence_Intervals_in_Multiple_Linear_Regression|Confidence Intervals in Multiple Linear Regression]]. The Variance Inflation Factor column displays values that give a measure of ''multicollinearity''. This is explained in [[Multiple_Linear_Regression_Analysis#Multicollinearity|Multicollinearity]].

===Test on Subsets of Regression Coefficients (Partial ''F'' Test)===

This test can be considered to be the general form of the <math>t\,\!</math> test mentioned in the previous section. This is because the test simultaneously checks the significance of including many (or even one) regression coefficients in the multiple linear regression model. Adding a variable to a model increases the regression sum of squares, <math>S{{S}_{R}}\,\!</math>. The test is based on this increase in the regression sum of squares. The increase in the regression sum of squares is called the ''extra sum of squares''.

Assume that the vector of the regression coefficients, <math>\beta\,\!</math>, for the multiple linear regression model, <math>y=X\beta +\epsilon\,\!</math>, is partitioned into two vectors with the second vector, <math>{{\theta}_{2}}\,\!</math>, containing the last <math>r\,\!</math> regression coefficients, and the first vector, <math>{{\theta}_{1}}\,\!</math>, containing the first (<math>k+1-r\,\!</math>) coefficients as follows:

::<math>\beta =\left[ \begin{matrix}
{{\theta}_{1}} \\
{{\theta}_{2}} \\
\end{matrix} \right]\,\!</math>

:with:

::<math>{{\theta}_{1}}=[{{\beta }_{0}},{{\beta }_{1}}...{{\beta }_{k-r}}{]}'\text{ and }{{\theta}_{2}}=[{{\beta }_{k-r+1}},{{\beta }_{k-r+2}}...{{\beta }_{k}}{]}'\text{ }\,\!</math>

The hypothesis statements to test the significance of adding the regression coefficients in <math>{{\theta}_{2}}\,\!</math> to a model containing the regression coefficients in <math>{{\theta}_{1}}\,\!</math> may be written as:

::<math>\begin{align}
& {{H}_{0}}: & {{\theta}_{2}}=0 \\
& {{H}_{1}}: & {{\theta}_{2}}\ne 0
\end{align}\,\!</math>

The test statistic for this test follows the <math>F\,\!</math> distribution and can be calculated as follows:

::<math>{{F}_{0}}=\frac{S{{S}_{R}}({{\theta}_{2}}|{{\theta}_{1}})/r}{M{{S}_{E}}}\,\!</math>

where <math>S{{S}_{R}}({{\theta}_{2}}|{{\theta}_{1}})\,\!</math> is the increase in the regression sum of squares when the variables corresponding to the coefficients in <math>{{\theta}_{2}}\,\!</math> are added to a model already containing <math>{{\theta}_{1}}\,\!</math>, and <math>M{{S}_{E}}\,\!</math> is obtained from the equation given in [[Simple_Linear_Regression_Analysis#Mean_Squares|Simple Linear Regression Analysis]]. The value of the extra sum of squares is obtained as explained in the next section.

The null hypothesis, <math>{{H}_{0}}\,\!</math>, is rejected if <math>{{F}_{0}}>{{f}_{\alpha ,r,n-(k+1)}}\,\!</math>. Rejection of <math>{{H}_{0}}\,\!</math> leads to the conclusion that at least one of the variables <math>{{x}_{k-r+1}}\,\!</math>, <math>{{x}_{k-r+2}}\,\!</math>... <math>{{x}_{k}}\,\!</math> contributes significantly to the regression model. In a DOE folio, the results from the partial <math>F\,\!</math> test are displayed in the ANOVA table.

[[Image:doe5_14.png|center|650px|ANOVA Table for Extra Sum of Squares in Weibull++.]]

===Types of Extra Sum of Squares===

The extra sum of squares can be calculated using either the partial (or adjusted) sum of squares or the sequential sum of squares. The type of extra sum of squares used affects the calculation of the test statistic for the partial <math>F\,\!</math> test described above. In DOE folios, selection for the type of extra sum of squares is available as shown in the figure below. The partial sum of squares is used as the default setting. The reason for this is explained in the following section on the partial sum of squares.

====Partial Sum of Squares====

The partial sum of squares for a term is the extra sum of squares when all terms, except the term under consideration, are included in the model. For example, consider the model:

::<math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+{{\beta }_{2}}{{x}_{2}}+{{\beta }_{12}}{{x}_{1}}{{x}_{2}}+\epsilon\,\!</math>

The regression sum of squares for this model is denoted by <math>S{{S}_{R}}({{\beta }_{0}},{{\beta }_{1}},{{\beta }_{2}},{{\beta }_{12}})\,\!</math>. Assume that we need to know the partial sum of squares for <math>{{\beta }_{2}}\,\!</math>. The partial sum of squares for <math>{{\beta }_{2}}\,\!</math> is the increase in the regression sum of squares when <math>{{\beta }_{2}}\,\!</math> is added to the model. This increase is the difference between the regression sum of squares for the full model given above and that for the model that includes all terms except <math>{{\beta }_{2}}\,\!</math>. These terms are <math>{{\beta }_{0}}\,\!</math>, <math>{{\beta }_{1}}\,\!</math> and <math>{{\beta }_{12}}\,\!</math>. The model that contains these terms is:

::<math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+{{\beta }_{12}}{{x}_{1}}{{x}_{2}}+\epsilon\,\!</math>

The regression sum of squares for this model is denoted by <math>S{{S}_{R}}({{\beta }_{0}},{{\beta }_{1}},{{\beta }_{12}})\,\!</math>. The partial sum of squares for <math>{{\beta }_{2}}\,\!</math> can be represented as <math>S{{S}_{R}}({{\beta }_{2}}|{{\beta }_{0}},{{\beta }_{1}},{{\beta }_{12}})\,\!</math> and is calculated as follows:

::<math>\begin{align}
S{{S}_{R}}({{\beta }_{2}}|{{\beta }_{0}},{{\beta }_{1}},{{\beta }_{12}})& = & S{{S}_{R}}({{\beta }_{0}},{{\beta }_{1}},{{\beta }_{2}},{{\beta }_{12}})-S{{S}_{R}}({{\beta }_{0}},{{\beta }_{1}},{{\beta }_{12}})
\end{align}\,\!</math>

For the present case, <math>{{\theta}_{2}}=[{{\beta }_{2}}{]}'\,\!</math> and <math>{{\theta}_{1}}=[{{\beta }_{0}},{{\beta }_{1}},{{\beta }_{12}}{]}'\,\!</math>. It can be noted that for the partial sum of squares, <math>{{\theta}_{1}}\,\!</math> contains all coefficients other than the coefficient being tested.

A Weibull++ DOE folio has the partial sum of squares as the default selection. This is because the <math>t\,\!</math> test is a partial test, i.e., the <math>t\,\!</math> test on an individual coefficient is carried out assuming that all the remaining coefficients are included in the model (similar to the way the partial sum of squares is calculated). The results from the <math>t\,\!</math> test are displayed in the Regression Information table. The results from the partial <math>F\,\!</math> test are displayed in the ANOVA table. To keep the results in the two tables consistent with each other, the partial sum of squares is used as the default selection for the results displayed in the ANOVA table.

The partial sums of squares for all terms of a model may not add up to the regression sum of squares for the full model when the regression coefficients are correlated. If it is preferred that the extra sums of squares for all terms in the model always add up to the regression sum of squares for the full model, then the sequential sum of squares should be used.
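The non-additivity of the partial sums of squares can be seen numerically. The following Python sketch is an illustration only: the data set is hypothetical (it is not the data used in this chapter), the model is the interaction model <math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+{{\beta }_{2}}{{x}_{2}}+{{\beta }_{12}}{{x}_{1}}{{x}_{2}}+\epsilon\,\!</math> used above, and each regression sum of squares is computed as <math>{{y}^{\prime }}\left[ H-(\frac{1}{n})J \right]y\,\!</math> by solving the normal equations with a small hand-written Gaussian elimination instead of a linear-algebra library. The helper names <code>solve</code> and <code>ss_regression</code> are hypothetical.

```python
# Hypothetical data for illustration only (not the data set used in this chapter).
x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0, 5.0, 8.0, 9.0]
y = [3.1, 3.9, 7.2, 7.8, 11.5, 11.9, 15.8, 18.1]
x12 = [a * b for a, b in zip(x1, x2)]


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    size = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]   # augmented matrix
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, size):
            factor = m[r][col] / m[col][col]
            for c in range(col, size + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * size
    for r in range(size - 1, -1, -1):
        x[r] = (m[r][size] - sum(m[r][c] * x[c] for c in range(r + 1, size))) / m[r][r]
    return x


def ss_regression(columns, y):
    """SS_R = y'[H - (1/n)J]y for a model with an intercept plus the given columns."""
    n = len(y)
    rows = [[1.0] + [col[i] for col in columns] for i in range(n)]   # design matrix X
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    beta = solve(xtx, xty)                                        # (X'X)^-1 X'y
    y_hat = [sum(b * v for b, v in zip(beta, r)) for r in rows]   # H y
    # y'Hy equals y . y_hat, and y'(J/n)y equals (sum of y)^2 / n
    return sum(yi * fi for yi, fi in zip(y, y_hat)) - sum(y) ** 2 / n


terms = {"x1": x1, "x2": x2, "x1x2": x12}
ss_full = ss_regression(list(terms.values()), y)

# Partial SS for a term: full-model SS_R minus SS_R of the model without that term.
partial = {
    name: ss_full - ss_regression([col for other, col in terms.items() if other != name], y)
    for name in terms
}

print(f"SS_R(full) = {ss_full:.3f}")
for name, value in partial.items():
    print(f"partial SS({name}) = {value:.3f}")
print(f"sum of partial SS = {sum(partial.values()):.3f}")
```

Because <code>x1</code> and <code>x2</code> are strongly correlated in this hypothetical data, the printed sum of the partial sums of squares need not match SS_R(full). In practice a library routine such as NumPy's <code>numpy.linalg.lstsq</code> would normally replace the hand-written solver.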

=====Example=====

This example illustrates the <math>F\,\!</math> test using the partial sum of squares. The test is conducted for the coefficient <math>{{\beta }_{1}}\,\!</math> corresponding to the predictor variable <math>{{x}_{1}}\,\!</math> for the data. The regression model used for this data set in the [[Multiple_Linear_Regression_Analysis#Example| example]] is:

::<math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+{{\beta }_{2}}{{x}_{2}}+\epsilon\,\!</math>

The null hypothesis to test the significance of <math>{{\beta }_{1}}\,\!</math> is:

::<math>{{H}_{0}}: {{\beta }_{1}}=0\,\!</math>


The statistic to test this hypothesis is:

::<math>{{F}_{0}}=\frac{S{{S}_{R}}({{\beta }_{1}}|{{\beta }_{2}})/r}{M{{S}_{E}}}\,\!</math>

where <math>S{{S}_{R}}({{\beta }_{1}}|{{\beta }_{2}})\,\!</math> represents the partial sum of squares for <math>{{\beta }_{1}}\,\!</math>, <math>r\,\!</math> represents the number of degrees of freedom for <math>S{{S}_{R}}({{\beta }_{1}}|{{\beta }_{2}})\,\!</math> (which is one because there is just one coefficient, <math>{{\beta }_{1}}\,\!</math>, being tested) and <math>M{{S}_{E}}\,\!</math> is the error mean square and has been calculated in the second [[Multiple_Linear_Regression_Analysis#Example_2|example]] as 30.24.

The partial sum of squares for <math>{{\beta }_{1}}\,\!</math> is the difference between the regression sum of squares for the full model, <math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+{{\beta }_{2}}{{x}_{2}}+\epsilon\,\!</math>, and the regression sum of squares for the model excluding <math>{{\beta }_{1}}\,\!</math>, <math>Y={{\beta }_{0}}+{{\beta }_{2}}{{x}_{2}}+\epsilon\,\!</math>. The regression sum of squares for the full model has been calculated in the second [[Multiple_Linear_Regression_Analysis#Example_2|example]] as 12816.35. Therefore:

::<math>S{{S}_{R}}({{\beta }_{0}},{{\beta }_{1}},{{\beta }_{2}})=12816.35\,\!</math>

The regression sum of squares for the model <math>Y={{\beta }_{0}}+{{\beta }_{2}}{{x}_{2}}+\epsilon\,\!</math> is obtained as shown next. First the design matrix for this model, <math>{{X}_{{{\beta }_{0}},{{\beta }_{2}}}}\,\!</math>, is obtained by dropping the second column in the design matrix of the full model, <math>X\,\!</math> (the full design matrix, <math>X\,\!</math>, was obtained in the [[Multiple_Linear_Regression_Analysis#Example| example]]). The second column of <math>X\,\!</math> corresponds to the coefficient <math>{{\beta }_{1}}\,\!</math> which is no longer in the model. Therefore, the design matrix for the model, <math>Y={{\beta }_{0}}+{{\beta }_{2}}{{x}_{2}}+\epsilon\,\!</math>, is:

::<math>{{X}_{{{\beta }_{0}},{{\beta }_{2}}}}=\left[ \begin{matrix}
. & . \\
1 & 32.9 \\
\end{matrix} \right]\,\!</math>

The hat matrix corresponding to this design matrix is <math>{{H}_{{{\beta }_{0}},{{\beta }_{2}}}}\,\!</math>. It can be calculated using <math>{{H}_{{{\beta }_{0}},{{\beta }_{2}}}}={{X}_{{{\beta }_{0}},{{\beta }_{2}}}}{{(X_{{{\beta }_{0}},{{\beta }_{2}}}^{\prime }{{X}_{{{\beta }_{0}},{{\beta }_{2}}}})}^{-1}}X_{{{\beta }_{0}},{{\beta }_{2}}}^{\prime }\,\!</math>. Once <math>{{H}_{{{\beta }_{0}},{{\beta }_{2}}}}\,\!</math> is known, the regression sum of squares for the model <math>Y={{\beta }_{0}}+{{\beta }_{2}}{{x}_{2}}+\epsilon\,\!</math> can be calculated as:

::<math>\begin{align}
S{{S}_{R}}({{\beta }_{0}},{{\beta }_{2}}) & = & {{y}^{\prime }}\left[ {{H}_{{{\beta }_{0}},{{\beta }_{2}}}}-(\frac{1}{n})J \right]y \\
& = & 12518.32
\end{align}\,\!</math>

Therefore, the partial sum of squares for <math>{{\beta }_{1}}\,\!</math> is:

::<math>\begin{align}
S{{S}_{R}}({{\beta }_{1}}|{{\beta }_{2}})& = & S{{S}_{R}}({{\beta }_{0}},{{\beta }_{1}},{{\beta }_{2}})-S{{S}_{R}}({{\beta }_{0}},{{\beta }_{2}}) \\
& = & 12816.35-12518.32 \\
& = & 298.03
\end{align}\,\!</math>

Knowing the partial sum of squares, the statistic to test the significance of <math>{{\beta }_{1}}\,\!</math> is:

::<math>\begin{align}
{{f}_{0}} &= & \frac{S{{S}_{R}}({{\beta }_{1}}|{{\beta }_{2}})/r}{M{{S}_{E}}} \\
& = & \frac{298.03/1}{30.24} \\
& = & 9.855
\end{align}\,\!</math>

The <math>p\,\!</math> value corresponding to this statistic based on the <math>F\,\!</math> distribution with 1 degree of freedom in the numerator and 14 degrees of freedom in the denominator is:

::<math>\begin{align}
p\text{ }value &= & 1-P(F\le {{f}_{0}}) \\
& = & 1-0.9928 \\
& = & 0.0072
\end{align}\,\!</math>

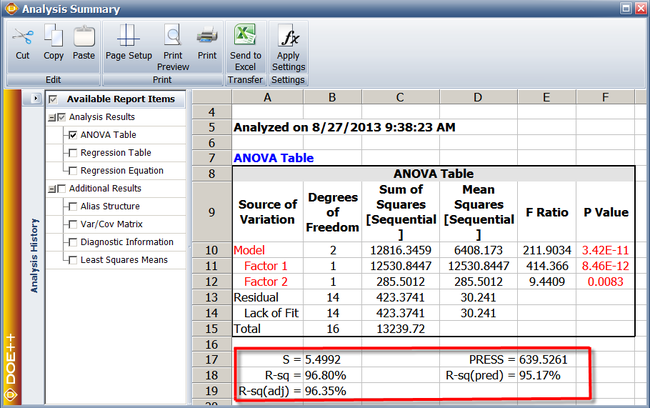

Assuming that the desired significance level is 0.1, since the <math>p\,\!</math> value is less than 0.1, <math>{{H}_{0}}:{{\beta }_{1}}=0\,\!</math> is rejected and it can be concluded that <math>{{\beta }_{1}}\,\!</math> is significant. The test for <math>{{\beta }_{2}}\,\!</math> can be carried out in a similar manner. In the results obtained from the DOE folio, the calculations for this test are displayed in the ANOVA table as shown in the following figure. Note that the conclusion obtained in this example can also be obtained using the <math>t\,\!</math> test as explained in the [[Multiple_Linear_Regression_Analysis#Example_3|example]] in [[Multiple_Linear_Regression_Analysis#Test_on_Individual_Regression_Coefficients_.28t__Test.29|Test on Individual Regression Coefficients (t Test)]]. The ANOVA and Regression Information tables in the DOE folio represent two different ways to test for the significance of the variables included in the multiple linear regression model.
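When a single coefficient is tested (<math>r=1\,\!</math>), the partial <math>F\,\!</math> statistic is the square of the corresponding <math>t\,\!</math> statistic, which is why both tables lead to the same conclusion here. The following arithmetic check is a sketch that uses only numbers quoted in this chapter; small differences in the last digit come from rounding.

```python
# Values quoted in this example.
ss_r_full = 12816.35      # SS_R(beta_0, beta_1, beta_2)
ss_r_reduced = 12518.32   # SS_R(beta_0, beta_2), the model without x1
ms_e = 30.24              # error mean square of the full model
r = 1                     # number of coefficients tested (beta_1 only)
t0_beta1 = 3.1393         # t statistic for beta_1 from the t test example

ss_partial = ss_r_full - ss_r_reduced   # SS_R(beta_1 | beta_2)
f0 = (ss_partial / r) / ms_e            # partial F statistic

# With r = 1 the partial F statistic equals the square of the t statistic.
print(f"SS_R(beta_1|beta_2) = {ss_partial:.2f}, f0 = {f0:.3f}, t0^2 = {t0_beta1 ** 2:.3f}")
```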

====Sequential Sum of Squares====

The sequential sum of squares for a coefficient is the extra sum of squares when coefficients are added to the model in a sequence. For example, consider the model:


::<math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+{{\beta }_{2}}{{x}_{2}}+{{\beta }_{12}}{{x}_{1}}{{x}_{2}}+{{\beta }_{3}}{{x}_{3}}+{{\beta }_{13}}{{x}_{1}}{{x}_{3}}+{{\beta }_{23}}{{x}_{2}}{{x}_{3}}+{{\beta }_{123}}{{x}_{1}}{{x}_{2}}{{x}_{3}}+\epsilon\,\!</math>

The sequential sum of squares for <math>{{\beta }_{13}}\,\!</math> is the increase in the regression sum of squares when <math>{{\beta }_{13}}\,\!</math> is added to the model, following the order of the terms in the equation given above. Therefore this extra sum of squares can be obtained by taking the difference between the regression sum of squares for the model after <math>{{\beta }_{13}}\,\!</math> was added and the regression sum of squares for the model before <math>{{\beta }_{13}}\,\!</math> was added to the model. The model after <math>{{\beta }_{13}}\,\!</math> is added is as follows:

::<math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+{{\beta }_{2}}{{x}_{2}}+{{\beta }_{12}}{{x}_{1}}{{x}_{2}}+{{\beta }_{3}}{{x}_{3}}+{{\beta }_{13}}{{x}_{1}}{{x}_{3}}+\epsilon\,\!</math>

This is because, to maintain the sequence, all coefficients preceding <math>{{\beta }_{13}}\,\!</math> must be included in the model. These are the coefficients <math>{{\beta }_{0}}\,\!</math>, <math>{{\beta }_{1}}\,\!</math>, <math>{{\beta }_{2}}\,\!</math>, <math>{{\beta }_{12}}\,\!</math> and <math>{{\beta }_{3}}\,\!</math>.

Similarly, the model before <math>{{\beta }_{13}}\,\!</math> is added must contain all coefficients of the model just given except <math>{{\beta }_{13}}\,\!</math> (that is, only the coefficients that precede <math>{{\beta }_{13}}\,\!</math>). This model is:

::<math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+{{\beta }_{2}}{{x}_{2}}+{{\beta }_{12}}{{x}_{1}}{{x}_{2}}+{{\beta }_{3}}{{x}_{3}}+\epsilon\,\!</math>

The sequential sum of squares for <math>{{\beta }_{13}}\,\!</math> can be calculated as follows:

::<math>\begin{align}
S{{S}_{R}}({{\beta }_{13}}|{{\beta }_{0}},{{\beta }_{1}},{{\beta }_{2}},{{\beta }_{12}},{{\beta }_{3}}) & = & S{{S}_{R}}({{\beta }_{0}},{{\beta }_{1}},{{\beta }_{2}},{{\beta }_{12}},{{\beta }_{3}},{{\beta }_{13}})- S{{S}_{R}}({{\beta }_{0}},{{\beta }_{1}},{{\beta }_{2}},{{\beta }_{12}},{{\beta }_{3}})
\end{align}\,\!</math>

For the present case, <math>{{\theta}_{2}}=[{{\beta }_{13}}{]}'\,\!</math> and <math>{{\theta}_{1}}=[{{\beta }_{0}},{{\beta }_{1}},{{\beta }_{2}},{{\beta }_{12}},{{\beta }_{3}}{]}'\,\!</math>. It can be noted that for the sequential sum of squares, <math>{{\theta}_{1}}\,\!</math> contains all coefficients preceding the coefficient being tested.

The sequential sums of squares for all terms will add up to the regression sum of squares for the full model, but the sequential sums of squares are order dependent: changing the order in which terms enter the model changes the individual values.
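The additivity and order dependence of the sequential sum of squares can be illustrated with a short sketch. It uses hypothetical data (not the chapter's data) and a modified Gram-Schmidt orthogonalization of the design matrix columns, taken in the order in which the terms enter the model: after the intercept, the sequential sum of squares of each term is the squared projection of <math>y\,\!</math> onto its orthonormalized column. The function name <code>sequential_ss</code> is hypothetical.

```python
import math

# Hypothetical data for illustration only (not the data set used in this chapter).
x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0, 5.0, 8.0, 9.0]
y = [3.1, 3.9, 7.2, 7.8, 11.5, 11.9, 15.8, 18.1]
x12 = [a * b for a, b in zip(x1, x2)]


def sequential_ss(columns, y):
    """Sequential SS of each column, entered in the given order after the intercept.

    The design matrix columns are orthonormalized by modified Gram-Schmidt; the
    sequential SS of the j-th term is then (q_j . y)^2, the increase in SS_R when
    that term is added to the model containing the terms before it.
    """
    n = len(y)
    basis = [[1.0 / math.sqrt(n)] * n]      # orthonormalized intercept column
    result = []
    for col in columns:
        v = list(col)
        for q in basis:                     # remove the part explained by earlier terms
            dot = sum(a * b for a, b in zip(q, v))
            v = [a - dot * b for a, b in zip(v, q)]
        norm = math.sqrt(sum(a * a for a in v))
        q_new = [a / norm for a in v]
        basis.append(q_new)
        result.append(sum(a * b for a, b in zip(q_new, y)) ** 2)
    return result


order_a = sequential_ss([x1, x2, x12], y)   # terms entered as x1, x2, x1x2
order_b = sequential_ss([x2, x1, x12], y)   # terms entered as x2, x1, x1x2

print("order x1, x2, x1x2:", [round(v, 3) for v in order_a], "total:", round(sum(order_a), 3))
print("order x2, x1, x1x2:", [round(v, 3) for v in order_b], "total:", round(sum(order_b), 3))
```

Both orderings give the same total, the full-model regression sum of squares, while the individual values for <code>x1</code> and <code>x2</code> typically change with the order. The term entered last (<code>x1x2</code> here) has the same sequential sum of squares in both orderings, and it equals that term's partial sum of squares because all other terms precede it.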

=====Example=====

This example illustrates the partial <math>F\,\!</math> test using the sequential sum of squares. The test is conducted for the coefficient <math>{{\beta }_{1}}\,\!</math> corresponding to the predictor variable <math>{{x}_{1}}\,\!</math> for the data. The regression model used for this data set in the [[Multiple_Linear_Regression_Analysis#Example|example]] is:

::<math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+{{\beta }_{2}}{{x}_{2}}+\epsilon \,\!</math>

The null hypothesis to test the significance of <math>{{\beta }_{1}}\,\!</math> is:

::<math>{{H}_{0}}:{{\beta }_{1}}=0\,\!</math>

The statistic to test this hypothesis is:


::<math>{{F}_{0}}=\frac{S{{S}_{R}}({{\beta }_{1}}|{{\beta }_{0}})/r}{M{{S}_{E}}}\,\!</math>

where <math>S{{S}_{R}}({{\beta }_{1}}|{{\beta }_{0}})\,\!</math> represents the sequential sum of squares for <math>{{\beta }_{1}}\,\!</math>, <math>r\,\!</math> represents the number of degrees of freedom for <math>S{{S}_{R}}({{\beta }_{1}}|{{\beta }_{0}})\,\!</math> (which is one because there is just one coefficient, <math>{{\beta }_{1}}\,\!</math>, being tested) and <math>M{{S}_{E}}\,\!</math> is the error mean square and has been calculated in the second [[Multiple_Linear_Regression_Analysis#Example_2|example]] as 30.24.

The sequential sum of squares for <math>{{\beta }_{1}}\,\!</math> is the difference between the regression sum of squares for the model after adding <math>{{\beta }_{1}}\,\!</math>, <math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+\epsilon\,\!</math>, and the regression sum of squares for the model before adding <math>{{\beta }_{1}}\,\!</math>, <math>Y={{\beta }_{0}}+\epsilon\,\!</math>.

The regression sum of squares for the model <math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+\epsilon\,\!</math> is obtained as shown next. First the design matrix for this model, <math>{{X}_{{{\beta }_{0}},{{\beta }_{1}}}}\,\!</math>, is obtained by dropping the third column in the design matrix for the full model, <math>X\,\!</math> (the full design matrix, <math>X\,\!</math>, was obtained in the [[Multiple_Linear_Regression_Analysis#Example|example]]). The third column of <math>X\,\!</math> corresponds to coefficient <math>{{\beta }_{2}}\,\!</math> which is no longer used in the present model. Therefore, the design matrix for the model, <math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+\epsilon\,\!</math>, is:

::<math>{{X}_{{{\beta }_{0}},{{\beta }_{1}}}}=\left[ \begin{matrix}
. & . \\
1 & 77.8 \\
\end{matrix} \right]\,\!</math>

The hat matrix corresponding to this design matrix is <math>{{H}_{{{\beta }_{0}},{{\beta }_{1}}}}\,\!</math>. It can be calculated using <math>{{H}_{{{\beta }_{0}},{{\beta }_{1}}}}={{X}_{{{\beta }_{0}},{{\beta }_{1}}}}{{(X_{{{\beta }_{0}},{{\beta }_{1}}}^{\prime }{{X}_{{{\beta }_{0}},{{\beta }_{1}}}})}^{-1}}X_{{{\beta }_{0}},{{\beta }_{1}}}^{\prime }\,\!</math>. Once <math>{{H}_{{{\beta }_{0}},{{\beta }_{1}}}}\,\!</math> is known, the regression sum of squares for the model <math>Y={{\beta }_{0}}+{{\beta }_{1}}{{x}_{1}}+\epsilon\,\!</math> can be calculated as:

::<math>\begin{align}
S{{S}_{R}}({{\beta }_{0}},{{\beta }_{1}})& = & {{y}^{\prime }}\left[ {{H}_{{{\beta }_{0}},{{\beta }_{1}}}}-(\frac{1}{n})J \right]y \\
& = & 12530.85
\end{align}\,\!</math>

[[Image:doe5_16.png|center|650px|Sequential sum of squares for the data.]]

The regression sum of squares for the model <math>Y={{\beta }_{0}}+\epsilon\,\!</math> is equal to zero since this model does not contain any variables. Therefore:

::<math>S{{S}_{R}}({{\beta }_{0}})=0\,\!</math>

The sequential sum of squares for <math>{{\beta }_{1}}\,\!</math> is:

::<math>\begin{align}
S{{S}_{R}}({{\beta }_{1}}|{{\beta }_{0}}) &= & S{{S}_{R}}({{\beta }_{0}},{{\beta }_{1}})-S{{S}_{R}}({{\beta }_{0}}) \\
& = & 12530.85-0 \\
& = & 12530.85
\end{align}\,\!</math>

Knowing the sequential sum of squares, the statistic to test the significance of <math>{{\beta }_{1}}\,\!</math> is:

::<math>\begin{align}
{{f}_{0}} &= & \frac{S{{S}_{R}}({{\beta }_{1}}|{{\beta }_{0}})/r}{M{{S}_{E}}} \\
& = & \frac{12530.85/1}{30.24} \\
& = & 414.366
\end{align}\,\!</math>

The <math>p\,\!</math> value corresponding to this statistic based on the <math>F\,\!</math> distribution with 1 degree of freedom in the numerator and 14 degrees of freedom in the denominator is:

::<math>\begin{align}
p\text{ }value &= & 1-P(F\le {{f}_{0}}) \\
& \approx & 8.46\times {{10}^{-12}}
\end{align}\,\!</math>


Assuming that the desired significance level is 0.1, since the <math>p\,\!</math> value < 0.1, <math>{{H}_{0}}:{{\beta }_{1}}=0\,\!</math> is rejected and it can be concluded that <math>{{\beta }_{1}}\,\!</math> is significant. The test for <math>{{\beta }_{2}}\,\!</math> can be carried out in a similar manner. These results are shown in the sequential sum of squares figure above.

==Confidence Intervals in Multiple Linear Regression==

Confidence intervals for multiple linear regression models are calculated in a similar way to those for simple linear regression models, explained in [[Simple_Linear_Regression_Analysis| Simple Linear Regression Analysis]].

===Confidence Interval on Regression Coefficients===

A 100 (<math>1-\alpha\,\!</math>) percent confidence interval on the regression coefficient, <math>{{\beta }_{j}}\,\!</math>, is obtained as follows:

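::<math>{{\hat{\beta }}_{j}}\pm {{t}_{\alpha /2,n-(k+1)}}\sqrt{{{C}_{jj}}}\,\!</math>

where <math>{{C}_{jj}}\,\!</math> is the <math>j\,\!</math> th diagonal element of the variance-covariance matrix of the estimated coefficients, <math>C={{\hat{\sigma }}^{2}}{{({{X}^{\prime }}X)}^{-1}}\,\!</math>.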
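A minimal sketch of this interval follows. <code>coefficient_ci</code> is a hypothetical helper. The critical value <math>{{t}_{\alpha /2,n-(k+1)}}\,\!</math> is passed in rather than computed, for example after reading it from a t table. <code>c_jj</code> is taken to be the <math>j\,\!</math> th diagonal element of <math>{{\hat{\sigma }}^{2}}{{({{X}^{\prime }}X)}^{-1}}\,\!</math>, because the formula has no separate <math>{{\hat{\sigma }}^{2}}\,\!</math> factor. The input values are made up for illustration.

```python
import math


def coefficient_ci(beta_hat_j, c_jj, t_crit):
    """100(1 - alpha)% interval: beta_hat_j +/- t_crit * sqrt(C_jj).

    c_jj: j-th diagonal element of sigma2_hat * (X'X)^-1, so sqrt(c_jj) is the
    standard error of beta_hat_j. t_crit: t_{alpha/2, n-(k+1)}, supplied by the caller.
    """
    half_width = t_crit * math.sqrt(c_jj)
    return beta_hat_j - half_width, beta_hat_j + half_width


# Hypothetical values: beta_hat_j = 2.0, C_jj = 0.25, t critical value = 2.0.
print(coefficient_ci(2.0, 0.25, 2.0))  # (1.0, 3.0)
```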

The confidence intervals on the regression coefficients are displayed in the Regression Information table under the Low Confidence and High Confidence columns, as shown in the following figure.

[[Image:doe5_17.png|center|710px|Confidence interval for the fitted value corresponding to the fifth observation.|link=]]

===Confidence Interval on Fitted Values, <math>{{\hat{y}}_{i}}\,\!</math>===

A 100 (<math>1-\alpha\,\!</math>) percent confidence interval on any fitted value, <math>{{\hat{y}}_{i}}\,\!</math>, is given by:

::<math>{{\hat{y}}_{i}}\pm {{t}_{\alpha /2,n-(k+1)}}\sqrt{{{{\hat{\sigma }}}^{2}}x_{i}^{\prime }{{({{X}^{\prime }}X)}^{-1}}{{x}_{i}}}\,\!</math>

where:

::<math>{{x}_{i}}=\left[ \begin{matrix}
   1  \\
   {{x}_{i1}}  \\
   .  \\
   .  \\
   .  \\
   {{x}_{ik}}  \\
\end{matrix} \right]\,\!</math>
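The interval needs only the quadratic form <math>x_{i}^{\prime }{{({{X}^{\prime }}X)}^{-1}}{{x}_{i}}\,\!</math>. Below is a minimal pure-Python sketch, assuming <math>{{({{X}^{\prime }}X)}^{-1}}\,\!</math> and <math>{{\hat{\sigma }}^{2}}=M{{S}_{E}}\,\!</math> are already available from the fit and that the t critical value is supplied by the caller. The function names and inputs are hypothetical.

```python
import math


def quad_form(v, m):
    """Return v' M v for a vector v and a square matrix M given as nested lists."""
    n = len(v)
    return sum(v[i] * m[i][j] * v[j] for i in range(n) for j in range(n))


def fitted_value_ci(y_hat_i, sigma2_hat, xtx_inv, x_i, t_crit):
    """Interval on the fitted value at x_i = [1, x_i1, ..., x_ik].

    xtx_inv is (X'X)^-1 from the fitted model, sigma2_hat is MS_E and
    t_crit is t_{alpha/2, n-(k+1)}.
    """
    half_width = t_crit * math.sqrt(sigma2_hat * quad_form(x_i, xtx_inv))
    return y_hat_i - half_width, y_hat_i + half_width


# Hypothetical inputs (not the chapter's data): (X'X)^-1 = identity, x_i = [1, 0].
print(fitted_value_ci(10.0, 4.0, [[1.0, 0.0], [0.0, 1.0]], [1.0, 0.0], 2.0))  # (6.0, 14.0)
```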

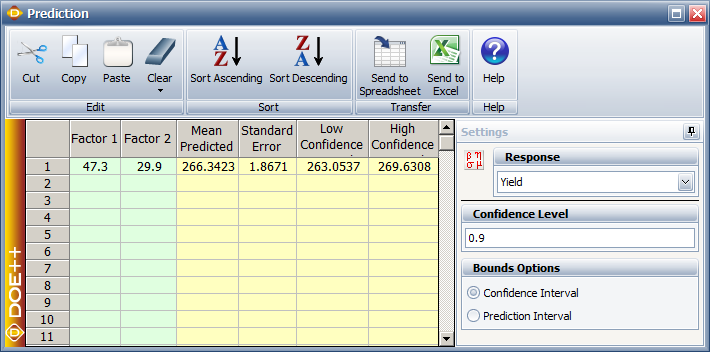

In the above [[Multiple_Linear_Regression_Analysis#Example| example]], the fitted value corresponding to the fifth observation was calculated as <math>{{\hat{y}}_{5}}=266.3\,\!</math>. The 90% confidence interval on this value can be obtained as shown in the figure above. The values of 47.3 and 29.9 used in the figure are the values of the predictor variables corresponding to the fifth observation in the [[Multiple_Linear_Regression_Analysis#Example|table]].


===Confidence Interval on New Observations===

As explained in [[Simple_Linear_Regression_Analysis| Simple Linear Regression Analysis]], the confidence interval on a new observation is also referred to as the prediction interval. The prediction interval takes into account both the error from the fitted model and the error associated with future observations. A 100 (<math>1-\alpha\,\!</math>) percent confidence interval on a new observation, <math>{{\hat{y}}_{p}}\,\!</math>, is obtained as follows:

::<math>{{\hat{y}}_{p}}\pm {{t}_{\alpha /2,n-(k+1)}}\sqrt{{{{\hat{\sigma }}}^{2}}(1+x_{p}^{\prime }{{({{X}^{\prime }}X)}^{-1}}{{x}_{p}})}\,\!</math>

where:

::<math>{{x}_{p}}=\left[ \begin{matrix}
   1  \\
   {{x}_{p1}}  \\
   .  \\
   .  \\
   .  \\
   {{x}_{pk}}  \\
\end{matrix} \right]\,\!</math>

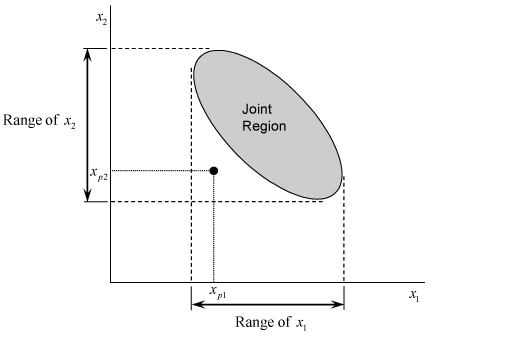

<math>{{x}_{p1}}\,\!</math>,..., <math>{{x}_{pk}}\,\!</math> are the levels of the predictor variables at which the new observation, <math>{{\hat{y}}_{p}}\,\!</math>, needs to be obtained.
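Compared with the interval on a fitted value, the only change is the added 1 inside the square root. That term accounts for the variance of the new observation itself. The following is a minimal pure-Python sketch under the same assumptions as before: <math>{{({{X}^{\prime }}X)}^{-1}}\,\!</math>, <math>M{{S}_{E}}\,\!</math> and the t critical value are supplied by the caller, and the function names and numbers are hypothetical.

```python
import math


def quad_form(v, m):
    """Return v' M v for a vector v and a square matrix M given as nested lists."""
    n = len(v)
    return sum(v[i] * m[i][j] * v[j] for i in range(n) for j in range(n))


def prediction_interval(y_hat_p, sigma2_hat, xtx_inv, x_p, t_crit):
    """Interval on a new observation at x_p = [1, x_p1, ..., x_pk].

    The 1.0 added to x_p' (X'X)^-1 x_p accounts for the error of the new
    observation on top of the uncertainty in the fitted mean.
    """
    half_width = t_crit * math.sqrt(sigma2_hat * (1.0 + quad_form(x_p, xtx_inv)))
    return y_hat_p - half_width, y_hat_p + half_width


# Hypothetical inputs (not the chapter's data).
low, high = prediction_interval(10.0, 4.0, [[1.0, 0.0], [0.0, 1.0]], [1.0, 0.0], 2.0)
# Half-width is 2 * sqrt(4 * (1 + 1)), about 5.657, versus 4.0 for the
# interval on the fitted value at the same point with the same inputs.
```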

In multiple linear regression, prediction intervals should only be obtained at levels of the predictor variables where the regression model applies. This requirement is easy to overlook. Each level lying within the range of its own predictor variable does not necessarily mean that the new observation lies in the region to which the model applies. For example, consider the next figure, where the shaded area shows the region to which a two-variable regression model applies. The point corresponding to the <math>p\,\!</math> th level of the first predictor variable, <math>{{x}_{1}}\,\!</math>, and the <math>p\,\!</math> th level of the second predictor variable, <math>{{x}_{2}}\,\!</math>, does not lie in the shaded area, although both of these levels are within the ranges of the first and second predictor variables, respectively. The regression model therefore does not apply at this point.

[[Image:doe5.18.png|center|519px|Predicted values and region of model application in multiple linear regression.|link=]]
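One common way to screen for this situation is a leverage-based heuristic from the regression literature. It is not a procedure described in this chapter or necessarily used by the folio. The heuristic compares the leverage of the new point, <math>{{h}_{00}}=x_{p}^{\prime }{{({{X}^{\prime }}X)}^{-1}}{{x}_{p}}\,\!</math>, with the largest leverage, <math>{{h}_{max}}\,\!</math>, among the observed points. The ellipsoid <math>{{x}^{\prime }}{{({{X}^{\prime }}X)}^{-1}}x\le {{h}_{max}}\,\!</math> contains every observed point. A new point with <math>{{h}_{00}}>{{h}_{max}}\,\!</math> lies outside it and is likely an extrapolation, even when each level is within its own range. The sketch below is pure Python; the function names and the design are hypothetical.

```python
def transpose(m):
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    cols = transpose(b)
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in a]


def inverse(m):
    """Invert a square, nonsingular matrix by Gauss-Jordan elimination."""
    n = len(m)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[piv][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def leverage(x0, xtx_inv):
    """h_00 = x0' (X'X)^-1 x0 for x0 = [1, x01, ..., x0k]."""
    n = len(x0)
    return sum(x0[i] * xtx_inv[i][j] * x0[j] for i in range(n) for j in range(n))


def is_hidden_extrapolation(X, x_new):
    """True if x_new has a higher leverage than every row of the design matrix X."""
    xtx_inv = inverse(matmul(transpose(X), X))
    h_max = max(leverage(row, xtx_inv) for row in X)
    return leverage(x_new, xtx_inv) > h_max


# Hypothetical design: x1 and x2 are strongly correlated (data lie near x1 = x2).
X = [[1.0, 0.0, 0.0], [1.0, 1.0, 1.0], [1.0, 2.0, 2.0],
     [1.0, 3.0, 3.0], [1.0, 1.0, 2.0], [1.0, 2.0, 1.0]]
print(is_hidden_extrapolation(X, [1.0, 0.0, 3.0]))  # True: both levels in range, point off the data
print(is_hidden_extrapolation(X, [1.0, 1.5, 1.5]))  # False: this is the centroid of the data
```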

==Measures of Model Adequacy==

As in the case of simple linear regression, analysis of a fitted multiple linear regression model is important before inferences based on the model are undertaken. This section presents some techniques that can be used to check the appropriateness of the multiple linear regression model.

===Coefficient of Multiple Determination, ''R''<sup>2</sup>===

The coefficient of multiple determination is similar to the coefficient of determination used in the case of simple linear regression. It is defined as:

::<math>\begin{align}
{{R}^{2}} & = & \frac{S{{S}_{R}}}{S{{S}_{T}}} \\
& = & 1-\frac{S{{S}_{E}}}{S{{S}_{T}}}
\end{align}\,\!</math>

<math>{{R}^{2}}\,\!</math> indicates the proportion of the total variability in the response that is explained by the regression model. The positive square root of <math>{{R}^{2}}\,\!</math> is called the multiple correlation coefficient and measures the linear association between <math>Y\,\!</math> and the predictor variables, <math>{{x}_{1}}\,\!</math>, <math>{{x}_{2}}\,\!</math>, ..., <math>{{x}_{k}}\,\!</math>.

The value of <math>{{R}^{2}}\,\!</math> never decreases as more terms are added to the model, even if a new term does not contribute significantly to the model. Therefore, an increase in the value of <math>{{R}^{2}}\,\!</math> alone cannot be taken as evidence that the new model is superior to the older model. A better statistic to use is the adjusted <math>{{R}^{2}}\,\!</math> statistic, defined as follows:

::<math>\begin{align}
R_{adj}^{2} &= & 1-\frac{M{{S}_{E}}}{M{{S}_{T}}} \\
& = & 1-\frac{S{{S}_{E}}/(n-(k+1))}{S{{S}_{T}}/(n-1)} \\
& = & 1-(\frac{n-1}{n-(k+1)})(1-{{R}^{2}})
\end{align}\,\!</math>

Because <math>M{{S}_{T}}\,\!</math> does not depend on the model, the adjusted <math>{{R}^{2}}\,\!</math> increases only when an added term reduces the error mean square, <math>M{{S}_{E}}\,\!</math>. Addition of unimportant terms may therefore lead to a decrease in the value of <math>R_{adj}^{2}\,\!</math>.
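The contrast between the two statistics can be seen with a few hypothetical sums of squares. In the minimal sketch below, function names and numbers are illustrative only. A third predictor lowers <math>S{{S}_{E}}\,\!</math> only slightly, so <math>{{R}^{2}}\,\!</math> rises while <math>R_{adj}^{2}\,\!</math> falls because one error degree of freedom is lost.

```python
def r_squared(ss_e, ss_t):
    """R^2 = 1 - SS_E / SS_T."""
    return 1.0 - ss_e / ss_t


def adjusted_r_squared(ss_e, ss_t, n, k):
    """R^2_adj = 1 - MS_E / MS_T for n observations and k predictor variables."""
    ms_e = ss_e / (n - (k + 1))
    ms_t = ss_t / (n - 1)
    return 1.0 - ms_e / ms_t


# Hypothetical sums of squares for n = 11 observations.
before = (r_squared(20.0, 100.0), adjusted_r_squared(20.0, 100.0, 11, 2))
# A third predictor that lowers SS_E only slightly (20.0 -> 19.9):
after = (r_squared(19.9, 100.0), adjusted_r_squared(19.9, 100.0, 11, 3))
print(before)  # (0.8, 0.75)
print(after)   # R^2 rises to about 0.801; R^2_adj falls to about 0.716
```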

In a DOE folio, <math>{{R}^{2}}\,\!</math> and <math>R_{adj}^{2}\,\!</math> values are displayed as R-sq and R-sq(adj), respectively. Other values displayed along with these values are S, PRESS and R-sq(pred). As explained in [[Simple_Linear_Regression_Analysis| Simple Linear Regression Analysis]], the value of S is the square root of the error mean square, <math>M{{S}_{E}}\,\!</math>, and represents the "standard error of the model."

PRESS is an abbreviation for prediction error sum of squares. It is the error sum of squares calculated using the PRESS residuals in place of the residuals, <math>{{e}_{i}}\,\!</math>, in the equation for the error sum of squares. The PRESS residual, <math>{{e}_{(i)}}\,\!</math>, for a particular observation, <math>{{y}_{i}}\,\!</math>, is obtained by fitting the regression model to the remaining observations. This new model is then used to predict the value, <math>{{\hat{y}}_{p}}\,\!</math>, at the predictor levels of the observation in question, <math>{{y}_{i}}\,\!</math>. The difference between <math>{{y}_{i}}\,\!</math> and <math>{{\hat{y}}_{p}}\,\!</math> gives <math>{{e}_{(i)}}\,\!</math>. The PRESS residual, <math>{{e}_{(i)}}\,\!</math>, can also be obtained without refitting the model by using <math>{{h}_{ii}}\,\!</math>, the <math>i\,\!</math> th diagonal element of the hat matrix, <math>H\,\!</math>, as follows:

::<math>{{e}_{(i)}}=\frac{{{e}_{i}}}{1-{{h}_{ii}}}\,\!</math>

R-sq(pred), also referred to as prediction <math>{{R}^{2}}\,\!</math>, is obtained using PRESS as shown next:

::<math>R_{pred}^{2}=1-\frac{PRESS}{S{{S}_{T}}}\,\!</math>
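The shortcut <math>{{e}_{(i)}}={{e}_{i}}/(1-{{h}_{ii}})\,\!</math> can be checked against an explicit leave-one-out refit. The minimal pure-Python sketch below does this for a hypothetical one-predictor data set. For one predictor, <math>{{h}_{ii}}=1/n+{{({{x}_{i}}-\bar{x})}^{2}}/{{S}_{xx}}\,\!</math>. The helper names and data are illustrative only. A model with more predictors would take <math>{{h}_{ii}}\,\!</math> from the diagonal of the full hat matrix instead.

```python
def fit_line(x, y):
    """Least squares fit of y = b0 + b1*x (one predictor, closed form)."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((a - x_bar) ** 2 for a in x)
    sxy = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


def press_statistics(residuals, h_diag, ss_t):
    """PRESS residuals e_i / (1 - h_ii), PRESS, and R^2_pred = 1 - PRESS / SS_T."""
    press_resid = [e / (1.0 - h) for e, h in zip(residuals, h_diag)]
    press = sum(r * r for r in press_resid)
    return press_resid, press, 1.0 - press / ss_t


# Hypothetical data with one predictor variable.
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [2.0, 4.1, 5.8, 8.3, 9.9, 12.2]
n = len(x)
b0, b1 = fit_line(x, y)
e = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
x_bar, y_bar = sum(x) / n, sum(y) / n
sxx = sum((a - x_bar) ** 2 for a in x)
h = [1.0 / n + (xi - x_bar) ** 2 / sxx for xi in x]   # hat-matrix diagonal, one predictor
ss_t = sum((yi - y_bar) ** 2 for yi in y)
press_resid, press, r2_pred = press_statistics(e, h, ss_t)

# Cross-check: refit without observation i and predict it directly.
loo_resid = []
for i in range(n):
    c0, c1 = fit_line(x[:i] + x[i + 1:], y[:i] + y[i + 1:])
    loo_resid.append(y[i] - (c0 + c1 * x[i]))
```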

The values of R-sq, R-sq(adj) and S are indicators of how well the regression model fits the observed data. The values of PRESS and R-sq(pred) are indicators of how well the regression model predicts new observations. For example, higher values of PRESS or lower values of R-sq(pred) indicate a model that predicts poorly. The figure below shows these values for the data. The values indicate that the regression model fits the data well and also predicts well.

[[Image:doe5_19.png|center|650px|Coefficient of multiple determination and related results for the data.]]

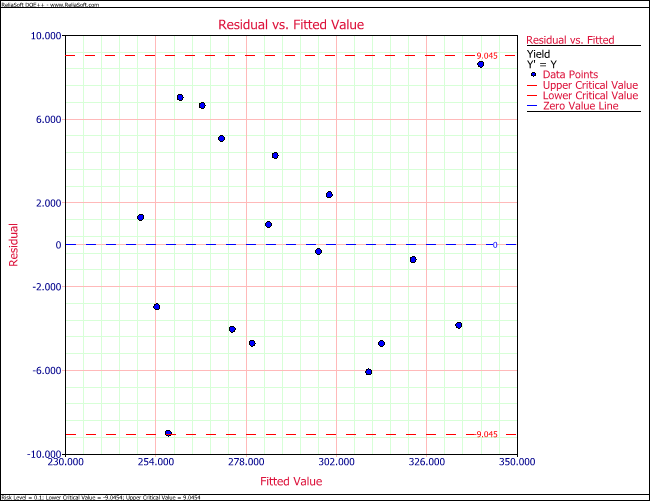

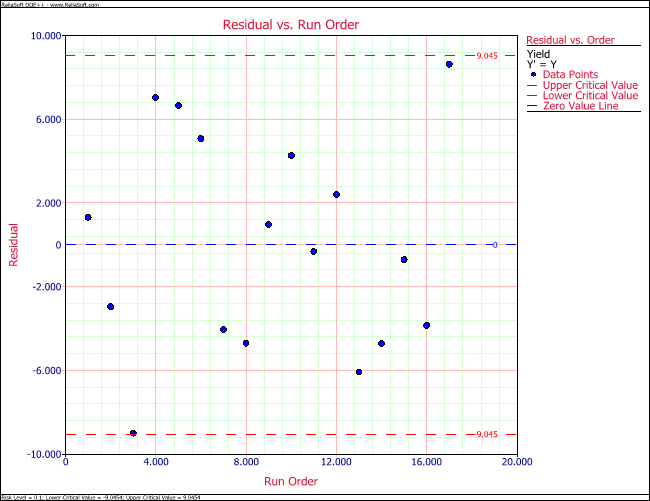

===Residual Analysis===

Plots of residuals, <math>{{e}_{i}}\,\!</math>, similar to the ones discussed in [[Simple_Linear_Regression_Analysis| Simple Linear Regression Analysis]] for simple linear regression, are used to check the adequacy of a fitted multiple linear regression model. The residuals are expected to be normally distributed with a mean of zero and a constant variance of <math>{{\sigma }^{2}}\,\!</math>. In addition, they should not show any patterns or trends when plotted against any variable or in a time or run-order sequence. Residual plots may also be obtained using standardized and studentized residuals. Standardized residuals, <math>{{d}_{i}}\,\!</math>, are obtained using the following equation:

::<math>\begin{align}
{{d}_{i}}&= & \frac{{{e}_{i}}}{\sqrt{{{{\hat{\sigma }}}^{2}}}} \\
& = & \frac{{{e}_{i}}}{\sqrt{M{{S}_{E}}}}
\end{align}\,\!</math>

Standardized residuals are scaled so that the standard deviation of the residuals is approximately equal to one. This helps to identify possible outliers or unusual observations. However, the variance of <math>{{e}_{i}}\,\!</math> is <math>{{\sigma }^{2}}(1-{{h}_{ii}})\,\!</math> rather than <math>{{\sigma }^{2}}\,\!</math>, so standardized residuals may understate the true residual magnitude. Studentized residuals, <math>{{r}_{i}}\,\!</math>, are therefore used in their place. Studentized residuals are calculated as follows:

::<math>\begin{align}
{{r}_{i}} & = & \frac{{{e}_{i}}}{\sqrt{{{{\hat{\sigma }}}^{2}}(1-{{h}_{ii}})}} \\
& = & \frac{{{e}_{i}}}{\sqrt{M{{S}_{E}}(1-{{h}_{ii}})}}
\end{align}\,\!</math>

where <math>{{h}_{ii}}\,\!</math> is the <math>i\,\!</math> th diagonal element of the hat matrix, <math>H\,\!</math>. External studentized residuals (also called studentized deleted residuals) may also be used. These residuals are based on the PRESS residuals mentioned in [[Multiple_Linear_Regression_Analysis#Coefficient_of_Multiple_Determination.2C_R2|Coefficient of Multiple Determination, ''R''<sup>2</sup>]]. The reason for using external studentized residuals is that if the <math>i\,\!</math> th observation is an outlier, it may pull the fitted model toward itself. In this case, the residual <math>{{e}_{i}}\,\!</math> will be small and may not reveal that the <math>i\,\!</math> th observation is an outlier. The external studentized residual for the <math>i\,\!</math> th observation, <math>{{t}_{i}}\,\!</math>, is obtained as follows:

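::<math>{{t}_{i}}={{e}_{i}}{{\left[ \frac{n-(k+2)}{S{{S}_{E}}(1-{{h}_{ii}})-e_{i}^{2}} \right]}^{0.5}}\,\!</math>

where <math>n-(k+2)\,\!</math> is the number of error degrees of freedom of the model fitted with the <math>i\,\!</math> th observation deleted.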
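All three residual types can be computed from the ordinary residuals and the hat-matrix diagonal. The minimal pure-Python sketch below does this for a hypothetical one-predictor model, where <math>{{h}_{ii}}=1/n+{{({{x}_{i}}-\bar{x})}^{2}}/{{S}_{xx}}\,\!</math>. Names and data are illustrative only. The sketch evaluates <math>{{t}_{i}}\,\!</math> with <math>n-(k+2)\,\!</math> in the numerator, the error degrees of freedom of the fit with observation <math>i\,\!</math> deleted. It then cross-checks <math>{{t}_{i}}\,\!</math> against an explicit leave-one-out refit.

```python
import math


def fit_line(x, y):
    """Least squares fit of y = b0 + b1*x (one predictor, closed form)."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((a - x_bar) ** 2 for a in x)
    sxy = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


def residual_diagnostics(e, h, k):
    """Standardized d_i, studentized r_i and external studentized t_i residuals.

    e: ordinary residuals; h: hat-matrix diagonal; k: number of predictor variables.
    """
    n = len(e)
    ss_e = sum(v * v for v in e)
    ms_e = ss_e / (n - (k + 1))
    d = [v / math.sqrt(ms_e) for v in e]
    r = [v / math.sqrt(ms_e * (1.0 - hi)) for v, hi in zip(e, h)]
    # n - (k + 2) is the error degrees of freedom after deleting observation i.
    t = [v * math.sqrt((n - (k + 2)) / (ss_e * (1.0 - hi) - v * v))
         for v, hi in zip(e, h)]
    return d, r, t


# Hypothetical data with one predictor variable (k = 1).
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [2.0, 4.1, 5.8, 8.3, 9.9, 12.2]
n, k = len(x), 1
b0, b1 = fit_line(x, y)
e = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
x_bar = sum(x) / n
sxx = sum((a - x_bar) ** 2 for a in x)
h = [1.0 / n + (xi - x_bar) ** 2 / sxx for xi in x]
d, r, t = residual_diagnostics(e, h, k)

# Cross-check t_i: refit without observation i and use that fit's MS_E.
t_direct = []
for i in range(n):
    xs, ys = x[:i] + x[i + 1:], y[:i] + y[i + 1:]
    c0, c1 = fit_line(xs, ys)
    ss_e_del = sum((yj - (c0 + c1 * xj)) ** 2 for xj, yj in zip(xs, ys))
    ms_e_del = ss_e_del / ((n - 1) - (k + 1))
    t_direct.append(e[i] / math.sqrt(ms_e_del * (1.0 - h[i])))
```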

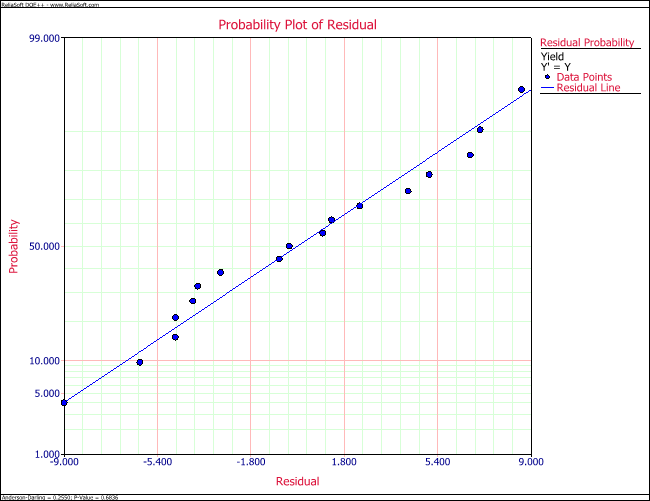

Residual values for the data are shown in the figure below. Standardized residual plots for the data are shown in the next two figures. The Weibull++ DOE folio compares the residual values to the critical values of the <math>t\,\!</math> distribution for studentized and external studentized residuals.

[[Image:doe5_20.png|center|877px|Residual values for the data.|link=]]

[[Image:doe5_21.png|center|650px|Residual probability plot for the data.|link=]]