Parametric Recurrent Event Data Analysis: Difference between revisions

Lisa Hacker (talk | contribs) No edit summary |

|||

| (14 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

<noinclude>{{Banner Weibull Articles}} | <noinclude>{{Banner Weibull Articles}} | ||

''This article appears in the [ | ''This article appears in the [https://help.reliasoft.com/reference/life_data_analysis Life data analysis reference].'' | ||

{{Navigation box}} | {{Navigation box}} | ||

| Line 6: | Line 6: | ||

The parametric analysis approach utilizes the General Renewal Process (GRP) model [[Appendix: | The parametric analysis approach utilizes the General Renewal Process (GRP) model, as discussed in Mettas and Zhao [[Appendix:_Life_Data_Analysis_References|[28]]]. In this model, the repair time is assumed to be negligible so that the processes can be viewed as point processes. This model provides a way to describe the rate of occurrence of events over time, such as in the case of data obtained from a repairable system. This model is particularly useful in modeling the failure behavior of a specific system and understanding the effects of the repairs on the age of that system. For example, consider a system that is repaired after a failure, where the repair does not bring the system to an ''as-good-as-new'' or an ''as-bad-as-old'' condition. In other words, the system is partially rejuvenated after the repair. Traditionally, in as-bad-as-old repairs, also known as ''minimal repairs'', the failure data from such a system would have been modeled using a homogeneous or non-homogeneous Poisson process (NHPP). On rare occasions, a Weibull distribution has been used as well in cases where the system is almost as-good-as-new after the repair, also known as a ''perfect renewal process'' (PRP). However, for the intermediate states after the repair, there has not been a commercially available model, even though many models have been proposed in literature. In Weibull++, the GRP model provides the capability to model systems with partial renewal (''general repair'' or ''imperfect repair/maintenance'') and allows for a variety of predictions such as reliability, expected failures, etc. | ||

== The GRP Model == | == The GRP Model == | ||

In this model, the concept of virtual age is introduced. Let <math>{{t}_{1}},{{t}_{2}},\cdots ,{{t}_{n}}</math> represent the successive failure times and let <math>{{x}_{1}},{{x}_{2}},\cdots ,{{x}_{n}}</math> represent the time between failures ( <math>{{t}_{i}}=\sum_{j=1}^{i}{{x}_{j}})</math> . Assume that after each event, actions are taken to improve the system performance. Let <math>q</math> be the action effectiveness factor. There are two GRP models: | In this model, the concept of virtual age is introduced. Let <math>{{t}_{1}},{{t}_{2}},\cdots ,{{t}_{n}}\,\!</math> represent the successive failure times and let <math>{{x}_{1}},{{x}_{2}},\cdots ,{{x}_{n}}\,\!</math> represent the time between failures ( <math>{{t}_{i}}=\sum_{j=1}^{i}{{x}_{j}})\,\!</math>. Assume that after each event, actions are taken to improve the system performance. Let <math>q\,\!</math> be the action effectiveness factor. There are two GRP models: | ||

Type I: | Type I: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

v_{i}=v_{i | v_{i}=v_{i-1}+qx_{i}=qt_{i} | ||

\end{align}</math> | \end{align}\,\!</math> | ||

Type II: | Type II: | ||

::<math>{{v}_{i}}=q({{v}_{i-1}}+{{x}_{i}})={{q}^{i}}{{x}_{1}}+{{q}^{i-1}}{{x}_{2}}+\cdots +{{x}_{i}}</math> | ::<math>{{v}_{i}}=q({{v}_{i-1}}+{{x}_{i}})={{q}^{i}}{{x}_{1}}+{{q}^{i-1}}{{x}_{2}}+\cdots +{{q}{x}_{i}}\,\!</math> | ||

where < | where <math>{{v}_{i}}\,\!</math> is the virtual age of the system right after <math>i\,\!</math>th repair. The Type I model assumes that the <math>i\,\!</math>th repair cannot remove the damage incurred before the <math>(i-1)\,\!</math> th repair. It can only reduce the additional age <math>{{x}_{i}}\,\!</math> to <math>{{qx}_{i}}\,\!</math>. The Type II model assumes that at the <math>i\,\!</math>th repair, the virtual age has been accumulated to <math>v_{i-1} + {{x}_{i}}\,\!</math>. The <math>i\,\!</math>th repair will remove the cumulative damage from both current and previous failures by reducing the virtual age to <math>q(v_{i-1} + x_{i})\,\!</math>. | ||

The power law function is used to model the rate of recurrence, which is: | The power law function is used to model the rate of recurrence, which is: | ||

| Line 28: | Line 28: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

\lambda(t)=\lambda \beta t^{\beta -1} | \lambda(t)=\lambda \beta t^{\beta -1} | ||

\end{align}</math> | \end{align}\,\!</math> | ||

The conditional ''pdf'' is: | The conditional ''pdf'' is: | ||

::<math>f({{t}_{i}}|{{t}_{i-1}})=\lambda \beta {{({{x}_{i}}+{{v}_{i-1}})}^{\beta -1}}{{e}^{-\lambda \left[ {{\left( {{x}_{i}}+{{v}_{i-1}} \right)}^{\beta }}-v_{i-1}^{\beta } \right]}}</math> | ::<math>f({{t}_{i}}|{{t}_{i-1}})=\lambda \beta {{({{x}_{i}}+{{v}_{i-1}})}^{\beta -1}}{{e}^{-\lambda \left[ {{\left( {{x}_{i}}+{{v}_{i-1}} \right)}^{\beta }}-v_{i-1}^{\beta } \right]}}\,\!</math> | ||

MLE method is used to estimate the model parameters. The log likelihood function is [[Appendix: | MLE method is used to estimate the model parameters. The log likelihood function is discussed in Mettas and Zhao [[Appendix:_Life_Data_Analysis_References|[28]]]: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

& \ln (L)= n(\ln \lambda +\ln \beta )-\lambda \left[ {{\left( T-{{t}_{n}}+{{v}_{n}} \right)}^{\beta }}-v_{n}^{\beta } \right] \\ | & \ln (L)= n(\ln \lambda +\ln \beta )-\lambda \left[ {{\left( T-{{t}_{n}}+{{v}_{n}} \right)}^{\beta }}-v_{n}^{\beta } \right] \\ | ||

& -\lambda \underset{i=1}{\overset{n}{\mathop \sum }}\,\left[ {{\left( {{x}_{i}}+{{v}_{i-1}} \right)}^{\beta }}-v_{i}^{\beta } \right]+(\beta -1)\underset{i=1}{\overset{n}{\mathop \sum }}\,\ln ({{x}_{i}}+{{v}_{i-1}}) | & -\lambda \underset{i=1}{\overset{n}{\mathop \sum }}\,\left[ {{\left( {{x}_{i}}+{{v}_{i-1}} \right)}^{\beta }}-v_{i-1}^{\beta } \right]+(\beta -1)\underset{i=1}{\overset{n}{\mathop \sum }}\,\ln ({{x}_{i}}+{{v}_{i-1}}) | ||

\end{align}</math> | \end{align}\,\!</math> | ||

where < | where <math>n\,\!</math> is the total number of events during the entire observation period. <math>T\,\!</math> is the stop time of the observation. <math>T = t_{n}\,\!</math> if the observation stops right after the last event. | ||

== Confidence Bounds == | == Confidence Bounds == | ||

| Line 48: | Line 48: | ||

=== Bounds on Cumulative Failure (Event) Numbers === | === Bounds on Cumulative Failure (Event) Numbers === | ||

The variance of the cumulative failure number < | The variance of the cumulative failure number <math>N(t)\,\!</math> is: | ||

::<math>Var[N(t)]=Var\left[ E(N(t)|\lambda ,\beta ,q) \right]+E\left[ Var(N(t)|\lambda ,\beta ,q) \right]</math> | ::<math>Var[N(t)]=Var\left[ E(N(t)|\lambda ,\beta ,q) \right]+E\left[ Var(N(t)|\lambda ,\beta ,q) \right]\,\!</math> | ||

The first term accounts for the uncertainty of the parameter estimation. The second term considers the uncertainty caused by the renewal process even when model parameters are fixed. However, unless < | The first term accounts for the uncertainty of the parameter estimation. The second term considers the uncertainty caused by the renewal process even when model parameters are fixed. However, unless <math>q = 1\,\!</math> , <math>Var\left[ E(N(t)|\lambda ,\beta ,q) \right]\,\!</math> cannot be calculated because <math>E(N(t))\,\!</math> cannot be expressed as a closed-form function of <math>\lambda,\beta\,\,</math>, and <math>q\,\!</math>. In order to consider the uncertainty of the parameter estimation, <math>Var\left[ E(N(t)|\lambda ,\beta ,q) \right]\,\!</math> is approximated by: | ||

::<math>Var\left[ E(N(t)|\lambda ,\beta ,q) \right]=Var[E(N({{v}_{t}})|\lambda ,\beta )]=Var[\lambda v_{t}^{\beta }]</math> | ::<math>Var\left[ E(N(t)|\lambda ,\beta ,q) \right]=Var[E(N({{v}_{t}})|\lambda ,\beta )]=Var[\lambda v_{t}^{\beta }]\,\!</math> | ||

where < | where <math>v_{t}\,\!</math> is the expected virtual age at time <math>t\,\!</math> and <math>Var[\lambda v_{t}^{\beta }]\,\!</math> is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

& Var[\lambda v_{t}^{\beta }]= & {{\left( \frac{\partial (\lambda v_{t}^{\beta })}{\partial \beta } \right)}^{2}}Var(\hat{\beta })+{{\left( \frac{\partial (\lambda v_{t}^{\beta })}{\partial \lambda } \right)}^{2}}Var(\hat{\lambda }) \\ | & Var[\lambda v_{t}^{\beta }]= & {{\left( \frac{\partial (\lambda v_{t}^{\beta })}{\partial \beta } \right)}^{2}}Var(\hat{\beta })+{{\left( \frac{\partial (\lambda v_{t}^{\beta })}{\partial \lambda } \right)}^{2}}Var(\hat{\lambda }) \\ | ||

& +2\frac{\partial (\lambda v_{t}^{\beta })}{\partial \beta }\frac{\partial (\lambda v_{t}^{\beta })}{\partial \lambda }Cov(\hat{\beta },\hat{\lambda }) | & +2\frac{\partial (\lambda v_{t}^{\beta })}{\partial \beta }\frac{\partial (\lambda v_{t}^{\beta })}{\partial \lambda }Cov(\hat{\beta },\hat{\lambda }) | ||

\end{align}</math> | \end{align}\,\!</math> | ||

By conducting this approximation, the uncertainty of < | By conducting this approximation, the uncertainty of <math>\lambda\,\!</math> and <math>\beta\,\!</math> are considered. The value of <math>v_{t}\,\!</math> and the value of the second term in the equation for the variance of number of failures are obtained through the Monte Carlo simulation using parameters <math>\hat{\lambda },\hat{\beta },\hat{q},\,\!</math> which are the ML estimators. The same simulation is used to estimate the cumulative number of failures <math>\hat{N}(t)=E(N(t)|\hat{\lambda },\hat{\beta },\hat{q})\,\!</math>. | ||

Once the variance and the expected value of < | Once the variance and the expected value of <math>N(t)\,\!</math> have been obtained, the bounds can be calculated by assuming that <math>N(t)\,\!</math> is lognormally distributed as: | ||

::<math>\frac{\ln N(t)-\ln \hat{N}(t)}{\sqrt{Var(\ln N(t))}}\tilde{\ }N(0,1)</math> | ::<math>\frac{\ln N(t)-\ln \hat{N}(t)}{\sqrt{Var(\ln N(t))}}\tilde{\ }N(0,1)\,\!</math> | ||

The upper and lower bounds for a given confidence level < | The upper and lower bounds for a given confidence level <math>\alpha\,\!</math> can be calculated by: | ||

::<math>N{{(t)}_{U,L}}=\hat{N}(t){{e}^{\pm {{z}_{a}}\sqrt{Var(N(t))}/\hat{N}(t)}}</math> | ::<math>N{{(t)}_{U,L}}=\hat{N}(t){{e}^{\pm {{z}_{a}}\sqrt{Var(N(t))}/\hat{N}(t)}}\,\!</math> | ||

where < | where <math>z_{a}\,\!</math> is the standard normal distribution. | ||

If < | If <math>N(t)\,\!</math> is assumed to be normally distributed, the bounds can be calculated by: | ||

::<math>N{{(t)}_{U}}=\hat{N}(t)+{{z}_{a}}\sqrt{Var(N(t))}</math> | ::<math>N{{(t)}_{U}}=\hat{N}(t)+{{z}_{a}}\sqrt{Var(N(t))}\,\!</math> | ||

::<math>N{{(t)}_{L}}=\hat{N}(t)-{{z}_{a}}\sqrt{Var(N(t))}</math> | ::<math>N{{(t)}_{L}}=\hat{N}(t)-{{z}_{a}}\sqrt{Var(N(t))}\,\!</math> | ||

In Weibull++, the < | In Weibull++, the <math>N(t)_{U}\,\!</math> is the smaller of the upper bounds obtained from lognormal and normal distribution appoximation. The <math>N(t)_{L}\,\!</math> is set to the largest of the lower bounds obtained from lognormal and normal distribution appoximation. This combined method can prevent the out-of-range values of bounds for some small <math>t\,\!</math> values. | ||

=== Bounds of Cumulative Failure Intensity and MTBF === | === Bounds of Cumulative Failure Intensity and MTBF === | ||

For a given time < | For a given time <math>t\,\!</math> , the expected value of cumulative MTBF <math>m_{c}(t)\,\!</math> and cumulative failure intensity <math>\lambda_{c}(t)\,\!</math> can be calculated using the following equations: | ||

::<math>{{\hat{\lambda }}_{c}}(t)=\frac{\hat{N}(t)}{t};{{\hat{m}}_{c}}(t)=\frac{t}{\hat{N}(t)}</math> | ::<math>{{\hat{\lambda }}_{c}}(t)=\frac{\hat{N}(t)}{t};{{\hat{m}}_{c}}(t)=\frac{t}{\hat{N}(t)}\,\!</math> | ||

The bounds can be easily obtained from the corresponding bounds of < | The bounds can be easily obtained from the corresponding bounds of <math>N(t)\,\!</math>. | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

& {{{\hat{\lambda }}}_{c}}{{(t)}_{L}}= & \frac{\hat{N}{{(t)}_{L}}}{t};\text{ }{{{\hat{\lambda }}}_{c}}{{(t)}_{L}}=\frac{\hat{N}{{(t)}_{L}}}{t};\text{ } \\ | & {{{\hat{\lambda }}}_{c}}{{(t)}_{L}}= & \frac{\hat{N}{{(t)}_{L}}}{t};\text{ }{{{\hat{\lambda }}}_{c}}{{(t)}_{L}}=\frac{\hat{N}{{(t)}_{L}}}{t};\text{ } \\ | ||

& {{{\hat{m}}}_{c}}{{(t)}_{L}}= & \frac{t}{\hat{N}{{(t)}_{U}}};\text{ }{{{\hat{m}}}_{c}}{{(t)}_{U}}=\frac{t}{\hat{N}{{(t)}_{L}}} | & {{{\hat{m}}}_{c}}{{(t)}_{L}}= & \frac{t}{\hat{N}{{(t)}_{U}}};\text{ }{{{\hat{m}}}_{c}}{{(t)}_{U}}=\frac{t}{\hat{N}{{(t)}_{L}}} | ||

\end{align}</math> | \end{align}\,\!</math> | ||

=== Bounds on Instantaneous Failure Intensity and MTBF === | === Bounds on Instantaneous Failure Intensity and MTBF === | ||

The instantaneous failure intensity is given by: | The instantaneous failure intensity is given by: | ||

::<math>{{\lambda }_{i}}(t)=\lambda \beta v_{t}^{\beta -1}</math> | ::<math>{{\lambda }_{i}}(t)=\lambda \beta v_{t}^{\beta -1}\,\!</math> | ||

where < | where <math>v_{t}\,\!</math> is the virtual age at time <math>t\,\!</math>. When <math>q\ne 1,\,\!</math> it is obtained from simulation. When <math>q = 1\,\!</math>, <math>v_{t} = t\,\!</math> from model Type I and Type II. | ||

The variance of instantaneous failure intensity can be calculated by: | The variance of instantaneous failure intensity can be calculated by: | ||

| Line 107: | Line 107: | ||

& Var({{\lambda }_{i}}(t))= {{\left( \frac{\partial {{\lambda }_{i}}(t)}{\partial \beta } \right)}^{2}}Var(\hat{\beta })+{{\left( \frac{\partial {{\lambda }_{i}}(t)}{\partial \lambda } \right)}^{2}}Var(\hat{\lambda }) \\ | & Var({{\lambda }_{i}}(t))= {{\left( \frac{\partial {{\lambda }_{i}}(t)}{\partial \beta } \right)}^{2}}Var(\hat{\beta })+{{\left( \frac{\partial {{\lambda }_{i}}(t)}{\partial \lambda } \right)}^{2}}Var(\hat{\lambda }) \\ | ||

& +2\frac{\partial {{\lambda }_{i}}(t)}{\partial \beta }\frac{\partial {{\lambda }_{i}}(t)}{\partial \lambda }Cov(\hat{\beta },\hat{\lambda })+{{\left( \frac{\partial {{\lambda }_{i}}(t)}{\partial v(t)} \right)}^{2}}Var({{{\hat{v}}}_{t}}) | & +2\frac{\partial {{\lambda }_{i}}(t)}{\partial \beta }\frac{\partial {{\lambda }_{i}}(t)}{\partial \lambda }Cov(\hat{\beta },\hat{\lambda })+{{\left( \frac{\partial {{\lambda }_{i}}(t)}{\partial v(t)} \right)}^{2}}Var({{{\hat{v}}}_{t}}) | ||

\end{align}</math> | \end{align}\,\!</math> | ||

The expected value and variance of < | The expected value and variance of <math>v_{t}\,\!</math> are obtained from the Monte Carlo simulation with parameters <math>\hat{\lambda },\hat{\beta },\hat{q}.\,\!</math> Because of the simulation accuracy and the convergence problem in calculation of <math>Var(\hat{\beta }),Var(\hat{\lambda })\,\!</math> and <math>Cov(\hat{\beta },\hat{\lambda }),\,\!</math> <math>Var(\lambda_{i}(t))\,\!</math> can be a negative value at some time points. When this case happens, the bounds of instantaneous failure intensity are not provided. | ||

Once the variance and the expected value of < | Once the variance and the expected value of <math>\lambda_{i}(t)\,\!</math> are obtained, the bounds can be calculated by assuming that <math>\lambda_{i}(t)\,\!</math> is lognormally distributed as: | ||

::<math>\frac{\ln {{\lambda }_{i}}(t)-\ln {{{\hat{\lambda }}}_{i}}(t)}{\sqrt{Var(\ln {{\lambda }_{i}}(t))}}\tilde{\ }N(0,1)</math> | ::<math>\frac{\ln {{\lambda }_{i}}(t)-\ln {{{\hat{\lambda }}}_{i}}(t)}{\sqrt{Var(\ln {{\lambda }_{i}}(t))}}\tilde{\ }N(0,1)\,\!</math> | ||

The upper and lower bounds for a given confidence level < | The upper and lower bounds for a given confidence level <math>\alpha\,\!</math> can be calculated by: | ||

::<math>{{\lambda }_{i}}(t)={{\hat{\lambda }}_{i}}(t){{e}^{\pm {{z}_{a}}\sqrt{Var({{\lambda }_{i}}(t))}/{{{\hat{\lambda }}}_{i}}(t)}}</math> | ::<math>{{\lambda }_{i}}(t)={{\hat{\lambda }}_{i}}(t){{e}^{\pm {{z}_{a}}\sqrt{Var({{\lambda }_{i}}(t))}/{{{\hat{\lambda }}}_{i}}(t)}}\,\!</math> | ||

where < | where <math>z_{a}\,\!</math> is the standard normal distribution. | ||

If < | If <math>\lambda_{i}(t)\,\!</math> is assumed to be normally distributed, the bounds can be calculated by: | ||

::<math>{{\lambda }_{i}}{{(t)}_{U}}={{\hat{\lambda }}_{i}}(t)+{{z}_{a}}\sqrt{Var(N(t))}</math> | ::<math>{{\lambda }_{i}}{{(t)}_{U}}={{\hat{\lambda }}_{i}}(t)+{{z}_{a}}\sqrt{Var(N(t))}\,\!</math> | ||

::<math>{{\lambda }_{i}}{{(t)}_{L}}={{\hat{\lambda }}_{i}}(t)-{{z}_{a}}\sqrt{Var(N(t))}</math> | ::<math>{{\lambda }_{i}}{{(t)}_{L}}={{\hat{\lambda }}_{i}}(t)-{{z}_{a}}\sqrt{Var(N(t))}\,\!</math> | ||

In Weibull++, < | In Weibull++, <math>\lambda_{i}(t)_{U}\,\!</math> is set to the smaller of the two upper bounds obtained from the above lognormal and normal distribution appoximation. <math>\lambda_{i}(t)_{L}\,\!</math> is set to the largest of the two lower bounds obtained from the above lognormal and normal distribution appoximation. This combination method can prevent the out of range values of bounds when <math>t\,\!</math> values are small. | ||

For a given time < | For a given time <math>t\,\!</math>, the expected value of cumulative MTBF <math>m_{i}(t)\,\!</math> is: | ||

::<math>{{\hat{m}}_{i}}(t)=\frac{1}{{{{\hat{\lambda }}}_{i}}(t)}\text{ }</math> | ::<math>{{\hat{m}}_{i}}(t)=\frac{1}{{{{\hat{\lambda }}}_{i}}(t)}\text{ }\,\!</math> | ||

The upper and lower bounds can be easily obtained from the corresponding bounds of < | The upper and lower bounds can be easily obtained from the corresponding bounds of <math>\lambda_{i}(t)\,\!</math>: | ||

::<math>{{\hat{m}}_{i}}{{(t)}_{U}}=\frac{1}{{{{\hat{\lambda }}}_{i}}{{(t)}_{L}}}</math> | ::<math>{{\hat{m}}_{i}}{{(t)}_{U}}=\frac{1}{{{{\hat{\lambda }}}_{i}}{{(t)}_{L}}}\,\!</math> | ||

::<math>{{\hat{m}}_{i}}{{(t)}_{L}}=\frac{1}{{{{\hat{\lambda }}}_{i}}{{(t)}_{U}}}</math> | ::<math>{{\hat{m}}_{i}}{{(t)}_{L}}=\frac{1}{{{{\hat{\lambda }}}_{i}}{{(t)}_{U}}}\,\!</math> | ||

=== Bounds on Conditional Reliability === | === Bounds on Conditional Reliability === | ||

Given mission start time < | Given mission start time <math>t_{0}\,\!</math> and mission time <math>T\,\!</math>, the conditional reliability can be calculated by: | ||

::<math>R(T|{{t}_{0}})=\frac{R(T+{{v}_{0}})}{R({{v}_{0}})}={{e}^{-\lambda [{{({{v}_{0}}+T)}^{\beta }}-{{v}_{0}}]}}</math> | ::<math>R(T|{{t}_{0}})=\frac{R(T+{{v}_{0}})}{R({{v}_{0}})}={{e}^{-\lambda [{{({{v}_{0}}+T)}^{\beta }}-{{v}_{0}}]}}\,\!</math> | ||

< | <math>v_{0}\,\!</math> is the virtual age corresponding to time <math>t_{0}\,\!</math>. The expected value and the variance of <math>v_{0}\,\!</math> are obtained from Monte Carlo simulation. The variance of the conditional reliability <math>R(T|t_{0})\,\!</math> is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

& Var(R)= {{\left( \frac{\partial R}{\partial \beta } \right)}^{2}}Var(\hat{\beta })+{{\left( \frac{\partial R}{\partial \lambda } \right)}^{2}}Var(\hat{\lambda }) \\ | & Var(R)= {{\left( \frac{\partial R}{\partial \beta } \right)}^{2}}Var(\hat{\beta })+{{\left( \frac{\partial R}{\partial \lambda } \right)}^{2}}Var(\hat{\lambda }) \\ | ||

& +2\frac{\partial R}{\partial \beta }\frac{\partial R}{\partial \lambda }Cov(\hat{\beta },\hat{\lambda })+{{\left( \frac{\partial R}{\partial {{v}_{0}}} \right)}^{2}}Var({{{\hat{v}}}_{0}}) | & +2\frac{\partial R}{\partial \beta }\frac{\partial R}{\partial \lambda }Cov(\hat{\beta },\hat{\lambda })+{{\left( \frac{\partial R}{\partial {{v}_{0}}} \right)}^{2}}Var({{{\hat{v}}}_{0}}) | ||

\end{align}</math> | \end{align}\,\!</math> | ||

Because of the simulation accuracy and the convergence problem in calculation of <math>Var(\hat{\beta }),Var(\hat{\lambda })</math> and <math>Cov(\hat{\beta },\hat{\lambda }),</math> < | Because of the simulation accuracy and the convergence problem in calculation of <math>Var(\hat{\beta }),Var(\hat{\lambda })\,\!</math> and <math>Cov(\hat{\beta },\hat{\lambda }),\,\!</math> <math>Var(R)\,\!</math> can be a negative value at some time points. When this case happens, the bounds are not provided. | ||

The bounds are based on: | The bounds are based on: | ||

::<math>\log \text{it}(\hat{R}(T))\tilde{\ }N(0,1)</math> | ::<math>\log \text{it}(\hat{R}(T))\tilde{\ }N(0,1)\,\!</math> | ||

::<math>\log \text{it}(\hat{R}(T))=\ln \left\{ \frac{\hat{R}(T)}{1-\hat{R}(T)} \right\}</math> | ::<math>\log \text{it}(\hat{R}(T))=\ln \left\{ \frac{\hat{R}(T)}{1-\hat{R}(T)} \right\}\,\!</math> | ||

The confidence bounds on reliability are given by: | The confidence bounds on reliability are given by: | ||

::<math>R=\frac{{\hat{R}}}{\hat{R}+(1-\hat{R}){{e}^{\pm \sqrt{Var(R)}/[\hat{R}(1-\hat{R})]}}}</math> | ::<math>R=\frac{{\hat{R}}}{\hat{R}+(1-\hat{R}){{e}^{\pm \sqrt{Var(R)}/[\hat{R}(1-\hat{R})]}}}\,\!</math> | ||

It will be compared with the bounds obtained from: | It will be compared with the bounds obtained from: | ||

::<math>R=\hat{R}{{e}^{\pm {{z}_{a}}\sqrt{Var(R)}/\hat{R}}}</math> | ::<math>R=\hat{R}{{e}^{\pm {{z}_{a}}\sqrt{Var(R)}/\hat{R}}}\,\!</math> | ||

The smaller of the two upper bounds will be the final upper bound and the larger of the two lower bounds will be the final lower bound. | The smaller of the two upper bounds will be the final upper bound and the larger of the two lower bounds will be the final lower bound. | ||

Latest revision as of 21:47, 18 September 2023

|

This article appears in the Life data analysis reference.

Weibull++'s parametric RDA folio is a tool for modeling recurrent event data. It can capture the trend, estimate the rate and predict the total number of recurrences. The failure and repair data of a repairable system can be treated as one type of recurrence data. Past and current repairs may affect the future failure process. For most recurrent events, time (distance, cycles, etc.) is a key factor. With time, the recurrence rate may remain constant, increase or decrease. For other recurrent events, not only the time, but also the number of events can affect the recurrence process (e.g., the debugging process in software development).

The parametric analysis approach utilizes the General Renewal Process (GRP) model, as discussed in Mettas and Zhao [28]. In this model, the repair time is assumed to be negligible so that the processes can be viewed as point processes. This model provides a way to describe the rate of occurrence of events over time, such as in the case of data obtained from a repairable system. This model is particularly useful in modeling the failure behavior of a specific system and understanding the effects of the repairs on the age of that system. For example, consider a system that is repaired after a failure, where the repair does not bring the system to an as-good-as-new or an as-bad-as-old condition. In other words, the system is partially rejuvenated after the repair. Traditionally, in as-bad-as-old repairs, also known as minimal repairs, the failure data from such a system would have been modeled using a homogeneous or non-homogeneous Poisson process (NHPP). On rare occasions, a Weibull distribution has been used as well in cases where the system is almost as-good-as-new after the repair, also known as a perfect renewal process (PRP). However, for the intermediate states after the repair, there has not been a commercially available model, even though many models have been proposed in literature. In Weibull++, the GRP model provides the capability to model systems with partial renewal (general repair or imperfect repair/maintenance) and allows for a variety of predictions such as reliability, expected failures, etc.

The GRP Model

In this model, the concept of virtual age is introduced. Let [math]\displaystyle{ {{t}_{1}},{{t}_{2}},\cdots ,{{t}_{n}}\,\! }[/math] represent the successive failure times and let [math]\displaystyle{ {{x}_{1}},{{x}_{2}},\cdots ,{{x}_{n}}\,\! }[/math] represent the time between failures ( [math]\displaystyle{ {{t}_{i}}=\sum_{j=1}^{i}{{x}_{j}})\,\! }[/math]. Assume that after each event, actions are taken to improve the system performance. Let [math]\displaystyle{ q\,\! }[/math] be the action effectiveness factor. There are two GRP models:

Type I:

- [math]\displaystyle{ \begin{align} v_{i}=v_{i-1}+qx_{i}=qt_{i} \end{align}\,\! }[/math]

Type II:

- [math]\displaystyle{ {{v}_{i}}=q({{v}_{i-1}}+{{x}_{i}})={{q}^{i}}{{x}_{1}}+{{q}^{i-1}}{{x}_{2}}+\cdots +{{q}{x}_{i}}\,\! }[/math]

where [math]\displaystyle{ {{v}_{i}}\,\! }[/math] is the virtual age of the system right after [math]\displaystyle{ i\,\! }[/math]th repair. The Type I model assumes that the [math]\displaystyle{ i\,\! }[/math]th repair cannot remove the damage incurred before the [math]\displaystyle{ (i-1)\,\! }[/math] th repair. It can only reduce the additional age [math]\displaystyle{ {{x}_{i}}\,\! }[/math] to [math]\displaystyle{ {{qx}_{i}}\,\! }[/math]. The Type II model assumes that at the [math]\displaystyle{ i\,\! }[/math]th repair, the virtual age has been accumulated to [math]\displaystyle{ v_{i-1} + {{x}_{i}}\,\! }[/math]. The [math]\displaystyle{ i\,\! }[/math]th repair will remove the cumulative damage from both current and previous failures by reducing the virtual age to [math]\displaystyle{ q(v_{i-1} + x_{i})\,\! }[/math].

The power law function is used to model the rate of recurrence, which is:

- [math]\displaystyle{ \begin{align} \lambda(t)=\lambda \beta t^{\beta -1} \end{align}\,\! }[/math]

The conditional pdf is:

- [math]\displaystyle{ f({{t}_{i}}|{{t}_{i-1}})=\lambda \beta {{({{x}_{i}}+{{v}_{i-1}})}^{\beta -1}}{{e}^{-\lambda \left[ {{\left( {{x}_{i}}+{{v}_{i-1}} \right)}^{\beta }}-v_{i-1}^{\beta } \right]}}\,\! }[/math]

MLE method is used to estimate the model parameters. The log likelihood function is discussed in Mettas and Zhao [28]:

- [math]\displaystyle{ \begin{align} & \ln (L)= n(\ln \lambda +\ln \beta )-\lambda \left[ {{\left( T-{{t}_{n}}+{{v}_{n}} \right)}^{\beta }}-v_{n}^{\beta } \right] \\ & -\lambda \underset{i=1}{\overset{n}{\mathop \sum }}\,\left[ {{\left( {{x}_{i}}+{{v}_{i-1}} \right)}^{\beta }}-v_{i-1}^{\beta } \right]+(\beta -1)\underset{i=1}{\overset{n}{\mathop \sum }}\,\ln ({{x}_{i}}+{{v}_{i-1}}) \end{align}\,\! }[/math]

where [math]\displaystyle{ n\,\! }[/math] is the total number of events during the entire observation period. [math]\displaystyle{ T\,\! }[/math] is the stop time of the observation. [math]\displaystyle{ T = t_{n}\,\! }[/math] if the observation stops right after the last event.

Confidence Bounds

In general, in order to obtain the virtual age, the exact occurrence time of each event (failure) should be available (see equations for Type I and Type II models). However, the times are unknown until the corresponding events occur. For this reason, there are no closed-form expressions for total failure number and failure intensity, which are functions of failure times and virtual age. Therefore, in Weibull++, a Monte Carlo simulation is used to predict values of virtual time, failure number, MTBF and failure rate. The approximate confidence bounds obtained from simulation are provided. The uncertainty of model parameters is also considered in the bounds.

Bounds on Cumulative Failure (Event) Numbers

The variance of the cumulative failure number [math]\displaystyle{ N(t)\,\! }[/math] is:

- [math]\displaystyle{ Var[N(t)]=Var\left[ E(N(t)|\lambda ,\beta ,q) \right]+E\left[ Var(N(t)|\lambda ,\beta ,q) \right]\,\! }[/math]

The first term accounts for the uncertainty of the parameter estimation. The second term considers the uncertainty caused by the renewal process even when model parameters are fixed. However, unless [math]\displaystyle{ q = 1\,\! }[/math] , [math]\displaystyle{ Var\left[ E(N(t)|\lambda ,\beta ,q) \right]\,\! }[/math] cannot be calculated because [math]\displaystyle{ E(N(t))\,\! }[/math] cannot be expressed as a closed-form function of [math]\displaystyle{ \lambda,\beta\,\, }[/math], and [math]\displaystyle{ q\,\! }[/math]. In order to consider the uncertainty of the parameter estimation, [math]\displaystyle{ Var\left[ E(N(t)|\lambda ,\beta ,q) \right]\,\! }[/math] is approximated by:

- [math]\displaystyle{ Var\left[ E(N(t)|\lambda ,\beta ,q) \right]=Var[E(N({{v}_{t}})|\lambda ,\beta )]=Var[\lambda v_{t}^{\beta }]\,\! }[/math]

where [math]\displaystyle{ v_{t}\,\! }[/math] is the expected virtual age at time [math]\displaystyle{ t\,\! }[/math] and [math]\displaystyle{ Var[\lambda v_{t}^{\beta }]\,\! }[/math] is:

- [math]\displaystyle{ \begin{align} & Var[\lambda v_{t}^{\beta }]= & {{\left( \frac{\partial (\lambda v_{t}^{\beta })}{\partial \beta } \right)}^{2}}Var(\hat{\beta })+{{\left( \frac{\partial (\lambda v_{t}^{\beta })}{\partial \lambda } \right)}^{2}}Var(\hat{\lambda }) \\ & +2\frac{\partial (\lambda v_{t}^{\beta })}{\partial \beta }\frac{\partial (\lambda v_{t}^{\beta })}{\partial \lambda }Cov(\hat{\beta },\hat{\lambda }) \end{align}\,\! }[/math]

By conducting this approximation, the uncertainty of [math]\displaystyle{ \lambda\,\! }[/math] and [math]\displaystyle{ \beta\,\! }[/math] are considered. The value of [math]\displaystyle{ v_{t}\,\! }[/math] and the value of the second term in the equation for the variance of number of failures are obtained through the Monte Carlo simulation using parameters [math]\displaystyle{ \hat{\lambda },\hat{\beta },\hat{q},\,\! }[/math] which are the ML estimators. The same simulation is used to estimate the cumulative number of failures [math]\displaystyle{ \hat{N}(t)=E(N(t)|\hat{\lambda },\hat{\beta },\hat{q})\,\! }[/math].

Once the variance and the expected value of [math]\displaystyle{ N(t)\,\! }[/math] have been obtained, the bounds can be calculated by assuming that [math]\displaystyle{ N(t)\,\! }[/math] is lognormally distributed as:

- [math]\displaystyle{ \frac{\ln N(t)-\ln \hat{N}(t)}{\sqrt{Var(\ln N(t))}}\tilde{\ }N(0,1)\,\! }[/math]

The upper and lower bounds for a given confidence level [math]\displaystyle{ \alpha\,\! }[/math] can be calculated by:

- [math]\displaystyle{ N{{(t)}_{U,L}}=\hat{N}(t){{e}^{\pm {{z}_{a}}\sqrt{Var(N(t))}/\hat{N}(t)}}\,\! }[/math]

where [math]\displaystyle{ z_{a}\,\! }[/math] is the standard normal distribution.

If [math]\displaystyle{ N(t)\,\! }[/math] is assumed to be normally distributed, the bounds can be calculated by:

- [math]\displaystyle{ N{{(t)}_{U}}=\hat{N}(t)+{{z}_{a}}\sqrt{Var(N(t))}\,\! }[/math]

- [math]\displaystyle{ N{{(t)}_{L}}=\hat{N}(t)-{{z}_{a}}\sqrt{Var(N(t))}\,\! }[/math]

In Weibull++, the [math]\displaystyle{ N(t)_{U}\,\! }[/math] is the smaller of the upper bounds obtained from lognormal and normal distribution appoximation. The [math]\displaystyle{ N(t)_{L}\,\! }[/math] is set to the largest of the lower bounds obtained from lognormal and normal distribution appoximation. This combined method can prevent the out-of-range values of bounds for some small [math]\displaystyle{ t\,\! }[/math] values.

Bounds of Cumulative Failure Intensity and MTBF

For a given time [math]\displaystyle{ t\,\! }[/math] , the expected value of cumulative MTBF [math]\displaystyle{ m_{c}(t)\,\! }[/math] and cumulative failure intensity [math]\displaystyle{ \lambda_{c}(t)\,\! }[/math] can be calculated using the following equations:

- [math]\displaystyle{ {{\hat{\lambda }}_{c}}(t)=\frac{\hat{N}(t)}{t};{{\hat{m}}_{c}}(t)=\frac{t}{\hat{N}(t)}\,\! }[/math]

The bounds can be easily obtained from the corresponding bounds of [math]\displaystyle{ N(t)\,\! }[/math].

- [math]\displaystyle{ \begin{align} & {{{\hat{\lambda }}}_{c}}{{(t)}_{L}}= & \frac{\hat{N}{{(t)}_{L}}}{t};\text{ }{{{\hat{\lambda }}}_{c}}{{(t)}_{L}}=\frac{\hat{N}{{(t)}_{L}}}{t};\text{ } \\ & {{{\hat{m}}}_{c}}{{(t)}_{L}}= & \frac{t}{\hat{N}{{(t)}_{U}}};\text{ }{{{\hat{m}}}_{c}}{{(t)}_{U}}=\frac{t}{\hat{N}{{(t)}_{L}}} \end{align}\,\! }[/math]

Bounds on Instantaneous Failure Intensity and MTBF

The instantaneous failure intensity is given by:

- [math]\displaystyle{ {{\lambda }_{i}}(t)=\lambda \beta v_{t}^{\beta -1}\,\! }[/math]

where [math]\displaystyle{ v_{t}\,\! }[/math] is the virtual age at time [math]\displaystyle{ t\,\! }[/math]. When [math]\displaystyle{ q\ne 1,\,\! }[/math] it is obtained from simulation. When [math]\displaystyle{ q = 1\,\! }[/math], [math]\displaystyle{ v_{t} = t\,\! }[/math] from model Type I and Type II.

The variance of instantaneous failure intensity can be calculated by:

- [math]\displaystyle{ \begin{align} & Var({{\lambda }_{i}}(t))= {{\left( \frac{\partial {{\lambda }_{i}}(t)}{\partial \beta } \right)}^{2}}Var(\hat{\beta })+{{\left( \frac{\partial {{\lambda }_{i}}(t)}{\partial \lambda } \right)}^{2}}Var(\hat{\lambda }) \\ & +2\frac{\partial {{\lambda }_{i}}(t)}{\partial \beta }\frac{\partial {{\lambda }_{i}}(t)}{\partial \lambda }Cov(\hat{\beta },\hat{\lambda })+{{\left( \frac{\partial {{\lambda }_{i}}(t)}{\partial v(t)} \right)}^{2}}Var({{{\hat{v}}}_{t}}) \end{align}\,\! }[/math]

The expected value and variance of [math]\displaystyle{ v_{t}\,\! }[/math] are obtained from the Monte Carlo simulation with parameters [math]\displaystyle{ \hat{\lambda },\hat{\beta },\hat{q}.\,\! }[/math] Because of the simulation accuracy and the convergence problem in calculation of [math]\displaystyle{ Var(\hat{\beta }),Var(\hat{\lambda })\,\! }[/math] and [math]\displaystyle{ Cov(\hat{\beta },\hat{\lambda }),\,\! }[/math] [math]\displaystyle{ Var(\lambda_{i}(t))\,\! }[/math] can be a negative value at some time points. When this case happens, the bounds of instantaneous failure intensity are not provided.

Once the variance and the expected value of [math]\displaystyle{ \lambda_{i}(t)\,\! }[/math] are obtained, the bounds can be calculated by assuming that [math]\displaystyle{ \lambda_{i}(t)\,\! }[/math] is lognormally distributed as:

- [math]\displaystyle{ \frac{\ln {{\lambda }_{i}}(t)-\ln {{{\hat{\lambda }}}_{i}}(t)}{\sqrt{Var(\ln {{\lambda }_{i}}(t))}}\tilde{\ }N(0,1)\,\! }[/math]

The upper and lower bounds for a given confidence level [math]\displaystyle{ \alpha\,\! }[/math] can be calculated by:

- [math]\displaystyle{ {{\lambda }_{i}}(t)={{\hat{\lambda }}_{i}}(t){{e}^{\pm {{z}_{a}}\sqrt{Var({{\lambda }_{i}}(t))}/{{{\hat{\lambda }}}_{i}}(t)}}\,\! }[/math]

where [math]\displaystyle{ z_{a}\,\! }[/math] is the standard normal distribution.

If [math]\displaystyle{ \lambda_{i}(t)\,\! }[/math] is assumed to be normally distributed, the bounds can be calculated by:

- [math]\displaystyle{ {{\lambda }_{i}}{{(t)}_{U}}={{\hat{\lambda }}_{i}}(t)+{{z}_{a}}\sqrt{Var(N(t))}\,\! }[/math]

- [math]\displaystyle{ {{\lambda }_{i}}{{(t)}_{L}}={{\hat{\lambda }}_{i}}(t)-{{z}_{a}}\sqrt{Var(N(t))}\,\! }[/math]

In Weibull++, [math]\displaystyle{ \lambda_{i}(t)_{U}\,\! }[/math] is set to the smaller of the two upper bounds obtained from the above lognormal and normal distribution appoximation. [math]\displaystyle{ \lambda_{i}(t)_{L}\,\! }[/math] is set to the largest of the two lower bounds obtained from the above lognormal and normal distribution appoximation. This combination method can prevent the out of range values of bounds when [math]\displaystyle{ t\,\! }[/math] values are small.

For a given time [math]\displaystyle{ t\,\! }[/math], the expected value of cumulative MTBF [math]\displaystyle{ m_{i}(t)\,\! }[/math] is:

- [math]\displaystyle{ {{\hat{m}}_{i}}(t)=\frac{1}{{{{\hat{\lambda }}}_{i}}(t)}\text{ }\,\! }[/math]

The upper and lower bounds can be easily obtained from the corresponding bounds of [math]\displaystyle{ \lambda_{i}(t)\,\! }[/math]:

- [math]\displaystyle{ {{\hat{m}}_{i}}{{(t)}_{U}}=\frac{1}{{{{\hat{\lambda }}}_{i}}{{(t)}_{L}}}\,\! }[/math]

- [math]\displaystyle{ {{\hat{m}}_{i}}{{(t)}_{L}}=\frac{1}{{{{\hat{\lambda }}}_{i}}{{(t)}_{U}}}\,\! }[/math]

Bounds on Conditional Reliability

Given mission start time [math]\displaystyle{ t_{0}\,\! }[/math] and mission time [math]\displaystyle{ T\,\! }[/math], the conditional reliability can be calculated by:

- [math]\displaystyle{ R(T|{{t}_{0}})=\frac{R(T+{{v}_{0}})}{R({{v}_{0}})}={{e}^{-\lambda [{{({{v}_{0}}+T)}^{\beta }}-{{v}_{0}}]}}\,\! }[/math]

[math]\displaystyle{ v_{0}\,\! }[/math] is the virtual age corresponding to time [math]\displaystyle{ t_{0}\,\! }[/math]. The expected value and the variance of [math]\displaystyle{ v_{0}\,\! }[/math] are obtained from Monte Carlo simulation. The variance of the conditional reliability [math]\displaystyle{ R(T|t_{0})\,\! }[/math] is:

- [math]\displaystyle{ \begin{align} & Var(R)= {{\left( \frac{\partial R}{\partial \beta } \right)}^{2}}Var(\hat{\beta })+{{\left( \frac{\partial R}{\partial \lambda } \right)}^{2}}Var(\hat{\lambda }) \\ & +2\frac{\partial R}{\partial \beta }\frac{\partial R}{\partial \lambda }Cov(\hat{\beta },\hat{\lambda })+{{\left( \frac{\partial R}{\partial {{v}_{0}}} \right)}^{2}}Var({{{\hat{v}}}_{0}}) \end{align}\,\! }[/math]

Because of the simulation accuracy and the convergence problem in calculation of [math]\displaystyle{ Var(\hat{\beta }),Var(\hat{\lambda })\,\! }[/math] and [math]\displaystyle{ Cov(\hat{\beta },\hat{\lambda }),\,\! }[/math] [math]\displaystyle{ Var(R)\,\! }[/math] can be a negative value at some time points. When this case happens, the bounds are not provided.

The bounds are based on:

- [math]\displaystyle{ \log \text{it}(\hat{R}(T))\tilde{\ }N(0,1)\,\! }[/math]

- [math]\displaystyle{ \log \text{it}(\hat{R}(T))=\ln \left\{ \frac{\hat{R}(T)}{1-\hat{R}(T)} \right\}\,\! }[/math]

The confidence bounds on reliability are given by:

- [math]\displaystyle{ R=\frac{{\hat{R}}}{\hat{R}+(1-\hat{R}){{e}^{\pm \sqrt{Var(R)}/[\hat{R}(1-\hat{R})]}}}\,\! }[/math]

It will be compared with the bounds obtained from:

- [math]\displaystyle{ R=\hat{R}{{e}^{\pm {{z}_{a}}\sqrt{Var(R)}/\hat{R}}}\,\! }[/math]

The smaller of the two upper bounds will be the final upper bound and the larger of the two lower bounds will be the final lower bound.

Example: Air Condition Unit

The following table gives the failure times for the air conditioning unit of an aircraft. The observation ended by the time the last failure occurred, as discussed in Cox [3].

1. Estimate the GRP model parameters using the Type I virtual age option.

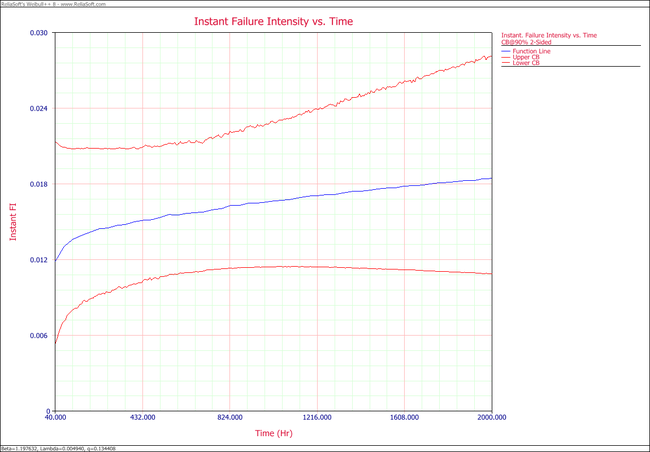

2. Plot the failure number and instantaneous failure intensity vs. time with 90% two-sided confidence bounds.

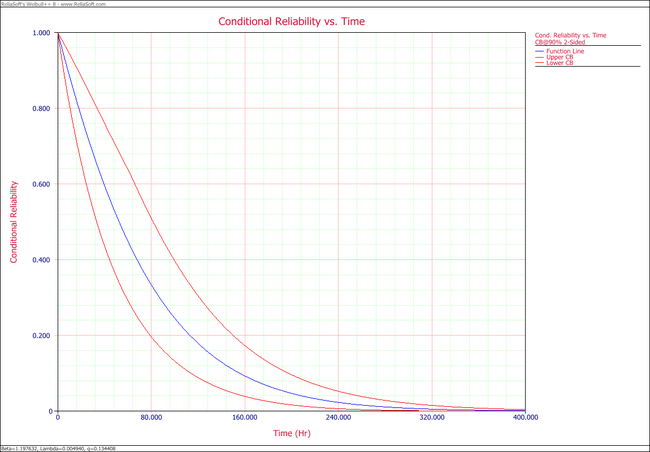

3. Plot the conditional reliability vs. time with 90% two-sided confidence bounds. The mission start time is 40 and mission time is varying.

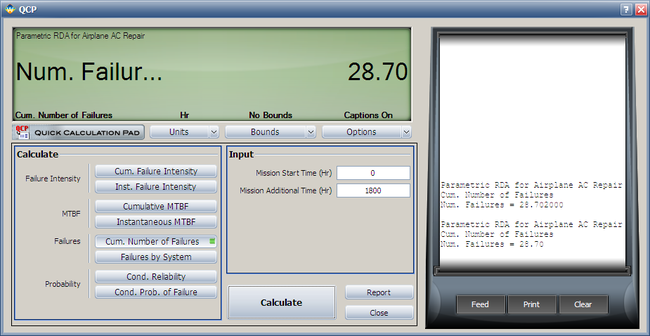

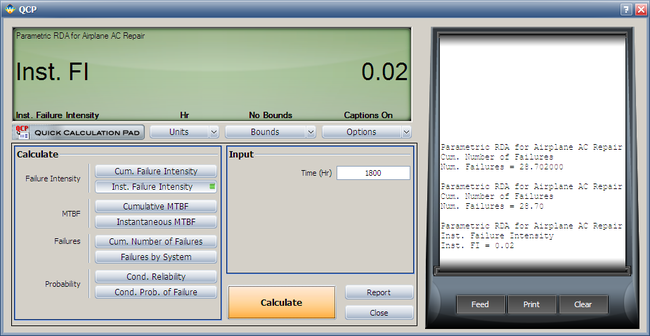

4. Using the QCP, calculate the expected failure number and expected instantaneous failure intensity by time 1800.

Solution

Enter the data into a parametric RDA folio in Weibull++. On the control panel, select the 3 parameters option and the Type I setting. Keep the default simulation settings. Click Calculate.

- 1. The estimated parameters are [math]\displaystyle{ \hat{\beta }=1.1976\,\! }[/math], [math]\displaystyle{ \hat{\lambda }=4.94E-03\,\! }[/math], [math]\displaystyle{ \hat{q}=0.1344\,\! }[/math].

- 2. The following plots show the cumulative number of failures and instantaneous failure intensity, respectively.

- 3. The following plot shows the conditional reliability.

- 4. Using the QCP, the failure number and instantaneous failure intensity are: