Reliability DOE for Life Tests


{{Template:Doebook|15}}

Reliability analysis is commonly thought of as an approach to model failures of existing products. The usual reliability analysis characterizes product failures using distributions such as the exponential, Weibull and lognormal. Based on the fitted distribution, failures are mitigated, warranty returns are predicted, or maintenance actions are planned. However, by adopting the methodology of Design for Reliability (DFR), reliability analysis can also be used as a powerful tool to design robust products that operate with minimal failures. In DFR, reliability analysis is carried out in conjunction with physics of failure and experiment design techniques. Under this approach, Design of Experiments (DOE) uses life data to "build" reliability into the products, not just quantify the existing reliability. Such an approach, if properly implemented, can result in significant cost savings, especially in terms of fewer warranty returns or repair and maintenance actions. Although DOE techniques can be used to improve product reliability and also make this reliability robust to noise factors, the discussion in this chapter is focused on reliability improvement. The robust parameter design method discussed in [[Robust Parameter Design|Robust Parameter Design]] can be used to produce robust and reliable products.

=Reliability DOE Analysis=

Reliability DOE (R-DOE) analysis is fairly similar to the analysis of other designed experiments except that the response is the life of the product in the respective units (e.g., for an automobile component the units of life may be miles, for a mechanical component this may be cycles, and for a pharmaceutical product this may be months or years). However, two important differences exist that make R-DOE analysis unique. The first is that life data of most products are typically well modeled by either the lognormal, Weibull or exponential distribution, but usually do not follow the normal distribution. Traditional DOE techniques follow the assumption that response values at any treatment level follow the normal distribution and therefore, the error terms, <math>\epsilon \,\!</math>, can be assumed to be normally and independently distributed. This assumption may not be valid for the response data used in most R-DOE analyses. The second is that the life data obtained may be either complete or censored, and when censored observations are present, the standard regression techniques applicable to the response data in traditional DOEs can no longer be used.

Design parameters, manufacturing process settings, and use stresses affecting the life of the product can be investigated using R-DOE analysis. The primary purpose of any R-DOE analysis is to identify which of the inputs affect the life of the product (by investigating whether a change in the level of any input factor leads to a significant change in the life of the product). Once the important stresses affecting the life of the product have been identified, more detailed analyses can be carried out, for example using ReliaSoft's ALTA software. ALTA includes a number of life-stress relationships (LSRs) to model the relation between life and the stress affecting the life of the product.

=R-DOE Analysis of Lognormally Distributed Data=

Assume that the life, <math>T\,\!</math>, for a certain product has been found to be lognormally distributed. The probability density function for the lognormal distribution is:

::<math>f(T)=\frac{1}{T{\sigma }'\sqrt{2\pi }}{{e}^{-\frac{1}{2}{{\left( \frac{\ln (T)-{\mu }'}{{{\sigma }'}} \right)}^{2}}}}\,\!</math>

where <math>{\mu }'\,\!</math> represents the mean of the natural logarithms of the times-to-failure and <math>{\sigma }'\,\!</math> represents the standard deviation of the natural logarithms of the times-to-failure [[DOE_References|[Meeker and Escobar 1998, Wu 2000, ReliaSoft 2007b]]]. If the analyst wants to investigate a single two level factor that may affect the life, <math>T\,\!</math>, then the following model may be used:

::<math>{{T}_{i}}={{\mu }_{i}}+{{\xi }_{i}}\,\!</math>

where:

*<math>{{T}_{i}}\,\!</math> represents the times-to-failure at the <math>i\,\!</math>th treatment level of the factor
*<math>{{\mu }_{i}}\,\!</math> represents the mean value of <math>{{T}_{i}}\,\!</math> for the <math>i\,\!</math>th treatment
*<math>{{\xi }_{i}}\,\!</math> is the random error term
*The subscript <math>i\,\!</math> represents the treatment level of the factor with <math>i=1,2\,\!</math> for a two level factor

The model of the equation shown above is analogous to the ANOVA model, <math>{{Y}_{i}}={{\mu }_{i}}+{{\epsilon }_{i}}\,\!</math>, used in the [[One Factor Designs]] and [[General Full Factorial Designs]] chapters for traditional DOE analyses. Note, however, that the random error term, <math>{{\xi }_{i}}\,\!</math>, is not normally distributed here because the response, <math>T\,\!</math>, is lognormally distributed. It is known that the logarithmic value of a lognormally distributed random variable follows the normal distribution. Therefore, if the logarithmic transformation of <math>T\,\!</math>, <math>\ln (T)\,\!</math>, is used in the above equation, then the model will be identical to the ANOVA model, <math>{{Y}_{i}}={{\mu }_{i}}+{{\epsilon }_{i}}\,\!</math>, used in the other chapters. Thus, using the logarithmic failure times, the model can be written as:

::<math>\ln ({{T}_{i}})=\mu _{i}^{\prime }+{{\epsilon }_{i}}\,\!</math>

where:

*<math>\ln ({{T}_{i}})\,\!</math> represents the logarithmic times-to-failure at the <math>i\,\!</math>th treatment
*<math>\mu _{i}^{\prime }\,\!</math> represents the mean of the natural logarithm of the times-to-failure at the <math>i\,\!</math>th treatment
*<math>{\sigma }'\,\!</math> represents the standard deviation of the natural logarithms of the times-to-failure

The random error term, <math>{{\epsilon }_{i}}\,\!</math>, is normally distributed because the response, <math>\ln ({{T}_{i}})\,\!</math>, is normally distributed. Since the model of the equation given above is identical to the ANOVA model used in traditional DOE analysis, regression techniques can be applied here and the R-DOE analysis can be carried out in the same way as the traditional DOE analyses. Recall from [[Two_Level_Factorial_Experiments|Two Level Factorial Experiments]] that if the factor(s) affecting the response has only two levels, then the notation of the regression model can be applied to the ANOVA model. Therefore, the model of the above equation can be written using a single indicator variable, <math>{{x}_{1}}\,\!</math>, to represent the two level factor as:

::<math>\ln ({{T}_{i}})={{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\epsilon }_{i}}\,\!</math>

where <math>{{\beta }_{0}}\,\!</math> is the intercept term and <math>{{\beta }_{1}}\,\!</math> is the effect coefficient for the investigated factor. Setting the two equations above equal to each other returns:

::<math>\mu _{i}^{\prime }={{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}\,\!</math>

The mean of the natural logarithm of the times-to-failure at any factor level, <math>\mu _{i}^{\prime }\,\!</math>, is referred to as the ''life characteristic'' because it represents a characteristic point of the underlying life distribution. The life characteristic used in the R-DOE analysis will change based on the underlying distribution assumed for the life data. If the analyst wants to investigate the effect of two factors (each at two levels) on the life of the product, then the life characteristic equation can be easily expanded as follows:

::<math>\mu _{i}^{\prime }={{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}}\,\!</math>

where <math>{{\beta }_{2}}\,\!</math> is the effect coefficient for the second factor and <math>{{x}_{2}}\,\!</math> is the indicator variable representing the second factor. If the interaction effect is also to be investigated, then the following equation can be used:

::<math>\mu _{i}^{\prime }={{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}}+{{\beta }_{12}}{{x}_{i1}}{{x}_{i2}}\,\!</math>

In general the model to investigate a given number of factors can be expressed as:

::<math>\mu _{i}^{\prime }={{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}}+{{\beta }_{12}}{{x}_{i1}}{{x}_{i2}}+...\,\!</math>

Based on the model equations mentioned thus far, the analyst can easily conduct an R-DOE analysis for the lognormally distributed life data using standard regression techniques. However, this is no longer true once the data also include censored observations. In the case of censored data, the analysis has to be carried out using maximum likelihood estimation (MLE) techniques.

===Maximum Likelihood Estimation for the Lognormal Distribution===

The maximum likelihood estimation method can be used to estimate parameters in R-DOE analyses when censored data are present. The likelihood function is calculated for each observed time to failure, <math>{{t}_{i}}\,\!</math>, and the parameters of the model are obtained by maximizing the log-likelihood function. The likelihood function for complete data following the lognormal distribution is given as:

::<math>\begin{align}
{{L}_{failures}}& = & \underset{i=1}{\overset{{{F}_{e}}}{\mathop \prod }}\,f({{t}_{i}},\mu _{i}^{\prime }) \\
& = & \underset{i=1}{\overset{{{F}_{e}}}{\mathop \prod }}\,\left[ \frac{1}{{{t}_{i}}{\sigma }'\sqrt{2\pi }}{{e}^{-\frac{1}{2}{{\left( \frac{\ln ({{t}_{i}})-\mu _{i}^{\prime }}{{{\sigma }'}} \right)}^{2}}}} \right] \\
& = & \underset{i=1}{\overset{{{F}_{e}}}{\mathop \prod }}\,\left[ \frac{1}{{{t}_{i}}{\sigma }'\sqrt{2\pi }}{{e}^{-\frac{1}{2}{{\left( \frac{\ln ({{t}_{i}})-({{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}}+...)}{{{\sigma }'}} \right)}^{2}}}} \right]
\end{align}\,\!</math>

where:

*<math>{{F}_{e}}\,\!</math> is the total number of observed times-to-failure
*<math>\mu _{i}^{\prime }\,\!</math> is the life characteristic
*<math>{{t}_{i}}\,\!</math> is the time of the <math>i\,\!</math>th failure

For right censored data the likelihood function [[DOE_References|[Meeker and Escobar 1998, Wu 2000, ReliaSoft 2007b]]] is:

::<math>{{L}_{suspensions}}=\underset{i=1}{\overset{{{S}_{e}}}{\mathop \prod }}\,\left[ 1-\frac{1}{\sqrt{2\pi }}\int_{-\infty }^{\left( \tfrac{\ln ({{t}_{i}})-\mu _{i}^{\prime }}{{{\sigma }'}} \right)}{{e}^{-\tfrac{{{g}^{2}}}{2}}}dg \right]\,\!</math>

where:

*<math>{{S}_{e}}\,\!</math> is the total number of observed suspensions
*<math>{{t}_{i}}\,\!</math> is the time of the <math>i\,\!</math>th suspension

For interval data the likelihood function [[DOE_References|[Meeker and Escobar 1998, Wu 2000, ReliaSoft 2007b]]] is:

::<math>{{L}_{interval}}=\underset{i=1}{\overset{FI}{\mathop \prod }}\,\left[ \frac{1}{\sqrt{2\pi }}\int_{-\infty }^{\left( \tfrac{\ln (t_{i}^{2})-\mu _{i}^{\prime }}{{{\sigma }'}} \right)}{{e}^{-\tfrac{{{g}^{2}}}{2}}}dg-\frac{1}{\sqrt{2\pi }}\int_{-\infty }^{\left( \tfrac{\ln (t_{i}^{1})-\mu _{i}^{\prime }}{{{\sigma }'}} \right)}{{e}^{-\tfrac{{{g}^{2}}}{2}}}dg \right]\,\!</math>

where:

*<math>FI\,\!</math> is the total number of interval data
*<math>t_{i}^{1}\,\!</math> is the beginning time of the <math>i\,\!</math>th interval
*<math>t_{i}^{2}\,\!</math> is the end time of the <math>i\,\!</math>th interval

The complete likelihood function when all types of data (complete, right censored and interval) are present is:

::<math>L({\sigma }',{{\beta }_{0}},{{\beta }_{1}}...)={{L}_{failures}}\cdot {{L}_{suspensions}}\cdot {{L}_{interval}}\,\!</math>

Then the log-likelihood function is:

::<math>\Lambda ({\sigma }',{{\beta }_{0}},{{\beta }_{1}}...)=\ln (L)\,\!</math>
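The three likelihood contributions can be combined in a few lines of code. The following Python sketch, which uses only the standard library, evaluates the log-likelihood for a mix of exact failures, suspensions and interval data. The function names and the way observations are passed in (tuples of time and coded factor levels) are illustrative assumptions and do not describe how Weibull++ performs the calculation.

```python
import math

def norm_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def life_characteristic(betas, x):
    """mu' = beta_0 + beta_1*x_1 + beta_2*x_2 + ... for coded levels x."""
    return betas[0] + sum(b * xi for b, xi in zip(betas[1:], x))

def lognormal_log_likelihood(sigma, betas, failures=(), suspensions=(), intervals=()):
    """Log-likelihood ln(L_failures * L_suspensions * L_interval).

    failures, suspensions: iterables of (t, x)
    intervals: iterable of (t1, t2, x) with 0 < t1 < t2
    x is the list of coded factor levels for that observation.
    """
    total = 0.0
    for t, x in failures:
        z = (math.log(t) - life_characteristic(betas, x)) / sigma
        # ln of the lognormal pdf evaluated at t
        total += -math.log(t * sigma * math.sqrt(2.0 * math.pi)) - 0.5 * z * z
    for t, x in suspensions:
        z = (math.log(t) - life_characteristic(betas, x)) / sigma
        # ln of the probability of surviving beyond t
        total += math.log(1.0 - norm_cdf(z))
    for t1, t2, x in intervals:
        mu = life_characteristic(betas, x)
        z1 = (math.log(t1) - mu) / sigma
        z2 = (math.log(t2) - mu) / sigma
        # ln of the probability of failing between t1 and t2
        total += math.log(norm_cdf(z2) - norm_cdf(z1))
    return total

# Hypothetical call: one failure and one suspension, intercept-only model
example_value = lognormal_log_likelihood(
    1.0, [0.0], failures=[(1.0, [])], suspensions=[(1.0, [])]
)
```

A numerical optimizer can then be applied to this function to find the parameter values that maximize it, which is the step described next.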

The MLE estimates are obtained by solving for parameters <math>({\sigma }',{{\beta }_{0}},{{\beta }_{1}}...)\,\!</math> so that:

::<math>\begin{align}
& \frac{\partial \Lambda }{\partial {\sigma }'}= & 0 \\
& \frac{\partial \Lambda }{\partial {{\beta }_{0}}}= & 0 \\
& \frac{\partial \Lambda }{\partial {{\beta }_{1}}}= & 0 \\
& & ...
\end{align}\,\!</math>

Once the estimates are obtained, the significance of any parameter, <math>{{\theta }_{i}}\,\!</math>, can be assessed using the likelihood ratio test.

===Hypothesis Tests===

Hypothesis testing in R-DOE analyses is carried out using the likelihood ratio test. To test the significance of a factor, the corresponding effect coefficient(s), <math>{{\theta }_{i}}\,\!</math>, is tested. The following statements are used:

::<math>\begin{align}
& {{H}_{0}}: & {{\theta }_{i}}=0 \\
& {{H}_{1}}: & {{\theta }_{i}}\ne 0
\end{align}\,\!</math>

The statistic used for the test is the likelihood ratio, <math>LR\,\!</math>. The likelihood ratio for the parameter <math>{{\theta }_{i}}\,\!</math> is calculated as follows:

::<math>LR=-2\ln \frac{L({{{\hat{\theta }}}_{(-i)}})}{L(\hat{\theta })}\,\!</math>

where:

*<math>\hat{\theta }\,\!</math> is the vector of all parameter estimates obtained using MLE (i.e., <math>\hat{\theta }=[{{\hat{\sigma }}^{\prime }}\ \ {{\hat{\beta }}_{0}}\ \ {{\hat{\beta }}_{1}}\ \ ...{]}'\,\!</math>)
*<math>{{\hat{\theta }}_{(-i)}}\,\!</math> is the vector of all parameter estimates excluding the estimate of <math>{{\theta }_{i}}\,\!</math>
*<math>L(\hat{\theta })\,\!</math> is the value of the likelihood function when all parameters are included in the model
*<math>L({{\hat{\theta }}_{(-i)}})\,\!</math> is the value of the likelihood function when all parameters except <math>{{\theta }_{i}}\,\!</math> are included in the model

If the null hypothesis, <math>{{H}_{0}}\,\!</math>, is true then the ratio, <math>-2\ln L({{\hat{\theta }}_{(-i)}})/L(\hat{\theta })\,\!</math>, follows the chi-squared distribution with one degree of freedom. Therefore, <math>{{H}_{0}}\,\!</math> is rejected at a significance level, <math>\alpha \,\!</math>, if <math>LR\,\!</math> is greater than the critical value <math>\chi _{1,\alpha }^{2}\,\!</math>.

The likelihood ratio test can also be used to test the significance of a number of parameters, <math>r\,\!</math>, at the same time. In this case, <math>L({{\hat{\theta }}_{(-i)}})\,\!</math> represents the likelihood value for the reduced model that does not contain the <math>r\,\!</math> parameters under test. Here, the ratio <math>-2\ln L({{\hat{\theta }}_{(-i)}})/L(\hat{\theta })\,\!</math> will follow the chi-squared distribution with <math>r\,\!</math> degrees of freedom if all <math>r\,\!</math> parameters are insignificant (one degree of freedom for each parameter removed from the full model, consistent with the single-parameter case above). Thus, if <math>LR>\chi _{r,\alpha }^{2}\,\!</math>, the null hypothesis, <math>{{H}_{0}}\,\!</math>, is rejected and it can be concluded that at least one of the <math>r\,\!</math> parameters is significant.

====Example==== | |||

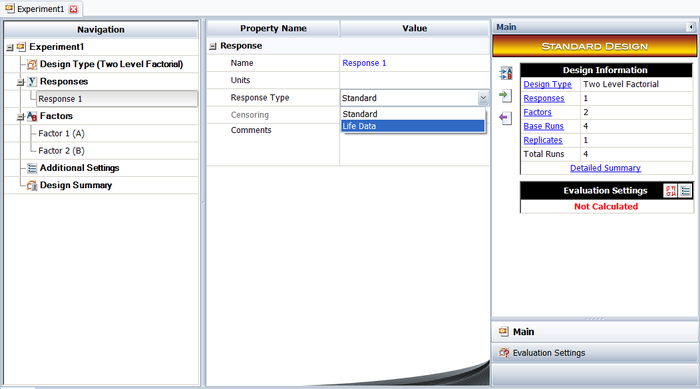

To illustrate the use of MLE in R-DOE analysis, consider the case where the life of a product is thought to be affected by two factors, <math>A\,\!</math> and <math>B\,\!</math>. The failure of the product has been found to follow the lognormal distribution. The analyst decides to run an R-DOE analysis using a single replicate of the <math>2^{2}\,\!</math> design. Previous studies indicate that the interaction between <math>A\,\!</math> and <math>B\,\!</math> does not affect the life of the product. The design for this experiment can be set up in a Weibull++ DOE folio as shown in the following figure. | |||

[[Image:doe11_1.png|center|700px|Design properties for the experiment in the example.]] | |||

The resulting experiment design and the corresponding times-to-failure data obtained are shown next. Note that, although the life data set contains ''complete data'' and regression techniques are applicable, calculations are shown using MLE. Weibull++ DOE folios use MLE for all R-DOE analysis calculations. | |||

[[Image: | [[Image:doe11_2.png|center|700px|The <math>2^2\,\!</math> experiment design and the corresponding life data for the example.]] | ||

Because the purpose of the experiment is to study two factors without considering their interaction, the applicable model for the lognormally distributed response data is:

::<math>\mu _{i}^{\prime }={{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}}\,\!</math>

where <math>\mu _{i}^{\prime }\,\!</math> is the mean of the natural logarithm of the times-to-failure at the <math>i\,\!</math>th treatment combination (<math>i=1,2,3,4\,\!</math>), <math>{{\beta }_{1}}\,\!</math> is the effect coefficient for factor <math>A\,\!</math> and <math>{{\beta }_{2}}\,\!</math> is the effect coefficient for factor <math>B\,\!</math>. The analysis for this case is carried out in a DOE folio by excluding the interaction <math>AB\,\!</math> from the analysis.

The following hypotheses need to be tested in this example:

1) <math>{{H}_{0}}:{{\beta }_{1}}=0\,\!</math>

<math>{{H}_{1}}:{{\beta }_{1}}\ne 0\,\!</math>

This test investigates the main effect of factor <math>A\,\!</math>. The statistic for this test is:

::<math>L{{R}_{A}}=-2\ln \frac{{{L}_{\tilde{\ }A}}}{L}\,\!</math>

where <math>L\,\!</math> represents the value of the likelihood function when all coefficients are included in the model and <math>{{L}_{\tilde{\ }A}}\,\!</math> represents the value of the likelihood function when all coefficients except <math>{{\beta }_{1}}\,\!</math> are included in the model.

2) <math>{{H}_{0}}:{{\beta }_{2}}=0\,\!</math>

<math>{{H}_{1}}:{{\beta }_{2}}\ne 0\,\!</math>

This test investigates the main effect of factor <math>B\,\!</math>. The statistic for this test is:

::<math>L{{R}_{B}}=-2\ln \frac{{{L}_{\tilde{\ }B}}}{L}\,\!</math>

where <math>L\,\!</math> represents the value of the likelihood function when all coefficients are included in the model and <math>{{L}_{\tilde{\ }B}}\,\!</math> represents the value of the likelihood function when all coefficients except <math>{{\beta }_{2}}\,\!</math> are included in the model.

To calculate the test statistics, the maximum likelihood estimates of the parameters must be known. The estimates are obtained next.

===MLE Estimates===

Since the life data for the [[Reliability_DOE_for_Life_Tests#Example|present experiment]] are complete and follow the lognormal distribution, the likelihood function can be written as:

::<math>L=\underset{i=1}{\overset{4}{\mathop \prod }}\,\left[ \frac{1}{{{t}_{i}}{\sigma }'\sqrt{2\pi }}{{e}^{-\frac{1}{2}{{\left( \frac{\ln ({{t}_{i}})-\mu _{i}^{\prime }}{{{\sigma }'}} \right)}^{2}}}} \right]\,\!</math>

Substituting <math>\mu _{i}^{\prime }\,\!</math> from the applicable model for the lognormally distributed response data, the likelihood function is:

::<math>L=\underset{i=1}{\overset{4}{\mathop \prod }}\,\left[ \frac{1}{{{t}_{i}}{\sigma }'\sqrt{2\pi }}{{e}^{-\frac{1}{2}{{\left( \frac{\ln ({{t}_{i}})-({{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}})}{{{\sigma }'}} \right)}^{2}}}} \right]\,\!</math>

Then the log-likelihood function is:

::<math>\begin{align}
\Lambda ({\sigma }',{{\beta }_{0}},{{\beta }_{1}},{{\beta }_{2}}) & = & \ln (L) \\
& = & \underset{i=1}{\overset{4}{\mathop \sum }}\,\ln \left[ \frac{1}{{{t}_{i}}{\sigma }'\sqrt{2\pi }}{{e}^{-\frac{1}{2}{{\left( \frac{\ln ({{t}_{i}})-({{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}})}{{{\sigma }'}} \right)}^{2}}}} \right] \\
& = & \ln \left[ \frac{1}{{{t}_{1}}{{t}_{2}}{{t}_{3}}{{t}_{4}}{{({\sigma }'\sqrt{2\pi })}^{4}}} \right]+ \\
& & \left[ -\frac{1}{2}\underset{i=1}{\overset{4}{\mathop \sum }}\,{{\left( \frac{\ln ({{t}_{i}})-({{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}})}{{{\sigma }'}} \right)}^{2}} \right] \\
& = & -[\ln ({{t}_{1}}{{t}_{2}}{{t}_{3}}{{t}_{4}})+4\ln ({\sigma }')+2\ln (2\pi )]+ \\
& & \left[ -\frac{1}{2}\underset{i=1}{\overset{4}{\mathop \sum }}\,{{\left( \frac{\ln ({{t}_{i}})-({{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}})}{{{\sigma }'}} \right)}^{2}} \right]
\end{align}\,\!</math>

To obtain the MLE estimates of the parameters, <math>{\sigma }',{{\beta }_{0}},{{\beta }_{1}}\,\!</math> and <math>{{\beta }_{2}}\,\!</math>, the log-likelihood function must be differentiated with respect to these parameters:

::<math>\begin{align}
\frac{\partial \Lambda }{\partial {\sigma }'}& = & -\frac{4}{{{\sigma }'}}+\frac{1}{{{({\sigma }')}^{3}}}\underset{i=1}{\overset{4}{\mathop \sum }}\,{{[\ln ({{t}_{i}})-({{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}})]}^{2}} \\
\frac{\partial \Lambda }{\partial {{\beta }_{0}}}& = & \frac{1}{{{({\sigma }')}^{2}}}\underset{i=1}{\overset{4}{\mathop \sum }}\,[\ln ({{t}_{i}})-({{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}})] \\
\frac{\partial \Lambda }{\partial {{\beta }_{1}}}& = & \frac{1}{{{({\sigma }')}^{2}}}\underset{i=1}{\overset{4}{\mathop \sum }}\,{{x}_{i1}}[\ln ({{t}_{i}})-({{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}})] \\
\frac{\partial \Lambda }{\partial {{\beta }_{2}}}& = & \frac{1}{{{({\sigma }')}^{2}}}\underset{i=1}{\overset{4}{\mathop \sum }}\,{{x}_{i2}}[\ln ({{t}_{i}})-({{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}})]
\end{align}\,\!</math>

Equating the <math>\partial \Lambda /\partial {{\theta }_{i}}\,\!</math> terms to zero returns the required estimates. The coefficients <math>{{\hat{\beta }}_{0}}\,\!</math>, <math>{{\hat{\beta }}_{1}}\,\!</math> and <math>{{\hat{\beta }}_{2}}\,\!</math> are obtained first as these are required to estimate <math>{{\hat{\sigma }}^{\prime }}\,\!</math>. Setting <math>\partial \Lambda /\partial {{\beta }_{0}}=0\,\!</math>:

::<math>\underset{i=1}{\overset{4}{\mathop \sum }}\,[\ln ({{t}_{i}})-({{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}})]=0\,\!</math>

Substituting the values of <math>{{t}_{i}}\,\!</math>, <math>{{x}_{i1}}\,\!</math> and <math>{{x}_{i2}}\,\!</math> from the [[Reliability_DOE_for_Life_Tests#Example|example's]] experiment design and corresponding data and simplifying:

::<math>\ln {{t}_{1}}+\ln {{t}_{2}}+\ln {{t}_{3}}+\ln {{t}_{4}}-4{{\beta }_{0}}=0\,\!</math>

Thus:

::<math>\begin{align}
{{{\hat{\beta }}}_{0}} & = & \frac{1}{4}(\ln {{t}_{1}}+\ln {{t}_{2}}+\ln {{t}_{3}}+\ln {{t}_{4}}) \\
& = & \frac{1}{4}(3.2958+3.2189+3.912+4.0073) \\
& = & 3.6085
\end{align}\,\!</math>

Setting <math>\partial \Lambda /\partial {{\beta }_{1}}=0\,\!</math>:

::<math>{{x}_{11}}\ln {{t}_{1}}+{{x}_{21}}\ln {{t}_{2}}+{{x}_{31}}\ln {{t}_{3}}+{{x}_{41}}\ln {{t}_{4}}-4{{\beta }_{1}}=0\,\!</math>

Thus:

::<math>\begin{align}
{{{\hat{\beta }}}_{1}} & = & \frac{1}{4}(-\ln {{t}_{1}}+\ln {{t}_{2}}-\ln {{t}_{3}}+\ln {{t}_{4}}) \\
& = & \frac{1}{4}(-3.2958+3.2189-3.912+4.0073) \\
& = & 0.0046
\end{align}\,\!</math>

Setting <math>\partial \Lambda /\partial {{\beta }_{2}}=0\,\!</math>:

::<math>{{x}_{12}}\ln {{t}_{1}}+{{x}_{22}}\ln {{t}_{2}}+{{x}_{32}}\ln {{t}_{3}}+{{x}_{42}}\ln {{t}_{4}}-4{{\beta }_{2}}=0\,\!</math>

Thus:

::<math>\begin{align}
{{{\hat{\beta }}}_{2}} & = & \frac{1}{4}(-\ln {{t}_{1}}-\ln {{t}_{2}}+\ln {{t}_{3}}+\ln {{t}_{4}}) \\
& = & \frac{1}{4}(-3.2958-3.2189+3.912+4.0073) \\
& = & 0.3512
\end{align}\,\!</math>

Knowing <math>{{\hat{\beta }}_{0}}\,\!</math>, <math>{{\hat{\beta }}_{1}}\,\!</math> and <math>{{\hat{\beta }}_{2}}\,\!</math>, <math>{{\hat{\sigma }}^{\prime }}\,\!</math> can now be obtained. Setting <math>\partial \Lambda /\partial {\sigma }'=0\,\!</math>:

::<math>-\frac{4}{{{\sigma }'}}+\frac{1}{{{({\sigma }')}^{3}}}\underset{i=1}{\overset{4}{\mathop \sum }}\,{{[\ln ({{t}_{i}})-(3.6085+0.0046{{x}_{i1}}+0.3512{{x}_{i2}})]}^{2}}=0\,\!</math>

Thus:

::<math>\begin{align}
{{{\hat{\sigma }}}^{\prime }} & = & \frac{1}{2}\sqrt{\underset{i=1}{\overset{4}{\mathop \sum }}\,{{[\ln ({{t}_{i}})-(3.6085+0.0046{{x}_{i1}}+0.3512{{x}_{i2}})]}^{2}}} \\
& = & 0.043
\end{align}\,\!</math>
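The closed-form estimates for this example can be checked numerically. The short Python sketch below uses the logarithmic times-to-failure quoted in the calculations and the coded factor levels implied by the signs in the expressions for <math>{{\hat{\beta }}_{1}}\,\!</math> and <math>{{\hat{\beta }}_{2}}\,\!</math>. The variable names are illustrative, and the shortcut of averaging signed log times is an assumption that holds only for complete data with orthogonal two level coding.

```python
import math

# Natural logarithms of the four times-to-failure, as quoted in the text
log_t = [3.2958, 3.2189, 3.912, 4.0073]

# Coded levels read off the signs in the beta_1 and beta_2 expressions
x1 = [-1, 1, -1, 1]   # factor A
x2 = [-1, -1, 1, 1]   # factor B
n = len(log_t)

# With orthogonal +/-1 coding, each score equation decouples
beta0 = sum(log_t) / n
beta1 = sum(x * y for x, y in zip(x1, log_t)) / n
beta2 = sum(x * y for x, y in zip(x2, log_t)) / n

# MLE of sigma' divides the residual sum of squares by n (not n - p)
residuals = [y - (beta0 + beta1 * a + beta2 * b)
             for y, a, b in zip(log_t, x1, x2)]
sigma = math.sqrt(sum(r * r for r in residuals) / n)

print(beta0, beta1, beta2, sigma)
```

Note that the estimate of <math>{\sigma }'\,\!</math> divides by the number of observations, so it is smaller than the unbiased root mean square error that a regression routine would typically report.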


===Likelihood Ratio Test===

The likelihood ratio test for factor <math>A\,\!</math> is conducted by using the likelihood value corresponding to the full model and the likelihood value when <math>A\,\!</math> is not included in the model. The likelihood value corresponding to the full model (in this case <math>\mu _{i}^{\prime }={{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}}\,\!</math>) is:

::<math>\begin{align}
L & = & \underset{i=1}{\overset{4}{\mathop \prod }}\,\left[ \frac{1}{{{t}_{i}}{{{\hat{\sigma }}}^{\prime }}\sqrt{2\pi }}{{e}^{-\frac{1}{2}{{\left( \frac{\ln ({{t}_{i}})-({{{\hat{\beta }}}_{0}}+{{{\hat{\beta }}}_{1}}{{x}_{i1}}+{{{\hat{\beta }}}_{2}}{{x}_{i2}})}{{{{\hat{\sigma }}}^{\prime }}} \right)}^{2}}}} \right] \\
& = & 0.000537311
\end{align}\,\!</math>

The corresponding logarithmic value is <math>\ln (L)=\ln (0.000537311)=-7.529\,\!</math>.

The likelihood value for the reduced model that does not contain factor <math>A\,\!</math> (in this case <math>\mu _{i}^{\prime }={{\beta }_{0}}+{{\beta }_{2}}{{x}_{i2}}\,\!</math>), with the remaining parameters re-estimated for that model, is:

::<math>\begin{align}
{{L}_{\tilde{\ }A}} & = & \underset{i=1}{\overset{4}{\mathop \prod }}\,\left[ \frac{1}{{{t}_{i}}{{{\hat{\sigma }}}^{\prime }}\sqrt{2\pi }}{{e}^{-\frac{1}{2}{{\left( \frac{\ln ({{t}_{i}})-({{{\hat{\beta }}}_{0}}+{{{\hat{\beta }}}_{2}}{{x}_{i2}})}{{{{\hat{\sigma }}}^{\prime }}} \right)}^{2}}}} \right] \\
& = & 0.000525337
\end{align}\,\!</math>

The corresponding logarithmic value is <math>\ln ({{L}_{\tilde{\ }A}})=\ln (0.000525337)=-7.552\,\!</math>.
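The two log-likelihood values can be approximated with a short script. The Python sketch below refits the full model and the model without factor <math>A\,\!</math> and evaluates the lognormal log-likelihood at each set of estimates. The helper name <code>fit_and_log_likelihood</code> is hypothetical, the fit assumes complete data with orthogonal two level coding, and because the inputs are the four-decimal logarithms quoted earlier, the results agree with the values in the text only to about three decimal places.

```python
import math

# Natural logarithms of the four times-to-failure, as quoted in the text
log_t = [3.2958, 3.2189, 3.912, 4.0073]
x1 = [-1, 1, -1, 1]   # coded levels of factor A
x2 = [-1, -1, 1, 1]   # coded levels of factor B

def fit_and_log_likelihood(columns):
    """Fit mu' = beta_0 + sum_j beta_j * x_j and return the maximized ln(L).

    Assumes complete data and orthogonal +/-1 columns, so that each
    coefficient is the average of the signed log times.
    """
    n = len(log_t)
    beta0 = sum(log_t) / n
    betas = [sum(x * y for x, y in zip(col, log_t)) / n for col in columns]
    fitted = [beta0 + sum(b * col[i] for b, col in zip(betas, columns))
              for i in range(n)]
    sigma = math.sqrt(sum((y - f) ** 2 for y, f in zip(log_t, fitted)) / n)
    # ln f(t_i) = -ln(t_i) - ln(sigma * sqrt(2*pi)) - z_i^2 / 2, with ln(t_i) = y
    return sum(-y - math.log(sigma * math.sqrt(2.0 * math.pi))
               - 0.5 * ((y - f) / sigma) ** 2
               for y, f in zip(log_t, fitted))

ll_full = fit_and_log_likelihood([x1, x2])   # model with A and B
ll_no_a = fit_and_log_likelihood([x2])       # reduced model without A
lr_a = -2.0 * (ll_no_a - ll_full)

print(ll_full, ll_no_a, lr_a)
```

Working with log-likelihoods directly, rather than with the very small likelihood values, avoids loss of precision when the sample size grows.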

Therefore, the likelihood ratio to test the significance of factor <math>A\,\!</math> is:

::<math>\begin{align}
L{{R}_{A}} & = & -2\ln \frac{{{L}_{\tilde{\ }A}}}{L} \\
& = & -2\ln \frac{0.000525337}{0.000537311} \\
& = & 0.0451
\end{align}\,\!</math>

The <math>p\,\!</math> value corresponding to <math>L{{R}_{A}}\,\!</math> is:

::<math>\begin{align}
p\text{ }value & = & 1-P(\chi _{1}^{2}<L{{R}_{A}}) \\
& = & 1-0.1682 \\
& = & 0.8318
\end{align}\,\!</math>

Assuming that the desired significance level for the [[Reliability_DOE_for_Life_Tests#Example|present experiment]] is 0.1, since <math>p\ value>0.1\,\!</math>, <math>{{H}_{0}}:{{\beta }_{1}}=0\,\!</math> cannot be rejected. At this significance level, the data provide no evidence that factor <math>A\,\!</math> affects the life of the product.

The likelihood ratio to test factor <math>B\,\!</math> can be calculated in a similar way as shown next: | |||

::<math>\begin{align}
L{{R}_{B}} & = & -2\ln \frac{{{L}_{\tilde{\ }B}}}{L} \\
& = & -2\ln \frac{1.17995E-07}{0.000537311} \\
& = & 16.8475
\end{align}\,\!</math>

The <math>p\,\!</math> value corresponding to <math>L{{R}_{B}}\,\!</math> is: | |||

::<math>\begin{align}
p\text{ }value & = & 1-P(\chi _{1}^{2}<L{{R}_{B}}) \\
& = & 1-0.99996 \\
& = & 0.00004
\end{align}\,\!</math>

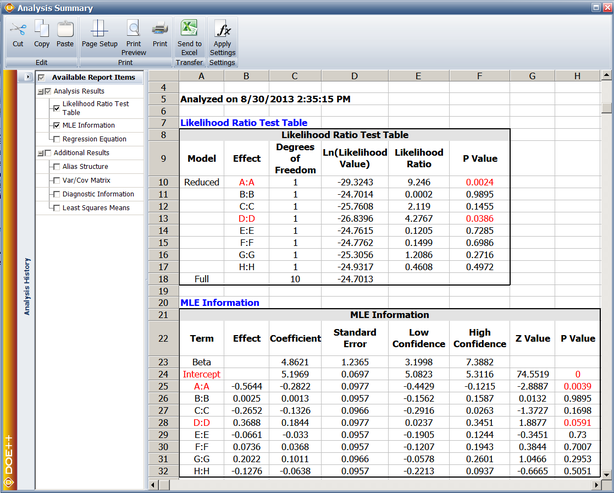

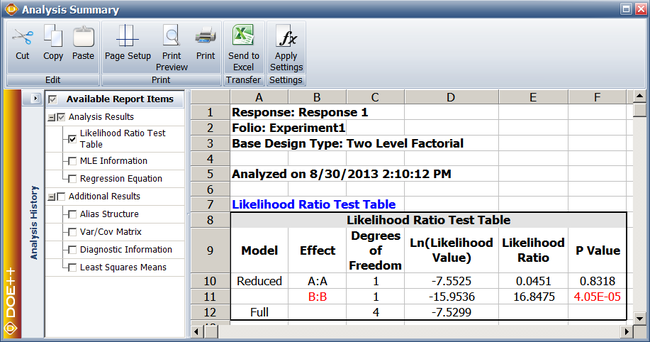

Since <math>p\ value<0.1\,\!</math>, <math>{{H}_{0}}:{{\beta }_{2}}=0\,\!</math> is rejected and it is concluded that factor <math>B\,\!</math> affects the life of the product. The previous calculation results are displayed as the Likelihood Ratio Test Table in the results obtained from the DOE folio as shown next.

[[Image:doe11_3.png|center|650px|Likelihood ratio test results from Weibull++ for the experiment in the [[Reliability_DOE_for_Life_Tests#Example|example]].]]
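The likelihood ratio calculations above can be reproduced with a few lines of code. The following is a minimal sketch in Python, not the method used by the DOE folio; the function name <code>likelihood_ratio_test</code> is hypothetical, and the test is assumed to have one degree of freedom (one coefficient removed), for which the chi-squared distribution function reduces to <math>P(\chi _{1}^{2}<x)=\operatorname{erf}(\sqrt{x/2})\,\!</math>.

```python
import math

def likelihood_ratio_test(l_reduced, l_full):
    """Return (LR, p value) for a reduced model with one coefficient removed.

    Hypothetical helper: assumes the statistic is referred to a chi-squared
    distribution with 1 degree of freedom.
    """
    lr = -2.0 * math.log(l_reduced / l_full)
    lr = max(lr, 0.0)  # guard against tiny negative values from rounding
    # For 1 degree of freedom: 1 - P(chi2 < lr) = erfc(sqrt(lr / 2))
    p_value = math.erfc(math.sqrt(lr / 2.0))
    return lr, p_value

# Likelihood values quoted in the example
L_FULL = 0.000537311
lr_a, p_a = likelihood_ratio_test(0.000525337, L_FULL)   # factor A removed
lr_b, p_b = likelihood_ratio_test(1.17995e-07, L_FULL)   # factor B removed

print(f"A: LR = {lr_a:.4f}, p = {p_a:.4f}")
print(f"B: LR = {lr_b:.4f}, p = {p_b:.5f}")
```

With a significance level of 0.1, the first <math>p\,\!</math> value is well above the threshold and the second is well below it, in agreement with the conclusions drawn for factors <math>A\,\!</math> and <math>B\,\!</math>.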


==Fisher Matrix Bounds on Parameters== | ==Fisher Matrix Bounds on Parameters== | ||

In general, the MLE estimates of the parameters are asymptotically normal. This means that for large sample sizes the distribution of the estimates from the same population would be very close to the normal distribution [[DOE_References|[Meeker and Escobar 1998]]]. If <math>\hat{\theta }\,\!</math> is the MLE estimate of any parameter, <math>\theta \,\!</math>, then the <math>100(1-\alpha )\%\,\!</math> two-sided confidence bounds on the parameter are:

::<math>\hat{\theta }-{{z}_{\alpha /2}}\cdot \sqrt{Var(\hat{\theta })}<\theta <\hat{\theta }+{{z}_{\alpha /2}}\cdot \sqrt{Var(\hat{\theta })}\,\!</math>

where <math>Var(\hat{\theta })\,\!</math> represents the variance of <math>\hat{\theta }\,\!</math> and <math>{{z}_{\alpha /2}}\,\!</math> is the critical value corresponding to a significance level of <math>\alpha /2\,\!</math> on the standard normal distribution. The variance of the parameter, <math>Var(\hat{\theta })\,\!</math>, is obtained using the Fisher information matrix. For <math>k\,\!</math> parameters, the Fisher information matrix is obtained from the log-likelihood function <math>\Lambda \,\!</math> as follows:

::<math>F=\left[ \begin{matrix}
-\frac{{{\partial }^{2}}\Lambda }{\partial \theta _{1}^{2}} & -\frac{{{\partial }^{2}}\Lambda }{\partial {{\theta }_{1}}\partial {{\theta }_{2}}} & ... & {} \\
-\frac{{{\partial }^{2}}\Lambda }{\partial {{\theta }_{1}}\partial {{\theta }_{2}}} & -\frac{{{\partial }^{2}}\Lambda }{\partial \theta _{2}^{2}} & ... & {} \\
. & . & ... & . \\
-\frac{{{\partial }^{2}}\Lambda }{\partial {{\theta }_{1}}\partial {{\theta }_{k}}} & . & ... & -\frac{{{\partial }^{2}}\Lambda }{\partial \theta _{k}^{2}} \\
\end{matrix} \right]\,\!</math>

The variance-covariance matrix is obtained by inverting the Fisher matrix <math>F\,\!</math>:

::<math>\left[ \begin{matrix}
Var({{{\hat{\theta }}}_{1}}) & Cov({{{\hat{\theta }}}_{1}},{{{\hat{\theta }}}_{2}}) & ... & {} \\
Cov({{{\hat{\theta }}}_{1}},{{{\hat{\theta }}}_{2}}) & Var({{{\hat{\theta }}}_{2}}) & ... & {} \\
. & . & ... & {} \\
Cov({{{\hat{\theta }}}_{1}},{{{\hat{\theta }}}_{k}}) & . & ... & Var({{{\hat{\theta }}}_{k}}) \\
\end{matrix} \right]={{\left[ \begin{matrix}
-\frac{{{\partial }^{2}}\Lambda }{\partial \theta _{1}^{2}} & -\frac{{{\partial }^{2}}\Lambda }{\partial {{\theta }_{1}}\partial {{\theta }_{2}}} & ... & {} \\
-\frac{{{\partial }^{2}}\Lambda }{\partial {{\theta }_{1}}\partial {{\theta }_{2}}} & -\frac{{{\partial }^{2}}\Lambda }{\partial \theta _{2}^{2}} & ... & {} \\
. & . & ... & {} \\
-\frac{{{\partial }^{2}}\Lambda }{\partial {{\theta }_{1}}\partial {{\theta }_{k}}} & . & ... & -\frac{{{\partial }^{2}}\Lambda }{\partial \theta _{k}^{2}} \\
\end{matrix} \right]}^{-1}}\,\!</math>

Once the variance-covariance matrix is known, the variance of any parameter can be obtained from the diagonal elements of the matrix. Note that if a parameter, <math>\theta \,\!</math>, can take only positive values, it is assumed that <math>\ln (\hat{\theta })\,\!</math> follows the normal distribution [[DOE_References|[Meeker and Escobar 1998]]]. The bounds on the parameter in this case are:

::<math>CI\text{ }on\text{ }\ln (\hat{\theta })=\ln (\hat{\theta })\pm {{z}_{\alpha /2}}\sqrt{Var(\ln (\hat{\theta }))}\,\!</math> | |||

Using <math>Var[f(\hat{\theta })]={{(\partial f/\partial \theta )}^{2}}\cdot Var(\hat{\theta })\,\!</math> we get <math>Var(\ln (\hat{\theta }))={{(1/\hat{\theta })}^{2}}Var(\hat{\theta })\,\!</math>. Substituting this value we have: | |||

::<math>\begin{align}
CI\text{ }on\text{ }\ln (\hat{\theta })& = & \ln (\hat{\theta })\pm {{z}_{\alpha /2}}\sqrt{{{(1/\hat{\theta })}^{2}}Var(\hat{\theta })} \\
& = & \ln (\hat{\theta })\pm ({{z}_{\alpha /2}}/\hat{\theta })\sqrt{Var(\hat{\theta })} \\
or\text{ }CI\text{ }on\text{ }\hat{\theta }&= & \exp [\ln (\hat{\theta })\pm ({{z}_{\alpha /2}}/\hat{\theta })\sqrt{Var(\hat{\theta })}] \\
& = & \hat{\theta }\cdot \exp [\pm ({{z}_{\alpha /2}}/\hat{\theta })\sqrt{Var(\hat{\theta })}]
\end{align}\,\!</math>

Knowing <math>Var(\hat{\theta })\,\!</math> from the variance-covariance matrix, the confidence bounds on <math>\hat{\theta }\,\!</math> can then be determined. | |||

Continuing with the present [[Reliability_DOE_for_Life_Tests#Example|example]], the confidence bounds on the MLE estimates of the parameters <math>{{\beta }_{0}}\,\!</math>, <math>{{\beta }_{1}}\,\!</math>, <math>{{\beta }_{2}}\,\!</math> and <math>{\sigma }'\,\!</math> can now be obtained. The Fisher information matrix for the example is: | |||

::<math>\begin{align} | ::<math>\begin{align} | ||

F & = & \left[ \begin{matrix} | |||

-\frac{{{\partial }^{2}}\Lambda }{\partial \beta _{0}^{2}} & -\frac{{{\partial }^{2}}\Lambda }{\partial {{\beta }_{0}}\partial {{\beta }_{1}}} & -\frac{{{\partial }^{2}}\Lambda }{\partial {{\beta }_{0}}\partial {{\beta }_{2}}} & -\frac{{{\partial }^{2}}\Lambda }{\partial {{\beta }_{0}}\partial {\sigma }'} \\ | -\frac{{{\partial }^{2}}\Lambda }{\partial \beta _{0}^{2}} & -\frac{{{\partial }^{2}}\Lambda }{\partial {{\beta }_{0}}\partial {{\beta }_{1}}} & -\frac{{{\partial }^{2}}\Lambda }{\partial {{\beta }_{0}}\partial {{\beta }_{2}}} & -\frac{{{\partial }^{2}}\Lambda }{\partial {{\beta }_{0}}\partial {\sigma }'} \\ | ||

{} & -\frac{{{\partial }^{2}}\Lambda }{\partial \beta _{1}^{2}} & -\frac{{{\partial }^{2}}\Lambda }{\partial {{\beta }_{1}}\partial {{\beta }_{2}}} & -\frac{{{\partial }^{2}}\Lambda }{\partial {{\beta }_{1}}\partial {\sigma }'} \\ | {} & -\frac{{{\partial }^{2}}\Lambda }{\partial \beta _{1}^{2}} & -\frac{{{\partial }^{2}}\Lambda }{\partial {{\beta }_{1}}\partial {{\beta }_{2}}} & -\frac{{{\partial }^{2}}\Lambda }{\partial {{\beta }_{1}}\partial {\sigma }'} \\ | ||

| Line 405: | Line 482: | ||

sym. & {} & {} & 4330.8227 \\ | sym. & {} & {} & 4330.8227 \\ | ||

\end{matrix} \right] | \end{matrix} \right] | ||

\end{align}</math> | \end{align}\,\!</math> | ||

The variance-covariance matrix can be obtained by taking the inverse of the Fisher matrix <math>F\,\!</math>: | |||

::<math>\left[ \begin{matrix}
Var({{{\hat{\beta }}}_{0}}) & Cov({{{\hat{\beta }}}_{0}},{{{\hat{\beta }}}_{1}}) & Cov({{{\hat{\beta }}}_{0}},{{{\hat{\beta }}}_{2}}) & Cov({{{\hat{\beta }}}_{0}},{{{\hat{\sigma }}}^{\prime }}) \\
{} & Var({{{\hat{\beta }}}_{1}}) & Cov({{{\hat{\beta }}}_{1}},{{{\hat{\beta }}}_{2}}) & Cov({{{\hat{\beta }}}_{1}},{{{\hat{\sigma }}}^{\prime }}) \\
{} & {} & Var({{{\hat{\beta }}}_{2}}) & Cov({{{\hat{\beta }}}_{2}},{{{\hat{\sigma }}}^{\prime }}) \\
sym. & {} & {} & Var({{{\hat{\sigma }}}^{\prime }}) \\
\end{matrix} \right]={{F}^{-1}}\,\!</math>

Inverting <math>F\,\!</math> returns the following matrix:

::<math>{{F}^{-1}}=\left[ \begin{matrix} | ::<math>{{F}^{-1}}=\left[ \begin{matrix} | ||

| Line 425: | Line 504: | ||

{} & {} & 4.617E-4 & 0 \\ | {} & {} & 4.617E-4 & 0 \\ | ||

sym. & {} & {} & 2.309E-4 \\ | sym. & {} & {} & 2.309E-4 \\ | ||

\end{matrix} \right]</math> | \end{matrix} \right]\,\!</math> | ||

Therefore, the variances of the parameter estimates are:

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 435: | Line 515: | ||

& Var({{{\hat{\beta }}}_{2}})= & 4.617E-4 \\ | & Var({{{\hat{\beta }}}_{2}})= & 4.617E-4 \\ | ||

& Var({{{\hat{\sigma }}}^{\prime }})= & 2.309E-4 | & Var({{{\hat{\sigma }}}^{\prime }})= & 2.309E-4 | ||

\end{align}</math> | \end{align}\,\!</math> | ||

Knowing the variance, the confidence bounds on the parameters can be calculated. For example, the 90% bounds (<math>\alpha =0.1\,\!</math>) on <math>{{\hat{\beta }}_{2}}\,\!</math> can be calculated as shown next:

::<math>\begin{align}
CI & = & {{{\hat{\beta }}}_{2}}\pm {{z}_{\alpha /2}}\cdot \sqrt{Var({{{\hat{\beta }}}_{2}})} \\
& = & {{{\hat{\beta }}}_{2}}\pm {{z}_{0.05}}\cdot \sqrt{Var({{{\hat{\beta }}}_{2}})} \\
& = & 0.3512\pm 1.645\cdot \sqrt{4.617E-4} \\
& = & 0.3512\pm 0.0354 \\
& = & 0.3158\text{ }and\text{ }0.3866
\end{align}\,\!</math>

The 90% bounds on <math>{\sigma }'\,\!</math> are (considering that <math>{\sigma }'\,\!</math> can only take positive values): | |||

::<math>\begin{align}
CI & = & {{{\hat{\sigma }}}^{\prime }}\cdot \exp [\pm ({{z}_{0.05}}/{{{\hat{\sigma }}}^{\prime }})\sqrt{Var({{{\hat{\sigma }}}^{\prime }})}] \\
& = & 0.043\cdot \exp [\pm (1.645/0.043)\sqrt{2.309E-4}] \\
& = & 0.024\text{ }and\text{ }0.077
\end{align}\,\!</math>
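The two kinds of bounds used above can be sketched in Python as follows. This is an illustration under stated assumptions rather than the implementation used in the DOE folio: the function names <code>normal_bounds</code> and <code>positive_bounds</code> are hypothetical, and the critical value <math>{{z}_{\alpha /2}}\,\!</math> is taken from the standard library's <code>statistics.NormalDist</code>.

```python
import math
from statistics import NormalDist

def z_critical(alpha):
    """Upper alpha/2 critical value of the standard normal distribution."""
    return NormalDist().inv_cdf(1.0 - alpha / 2.0)

def normal_bounds(estimate, variance, alpha=0.1):
    """Two-sided bounds for a parameter that may take any real value."""
    half_width = z_critical(alpha) * math.sqrt(variance)
    return estimate - half_width, estimate + half_width

def positive_bounds(estimate, variance, alpha=0.1):
    """Two-sided bounds for a positive parameter, built on ln(estimate)."""
    factor = math.exp(z_critical(alpha) * math.sqrt(variance) / estimate)
    return estimate / factor, estimate * factor

# Values quoted in the example (90% bounds, alpha = 0.1)
b2_low, b2_high = normal_bounds(0.3512, 4.617e-4)
sigma_low, sigma_high = positive_bounds(0.043, 2.309e-4)

print(f"beta_2: {b2_low:.4f} to {b2_high:.4f}")
print(f"sigma': {sigma_low:.3f} to {sigma_high:.3f}")
```

The log-transformed form keeps both bounds on <math>{\sigma }'\,\!</math> positive, which the symmetric form does not guarantee when the standard error is large relative to the estimate.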

The standard error for the parameters can be obtained by taking the positive square root of the variance. For example, the standard error for <math>{{\hat{\beta }}_{1}}\,\!</math> is: | |||

::<math>\begin{align}
se({{{\hat{\beta }}}_{1}}) & = & \sqrt{Var({{{\hat{\beta }}}_{1}})} \\
& = & \sqrt{4.617E-4} \\
& = & 0.0215
\end{align}\,\!</math>

The <math>z\,\!</math> statistic for <math>{{\hat{\beta }}_{1}}\,\!</math> is: | |||

::<math>\begin{align}
{{z}_{0}} & = & \frac{{{{\hat{\beta }}}_{1}}}{se({{{\hat{\beta }}}_{1}})} \\
& = & \frac{0.0046}{0.0215} \\
& = & 0.21
\end{align}\,\!</math>

The <math>p\,\!</math> value corresponding to this statistic based on the standard normal distribution is: | |||

::<math>\begin{align}
p\text{ }value & = & 2\cdot (1-P(Z\le |{{z}_{0}}|)) \\
& = & 2\cdot (1-0.58435) \\
& = & 0.8313
\end{align}\,\!</math>
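The standard error, <math>z\,\!</math> statistic and two-sided <math>p\,\!</math> value can be checked with a short Python sketch. The helper name <code>z_test</code> is hypothetical, and the inputs are the rounded values printed above, so the last digits differ slightly from the figures in the text, which were presumably computed from unrounded estimates.

```python
import math
from statistics import NormalDist

def z_test(estimate, variance):
    """Return (standard error, z statistic, two-sided p value)."""
    se = math.sqrt(variance)
    z0 = estimate / se
    p_value = 2.0 * (1.0 - NormalDist().cdf(abs(z0)))
    return se, z0, p_value

# Rounded values for beta_1 quoted in the example
se_b1, z0_b1, p_b1 = z_test(0.0046, 4.617e-4)

print(f"se = {se_b1:.4f}, z0 = {z0_b1:.2f}, p = {p_b1:.3f}")
```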

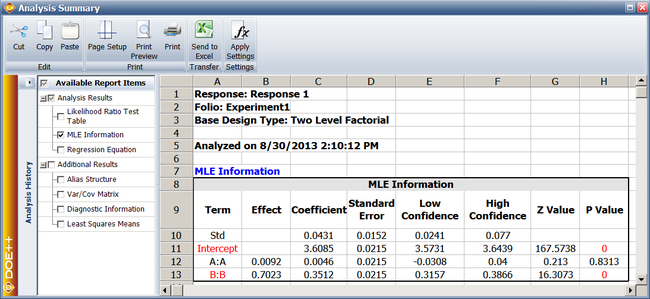

The previous calculation results are displayed as MLE Information in the results obtained from the DOE folio as shown next. | |||

[[Image:doe11_4.png|center|650px|MLE information from Weibull++.]] | |||

In the figure, the Effect corresponding to each factor is simply twice the MLE estimate of the coefficient for that factor. Generally, the <math>p\,\!</math> value corresponding to any coefficient in the MLE Information table should match the value obtained from the likelihood ratio test (displayed in the Likelihood Ratio Test table of the results). If the sample size is not large enough, as in the case of the present example, a difference may be seen in the two values. In such cases, the <math>p\,\!</math> value from the likelihood ratio test should be given preference. For the present example, the <math>p\,\!</math> value of 0.8318 for <math>{{\hat{\beta }}_{1}}\,\!</math>, obtained from the likelihood ratio test, would be preferred to the <math>p\,\!</math> value of 0.8313 displayed under MLE information. For details see [[DOE_References|[Meeker and Escobar 1998]]]. | |||

=R-DOE Analysis of Data Following the Weibull Distribution= | |||

The probability density function for the 2-parameter Weibull distribution is: | |||

::<math>f(T)=\frac{\beta }{\eta }{{\left( \frac{T}{\eta } \right)}^{\beta -1}}\exp \left[ -{{\left( \frac{T}{\eta } \right)}^{\beta }} \right]\,\!</math> | |||

where <math>\eta \,\!</math> is the scale parameter of the Weibull distribution and <math>\beta \,\!</math> is the shape parameter [[DOE_References|[Meeker and Escobar 1998, ReliaSoft 2007b]]]. To distinguish the Weibull shape parameter from the effect coefficients, the shape parameter is represented as <math>Beta\,\!</math> instead of <math>\beta \,\!</math> in the remainder of this chapter.

For data following the 2-parameter Weibull distribution, the life characteristic used in R-DOE analysis is the scale parameter, <math>\eta \,\!</math> [[DOE_References|[ReliaSoft 2007a, Wu 2000]]]. Since <math>\eta \,\!</math> represents life data that cannot take negative values, a logarithmic transformation is applied to it. The resulting model used in the R-DOE analysis for a two factor experiment with each factor at two levels can be written as follows: | |||

::<math>\ln ({{\eta }_{i}})={{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}}+{{\beta }_{12}}{{x}_{i1}}{{x}_{i2}}\,\!</math> | |||

where:

*<math>{{\eta }_{i}}\,\!</math> is the value of the scale parameter at the <math>i\,\!</math>th treatment combination of the two factors | |||

* <math>{{x}_{1}}\,\!</math> is the indicator variable representing the level of the first factor | |||

*<math>{{x}_{2}}\,\!</math> is the indicator variable representing the level of the second factor | |||

*<math>{{\beta }_{0}}\,\!</math> is the intercept term | |||

*<math>{{\beta }_{1}}\,\!</math> and <math>{{\beta }_{2}}\,\!</math> are the effect coefficients for the two factors | |||

*and <math>{{\beta }_{12}}\,\!</math> is the effect coefficient for the interaction of the two factors | |||

The model can be easily expanded to include other factors and their interactions. Note that when any data follows the Weibull distribution, the logarithmic transformation of the data follows the extreme-value distribution, whose probability density function is given as follows:

::<math>f(\ln (T))=\frac{1}{{{\sigma }''}}\exp \left[ \frac{\ln (T)-{\mu }''}{{{\sigma }''}}-\exp \left( \frac{\ln (T)-{\mu }''}{{{\sigma }''}} \right) \right]\,\!</math>

where <math>T\,\!</math> follows the Weibull distribution, <math>{\mu }''\,\!</math> is the location parameter of the extreme-value distribution and <math>{\sigma }''\,\!</math> is the scale parameter of the extreme-value distribution. The two equations given above show that for R-DOE analysis of life data that follows the Weibull distribution, the random error terms, <math>{{\epsilon }_{i}}\,\!</math>, will follow the extreme-value distribution (and not the normal distribution). Hence, regression techniques are not applicable even if the data is complete. Therefore, maximum likelihood estimation has to be used.

===Maximum Likelihood Estimation for the Weibull Distribution=== | ===Maximum Likelihood Estimation for the Weibull Distribution=== | ||

The likelihood function for complete data in R-DOE analysis of Weibull distributed life data is:

::<math>\begin{align}
{{L}_{failures}}& = & \underset{i=1}{\overset{{{F}_{e}}}{\mathop{\prod }}}\,f({{t}_{i}},{{\eta }_{i}}) \\
& = & \underset{i=1}{\overset{{{F}_{e}}}{\mathop{\prod }}}\,\left[ \frac{Beta}{{{\eta }_{i}}}{{\left( \frac{{{t}_{i}}}{{{\eta }_{i}}} \right)}^{Beta-1}}\exp \left[ -{{\left( \frac{{{t}_{i}}}{{{\eta }_{i}}} \right)}^{Beta}} \right] \right]
\end{align}\,\!</math>

where: | |||

*<math>{{F}_{e}}\,\!</math> is the total number of observed times-to-failure | |||

*<math>{{\eta }_{i}}\,\!</math> is the life characteristic at the <math>i\,\!</math>th treatment | |||

*<math>{{t}_{i}}\,\!</math> is the time of the <math>i\,\!</math>th failure | |||

For right censored data, the likelihood function is:

::<math>{{L}_{suspensions}}=\underset{i=1}{\overset{{{S}_{e}}}{\mathop{\prod }}}\,\left[ \exp \left[ -{{\left( \frac{{{t}_{i}}}{{{\eta }_{i}}} \right)}^{Beta}} \right] \right]\,\!</math>

where: | |||

*<math>{{S}_{e}}\,\!</math> is the total number of observed suspensions | |||

*<math>{{t}_{i}}\,\!</math> is the time of <math>i\,\!</math>th suspension | |||

For interval data, the likelihood function is:

::<math>{{L}_{interval}}=\underset{i=1}{\overset{FI}{\mathop{\prod }}}\,\left[ \exp \left[ -{{\left( \frac{t_{i}^{1}}{{{\eta }_{i}}} \right)}^{Beta}} \right]-\exp \left[ -{{\left( \frac{t_{i}^{2}}{{{\eta }_{i}}} \right)}^{Beta}} \right] \right]\,\!</math>

where: | |||

*<math>FI\,\!</math> is the total number of interval data | |||

*<math>t_{i}^{1}\,\!</math> is the beginning time of the <math>i\,\!</math>th interval | |||

*<math>t_{i}^{2}\,\!</math> is the end time of the <math>i\,\!</math>th interval | |||

In each of the likelihood functions, <math>{{\eta }_{i}}\,\!</math> is substituted based on the equation for <math>\ln ({{\eta }_{i}})\,\!</math> as:

::<math>{{\eta }_{i}}=\exp ({{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}}+...)\,\!</math> | |||

The complete likelihood function when all types of data (complete, right censored and interval) are present is:

::<math>L(Beta,{{\beta }_{0}},{{\beta }_{1}}...)={{L}_{failures}}\cdot {{L}_{suspensions}}\cdot {{L}_{interval}}\,\!</math>

Then the log-likelihood function is:

::<math>\Lambda (Beta,{{\beta }_{0}},{{\beta }_{1}}...)=\ln (L)\,\!</math> | |||

The MLE estimates are obtained by solving for parameters <math>(Beta,{{\beta }_{0}},{{\beta }_{1}}...)\,\!</math> so that: | |||

::<math>\begin{align}
& \frac{\partial \Lambda }{\partial Beta}= & 0 \\
& \frac{\partial \Lambda }{\partial {{\beta }_{0}}}= & 0 \\
& \frac{\partial \Lambda }{\partial {{\beta }_{1}}}= & 0 \\
& & ...
\end{align}\,\!</math>

Once the estimates are obtained, the significance of any parameter, <math>{{\theta }_{i}}\,\!</math>, can be assessed using the likelihood ratio test. Other results can also be obtained as discussed in [[Reliability_DOE_for_Life_Tests#Maximum_Likelihood_Estimation_for_the_Lognormal_Distribution|Maximum Likelihood Estimation for the Lognormal Distribution]] and [[Reliability_DOE_for_Life_Tests#Fisher_Matrix_Bounds_on_Parameters|Fisher Matrix Bounds on Parameters]]. | |||
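The log-likelihood function described in this section can be written out directly. The sketch below is a minimal Python illustration, not the routine used by Weibull++: the function names and the data layout (tuples of times and indicator values) are assumptions made for the example. The interval term is written as the probability of failing between the beginning time <math>t_{i}^{1}\,\!</math> and the end time <math>t_{i}^{2}\,\!</math>, so that its logarithm is defined. In practice the function would be passed to a numerical optimizer to find the MLE estimates.

```python
import math

def eta(coeffs, x):
    """Scale parameter from the log-linear model: exp(b0 + b1*x1 + b2*x2 + ...).

    coeffs[0] is the intercept; x holds the indicator values for one run.
    """
    return math.exp(coeffs[0] + sum(b * xi for b, xi in zip(coeffs[1:], x)))

def weibull_log_likelihood(shape, coeffs, failures=(), suspensions=(), intervals=()):
    """Log-likelihood for exact failures, right-censored and interval data.

    failures:    iterable of (t, x)
    suspensions: iterable of (t, x)
    intervals:   iterable of (t_begin, t_end, x)
    """
    total = 0.0
    for t, x in failures:
        e = eta(coeffs, x)
        total += math.log(shape / e) + (shape - 1.0) * math.log(t / e) - (t / e) ** shape
    for t, x in suspensions:
        total += -((t / eta(coeffs, x)) ** shape)
    for t_begin, t_end, x in intervals:
        e = eta(coeffs, x)
        prob = math.exp(-((t_begin / e) ** shape)) - math.exp(-((t_end / e) ** shape))
        total += math.log(prob)
    return total

# Hypothetical check: shape = 1 and intercept = 0 give eta = 1 (unit exponential)
check = weibull_log_likelihood(
    1.0, [0.0],
    failures=[(1.0, [])],
    suspensions=[(2.0, [])],
    intervals=[(1.0, 2.0, [])],
)
print(check)
```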

=R-DOE Analysis of Data Following the Exponential Distribution= | |||

The exponential distribution is a special case of the Weibull distribution when the shape parameter <math>Beta\,\!</math> is equal to 1. Substituting <math>Beta=1\,\!</math> in the probability density function for the 2-parameter Weibull distribution gives: | |||

::<math>\begin{align}
f(T)&= & \frac{1}{\eta }\exp \left( -\frac{T}{\eta } \right) \\
& = & \lambda \exp (-\lambda T)
\end{align}\,\!</math>

where <math>1/\eta \,\!</math> in the ''pdf'' has been replaced by <math>\lambda \,\!</math>. Parameter <math>\lambda \,\!</math> is called the failure rate [[DOE_References|[ReliaSoft 2007a]]]. Hence, R-DOE analysis for exponentially distributed data can be carried out by substituting <math>Beta=1\,\!</math> and replacing <math>1/\eta \,\!</math> by <math>\lambda \,\!</math> in the Weibull distribution.

=Model Diagnostics= | |||

Residual plots can be used to check if the model obtained, based on the MLE estimates, is a good fit to the data. Weibull++ DOE folios use standardized residuals for R-DOE analyses. If the data follows the lognormal distribution, then standardized residuals are calculated using the following equation: | |||

::<math>\begin{align}
{{{\hat{e}}}_{i}}& = & \frac{\ln ({{t}_{i}})-{{{\hat{\mu }}}_{i}}}{{{{\hat{\sigma }}}^{\prime }}} \\
& = & \frac{\ln ({{t}_{i}})-({{{\hat{\beta }}}_{0}}+{{{\hat{\beta }}}_{1}}{{x}_{i1}}+{{{\hat{\beta }}}_{2}}{{x}_{i2}}+...)}{{{{\hat{\sigma }}}^{\prime }}}
\end{align}\,\!</math>

For the probability plot, the standardized residuals are displayed on a normal probability plot. This is because under the assumed model for the lognormal distribution, the standardized residuals should follow a normal distribution with a mean of 0 and a standard deviation of 1.

For data that follows the Weibull distribution, the standardized residuals are calculated as shown next:

::<math>\begin{align}
{{{\hat{e}}}_{i}}& = & \hat{B}eta[\ln ({{t}_{i}})-\ln ({{{\hat{\eta }}}_{i}})] \\
& = & \hat{B}eta[\ln ({{t}_{i}})-({{{\hat{\beta }}}_{0}}+{{{\hat{\beta }}}_{1}}{{x}_{i1}}+{{{\hat{\beta }}}_{2}}{{x}_{i2}}+...)]
\end{align}\,\!</math>

The probability plot, in this case, is used to check if the residuals follow the standard extreme-value distribution (location parameter of 0 and scale parameter of 1). Note that in all residual plots, when an observation, <math>{{t}_{i}}\,\!</math>, is censored the corresponding residual is also censored.
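The two residual definitions can be sketched in Python as follows. The function names and the coefficient values in the usage lines are hypothetical and serve only to show the arithmetic; they are not estimates from any example in this chapter.

```python
import math

def linear_predictor(coeffs, x):
    """b0 + b1*x1 + b2*x2 + ... for one observation."""
    return coeffs[0] + sum(b * xi for b, xi in zip(coeffs[1:], x))

def lognormal_residual(t, coeffs, x, sigma):
    """Standardized residual when life is lognormal: (ln t - mu_i) / sigma'."""
    return (math.log(t) - linear_predictor(coeffs, x)) / sigma

def weibull_residual(t, coeffs, x, shape):
    """Standardized residual when life is Weibull: Beta * (ln t - ln eta_i)."""
    return shape * (math.log(t) - linear_predictor(coeffs, x))

# Hypothetical numbers: ln(t) = 2 and a fitted linear predictor of 1.5
t_obs = math.exp(2.0)
r_logn = lognormal_residual(t_obs, [1.0, 0.5], [1], sigma=0.25)
r_weib = weibull_residual(t_obs, [1.0, 0.5], [1], shape=2.0)
print(r_logn, r_weib)
```

A residual computed from a censored time should be carried forward as a censored value when the probability plot is built.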

=Application Examples= | |||

==Using R-DOE to Determine the Best Factor Settings== | |||

This example illustrates the use of R-DOE analysis to design reliability into a product by determining the optimal factor settings. An experiment was carried out to investigate the effect of five factors (each at two levels) on the reliability of fluorescent lights (Taguchi, 1987, p. 930). The factors, <math>A\,\!</math> through <math>E\,\!</math>, were studied using a <math>2^{5-2}\,\!</math> design (with the defining relations <math>D=-AC\,\!</math> and <math>E=-BC\,\!</math>) under the assumption that all interaction effects, except <math>AB\,\!</math> <math>(=DE)\,\!</math>, can be assumed to be inactive. For each treatment, two lights were tested (two replicates) with the readings taken every two days. The experiment was run for 20 days and, if a light had not failed by the 20th day, it was assumed to be a suspension. The experimental design and the corresponding failure times are shown next. | |||

[[Image:doe11_5_1.png|center|700px|The <math>2^{5-2}\,\!</math> experiment to study factors affecting the reliability of fluorescent lights: design]] | |||

[[Image:doe11_5.png|center|700px|The <math>2^{5-2}\,\!</math> experiment to study factors affecting the reliability of fluorescent lights: data]] | |||


The short duration of the experiment and failure times were probably because the lights were tested under conditions which resulted in stress higher than normal conditions. The failure of the lights was assumed to follow the lognormal distribution. | |||

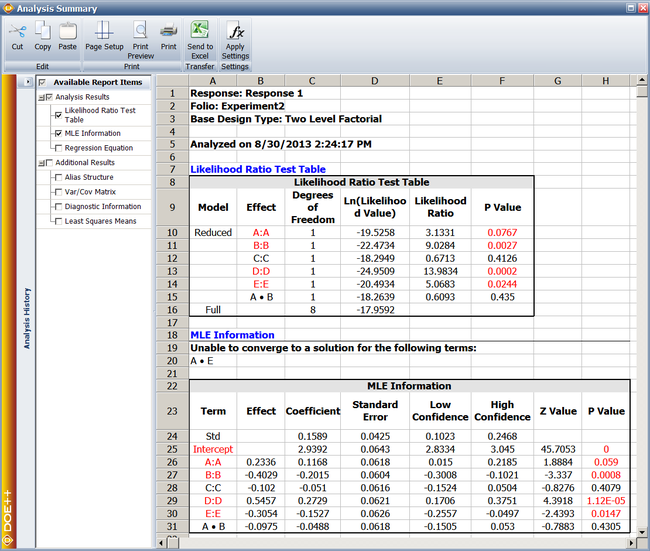

The analysis results from the Weibull++ DOE folio for this experiment are shown next. | |||

[[Image:doe11_6.png|center|650px|Results of the R-DOE analysis for the experiment.]] | |||


The results are obtained by selecting the main effects of the five factors and the interaction <math>AB\,\!</math>. The results show that factors <math>A\,\!</math>, <math>B\,\!</math>, <math>D\,\!</math> and <math>E\,\!</math> are active at a significance level of 0.1. The MLE estimates of the effect coefficients corresponding to these factors are <math>0.1168\,\!</math>, <math>-0.2015\,\!</math>, <math>0.2729\,\!</math> and <math>-0.1527\,\!</math>, respectively. Based on these coefficients, the best settings for these effects to improve the reliability of the fluorescent lights (by maximizing the response, which in this case is the failure time) are: | |||

*Factor <math>A\,\!</math> should be set at the higher level of <math>1\,\!</math> since its coefficient is positive | |||

*Factor <math>B\,\!</math> should be set at the lower level of <math>-1\,\!</math> since its coefficient is negative

*Factor <math>D\,\!</math> should be set at the higher level of <math>1\,\!</math> since its coefficient is positive | |||

*Factor <math>E\,\!</math> should be set at the lower level of <math>-1\,\!</math> since its coefficient is negative

Note that, since actual factor levels are not disclosed (presumably for proprietary reasons), predictions beyond the test conditions cannot be carried out in this case. | |||
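The rule used to pick the settings can be stated in a few lines of Python. This is a sketch under the assumption that only main effects are active, so that each factor can be set independently from the sign of its coefficient; the function name <code>best_settings</code> is hypothetical.

```python
def best_settings(coefficients):
    """Choose the coded level (+1 or -1) that increases ln(life) for each factor.

    Assumes a main-effects model: a positive coefficient favors the high
    level and a negative coefficient favors the low level.
    """
    return {factor: (1 if coeff > 0 else -1) for factor, coeff in coefficients.items()}

# MLE estimates of the active effect coefficients quoted above
active = {"A": 0.1168, "B": -0.2015, "D": 0.2729, "E": -0.1527}
settings = best_settings(active)
print(settings)

# Gain in the linear predictor relative to the design center (all factors at 0)
gain = sum(coeff * settings[factor] for factor, coeff in active.items())
print(round(gain, 4))
```

If an interaction between two of the factors were active, the settings would have to be chosen jointly for that pair instead of by sign alone.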

<div class="noprint"> | |||

{{Examples Box|DOE++ Examples|<p>More R-DOE examples are available! See also:</p> | |||

{{Examples Link External|http://www.reliasoft.com/doe/examples/rc11/index.htm|Two Level Fractional Factorial Reliability Design}}}} | |||

</div> | |||

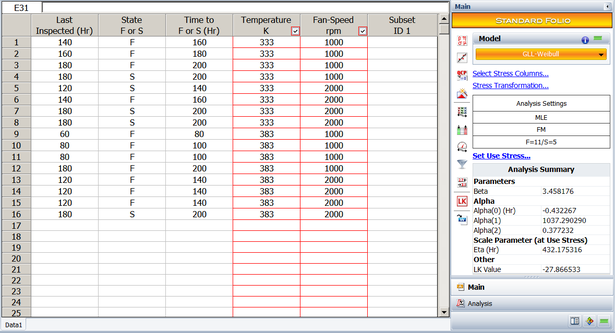

==Using R-DOE and ALTA to Estimate B10 Life== | |||

{{:Using_R-DOE_and_ALTA_to_Estimate_B10_Life}} | |||

=Single Factor R-DOE Analyses= | |||

Weibull++ DOE folios also allow for the analysis of single factor R-DOE experiments. This analysis is similar to the analysis of single factor designed experiments mentioned in [[One Factor Designs]]. In single factor R-DOE analysis, the focus is on discovering whether a change in the level of a factor affects reliability and how the factor levels differ from one another. The analysis models and calculations are similar to multi-factor R-DOE analysis.

==Example== | |||

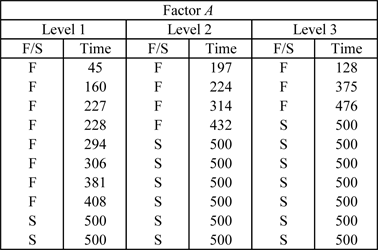

To illustrate single factor R-DOE analysis, consider the data in the table shown next, where 10 life data readings for a product are taken at each of the three levels of a certain factor, <math>A\,\!</math>. | |||

[[Image:doet11.1.png|center|400px|Data obtained from a single factor R-DOE experiment.]] | |||

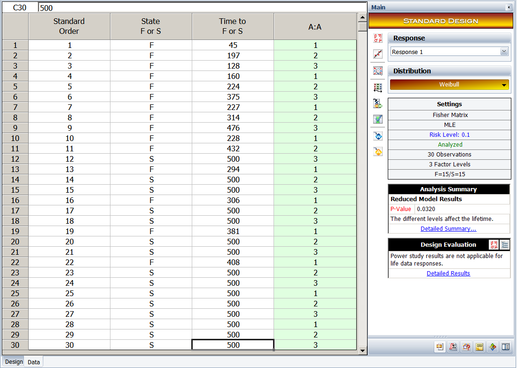

Factor <math>A\,\!</math> could be a stress that is thought to affect life or three different designs of the same product, or it could be the same product manufactured by three different machines or operators, etc. The goal of the experiment is to see if there is a change in life due to change in the levels of the factor. The design for this experiment is shown next. | |||

[[Image:doe11_10.png|center|517px|Experiment design.]] | |||

The life of the product is assumed to follow the Weibull distribution. Therefore, the life characteristic to be used in the R-DOE analysis is the scale parameter, <math>\eta \,\!</math>. Since factor <math>A\,\!</math> has three levels, the model for the life characteristic, <math>\eta \,\!</math>, is: | |||


::<math>\ln ({{\eta }_{i}})={{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}}\,\!</math> | |||

where <math>{{\beta }_{0}}\,\!</math> is the intercept, <math>{{\beta }_{1}}\,\!</math> is the effect coefficient for the first level of the factor (<math>{{\beta }_{1}}\,\!</math> is represented as "A[1]" in Weibull++ DOE folios) and <math>{{\beta }_{2}}\,\!</math> is the effect coefficient for the second level of the factor (<math>{{\beta }_{2}}\,\!</math> is represented as "A[2]" in Weibull++ DOE folios). Two indicator variables, <math>{{x}_{1}}\,\!</math> and <math>{{x}_{2}}\,\!</math>, are then used to represent the three levels of factor <math>A\,\!</math> such that:

::<math>\begin{align}
& {{x}_{1}}= & 1,\text{ }{{x}_{2}}=0\text{ Level 1 Effect} \\
& {{x}_{1}}= & 0,\text{ }{{x}_{2}}=1\text{ Level 2 Effect} \\
& {{x}_{1}}= & -1,\text{ }{{x}_{2}}=-1\text{ Level 3 Effect}
\end{align}\,\!</math>
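The indicator coding can be illustrated with a short Python sketch. The coding for level 1, <math>({{x}_{1}},{{x}_{2}})=(1,0)\,\!</math>, is assumed here from the usual effect-coding convention, and the coefficient values are hypothetical. Under this coding the level 3 effect equals <math>-({{\beta }_{1}}+{{\beta }_{2}})\,\!</math>, so the intercept is the average of <math>\ln (\eta )\,\!</math> over the three levels.

```python
import math

# Effect coding for a three-level factor (level 1 coding assumed, see text)
EFFECT_CODING = {1: (1, 0), 2: (0, 1), 3: (-1, -1)}

def ln_eta(level, b0, b1, b2):
    """ln(eta) at a given level: b0 + b1*x1 + b2*x2."""
    x1, x2 = EFFECT_CODING[level]
    return b0 + b1 * x1 + b2 * x2

# Hypothetical coefficients, for illustration only
b0, b1, b2 = 5.0, 0.3, -0.1
ln_etas = {level: ln_eta(level, b0, b1, b2) for level in EFFECT_CODING}
etas = {level: math.exp(value) for level, value in ln_etas.items()}

print(ln_etas)
print(sum(ln_etas.values()) / 3.0)  # average of ln(eta) over the levels
```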

The following hypothesis test needs to be carried out in this example:

::<math>\begin{align}
& {{H}_{0}}: & {{\theta }_{i}}=0 \\
& {{H}_{1}}: & {{\theta }_{i}}\ne 0
\end{align}\,\!</math>

where <math>{{\theta }_{i}}=[{{\beta }_{1}},{{\beta }_{2}}{]}'\,\!</math>. The statistic for this test is:

::<math>LR=-2\ln \frac{L({{{\hat{\theta }}}_{(-i)}})}{L(\hat{\theta })}\,\!</math> | |||

< | where <math>L(\hat{\theta })\,\!</math> is the value of the likelihood function corresponding to the full model, and <math>L({{\hat{\theta }}_{(-i)}})\,\!</math> is the likelihood value for the reduced model. To calculate the statistic for this test, the MLE estimates of the parameters must be obtained. | ||

===MLE Estimates=== | ===MLE Estimates=== | ||

Following the procedure used in the analysis of multi-factor R-DOE experiments, MLE estimates of the parameters are obtained by differentiating the log-likelihood function <math>\Lambda </math> : | Following the procedure used in the analysis of multi-factor R-DOE experiments, MLE estimates of the parameters are obtained by differentiating the log-likelihood function <math>\Lambda \,\!</math>: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 737: | Line 818: | ||

& & +\underset{i\epsilon 1}{\overset{{{S}_{e}}}{\mathop{\sum }}}\,\left[ -{{\left( \frac{{{t}_{i}}}{{{\eta }_{i}}} \right)}^{Beta}} \right] \\ | & & +\underset{i\epsilon 1}{\overset{{{S}_{e}}}{\mathop{\sum }}}\,\left[ -{{\left( \frac{{{t}_{i}}}{{{\eta }_{i}}} \right)}^{Beta}} \right] \\ | ||

& & +\underset{i\epsilon 1}{\overset{FI}{\mathop{\sum }}}\,\ln \left[ \exp \left[ -{{\left( \frac{t_{i}^{2}}{{{\eta }_{i}}} \right)}^{Beta}} \right]-\exp \left[ -{{\left( \frac{t_{i}^{1}}{{{\eta }_{i}}} \right)}^{Beta}} \right] \right] | & & +\underset{i\epsilon 1}{\overset{FI}{\mathop{\sum }}}\,\ln \left[ \exp \left[ -{{\left( \frac{t_{i}^{2}}{{{\eta }_{i}}} \right)}^{Beta}} \right]-\exp \left[ -{{\left( \frac{t_{i}^{1}}{{{\eta }_{i}}} \right)}^{Beta}} \right] \right] | ||

\end{align}</math> | \end{align}\,\!</math> | ||

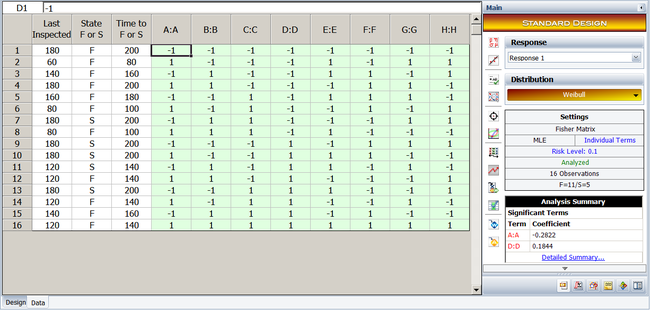

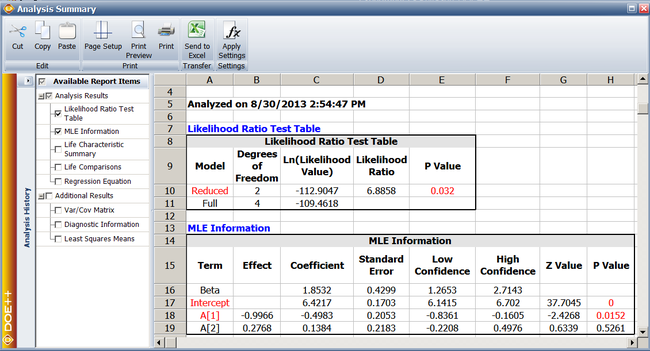

Substituting <math>{{\eta }_{i}}</math> from | Substituting <math>{{\eta }_{i}}\,\!</math> from the model for the life characteristic and setting the partial derivatives <math>\partial \Lambda /\partial {{\theta }_{i}}\,\!</math> to zero, the parameter estimates are obtained as <math>\hat{B}eta=1.8532\,\!</math>, <math>{{\hat{\beta }}_{0}}=6.4217\,\!</math>, <math>{{\hat{\beta }}_{1}}=-0.4983\,\!</math> and <math>{{\hat{\beta }}_{2}}=0.1384\,\!</math>. These parameters are shown in the MLE Information table in the analysis results, shown next. | ||

[[Image: | [[Image:doe11_11.png|center|650px|MLE results for the experiment in the example.]] | ||

===Likelihood Ratio Test=== | |||

Knowing the MLE estimates, the likelihood ratio test for the significance of factor <math>A\,\!</math> can be carried out. The likelihood value for the full model, <math>L(\hat{\theta })\,\!</math>, is the value of the likelihood function corresponding to the model <math>\ln ({{\eta }_{i}})={{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}}\,\!</math>: | |||

::<math>\begin{align} | ::<math>\begin{align} | ||

L(\hat{\theta })& = & L(\hat{B}eta,{{{\hat{\beta }}}_{0}},{{{\hat{\beta }}}_{1}},{{{\hat{\beta }}}_{2}}) \\ | |||

& = & 2.9E-48 | & = & 2.9E-48 | ||

\end{align}</math> | \end{align}\,\!</math> | ||

The likelihood value for the reduced model, <math>L({{\hat{\theta }}_{(-i)}})\,\!</math>, is the value of the likelihood function corresponding to the model <math>\ln ({{\eta }_{i}})={{\beta }_{0}}\,\!</math>: | |||

::<math>\begin{align} | ::<math>\begin{align} | ||

L({{{\hat{\theta }}}_{(-i)}})&= & L(\hat{B}eta,{{{\hat{\beta }}}_{0}}) \\ | |||

& = & 9.2E-50 | & = & 9.2E-50 | ||

\end{align}</math> | \end{align}\,\!</math> | ||

Then the likelihood ratio is: | Then the likelihood ratio is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

LR& = & -2\ln \frac{L({{{\hat{\theta }}}_{(-i)}})}{L(\hat{\theta })} \\ | |||

& = & 6.8858 | & = & 6.8858 | ||

\end{align}</math> | \end{align}\,\!</math> | ||

If the null hypothesis, <math>{{H}_{0}}</math> , is true then the likelihood ratio will follow the | If the null hypothesis, <math>{{H}_{0}}\,\!</math>, is true, then the likelihood ratio approximately (asymptotically) follows the chi-squared distribution. The number of degrees of freedom for this distribution is equal to the difference in the number of parameters between the full and the reduced model. In this case, this difference is 2. The <math>p\,\!</math> value corresponding to the likelihood ratio on the chi-squared distribution with two degrees of freedom is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

p\text{ }value & = & 1-P(\chi _{2}^{2}<LR) \\ | |||

& = & 1-0.968 \\ | & = & 1-0.968 \\ | ||

& = & 0.032 | & = & 0.032 | ||

\end{align}</math> | \end{align}\,\!</math> | ||
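The <math>p\,\!</math> value calculation above can be checked with a short Python sketch. It relies on the fact that the chi-squared distribution with two degrees of freedom has the closed-form survival function <math>\exp (-x/2)\,\!</math>, so no statistics library is needed. The function names are hypothetical, and the log-likelihood inputs in the last lines are made-up numbers used only to show the call.

```python
import math


def lr_from_loglik(loglik_reduced, loglik_full):
    """Likelihood ratio statistic LR = -2 ln(L_reduced / L_full),
    computed from log-likelihood values for numerical stability."""
    return -2.0 * (loglik_reduced - loglik_full)


def chi2_sf_2df(x):
    """1 - P(chi-squared with 2 df < x); valid for 2 degrees of freedom only."""
    return math.exp(-x / 2.0)


lr = 6.8858                      # likelihood ratio from the example
p_value = chi2_sf_2df(lr)
print(round(p_value, 3))

significance = 0.1
print("factor A significant:", p_value < significance)

# Hypothetical log-likelihood values, purely to illustrate the helper
print(lr_from_loglik(-10.0, -8.0))
```

For a different number of degrees of freedom the closed form no longer applies and a general chi-squared distribution function would be needed.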

Assuming that the desired significance is 0.1, since <math>p | Assuming that the desired significance is 0.1, since <math>p\ value<0.1\,\!</math>, <math>{{H}_{0}}:{{\theta }_{i}}=0\,\!</math> is rejected and it is concluded that, at a significance level of 0.1, at least one of the parameters, <math>{{\beta }_{1}}\,\!</math> or <math>{{\beta }_{2}}\,\!</math>, is non-zero. Therefore, factor <math>A\,\!</math> affects the life of the product. This result is shown in the Likelihood Ratio Test table in the analysis results. | ||

Additional results for single factor R-DOE analysis obtained from DOE | |||

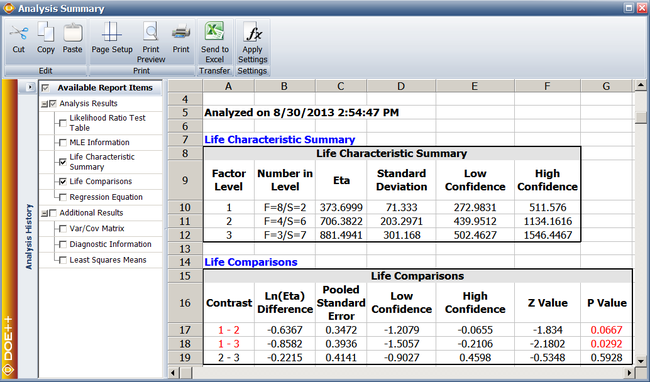

Additional results for single factor R-DOE analysis obtained from the DOE folio include information on the life characteristic and comparison of life characteristics at different levels of the factor. | |||

===Life Characteristic Summary Results=== | ===Life Characteristic Summary Results=== | ||

Results in the Life Characteristic Summary table include information about the life characteristic corresponding to each treatment level of the factor. If <math>\ln ({{\eta }_{i}})</math> is represented as <math>E({{y}_{i}})</math> , then | Results in the Life Characteristic Summary table include information about the life characteristic corresponding to each treatment level of the factor. If <math>\ln ({{\eta }_{i}})\,\!</math> is represented as <math>E({{y}_{i}})\,\!</math>, then the model for the life characteristic can be written as: | ||

::<math>E({{y}_{i}})={{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}}</math> | |||

::<math>E({{y}_{i}})={{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}{{x}_{i2}}\,\!</math> | |||

The respective equations for all three treatment levels for a single replicate of the experiment can be expressed in matrix notation as: | The respective equations for all three treatment levels for a single replicate of the experiment can be expressed in matrix notation as: | ||

::<math>E(y)=X\beta </math> | |||

::<math>E(y)=X\beta \,\!</math> | |||

where: | where: | ||

::<math>E(y)=\left[ \begin{matrix} | ::<math>E(y)=\left[ \begin{matrix} | ||

| Line 809: | Line 896: | ||

{{\beta }_{1}} \\ | {{\beta }_{1}} \\ | ||

{{\beta }_{2}} \\ | {{\beta }_{2}} \\ | ||

\end{matrix} \right]</math> | \end{matrix} \right]\,\!</math> | ||

Knowing <math>{{\hat{\beta }}_{0}}\,\!</math>, <math>{{\hat{\beta }}_{1}}\,\!</math> and <math>{{\hat{\beta }}_{2}}\,\!</math>, the predicted value of the life characteristic at any level can be obtained. For example, for the second level: | |||

::<math>\begin{align} | ::<math>\begin{align} | ||

E({{{\hat{y}}}_{2}})&= & {{{\hat{\beta }}}_{0}}+{{{\hat{\beta }}}_{2}} \\ | |||

or\text{ }\ln ({{{\hat{\eta }}}_{2}})& = & {{{\hat{\beta }}}_{0}}+{{{\hat{\beta }}}_{2}} \\ | |||

& = & 6.421743+0.138414 \\ | & = & 6.421743+0.138414 \\ | ||

& = & 6.560157 | & = & 6.560157 | ||

\end{align}</math> | \end{align}\,\!</math> | ||

Thus: | Thus: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

{{{\hat{\eta }}}_{2}} & = & \exp (6.560157) \\ | |||

& = & 706.3828 | & = & 706.3828 | ||

\end{align}</math> | \end{align}\,\!</math> | ||

The variance for the predicted values of life characteristic can be calculated using the following equation: | The variance for the predicted values of life characteristic can be calculated using the following equation: | ||

::<math>Var(y)=XVar(\hat{\beta }){{X}^{\prime }}\,\!</math> | |||

where <math>Var(\hat{\beta })\,\!</math> is the variance-covariance matrix for <math>{{\hat{\beta }}_{0}}\,\!</math>, <math>{{\hat{\beta }}_{1}}\,\!</math> and <math>{{\hat{\beta }}_{2}}\,\!</math>. Substituting the required values: | |||

<center><math>\begin{align} | <center><math>\begin{align} | ||

Var(\hat{y})& = & \left[ \begin{matrix} | |||

1 & 1 & 0 \\ | 1 & 1 & 0 \\ | ||

1 & 0 & 1 \\ | 1 & 0 & 1 \\ | ||

| Line 856: | Line 947: | ||

-0.0009 & 0.0141 & 0.1167 \\ | -0.0009 & 0.0141 & 0.1167 \\ | ||

\end{matrix} \right] | \end{matrix} \right] | ||

\end{align}</math></center> | \end{align}\,\!</math></center> | ||

From the previous matrix, <math>Var({{\hat{y}}_{2}})=0.0829</math> . Therefore, the 90% confidence interval ( <math>\alpha =0.1</math> ) on <math>{{\hat{y}}_{2}}</math> is: | From the previous matrix, <math>Var({{\hat{y}}_{2}})=0.0829\,\!</math>. Therefore, the 90% confidence interval (<math>\alpha =0.1\,\!</math>) on <math>{{\hat{y}}_{2}}\,\!</math> is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

CI\text{ }on\text{ }{{{\hat{y}}}_{2}}& = & E({{{\hat{y}}}_{2}})\pm {{z}_{\alpha /2}}\sqrt{Var({{{\hat{y}}}_{2}})} \\ | |||

& = & E({{{\hat{y}}}_{2}})\pm {{z}_{0.05}}\sqrt{Var({{{\hat{y}}}_{2}})} \\ | & = & E({{{\hat{y}}}_{2}})\pm {{z}_{0.05}}\sqrt{Var({{{\hat{y}}}_{2}})} \\ | ||

& = & 6.560157\pm 1.645\sqrt{0.0829} \\ | & = & 6.560157\pm 1.645\sqrt{0.0829} \\ | ||

& = & 6.0867\text{ }and\text{ }7.0336 | & = & 6.0867\text{ }and\text{ }7.0336 | ||

\end{align}</math> | \end{align}\,\!</math> | ||

Since <math>{{\hat{y}}_{2}}=\ln ({{\hat{\eta }}_{2}}),</math> the 90% confidence interval on <math>{{\hat{\eta }}_{2}}</math> is: | Since <math>{{\hat{y}}_{2}}=\ln ({{\hat{\eta }}_{2}}),\,\!</math> the 90% confidence interval on <math>{{\hat{\eta }}_{2}}\,\!</math> is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

CI\text{ }on\text{ }{{{\hat{\eta }}}_{2}}&= & \exp (6.0867)\text{ }and\text{ }\exp (7.0336) \\ | |||

& = & 439.9\text{ }and\text{ }1134.1 | & = & 439.9\text{ }and\text{ }1134.1 | ||

\end{align}</math> | \end{align}\,\!</math> | ||
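The prediction and confidence bounds for the second level can be reproduced with the following Python sketch, which uses only the standard library. The variable names are illustrative. Because the variance is entered as the rounded value 0.0829, the bounds may differ from the values shown above in the fourth decimal place.

```python
import math
from statistics import NormalDist

# Estimates taken from the example
beta0_hat = 6.421743
beta2_hat = 0.138414
var_y2 = 0.0829          # Var(y2_hat), rounded as reported in the text
alpha = 0.10             # 90% two-sided confidence interval

# Predicted log life characteristic and life characteristic at level 2
y2_hat = beta0_hat + beta2_hat
eta2_hat = math.exp(y2_hat)

# Normal-approximation bounds on y2_hat, then back-transformed to eta
z = NormalDist().inv_cdf(1 - alpha / 2)
half_width = z * math.sqrt(var_y2)
ci_log = (y2_hat - half_width, y2_hat + half_width)
ci_eta = (math.exp(ci_log[0]), math.exp(ci_log[1]))

print(f"ln(eta2) = {y2_hat:.6f}, eta2 = {eta2_hat:.4f}")
print(f"90% CI on ln(eta2): {ci_log[0]:.4f} to {ci_log[1]:.4f}")
print(f"90% CI on eta2:     {ci_eta[0]:.1f} to {ci_eta[1]:.1f}")
```

The bounds are computed on the logarithmic scale and then exponentiated, which is why the interval on <math>{{\hat{\eta }}_{2}}\,\!</math> is not symmetric about the point estimate.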

Results for other levels can be calculated in a similar manner and are shown | Results for other levels can be calculated in a similar manner and are shown next. | ||

[[Image:doe11_12.png|center|650px|Life characteristic results for the experiment.]] | |||

===Life Comparisons Results=== | ===Life Comparisons Results=== | ||

Results under Life Comparisons include information on how life is different at a level in comparison to any other level of the factor. For example, the difference between the predicted values of life at levels 1 and 2 is (in terms of the logarithmic transformation): | Results under Life Comparisons include information on how life is different at a level in comparison to any other level of the factor. For example, the difference between the predicted values of life at levels 1 and 2 is (in terms of the logarithmic transformation): | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

E({{{\hat{y}}}_{1}})-E({{{\hat{y}}}_{2}})&= & 5.923453-6.560157 \\ | |||

& = & -0.6367 | & = & -0.6367 | ||

\end{align}</math> | \end{align}\,\!</math> | ||

| Line 899: | Line 989: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

Pooled\text{ }Std.\text{ }Error&= & \sqrt{Var({{{\hat{y}}}_{1}}-{{{\hat{y}}}_{2}})} \\ | |||

& = & \sqrt{Var({{{\hat{y}}}_{1}})+Var({{{\hat{y}}}_{2}})} \\ | & = & \sqrt{Var({{{\hat{y}}}_{1}})+Var({{{\hat{y}}}_{2}})} \\ | ||

& = & \sqrt{0.0364+0.0829} \\ | & = & \sqrt{0.0364+0.0829} \\ | ||

& = & 0.3454 | & = & 0.3454 | ||

\end{align}</math> | \end{align}\,\!</math> | ||
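As a quick numerical check, the pooled standard error can be computed in Python from the variances reported in this example, <math>Var({{\hat{y}}_{1}})=0.0364\,\!</math> and <math>Var({{\hat{y}}_{2}})=0.0829\,\!</math>. The helper name <code>pooled_std_error</code> is hypothetical. Its optional covariance argument defaults to zero, which corresponds to treating the two predictions as independent; the second call uses the covariance of <math>-0.0006\,\!</math> from this example.

```python
import math


def pooled_std_error(var_a, var_b, cov_ab=0.0):
    """Standard error of the difference of two estimates:
    sqrt(Var(a) + Var(b) - 2 Cov(a, b))."""
    return math.sqrt(var_a + var_b - 2.0 * cov_ab)


var_y1 = 0.0364
var_y2 = 0.0829

se_independent = pooled_std_error(var_y1, var_y2)
se_with_cov = pooled_std_error(var_y1, var_y2, cov_ab=-0.0006)

print(round(se_independent, 4))
print(round(se_with_cov, 4))
```

A negative covariance increases the standard error of the difference, while a positive covariance would reduce it.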

If the covariance between <math>{{\hat{y}}_{1}}</math> and <math>{{\hat{y}}_{2}}</math> is taken into account, then the pooled standard error is: | If the covariance between <math>{{\hat{y}}_{1}}\,\!</math> and <math>{{\hat{y}}_{2}}\,\!</math> is taken into account, then the pooled standard error is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

Pooled\text{ }Std.\text{ }Error&= & \sqrt{Var({{{\hat{y}}}_{1}}-{{{\hat{y}}}_{2}})} \\ | |||

& = & \sqrt{Var({{{\hat{y}}}_{1}})+Var({{{\hat{y}}}_{2}})-2\cdot Cov({{{\hat{y}}}_{1}},{{{\hat{y}}}_{2}})} \\ | & = & \sqrt{Var({{{\hat{y}}}_{1}})+Var({{{\hat{y}}}_{2}})-2\cdot Cov({{{\hat{y}}}_{1}},{{{\hat{y}}}_{2}})} \\ | ||

& = & \sqrt{0.0364+0.0829-2\cdot (-0.0006)} \\ | & = & \sqrt{0.0364+0.0829-2\cdot (-0.0006)} \\ | ||

& = & 0.3471 | & = & 0.3471 | ||

\end{align}</math> | \end{align}\,\!</math> | ||

This is the value displayed by | This is the value displayed by the Weibull++ DOE folio. Knowing the pooled standard error, the confidence interval on the difference can be calculated. The 90% confidence interval on the difference in (logarithmic) life between levels 1 and 2 of factor <math>A\,\!</math> is: | ||