Statistical Background

Revisions by Dingzhou Cao and Lisa Hacker


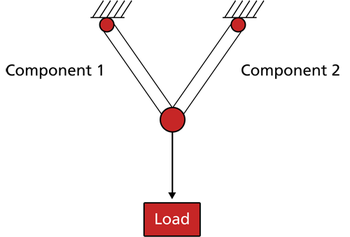


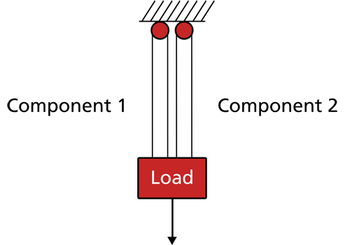


A Brief Introduction to Life-Stress Relationships

In some cases, one or more characteristics of a life distribution change in response to an outside factor. One may then want a model that includes both the life distribution and a description of how that characteristic changes. In reliability, the most common "outside factor" is the stress applied to the component. In system analysis, stress comes into play when dealing with units in a load sharing configuration. When components of a system operate in a load sharing configuration, each component supports a portion of the total load for that aspect of the system. When one or more load sharing components fail, the operating components must take on an increased portion of the load to compensate for the failure(s). Therefore, the reliability of each component depends on the performance of the other components in the load sharing configuration.

Traditionally, a reliability block diagram assumes independence, so an item's failure characteristics can be fully described by its failure distribution. If the configuration includes load sharing redundancy, however, a single failure distribution is no longer sufficient. The item fails differently when operating under different loads, and the load applied to it varies with the performance of the other component(s) in the configuration. A more complex model is therefore needed to fully describe the failure characteristics of such blocks: it must describe both the effect of the load (or stress) on the life of the product and the probability of failure of the item at the specified load. The models, theory and methodology used in Quantitative Accelerated Life Testing (QALT) data analysis can be used to obtain such a model. The objective of QALT analysis is to relate the applied stress to life (or to a life distribution); similarly, in the load sharing case, one wants to relate the applied stress (or load) to life. The following figure illustrates the probability density function (pdf) for a standard item, where only a single distribution is required.

![Single pdf](chp3LSR1.png)

The next figure represents a load sharing item by using a 3-D surface that illustrates the pdf, load and time.

![pdf and life-stress relationship](Pdf_lifestress1.png)

The following figure shows the reliability curve for a load sharing item vs. the applied load.

![Reliability and life-stress relationship](chp3reliabilityvsloadsurface.png)

To formulate the model, a life distribution is combined with a life-stress relationship. The distribution choice is based on the product's failure characteristics, while the life-stress relationship is based on how the stress affects the life characteristics. The following figure graphically shows these elements of the formulation.

![A life distribution and a life-stress relationship](chp3formulation.png)

The next figure shows the combination of both an underlying distribution and a life-stress model by plotting a pdf against both time and stress.

![pdf vs. time and stress](chp3formulation2.png)

The underlying life distribution can be any life distribution; the most commonly used are the Weibull, the exponential and the lognormal. The life-stress relationship describes how a specific life characteristic changes with the application of stress. The life characteristic can be any life measure, such as the mean, the median, [math]\displaystyle{ R(x)\,\! }[/math] or [math]\displaystyle{ F(x)\,\! }[/math], and it is expressed as a function of stress. Which life characteristic is considered depends on the assumed underlying life distribution. Typical life characteristics for some distributions are shown in the next table.

| Distribution | Parameters | Life Characteristic |
|---|---|---|
| Weibull | [math]\displaystyle{ \beta\,\! }[/math]\*, [math]\displaystyle{ \eta\,\! }[/math] | Scale parameter, [math]\displaystyle{ \eta\,\! }[/math] |
| Exponential | [math]\displaystyle{ \lambda\,\! }[/math] | Mean life, [math]\displaystyle{ 1/\lambda\,\! }[/math] |
| Lognormal | [math]\displaystyle{ \bar{T}\,\! }[/math], [math]\displaystyle{ \sigma\,\! }[/math]\* | Median, [math]\displaystyle{ \breve{T}\,\! }[/math] |

\*Usually assumed constant.

For example, when considering the Weibull distribution, the scale parameter, [math]\displaystyle{ \eta\,\! }[/math], is chosen to be the stress-dependent life characteristic, while [math]\displaystyle{ \beta\,\! }[/math] is assumed to remain constant across different stress levels. A life-stress relationship is then assigned to [math]\displaystyle{ \eta\,\! }[/math].

For a detailed discussion of this topic, see ReliaSoft's Accelerated Life Testing Data Analysis Reference.
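The sketch below illustrates how a life distribution and a life-stress relationship combine in the Weibull case described above: [math]\displaystyle{ \beta\,\! }[/math] is held constant while [math]\displaystyle{ \eta\,\! }[/math] depends on stress. The inverse power law form η(V) = 1/(K·V^n), the constants K and n, β = 2, the time of 5,000 hours and the equal split of load between two units are all assumptions chosen for illustration, not values or relationships taken from this reference.

```python
import math

BETA = 2.0  # Weibull shape parameter, assumed constant across stress levels

def eta(stress: float, K: float = 1e-6, n: float = 2.0) -> float:
    """Stress-dependent Weibull scale parameter.
    Assumed inverse power law eta(V) = 1 / (K * V**n); K and n are hypothetical."""
    return 1.0 / (K * stress ** n)

def reliability(t: float, stress: float) -> float:
    """Weibull reliability at time t under a given stress: exp(-(t / eta(V))**BETA)."""
    return math.exp(-((t / eta(stress)) ** BETA))

# Hypothetical two-unit load sharing pair: while both operate, each carries half the
# total load (equal sharing assumed); after one fails, the survivor carries all of it.
total_load = 10.0
t = 5_000.0  # hours
print(round(reliability(t, total_load / 2), 4))  # 0.9845 at the shared load
print(round(reliability(t, total_load), 4))      # 0.7788 at the full load
```

The point of the comparison is that the same unit has two different reliability values at the same time, depending on whether its partner is still carrying part of the load.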

Latest revision as of 18:56, 15 September 2023

This chapter presents a brief review of statistical principles and terminology. The objective of this chapter is to introduce concepts from probability theory and statistics that will be used in later chapters. As such, this chapter is not intended to cover this subject completely, but rather to provide an overview of applicable concepts as a foundation that you can refer to when more complex concepts are introduced.

If you are familiar with basic probability theory and life data analysis, you may wish to skip this chapter. If you would like additional information, we encourage you to review other references on the subject.

A Brief Introduction to Probability Theory

Basic Definitions

Before considering the methodology for estimating system reliability, some basic concepts from probability theory should be reviewed.

The terms that follow are important in creating and analyzing reliability block diagrams.

- Experiment [math]\displaystyle{ (E)\,\! }[/math] : An experiment is any well-defined action that may result in a number of outcomes. For example, the rolling of dice can be considered an experiment.

- Outcome [math]\displaystyle{ (O)\,\! }[/math] : An outcome is defined as any possible result of an experiment.

- Sample space [math]\displaystyle{ (S)\,\! }[/math] : The sample space is defined as the set of all possible outcomes of an experiment.

- Event: An event is a collection of outcomes.

- Union of two events [math]\displaystyle{ A\,\! }[/math] and [math]\displaystyle{ B\,\! }[/math] [math]\displaystyle{ (A\cup B)\,\! }[/math] : The union of two events [math]\displaystyle{ A\,\! }[/math] and [math]\displaystyle{ B\,\! }[/math] is the set of outcomes that belong to [math]\displaystyle{ A\,\! }[/math] or [math]\displaystyle{ B\,\! }[/math] or both.

- Intersection of two events [math]\displaystyle{ A\,\! }[/math] and [math]\displaystyle{ B\,\! }[/math] [math]\displaystyle{ (A\cap B)\,\! }[/math] : The intersection of two events [math]\displaystyle{ A\,\! }[/math] and [math]\displaystyle{ B\,\! }[/math] is the set of outcomes that belong to both [math]\displaystyle{ A\,\! }[/math] and [math]\displaystyle{ B\,\! }[/math].

- Complement of event A ( [math]\displaystyle{ \overline{A}\,\! }[/math] ): A complement of an event [math]\displaystyle{ A\,\! }[/math] contains all outcomes of the sample space, [math]\displaystyle{ S\,\! }[/math], that do not belong to [math]\displaystyle{ A\,\! }[/math].

- Null event ( [math]\displaystyle{ \varnothing\,\! }[/math] ): A null event is an empty set that has no outcomes.

- Probability: Probability is a numerical measure of the likelihood of an event relative to a set of alternative events. For example, when flipping a fair (unbiased) coin, there is a 50% probability of observing heads rather than tails.

Example

Consider an experiment that consists of the rolling of a six-sided die. The numbers on each side of the die are the possible outcomes. Accordingly, the sample space is [math]\displaystyle{ S=\{1,2,3,4,5,6\}\,\! }[/math].

Let [math]\displaystyle{ A\,\! }[/math] be the event of rolling a 3, 4 or 6, [math]\displaystyle{ A=\{3,4,6\}\,\! }[/math], and let [math]\displaystyle{ B\,\! }[/math] be the event of rolling a 2, 3 or 5, [math]\displaystyle{ B=\{2,3,5\}\,\! }[/math].

- The union of [math]\displaystyle{ A\,\! }[/math] and [math]\displaystyle{ B\,\! }[/math] is: [math]\displaystyle{ A\cup B=\{2,3,4,5,6\}\,\! }[/math].

- The intersection of [math]\displaystyle{ A\,\! }[/math] and [math]\displaystyle{ B\,\! }[/math] is: [math]\displaystyle{ A\cap B=\{3\}\,\! }[/math].

- The complement of [math]\displaystyle{ A\,\! }[/math] is: [math]\displaystyle{ \overline{A}=\{1,2,5\}\,\! }[/math].
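The set operations in this example correspond directly to Python's built-in `set` type. The following minimal sketch (the variable names are illustrative, not from the original) reproduces the union, intersection and complement listed above:

```python
# Sample space and events from the die-rolling example.
S = {1, 2, 3, 4, 5, 6}
A = {3, 4, 6}
B = {2, 3, 5}

union = A | B            # outcomes in A, in B, or in both
intersection = A & B     # outcomes in both A and B
complement_A = S - A     # outcomes of S that are not in A

print(sorted(union))         # [2, 3, 4, 5, 6]
print(sorted(intersection))  # [3]
print(sorted(complement_A))  # [1, 2, 5]
```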

Probability Properties, Theorems and Axioms

The probability of an event [math]\displaystyle{ A\,\! }[/math] is expressed as [math]\displaystyle{ P(A)\,\! }[/math] and has the following properties:

- [math]\displaystyle{ 0\le P(A)\le 1\,\! }[/math]

- [math]\displaystyle{ P(A)=1-P(\overline{A})\,\! }[/math]

- [math]\displaystyle{ P(\varnothing)=0\,\! }[/math]

- [math]\displaystyle{ P(S)=1\,\! }[/math]

In other words, when an event is certain to occur, it has a probability equal to 1; when it is impossible for the event to occur, it has a probability equal to 0.

It can also be shown that the probability of the union of two events [math]\displaystyle{ A\,\! }[/math] and [math]\displaystyle{ B\,\! }[/math] is:

- [math]\displaystyle{ P(A\cup B)=P(A)+P(B)-P(A\cap B)\ \,\! }[/math]

Similarly, the probability of the union of three events, [math]\displaystyle{ A\,\! }[/math], [math]\displaystyle{ B\,\! }[/math] and [math]\displaystyle{ C\,\! }[/math] is given by:

- [math]\displaystyle{ \begin{align} P(A\cup B\cup C)= & P(A)+P(B)+P(C) \\ & -P(A\cap B)-P(A\cap C) \\ & -P(B\cap C)+P(A\cap B\cap C) \end{align}\,\! }[/math]
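For a fair die, where every outcome is equally likely, both union formulas can be checked by counting outcomes. The sketch below assumes that classical model and introduces a hypothetical third event C = {4, 5} purely for illustration; exact fractions avoid floating-point rounding.

```python
from fractions import Fraction

S = frozenset({1, 2, 3, 4, 5, 6})  # fair six-sided die

def P(event):
    """Classical probability: favourable outcomes / total outcomes."""
    return Fraction(len(set(event) & S), len(S))

A = {3, 4, 6}
B = {2, 3, 5}
C = {4, 5}  # hypothetical third event, not from the original example

# Two events: P(A ∪ B) = P(A) + P(B) - P(A ∩ B)
print(P(A | B), P(A) + P(B) - P(A & B))  # 5/6 5/6

# Three events: inclusion-exclusion
lhs = P(A | B | C)
rhs = (P(A) + P(B) + P(C)
       - P(A & B) - P(A & C) - P(B & C)
       + P(A & B & C))
print(lhs, rhs)  # 5/6 5/6
```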

Mutually Exclusive Events

Two events [math]\displaystyle{ A\,\! }[/math] and [math]\displaystyle{ B\,\! }[/math] are said to be mutually exclusive if it is impossible for them to occur simultaneously ( [math]\displaystyle{ A\cap B\,\! }[/math] = [math]\displaystyle{ \varnothing\,\! }[/math] ). In such cases, [math]\displaystyle{ P(A\cap B)=P(\varnothing)=0\,\! }[/math], so the expression for the union of these two events reduces to:

- [math]\displaystyle{ P(A\cup B)=P(A)+P(B)\,\! }[/math]

Conditional Probability

The conditional probability of two events [math]\displaystyle{ A\,\! }[/math] and [math]\displaystyle{ B\,\! }[/math] is defined as the probability of one of the events occurring, knowing that the other event has already occurred. The expression below denotes the probability of [math]\displaystyle{ A\,\! }[/math] occurring given that [math]\displaystyle{ B\,\! }[/math] has already occurred.

- [math]\displaystyle{ P(A|B)=\frac{P(A\cap B)}{P(B)}\ \,\! }[/math]

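Knowing that event [math]\displaystyle{ B\,\! }[/math] has occurred effectively reduces the sample space to [math]\displaystyle{ B\,\! }[/math]. The definition therefore applies only when [math]\displaystyle{ P(B)>0\,\! }[/math].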
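Applied to the die example, the definition gives P(A|B) = (1/6)/(1/2) = 1/3. The sketch below (same classical fair-die assumption; the helper names `P` and `P_given` are hypothetical) computes it both from the formula and by counting the outcomes of A that lie within the reduced sample space B:

```python
from fractions import Fraction

S = frozenset({1, 2, 3, 4, 5, 6})  # fair six-sided die
A = {3, 4, 6}
B = {2, 3, 5}

def P(event):
    """Classical probability: favourable outcomes / total outcomes."""
    return Fraction(len(set(event) & S), len(S))

def P_given(a, b):
    """Conditional probability P(a | b) = P(a ∩ b) / P(b)."""
    if P(b) == 0:
        raise ValueError("P(b) must be positive")
    return P(a & b) / P(b)

# Reduced-sample-space view: once B is known, only the outcomes in B remain possible.
reduced = Fraction(len(A & B), len(B))

print(P_given(A, B), reduced)  # 1/3 1/3
```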

Independent Events

If knowing [math]\displaystyle{ B\,\! }[/math] gives no information about [math]\displaystyle{ A\,\! }[/math], then the events are said to be independent and the conditional probability expression reduces to:

- [math]\displaystyle{ P(A|B)=P(A)\ \,\! }[/math]

From the definition of conditional probability, [math]\displaystyle{ P(A|B)=\frac{P(A\cap B)}{P(B)}\ \,\! }[/math] can be written as:

- [math]\displaystyle{ P(A\cap B)=P(A|B)P(B)\ \,\! }[/math]

Since events [math]\displaystyle{ A\,\! }[/math] and [math]\displaystyle{ B\,\! }[/math] are independent, the expression reduces to:

- [math]\displaystyle{ P(A\cap B)=P(A)P(B)\ \,\! }[/math]

If a group of [math]\displaystyle{ n\,\! }[/math] events [math]\displaystyle{ {{A}_{i}}\,\! }[/math] are mutually independent, then:

- [math]\displaystyle{ P\left[ \underset{i=1}{\overset{n}{\mathop \bigcap }}\,{{A}_{i}} \right]=\underset{i=1}{\overset{n}{\mathop \prod }}\,P({{A}_{i}})\ \,\! }[/math]
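The product rule can be checked by enumeration on a fair die. In the sketch below (classical model assumed; the events E and F are illustrative additions), "even" and "at most 4" turn out to be independent, while A and B from the earlier example are not. The last part confirms that, for n independent rolls, the probability that every roll is even equals the product of the individual probabilities.

```python
from fractions import Fraction
from itertools import product
from math import prod

FACES = range(1, 7)  # fair six-sided die; all faces assumed equally likely

def P(event):
    """Classical probability of an event on a single roll."""
    return Fraction(len(set(event) & set(FACES)), len(FACES))

# Events on the same roll: E and F are illustrative; A and B come from the earlier example.
E = {2, 4, 6}       # "even"
F = {1, 2, 3, 4}    # "at most 4"
A = {3, 4, 6}
B = {2, 3, 5}

print(P(E & F) == P(E) * P(F))  # True:  1/3 == 1/2 * 2/3, so E and F are independent
print(P(A & B) == P(A) * P(B))  # False: 1/6 != 1/2 * 1/2, so A and B are dependent

# n independent rolls: P(every roll is even) versus the product of the individual probabilities.
n = 3
rolls = list(product(FACES, repeat=n))  # all 6**n equally likely sequences
favourable = sum(all(x % 2 == 0 for x in r) for r in rolls)
by_enumeration = Fraction(favourable, len(rolls))
by_product = prod(P(E) for _ in range(n))
print(by_enumeration, by_product)  # 1/8 1/8
```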

As an illustration, consider the outcome of a six-sided die roll. The probability of rolling a 3 is one out of six or:

- [math]\displaystyle{ \begin{align} P(O=3)=1/6=0.16667 \end{align}\,\! }[/math]

All subsequent rolls of the die are independent events, since knowing the outcome of the first die roll gives no information as to the outcome of subsequent die rolls (unless the die is loaded). Thus the probability of rolling a 3 on the second die roll is again:

- [math]\displaystyle{ \begin{align} P(O=3)=1/6=0.16667 \end{align}\,\! }[/math]

The probability of rolling a double 3 with two dice, however, is the product of the two individual probabilities:

- [math]\displaystyle{ \begin{align} \frac{1}{6}\cdot \frac{1}{6}= & \frac{1}{36} \\ \approx & 0.027778 \end{align}\,\! }[/math]
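Enumerating all 36 equally likely outcomes of two fair dice gives the same answer as multiplying the individual probabilities. A minimal sketch:

```python
from fractions import Fraction
from itertools import product

FACES = range(1, 7)
outcomes = list(product(FACES, repeat=2))  # 36 equally likely (first die, second die) pairs
double_three = sum(1 for d1, d2 in outcomes if d1 == 3 and d2 == 3)

p_enum = Fraction(double_three, len(outcomes))   # counting outcomes
p_product = Fraction(1, 6) * Fraction(1, 6)      # product rule for independent rolls

print(p_enum, p_product, round(float(p_enum), 6))  # 1/36 1/36 0.027778
```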

Example 1

Consider a system where two hinged members are holding a load in place, as shown next.

The system fails if either member fails and the load is moved from its position.

- Let [math]\displaystyle{ A\,\! }[/math] be the event that Component 1 fails, and let [math]\displaystyle{ \overline{A}\,\! }[/math] be the event that Component 1 does not fail.

- Let [math]\displaystyle{ B\,\! }[/math] be the event that Component 2 fails, and let [math]\displaystyle{ \overline{B}\,\! }[/math] be the event that Component 2 does not fail.

Failure occurs if Component 1 or Component 2 or both fail. The system probability of failure (or unreliability) is:

- [math]\displaystyle{ {{P}_{f}}=P(A\cup B)=P(A)+P(B)-P(A\cap B)\,\! }[/math]

Assuming independence (i.e., the failure of either component is not influenced by the success or failure of the other), the system probability of failure becomes the sum of the probabilities of [math]\displaystyle{ A\,\! }[/math] and [math]\displaystyle{ B\,\! }[/math] minus their product:

- [math]\displaystyle{ {{P}_{f}}=P(A\cup B)=P(A)+P(B)-P(A)P(B)\,\! }[/math]

Another approach is to calculate the probability of the system not failing (i.e., the reliability of the system):

- [math]\displaystyle{ \begin{align} P(no\text{ }failure)= & Reliability \\ = & P(\overline{A}\cap\overline{B})\\ = & P(\overline{A})P(\overline{B}) \end{align}\,\! }[/math]

Then the probability of system failure is simply 1 (or 100%) minus the reliability:

- [math]\displaystyle{ \begin{align} {{P}_{f}}=1-Reliability \end{align}\,\! }[/math]
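Under independence, the two routes to the failure probability of Example 1 give the same result. The sketch below assumes hypothetical component failure probabilities P(A) = 0.05 and P(B) = 0.10; these values and the function names are illustrative only.

```python
import math

def failure_prob_union(p_a: float, p_b: float) -> float:
    """P_f = P(A) + P(B) - P(A)P(B); assumes independent component failures."""
    return p_a + p_b - p_a * p_b

def failure_prob_via_reliability(p_a: float, p_b: float) -> float:
    """P_f = 1 - P(not A)P(not B), i.e., one minus the system reliability."""
    reliability = (1 - p_a) * (1 - p_b)
    return 1 - reliability

p_a, p_b = 0.05, 0.10  # hypothetical failure probabilities
pf1 = failure_prob_union(p_a, p_b)
pf2 = failure_prob_via_reliability(p_a, p_b)
print(round(pf1, 6), round(pf2, 6), math.isclose(pf1, pf2))  # 0.145 0.145 True
```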

Example 2

Consider a system with a load being held in place by two rigid members, as shown next.

- Let [math]\displaystyle{ A\,\! }[/math] be the event that Component 1 fails.

- Let [math]\displaystyle{ B\,\! }[/math] be the event that Component 2 fails.

- The system fails only if both Component 1 and Component 2 fail.

The system probability of failure is defined as the intersection of events [math]\displaystyle{ A\,\! }[/math] and [math]\displaystyle{ B\,\! }[/math] :

- [math]\displaystyle{ {{P}_{f}}=P(A\cap B)\,\! }[/math]

Case 1

Assuming independence (i.e., either member alone is strong enough to hold the load in place, so the failure of one does not change the failure probability of the other), the probability of system failure becomes the product of the probabilities of [math]\displaystyle{ A\,\! }[/math] and [math]\displaystyle{ B\,\! }[/math]:

- [math]\displaystyle{ {{P}_{f}}=P(A\cap B)=P(A)P(B)\,\! }[/math]

The reliability of the system now becomes:

- [math]\displaystyle{ Reliability=1-{{P}_{f}}=1-P(A)P(B)\ \,\! }[/math]

Case 2

If independence is not assumed (e.g., when one component fails, the other is then more likely to fail), then the simplification [math]\displaystyle{ Reliability=1-{{P}_{f}}=1-P(A)P(B)\ \,\! }[/math] no longer applies. In this case, the general form of the intersection, [math]\displaystyle{ {{P}_{f}}=P(A\cap B)=P(A|B)P(B)\,\! }[/math], must be used. We will examine this dependency in later sections under the subject of load sharing.

A Brief Introduction to Continuous Life Distributions

Random Variables

Most problems in reliability engineering deal with either quantitative measures, such as the time-to-failure of a component, or qualitative measures, such as whether a component is defective or non-defective. We can then use a random variable [math]\displaystyle{ X\,\! }[/math] to denote these possible measures.

In the case of times-to-failure, our random variable [math]\displaystyle{ X\,\! }[/math] is the time-to-failure of the component and can take on an infinite number of possible values in a range from 0 to infinity (since we do not know the exact time a priori). Our component can be found failed at any time after time 0 (e.g., at 12 hours or at 100 hours and so forth), thus [math]\displaystyle{ X\,\! }[/math] can take on any value in this range. In this case, our random variable [math]\displaystyle{ X\,\! }[/math] is said to be a continuous random variable. In this reference, we will deal almost exclusively with continuous random variables.

In judging a component to be defective or non-defective, only two outcomes are possible. That is, [math]\displaystyle{ X\,\! }[/math] is a random variable that can take on one of only two values (let's say defective = 0 and non-defective = 1). In this case, the variable is said to be a discrete random variable.

The Probability Density Function and the Cumulative Distribution Function

The probability density function (pdf) and cumulative distribution function (cdf) are two of the most important statistical functions in reliability and are very closely related. When these functions are known, almost any other reliability measure of interest can be derived or obtained. We will now take a closer look at these functions and how they relate to other reliability measures, such as the reliability function and failure rate.

From probability and statistics, given a continuous random variable [math]\displaystyle{ X,\,\! }[/math] we denote:

- The probability density function, pdf, as [math]\displaystyle{ f(x)\,\! }[/math].

- The cumulative distribution function, cdf, as [math]\displaystyle{ F(x)\,\! }[/math].

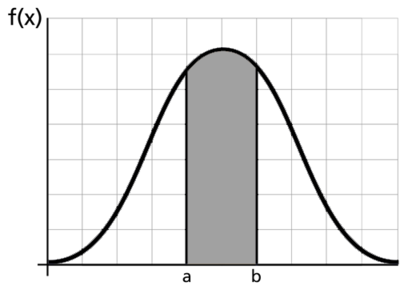

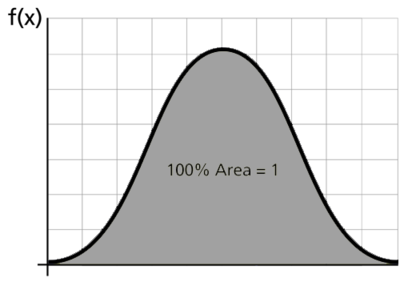

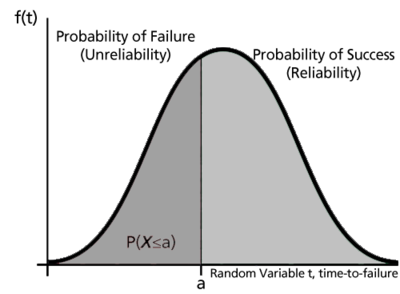

The pdf and cdf give a complete description of the probability distribution of a random variable. The following figure illustrates a pdf.

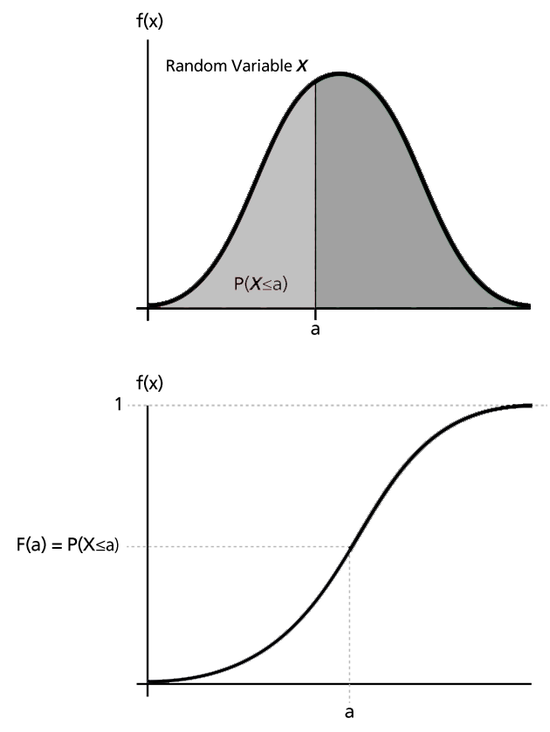

The next figures illustrate the pdf - cdf relationship.

If [math]\displaystyle{ X\,\! }[/math] is a continuous random variable, then the pdf of [math]\displaystyle{ X\,\! }[/math] is a function, [math]\displaystyle{ f(x)\,\! }[/math], such that for any two numbers, [math]\displaystyle{ a\,\! }[/math] and [math]\displaystyle{ b\,\! }[/math] with [math]\displaystyle{ a\le b\,\! }[/math]:

- [math]\displaystyle{ P(a\le X\le b)=\int_{a}^{b}f(x)dx\ \,\! }[/math]

That is, the probability that [math]\displaystyle{ X\,\! }[/math] takes on a value in the interval [math]\displaystyle{ [a,b]\,\! }[/math] is the area under the density function from [math]\displaystyle{ a\,\! }[/math] to [math]\displaystyle{ b,\,\! }[/math] as shown above. The pdf represents the relative frequency of failure times as a function of time.
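The area interpretation can be checked numerically. The sketch below assumes, purely for illustration, an exponential pdf [math]\displaystyle{ f(t)=\lambda {{e}^{-\lambda t}}\,\! }[/math] with a hypothetical value of [math]\displaystyle{ \lambda \,\! }[/math], integrates it over [math]\displaystyle{ [a,b]\,\! }[/math] with composite Simpson's rule (a numerical method chosen for this example), and compares the result with the closed-form area.

```python
import math

def simpson(f, a, b, n=1000):
    """Composite Simpson's rule approximation of the integral of f over [a, b]."""
    if n % 2:  # Simpson's rule needs an even number of subintervals
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

lam = 0.01  # hypothetical failure rate, failures per hour

def pdf(t):
    """Exponential pdf f(t) = lam * exp(-lam * t) for t >= 0, and 0 otherwise."""
    return lam * math.exp(-lam * t) if t >= 0 else 0.0

a, b = 50.0, 150.0
p_numeric = simpson(pdf, a, b)                     # area under f from a to b
p_exact = math.exp(-lam * a) - math.exp(-lam * b)  # closed-form area
print(f"P({a:.0f} <= X <= {b:.0f}) ~ {p_numeric:.6f} (closed form: {p_exact:.6f})")
```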

The cdf is a function, [math]\displaystyle{ F(x)\,\! }[/math], of a random variable [math]\displaystyle{ X\,\! }[/math], and is defined for a number [math]\displaystyle{ x\,\! }[/math] by:

- [math]\displaystyle{ F(x)=P(X\le x)=\int_{0}^{x}f(s)ds\ \,\! }[/math]

That is, for a number [math]\displaystyle{ x\,\! }[/math], [math]\displaystyle{ F(x)\,\! }[/math] is the probability that the observed value of [math]\displaystyle{ X\,\! }[/math] will be at most [math]\displaystyle{ x\,\! }[/math]. The cdf represents the cumulative values of the pdf. That is, the value of a point on the curve of the cdf represents the area under the curve to the left of that point on the pdf. In reliability, the cdf is used to measure the probability that the item in question will fail before the associated time value, [math]\displaystyle{ t\,\! }[/math], and is also called unreliability.

Note that the limits of integration vary with the region over which the density function, [math]\displaystyle{ f(x)\,\! }[/math], is defined. For example, for the life distributions considered in this reference, with the exception of the normal distribution, this range is [math]\displaystyle{ [0,+\infty )\,\! }[/math].

Mathematical Relationship: pdf and cdf

The mathematical relationship between the pdf and cdf is given by:

- [math]\displaystyle{ F(x)=\int_{0}^{x}f(s)ds \,\! }[/math]

where [math]\displaystyle{ s\,\! }[/math] is a dummy integration variable.

Conversely:

- [math]\displaystyle{ f(x)=\frac{d(F(x))}{dx}\,\! }[/math]

The cdf is the area under the probability density function up to a value of [math]\displaystyle{ x\,\! }[/math]. The total area under the pdf is always equal to 1, or mathematically:

- [math]\displaystyle{ \int_{-\infty}^{+\infty }f(x)dx=1\,\! }[/math]
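Both directions of the pdf-cdf relationship, and the unit total area, can be verified numerically. This sketch assumes a Weibull distribution with hypothetical parameters [math]\displaystyle{ \beta \,\! }[/math] and [math]\displaystyle{ \eta \,\! }[/math], approximates [math]\displaystyle{ dF/dx\,\! }[/math] with a central difference and the integrals with the trapezoidal rule; the helper names are illustrative only.

```python
import math

beta, eta = 2.0, 100.0  # hypothetical Weibull shape and scale parameters

def cdf(t):
    """Weibull cdf F(t) = 1 - exp(-(t/eta)**beta), for t >= 0."""
    return 1.0 - math.exp(-((t / eta) ** beta))

def pdf(t):
    """Weibull pdf f(t) = (beta/eta) * (t/eta)**(beta-1) * exp(-(t/eta)**beta)."""
    return (beta / eta) * (t / eta) ** (beta - 1) * math.exp(-((t / eta) ** beta))

def derivative(g, x, h=1e-4):
    """Central-difference approximation of dg/dx at x."""
    return (g(x + h) - g(x - h)) / (2 * h)

def trapezoid(g, a, b, n=20_000):
    """Trapezoidal-rule approximation of the integral of g over [a, b]."""
    h = (b - a) / n
    return h * (0.5 * g(a) + sum(g(a + i * h) for i in range(1, n)) + 0.5 * g(b))

x = 80.0
print(f"f(x) = {pdf(x):.6f}, dF/dx ~ {derivative(cdf, x):.6f}")
print(f"F(x) = {cdf(x):.6f}, area under f on [0, x] ~ {trapezoid(pdf, 0.0, x):.6f}")
# f(t) is negligible beyond 10*eta for these parameters, so [0, 10*eta] stands in for the full range.
print(f"total area under f ~ {trapezoid(pdf, 0.0, 10 * eta):.6f}")
```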

A well-known example of a probability density function is that of the normal (or Gaussian) distribution, given by:

- [math]\displaystyle{ f(t)=\frac{1}{\sigma \sqrt{2\pi }}{{e}^{-\tfrac{1}{2}{{\left( \tfrac{t-\mu }{\sigma } \right)}^{2}}}}\,\! }[/math]

where [math]\displaystyle{ \mu \,\! }[/math] is the mean and [math]\displaystyle{ \sigma \,\! }[/math] is the standard deviation. The normal distribution has two parameters, [math]\displaystyle{ \mu \,\! }[/math] and [math]\displaystyle{ \sigma \,\! }[/math].
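The normal pdf translates directly into code. As a cross-check, this sketch compares a direct implementation of the equation against `statistics.NormalDist` from the Python standard library; the values of [math]\displaystyle{ \mu \,\! }[/math] and [math]\displaystyle{ \sigma \,\! }[/math] are hypothetical.

```python
import math
from statistics import NormalDist

def normal_pdf(t, mu, sigma):
    """Normal pdf: exp(-0.5 * ((t - mu) / sigma)**2) / (sigma * sqrt(2*pi))."""
    z = (t - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))

mu, sigma = 100.0, 10.0  # hypothetical mean and standard deviation, hours
reference = NormalDist(mu, sigma)  # standard-library normal distribution for comparison

for t in (80.0, 100.0, 120.0):
    print(f"f({t:.0f}) = {normal_pdf(t, mu, sigma):.6f}  (NormalDist: {reference.pdf(t):.6f})")
```

The values at 80 and 120 hours coincide because the normal pdf is symmetric about [math]\displaystyle{ \mu \,\! }[/math].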

Another is the lognormal distribution, whose pdf is given by:

- [math]\displaystyle{ f(t)=\frac{1}{t\cdot {{\sigma }^{\prime }}\sqrt{2\pi }}{{e}^{-\tfrac{1}{2}{{\left( \tfrac{{{t}^{\prime }}-{{\mu }^{\prime }}}{{{\sigma }^{\prime }}} \right)}^{2}}}}\,\! }[/math]

where [math]\displaystyle{ {t}'=\ln (t)\,\! }[/math], [math]\displaystyle{ {\mu }'\,\! }[/math] is the mean of the natural logarithms of the times-to-failure and [math]\displaystyle{ {\sigma }'\,\! }[/math] is the standard deviation of the natural logarithms of the times-to-failure. Again, this is a 2-parameter distribution.
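A minimal sketch of the lognormal pdf, using [math]\displaystyle{ {t}'=\ln (t)\,\! }[/math]. Because [math]\displaystyle{ \ln (T)\,\! }[/math] is normally distributed, the lognormal pdf equals the normal pdf of [math]\displaystyle{ \ln (t)\,\! }[/math] divided by [math]\displaystyle{ t\,\! }[/math], which gives an independent check via `statistics.NormalDist`. The parameter values are hypothetical.

```python
import math
from statistics import NormalDist

def lognormal_pdf(t, mu_p, sigma_p):
    """Lognormal pdf for t > 0; mu_p and sigma_p are the mean and std of ln(times-to-failure)."""
    t_prime = math.log(t)  # t' = ln(t)
    z = (t_prime - mu_p) / sigma_p
    return math.exp(-0.5 * z * z) / (t * sigma_p * math.sqrt(2 * math.pi))

mu_p, sigma_p = math.log(100.0), 0.5  # hypothetical mu' and sigma'

t = 150.0
direct = lognormal_pdf(t, mu_p, sigma_p)
via_normal = NormalDist(mu_p, sigma_p).pdf(math.log(t)) / t  # normal pdf of ln(t), divided by t
print(f"f({t:.0f}) = {direct:.6e} (via normal pdf of ln t: {via_normal:.6e})")
```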

Reliability Function

The reliability function can be derived using the previous definition of the cumulative distribution function, [math]\displaystyle{ F(x)=\int_{0}^{x}f(s)ds \,\! }[/math]. From our definition of the cdf, the probability of an event occurring by time [math]\displaystyle{ t\,\! }[/math] is given by:

- [math]\displaystyle{ F(t)=\int_{0}^{t}f(s)ds\ \,\! }[/math]

Equivalently, this is the probability of a unit failing by time [math]\displaystyle{ t\,\! }[/math].

Since this function defines the probability of failure by a certain time, we could consider this the unreliability function. Subtracting this probability from 1 will give us the reliability function, one of the most important functions in life data analysis. The reliability function gives the probability of success of a unit undertaking a mission of a given time duration. The following figure illustrates this.

To show this mathematically, we first define the unreliability function, [math]\displaystyle{ Q(t)\,\! }[/math], which is the probability of failure, or the probability that our time-to-failure lies between 0 and [math]\displaystyle{ t\,\! }[/math]. This is the same as the cdf. So from [math]\displaystyle{ F(t)=\int_{0}^{t}f(s)ds\ \,\! }[/math]:

- [math]\displaystyle{ Q(t)=F(t)=\int_{0}^{t}f(s)ds\,\! }[/math]

Reliability and unreliability are the only two events being considered and they are mutually exclusive; hence, the sum of these probabilities is equal to unity.

Then:

- [math]\displaystyle{ \begin{align} Q(t)+R(t)= & 1 \\ R(t)= & 1-Q(t) \\ R(t)= & 1-\int_{0}^{t}f(s)ds \\ R(t)= & \int_{t}^{\infty }f(s)ds \end{align}\,\! }[/math]

Conversely:

- [math]\displaystyle{ f(t)=-\frac{d(R(t))}{dt}\,\! }[/math]
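The chain of identities above can be checked numerically. Assuming, for illustration only, a Weibull distribution with hypothetical [math]\displaystyle{ \beta \,\! }[/math] and [math]\displaystyle{ \eta \,\! }[/math] (for which [math]\displaystyle{ R(t)={{e}^{-{{(t/\eta )}^{\beta }}}}\,\! }[/math] in closed form), the sketch computes [math]\displaystyle{ Q(t)\,\! }[/math] by integrating the pdf, forms [math]\displaystyle{ R(t)=1-Q(t)\,\! }[/math], and checks [math]\displaystyle{ f(t)=-dR/dt\,\! }[/math] with a central difference.

```python
import math

beta, eta = 1.5, 1000.0  # hypothetical Weibull shape and scale (hours)

def pdf(t):
    """Weibull pdf f(t)."""
    return (beta / eta) * (t / eta) ** (beta - 1) * math.exp(-((t / eta) ** beta))

def reliability(t):
    """Closed-form Weibull reliability R(t) = exp(-(t/eta)**beta)."""
    return math.exp(-((t / eta) ** beta))

def unreliability(t, n=10_000):
    """Q(t) = F(t) = integral of f(s) ds from 0 to t, by the trapezoidal rule."""
    h = t / n
    inner = sum(pdf(i * h) for i in range(1, n))
    return h * (0.5 * pdf(0.0) + inner + 0.5 * pdf(t))

t = 500.0
q = unreliability(t)
print(f"Q({t:.0f}) ~ {q:.6f}, 1 - Q ~ {1 - q:.6f}, closed-form R = {reliability(t):.6f}")

# f(t) = -dR/dt, approximated with a central difference
h = 1e-3
minus_dr_dt = -(reliability(t + h) - reliability(t - h)) / (2 * h)
print(f"f({t:.0f}) = {pdf(t):.6e}, -dR/dt ~ {minus_dr_dt:.6e}")
```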

Conditional Reliability Function

Conditional reliability is the probability of successfully completing another mission following the successful completion of a previous mission. The time of the previous mission and the time for the mission to be undertaken must be taken into account for conditional reliability calculations. The conditional reliability function is given by:

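- [math]\displaystyle{ R(t|T)=\frac{R(T+t)}{R(T)}\ \,\! }[/math]

where [math]\displaystyle{ T\,\! }[/math] is the duration of the previous mission (the time already accumulated without failure) and [math]\displaystyle{ t\,\! }[/math] is the duration of the mission to be undertaken.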
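As an illustration, the sketch below evaluates [math]\displaystyle{ R(t|T)\,\! }[/math] for a component that has already survived a previous mission of duration [math]\displaystyle{ T\,\! }[/math] and is about to start a new mission of duration [math]\displaystyle{ t\,\! }[/math]. It assumes Weibull reliability with hypothetical parameters. For [math]\displaystyle{ \beta =1\,\! }[/math] (the exponential case) the conditional reliability equals [math]\displaystyle{ R(t)\,\! }[/math] regardless of age, while for [math]\displaystyle{ \beta >1\,\! }[/math] it drops as the component ages.

```python
import math

def weibull_reliability(t, beta, eta):
    """Weibull reliability R(t) = exp(-(t/eta)**beta)."""
    return math.exp(-((t / eta) ** beta))

def conditional_reliability(t, T, beta, eta):
    """R(t|T) = R(T + t) / R(T): probability of surviving a further t after surviving T."""
    return weibull_reliability(T + t, beta, eta) / weibull_reliability(T, beta, eta)

eta = 1000.0     # hypothetical scale parameter, hours
mission = 100.0  # duration t of the new mission, hours
for beta in (1.0, 2.0):  # 1.0: exponential case; 2.0: hypothetical wear-out behavior
    for age in (0.0, 500.0, 1000.0):  # duration T already survived
        r = conditional_reliability(mission, age, beta, eta)
        print(f"beta={beta}, T={age:.0f} h: R({mission:.0f} h | T) = {r:.4f}")
```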

Failure Rate Function

The failure rate function enables the determination of the number of failures occurring per unit time. Omitting the derivation, the failure rate is mathematically given as:

- [math]\displaystyle{ \lambda (t)=\frac{f(t)}{R(t)}\ \,\! }[/math]

This gives the instantaneous failure rate, also known as the hazard function. It is useful in characterizing the failure behavior of a component, determining maintenance crew allocation, planning for spares provisioning, etc. Failure rate is expressed in failures per unit time.
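For the Weibull distribution, [math]\displaystyle{ f(t)/R(t)\,\! }[/math] simplifies to [math]\displaystyle{ (\beta /\eta ){{(t/\eta )}^{\beta -1}}\,\! }[/math], so the shape parameter controls whether the failure rate decreases ([math]\displaystyle{ \beta <1\,\! }[/math]), stays constant ([math]\displaystyle{ \beta =1\,\! }[/math], the exponential case) or increases ([math]\displaystyle{ \beta >1\,\! }[/math]) with time. The sketch below, using a hypothetical [math]\displaystyle{ \eta \,\! }[/math], computes [math]\displaystyle{ \lambda (t)\,\! }[/math] directly as [math]\displaystyle{ f(t)/R(t)\,\! }[/math] to show these three behaviors.

```python
import math

def weibull_pdf(t, beta, eta):
    """Weibull pdf f(t)."""
    return (beta / eta) * (t / eta) ** (beta - 1) * math.exp(-((t / eta) ** beta))

def weibull_reliability(t, beta, eta):
    """Weibull reliability R(t)."""
    return math.exp(-((t / eta) ** beta))

def failure_rate(t, beta, eta):
    """Instantaneous failure rate (hazard), lambda(t) = f(t) / R(t)."""
    return weibull_pdf(t, beta, eta) / weibull_reliability(t, beta, eta)

eta = 1000.0  # hypothetical scale parameter, hours
for beta in (0.5, 1.0, 3.0):
    rates = [failure_rate(t, beta, eta) for t in (100.0, 500.0, 1000.0)]
    print(f"beta={beta}: " + ", ".join(f"{r:.2e}" for r in rates) + " failures/hour")
```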

Mean Life (MTTF)

The mean life function, which provides a measure of the average time of operation to failure, is given by:

- [math]\displaystyle{ \overline{T}=m=\int_{0}^{\infty }t\cdot f(t)dt\,\! }[/math]

This is the expected or average time-to-failure and is denoted as the MTTF (Mean Time To Failure).

Although the MTTF is an index of reliability performance, for most lifetime distributions it gives no information about the shape of the component's failure distribution. Because vastly different distributions can have identical means, it is unwise to use the MTTF as the sole measure of the reliability of a component.
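Both points can be illustrated numerically. This sketch integrates [math]\displaystyle{ t\cdot f(t)\,\! }[/math] with the trapezoidal rule (truncating the infinite upper limit where the pdf is negligible) for two hypothetical distributions with the same 100-hour mean: an exponential, and a Weibull with [math]\displaystyle{ \beta =3\,\! }[/math] whose [math]\displaystyle{ \eta \,\! }[/math] is chosen from the standard Weibull mean formula [math]\displaystyle{ \eta \Gamma (1+1/\beta )\,\! }[/math]. The identical MTTFs hide very different reliabilities at the same operating time.

```python
import math

def mttf(pdf, upper, n=100_000):
    """MTTF = integral of t*f(t) from 0 to infinity, truncated at `upper` (trapezoidal rule)."""
    h = upper / n
    def integrand(t):
        return t * pdf(t)
    inner = sum(integrand(i * h) for i in range(1, n))
    return h * (0.5 * integrand(0.0) + inner + 0.5 * integrand(upper))

# Hypothetical exponential distribution with a mean of 100 hours.
lam = 0.01
def exp_pdf(t):
    return lam * math.exp(-lam * t)
def exp_rel(t):
    return math.exp(-lam * t)

# Hypothetical Weibull (beta = 3) with eta chosen so its mean, eta*Gamma(1 + 1/beta), is also 100 hours.
beta = 3.0
eta = 100.0 / math.gamma(1.0 + 1.0 / beta)
def wb_pdf(t):
    return (beta / eta) * (t / eta) ** (beta - 1) * math.exp(-((t / eta) ** beta))
def wb_rel(t):
    return math.exp(-((t / eta) ** beta))

mttf_exp = mttf(exp_pdf, upper=5000.0)
mttf_wb = mttf(wb_pdf, upper=5000.0)
print(f"MTTF: exponential ~ {mttf_exp:.3f} h, Weibull ~ {mttf_wb:.3f} h")
for t in (50.0, 200.0):
    print(f"R({t:.0f} h): exponential = {exp_rel(t):.4f}, Weibull = {wb_rel(t):.4f}")
```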

Median Life

Median life, [math]\displaystyle{ \breve{T}\,\! }[/math], is the value of the random variable that has exactly one-half of the area under the pdf to its left and one-half to its right. It is a measure of the center of the distribution: a unit is equally likely to fail before or after [math]\displaystyle{ \breve{T}\,\! }[/math].

The median is obtained by solving the following equation for [math]\displaystyle{ \breve{T}\,\! }[/math]. (For a sample of individual data points, the median is the middle value of the ordered data.)

- [math]\displaystyle{ \int_{-\infty}^{{\breve{T}}}f(t)dt=0.5\ \,\! }[/math]
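When [math]\displaystyle{ F(t)\,\! }[/math] has no convenient inverse, the median equation can be solved numerically. The sketch below uses bisection (a root-finding method chosen for this example) on a Weibull cdf with hypothetical parameters and compares the result with the Weibull closed form [math]\displaystyle{ \eta {{(\ln 2)}^{1/\beta }}\,\! }[/math]. It also computes the sample median of a few hypothetical times-to-failure with `statistics.median`.

```python
import math
from statistics import median

def solve_median(cdf, lo, hi, tol=1e-10):
    """Bisection: find T_breve with cdf(T_breve) = 0.5 on [lo, hi] (cdf must be increasing)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if cdf(mid) < 0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

beta, eta = 2.0, 1000.0  # hypothetical Weibull parameters

def weibull_cdf(t):
    return 1.0 - math.exp(-((t / eta) ** beta))

t_med = solve_median(weibull_cdf, 0.0, 10 * eta)
print(f"numeric median ~ {t_med:.4f} h, closed form {eta * math.log(2) ** (1 / beta):.4f} h")

# For individual data points, the median is the middle value of the sorted sample.
print(median([120, 340, 95, 410, 260]))  # hypothetical times-to-failure -> 260
```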

Modal Life (or Mode)

The modal life (or mode), [math]\displaystyle{ \tilde{T}\,\! }[/math], is the value of [math]\displaystyle{ T\,\! }[/math] that satisfies:

- [math]\displaystyle{ \frac{d\left[ f(t) \right]}{dt}=0\ \,\! }[/math]

For a continuous distribution, the mode is that value of [math]\displaystyle{ t\,\! }[/math] that corresponds to the maximum probability density (the value at which the pdf has its maximum value, or the peak of the curve).
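Rather than solving [math]\displaystyle{ d[f(t)]/dt=0\,\! }[/math] symbolically, the mode can also be found by locating the peak of the pdf numerically. This sketch applies golden-section search (a method chosen for this example, which assumes the pdf is unimodal on the search interval) to the lognormal pdf with hypothetical parameters, and compares the result with the lognormal closed form [math]\displaystyle{ {{e}^{{\mu }'-{{\sigma }'^{2}}}}\,\! }[/math].

```python
import math

def lognormal_pdf(t, mu_p, sigma_p):
    """Lognormal pdf; mu_p and sigma_p are the mean and std of ln(times-to-failure)."""
    z = (math.log(t) - mu_p) / sigma_p
    return math.exp(-0.5 * z * z) / (t * sigma_p * math.sqrt(2 * math.pi))

def golden_section_max(f, lo, hi, tol=1e-6):
    """Locate the maximum of a unimodal function f on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5) - 1) / 2  # 1/golden ratio, about 0.618
    a, b = lo, hi
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    while b - a > tol:
        if f(c) > f(d):  # peak lies in [a, d]
            b, d = d, c
            c = b - inv_phi * (b - a)
        else:            # peak lies in [c, b]
            a, c = c, d
            d = a + inv_phi * (b - a)
    return 0.5 * (a + b)

mu_p, sigma_p = math.log(100.0), 0.5  # hypothetical mu' and sigma'
mode = golden_section_max(lambda t: lognormal_pdf(t, mu_p, sigma_p), 1.0, 1000.0)
print(f"numeric mode ~ {mode:.4f} h, closed form {math.exp(mu_p - sigma_p ** 2):.4f} h")
```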

Lifetime Distributions

A statistical distribution is fully described by its pdf. In the previous sections, we used the definition of the pdf to show how all other functions most commonly used in reliability engineering and life data analysis can be derived. The reliability function, failure rate function, mean life function, and median life function can be determined directly from the pdf, [math]\displaystyle{ f(t)\,\! }[/math]. Different distributions exist, such as the normal (Gaussian), exponential, Weibull, etc., and each has a predefined form of [math]\displaystyle{ f(t)\,\! }[/math] that can be found in many references. In fact, there are certain references that are devoted exclusively to different types of statistical distributions. These distributions were formulated by statisticians, mathematicians and engineers to mathematically model or represent certain behavior. For example, the Weibull distribution was formulated by Waloddi Weibull and thus it bears his name. Some distributions tend to better represent life data and are most commonly called "lifetime distributions".

A more detailed introduction to this topic is presented in Life Distributions.

A Brief Introduction to Life-Stress Relationships

In certain cases, one or more characteristics of the distribution change based on an outside factor. One may then want to formulate a model that combines the life distribution with a model describing how a characteristic of the distribution changes with that factor. In reliability, the most common "outside factor" is the stress applied to the component. In system analysis, stress comes into play when dealing with units in a load sharing configuration. When components of a system operate in a load sharing configuration, each component supports a portion of the total load for that aspect of the system. When one or more load sharing components fail, the operating components must take on an increased portion of the load in order to compensate for the failure(s). Therefore, the reliability of each component is dependent upon the performance of the other components in the load sharing configuration.

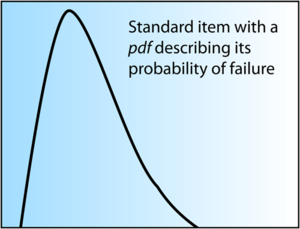

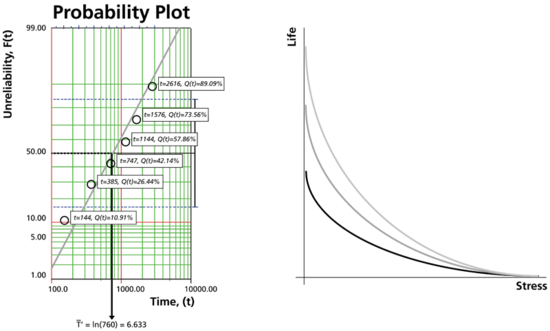

Traditionally, in a reliability block diagram, one assumes independence, and thus an item's failure characteristics can be fully described by its failure distribution. However, if the configuration includes load sharing redundancy, then a single failure distribution is no longer sufficient to describe an item's failure characteristics. Instead, the item will fail differently when operating under different loads, and the load applied to the component will vary depending on the performance of the other component(s) in the configuration. Therefore, a more complex model is needed to fully describe the failure characteristics of such blocks. This model must describe both the effect of the load (or stress) on the life of the product and the probability of failure of the item at the specified load. The models, theory and methodology used in Quantitative Accelerated Life Testing (QALT) data analysis can be used to obtain the desired model for this situation. The objective of QALT analysis is to relate the applied stress to life (or a life distribution). Similarly, in the load sharing case, one wants to relate the applied stress (or load) to life. The following figure graphically illustrates the probability density function (pdf) for a standard item, where only a single distribution is required.

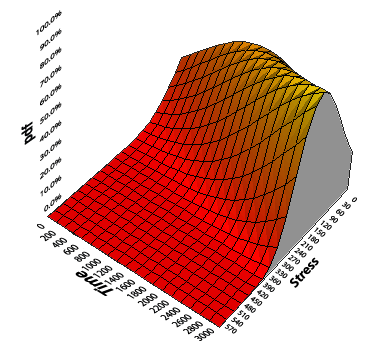

The next figure represents a load sharing item by using a 3-D surface that illustrates the pdf, load and time.

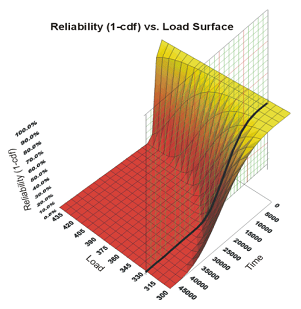

The following figure shows the reliability curve for a load sharing item vs. the applied load.

To formulate the model, a life distribution is combined with a life-stress relationship. The distribution choice is based on the product's failure characteristics while the life-stress relationship is based on how the stress affects the life characteristics. The following figure graphically shows these elements of the formulation.

The next figure shows the combination of both an underlying distribution and a life-stress model by plotting a pdf against both time and stress.

The assumed underlying life distribution can be any life distribution. The most commonly used life distributions include the Weibull, the exponential and the lognormal. The life-stress relationship describes how a specific life characteristic changes with the application of stress. The life characteristic can be any life measure such as the mean, median, [math]\displaystyle{ R(x)\,\! }[/math], [math]\displaystyle{ F(x)\,\! }[/math], etc. It is expressed as a function of stress. Depending on the assumed underlying life distribution, different life characteristics are considered. Typical life characteristics for some distributions are shown in the next table.

| Distribution | Parameters | Life Characteristic |
|---|---|---|
| Weibull | [math]\displaystyle{ \beta\,\! }[/math]*, [math]\displaystyle{ \eta \,\! }[/math] | Scale parameter, [math]\displaystyle{ \eta \,\! }[/math] |
| Exponential | [math]\displaystyle{ \lambda \,\! }[/math] | Mean life, [math]\displaystyle{ 1/{\lambda} \,\! }[/math] |
| Lognormal | [math]\displaystyle{ {\mu }'\,\! }[/math], [math]\displaystyle{ {\sigma }'\,\! }[/math]* | Median, [math]\displaystyle{ \breve{T} \,\! }[/math] |

*Usually assumed constant

For example, when considering the Weibull distribution, the scale parameter, [math]\displaystyle{ \eta \,\! }[/math], is chosen to be the life characteristic that is stress-dependent, while [math]\displaystyle{ \beta \,\! }[/math] is assumed to remain constant across different stress levels. A life-stress relationship is then assigned to [math]\displaystyle{ \eta \,\! }[/math].
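A minimal sketch of this idea, assuming the inverse power law, one life-stress relationship commonly used in accelerated life testing, [math]\displaystyle{ \eta (V)=\frac{1}{K{{V}^{n}}}\,\! }[/math], as the relationship assigned to [math]\displaystyle{ \eta \,\! }[/math], with [math]\displaystyle{ \beta \,\! }[/math] held constant. The values of [math]\displaystyle{ K\,\! }[/math], [math]\displaystyle{ n\,\! }[/math] and [math]\displaystyle{ \beta \,\! }[/math], and the load levels, are hypothetical; in practice these parameters would be estimated from test data. The output shows how a unit's reliability over the same mission drops when it must carry a larger share of the load, as a surviving unit in a load sharing configuration would.

```python
import math

# Hypothetical inverse power law (IPL) life-stress relationship for the Weibull scale parameter:
#     eta(V) = 1 / (K * V**n)
# K, n and beta are illustrative values, not estimates from any test data.
K, n = 2e-5, 1.5
beta = 2.0  # shape parameter, assumed constant across load levels

def eta_at(load):
    """Stress-dependent Weibull scale parameter, in hours."""
    return 1.0 / (K * load ** n)

def reliability(t, load):
    """Weibull reliability at time t when the unit operates at a constant load."""
    return math.exp(-((t / eta_at(load)) ** beta))

mission = 500.0  # hours
# For example, a total load of 10 split between two units (5 each) vs. carried by one survivor (10).
for load in (5.0, 10.0, 20.0):
    print(f"load {load:4.1f}: eta = {eta_at(load):8.1f} h, R({mission:.0f} h) = {reliability(mission, load):.4f}")
```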

For a detailed discussion of this topic, see ReliaSoft's Accelerated Life Testing Data Analysis Reference.