Template:Lognormal distribution estimation of the parameters

Estimation of the Parameters

As may be indicated by the name, the loglogistic distribution has certain similarities to the logistic distribution. A random variable is loglogistically distributed if the logarithm of the random variable is logistically distributed. Because of this, there are many mathematical similarities between the two distributions, as discussed in Meeker and Escobar [27]. For example, the mathematical reasoning for the construction of the probability plotting scales is very similar for these two distributions.

Loglogistic Probability Density Function

The loglogistic distribution is a 2-parameter distribution with parameters [math]\displaystyle{ \mu \,\! }[/math] and [math]\displaystyle{ \sigma \,\! }[/math]. The pdf for this distribution is given by:

- [math]\displaystyle{ f(t)=\frac{{{e}^{z}}}{\sigma {t}{{(1+{{e}^{z}})}^{2}}}\,\! }[/math]

where:

- [math]\displaystyle{ z=\frac{{t}'-\mu }{\sigma }\,\! }[/math]

- [math]\displaystyle{ {t}'=\ln (t)\,\! }[/math]

and:

- [math]\displaystyle{ \begin{align} & \mu = & \text{scale parameter} \\ & \sigma = & \text{shape parameter} \end{align}\,\! }[/math]

where [math]\displaystyle{ 0\lt t\lt \infty \,\! }[/math], [math]\displaystyle{ -\infty \lt \mu \lt \infty \,\! }[/math] and [math]\displaystyle{ 0\lt \sigma \lt \infty \,\! }[/math].

Mean, Median and Mode

The mean of the loglogistic distribution, [math]\displaystyle{ \overline{T}\,\! }[/math], is given by:

- [math]\displaystyle{ \overline{T}={{e}^{\mu }}\Gamma (1+\sigma )\Gamma (1-\sigma )\,\! }[/math]

Note that for [math]\displaystyle{ \sigma \ge 1,\,\! }[/math] [math]\displaystyle{ \overline{T}\,\! }[/math] does not exist.

The median of the loglogistic distribution, [math]\displaystyle{ \breve{T}\,\! }[/math], is given by:

- [math]\displaystyle{ \breve{T}={{e}^{\mu }}\,\! }[/math]

The mode of the loglogistic distribution, [math]\displaystyle{ \tilde{T}\,\! }[/math], if [math]\displaystyle{ \sigma \lt 1,\,\! }[/math] is given by:

- [math]\displaystyle{ \tilde{T} = e^{\mu+\sigma \ln \left(\frac{1-\sigma}{1+\sigma}\right)}\,\! }[/math]

The Standard Deviation

The standard deviation of the loglogistic distribution, [math]\displaystyle{ {{\sigma }_{T}}\,\! }[/math], is given by:

- [math]\displaystyle{ {{\sigma }_{T}}={{e}^{\mu }}\sqrt{\Gamma (1+2\sigma )\Gamma (1-2\sigma )-{{(\Gamma (1+\sigma )\Gamma (1-\sigma ))}^{2}}}\,\! }[/math]

Note that for [math]\displaystyle{ \sigma \ge 0.5,\,\! }[/math] the standard deviation does not exist.
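The moment formulas above can be sketched in a few lines of Python. This is only an illustration: the function name `loglogistic_moments` and the parameter values are hypothetical, and `None` is used here as an arbitrary way of flagging a quantity that does not exist for the given [math]\displaystyle{ \sigma \,\! }[/math].

```python
import math

def loglogistic_moments(mu, sigma):
    """Mean, median, mode and standard deviation of the loglogistic
    distribution; entries are None where the quantity does not exist."""
    median = math.exp(mu)
    mean = mode = std = None
    if sigma < 1:
        # Gamma(1 + sigma) * Gamma(1 - sigma), used by both mean and std
        g1 = math.gamma(1 + sigma) * math.gamma(1 - sigma)
        mean = math.exp(mu) * g1
        mode = math.exp(mu + sigma * math.log((1 - sigma) / (1 + sigma)))
    if sigma < 0.5:
        g2 = math.gamma(1 + 2 * sigma) * math.gamma(1 - 2 * sigma)
        std = math.exp(mu) * math.sqrt(g2 - g1 ** 2)
    return {"mean": mean, "median": median, "mode": mode, "std": std}

# Hypothetical parameter values, chosen only for illustration
m = loglogistic_moments(mu=0.0, sigma=0.25)
no_mean = loglogistic_moments(mu=0.0, sigma=1.2)  # mean, mode, std are None
```

The two thresholds in the code mirror the notes in the text: the mean requires [math]\displaystyle{ \sigma \lt 1\,\! }[/math] and the standard deviation requires [math]\displaystyle{ \sigma \lt 0.5\,\! }[/math].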

The Loglogistic Reliability Function

The reliability for a mission of time [math]\displaystyle{ T\,\! }[/math], starting at age 0, for the loglogistic distribution is determined by:

- [math]\displaystyle{ R=\frac{1}{1+{{e}^{z}}}\,\! }[/math]

where:

- [math]\displaystyle{ z=\frac{{t}'-\mu }{\sigma }\,\! }[/math]

- [math]\displaystyle{ \begin{align} {t}'=\ln (t) \end{align}\,\! }[/math]

The unreliability function is:

- [math]\displaystyle{ F=\frac{{{e}^{z}}}{1+{{e}^{z}}}\,\! }[/math]

The loglogistic Reliable Life

The loglogistic reliable life is:

- [math]\displaystyle{ \begin{align} {{T}_{R}}={{e}^{\mu +\sigma [\ln (1-R)-\ln (R)]}} \end{align}\,\! }[/math]

The loglogistic Failure Rate Function

The loglogistic failure rate is given by:

- [math]\displaystyle{ \lambda (t)=\frac{{{e}^{z}}}{\sigma t(1+{{e}^{z}})}\,\! }[/math]
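The pdf, reliability, unreliability, reliable life and failure rate functions given so far translate directly into code. The following is a minimal sketch using only the Python standard library; the function names and the parameter values are hypothetical and not part of any particular software package.

```python
import math

def z_of(t, mu, sigma):
    # z = (ln(t) - mu) / sigma
    return (math.log(t) - mu) / sigma

def pdf(t, mu, sigma):
    ez = math.exp(z_of(t, mu, sigma))
    return ez / (sigma * t * (1 + ez) ** 2)

def reliability(t, mu, sigma):
    return 1 / (1 + math.exp(z_of(t, mu, sigma)))

def unreliability(t, mu, sigma):
    ez = math.exp(z_of(t, mu, sigma))
    return ez / (1 + ez)

def failure_rate(t, mu, sigma):
    ez = math.exp(z_of(t, mu, sigma))
    return ez / (sigma * t * (1 + ez))

def reliable_life(R, mu, sigma):
    # Time at which the reliability equals R
    return math.exp(mu + sigma * (math.log(1 - R) - math.log(R)))

mu, sigma = 5.0, 0.5          # hypothetical parameter values
t90 = reliable_life(0.90, mu, sigma)
r_at_t90 = reliability(t90, mu, sigma)   # recovers 0.90 up to rounding
```

A quick consistency check on any such implementation is that the failure rate equals the pdf divided by the reliability, and that the reliability at [math]\displaystyle{ t={{e}^{\mu }}\,\! }[/math] (the median) is 0.5.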

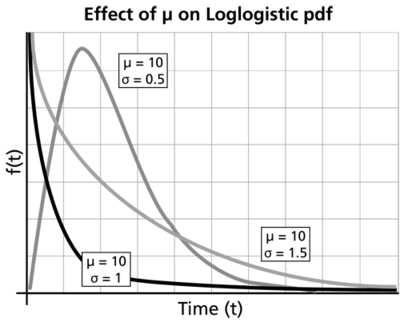

Distribution Characteristics

For [math]\displaystyle{ \sigma \gt 1\,\! }[/math] :

- [math]\displaystyle{ f(t)\,\! }[/math] decreases monotonically and is convex. Mode and mean do not exist.

For [math]\displaystyle{ \sigma =1\,\! }[/math] :

- [math]\displaystyle{ f(t)\,\! }[/math] decreases monotonically and is convex. Mode and mean do not exist. As [math]\displaystyle{ t\to 0\,\! }[/math], [math]\displaystyle{ f(t)\to \tfrac{1}{\sigma {{e}^{\tfrac{\mu }{\sigma }}}}.\,\! }[/math]

- As [math]\displaystyle{ t\to 0\,\! }[/math], [math]\displaystyle{ \lambda (t)\to \tfrac{1}{\sigma {{e}^{\tfrac{\mu }{\sigma }}}}.\,\! }[/math]

For [math]\displaystyle{ 0\lt \sigma \lt 1\,\! }[/math] :

- The shape of the loglogistic distribution is very similar to that of the lognormal distribution and the Weibull distribution.

- The pdf starts at zero, increases to its mode, and decreases thereafter.

- As [math]\displaystyle{ \mu \,\! }[/math] increases, while [math]\displaystyle{ \sigma \,\! }[/math] is kept the same, the pdf gets stretched out to the right and its height decreases, while maintaining its shape.

- As [math]\displaystyle{ \mu \,\! }[/math] decreases, while [math]\displaystyle{ \sigma \,\! }[/math] is kept the same, the pdf gets pushed in towards the left and its height increases.

- [math]\displaystyle{ \lambda (t)\,\! }[/math] increases till [math]\displaystyle{ t={{e}^{\mu +\sigma \ln (\tfrac{1-\sigma }{\sigma })}}\,\! }[/math] and decreases thereafter. [math]\displaystyle{ \lambda (t)\,\! }[/math] is concave at first, then becomes convex.
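For [math]\displaystyle{ 0\lt \sigma \lt 1\,\! }[/math], the location of the pdf mode and of the failure rate peak can be checked numerically against the closed-form expressions. The sketch below does this with a simple grid search; the parameter values and the grid spacing are arbitrary choices made for illustration.

```python
import math

def pdf(t, mu, sigma):
    ez = math.exp((math.log(t) - mu) / sigma)
    return ez / (sigma * t * (1 + ez) ** 2)

def failure_rate(t, mu, sigma):
    ez = math.exp((math.log(t) - mu) / sigma)
    return ez / (sigma * t * (1 + ez))

mu, sigma = 1.0, 0.4                          # hypothetical values, sigma < 1
grid = [0.001 * k for k in range(1, 20001)]   # t from 0.001 to 20

# Grid locations of the maxima of the pdf and of the failure rate
t_mode_numeric = max(grid, key=lambda t: pdf(t, mu, sigma))
t_peak_numeric = max(grid, key=lambda t: failure_rate(t, mu, sigma))

# Closed-form locations quoted in the text
t_mode_formula = math.exp(mu + sigma * math.log((1 - sigma) / (1 + sigma)))
t_peak_formula = math.exp(mu + sigma * math.log((1 - sigma) / sigma))
```

The numeric and closed-form locations should agree to within the grid spacing, and the failure rate peak lies to the right of the pdf mode.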

Confidence Bounds

The method used by the application in estimating the different types of confidence bounds for loglogistically distributed data is presented in this section. The complete derivations were presented in detail for a general function in Parameter Estimation.

Bounds on the Parameters

The lower and upper bounds on [math]\displaystyle{ {\mu }\,\! }[/math] are estimated from:

- [math]\displaystyle{ \begin{align} & \mu _{U}= & {{\widehat{\mu }}}+{{K}_{\alpha }}\sqrt{Var(\widehat{\mu })}\text{ (upper bound)} \\ & \mu _{L}= & {{\widehat{\mu }}}-{{K}_{\alpha }}\sqrt{Var(\widehat{\mu })}\text{ (lower bound)} \end{align}\,\! }[/math]

For parameter [math]\displaystyle{ {{\widehat{\sigma }}}\,\! }[/math], [math]\displaystyle{ \ln ({{\widehat{\sigma }}})\,\! }[/math] is treated as normally distributed, and the bounds are estimated from:

- [math]\displaystyle{ \begin{align} & {{\sigma }_{U}}= & {{\widehat{\sigma }}}\cdot {{e}^{\tfrac{{{K}_{\alpha }}\sqrt{Var(\widehat{\sigma })}}{\widehat{\sigma }}}}\text{ (upper bound)} \\ & {{\sigma }_{L}}= & \frac{{{\widehat{\sigma }}}}{{{e}^{\tfrac{{{K}_{\alpha }}\sqrt{Var(\widehat{\sigma })}}{{{\widehat{\sigma }}}}}}}\text{ (lower bound)} \end{align}\,\! }[/math]

where [math]\displaystyle{ {{K}_{\alpha }}\,\! }[/math] is defined by:

- [math]\displaystyle{ \alpha =\frac{1}{\sqrt{2\pi }}\int_{{{K}_{\alpha }}}^{\infty }{{e}^{-\tfrac{{{t}^{2}}}{2}}}dt=1-\Phi ({{K}_{\alpha }})\,\! }[/math]

If [math]\displaystyle{ \delta \,\! }[/math] is the confidence level, then [math]\displaystyle{ \alpha =\tfrac{1-\delta }{2}\,\! }[/math] for the two-sided bounds, and [math]\displaystyle{ \alpha =1-\delta \,\! }[/math] for the one-sided bounds.

The variances and covariances of [math]\displaystyle{ \widehat{\mu }\,\! }[/math] and [math]\displaystyle{ \widehat{\sigma }\,\! }[/math] are estimated as follows:

- [math]\displaystyle{ \left( \begin{matrix} \widehat{Var}\left( \widehat{\mu } \right) & \widehat{Cov}\left( \widehat{\mu },\widehat{\sigma } \right) \\ \widehat{Cov}\left( \widehat{\mu },\widehat{\sigma } \right) & \widehat{Var}\left( \widehat{\sigma } \right) \\ \end{matrix} \right)=\left( \begin{matrix} -\tfrac{{{\partial }^{2}}\Lambda }{\partial {{(\mu )}^{2}}} & -\tfrac{{{\partial }^{2}}\Lambda }{\partial \mu \partial \sigma } \\ {} & {} \\ -\tfrac{{{\partial }^{2}}\Lambda }{\partial \mu \partial \sigma } & -\tfrac{{{\partial }^{2}}\Lambda }{\partial {{\sigma }^{2}}} \\ \end{matrix} \right)_{\mu =\widehat{\mu },\sigma =\widehat{\sigma }}^{-1}\,\! }[/math]

where [math]\displaystyle{ \Lambda \,\! }[/math] is the log-likelihood function of the loglogistic distribution.
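Once the variance estimates are available, the parameter bounds are a short calculation. The following sketch assumes the estimates and variances have already been obtained elsewhere (for example, from the inverted Fisher matrix); the numeric inputs are hypothetical, and `NormalDist().inv_cdf` from the Python standard library is used to obtain [math]\displaystyle{ {{K}_{\alpha }}\,\! }[/math].

```python
import math
from statistics import NormalDist

def k_alpha(confidence, two_sided=True):
    # alpha = (1 - delta)/2 for two-sided bounds, 1 - delta for one-sided
    alpha = (1 - confidence) / 2 if two_sided else 1 - confidence
    # K_alpha solves alpha = 1 - Phi(K_alpha)
    return NormalDist().inv_cdf(1 - alpha)

def parameter_bounds(mu_hat, sigma_hat, var_mu, var_sigma,
                     confidence, two_sided=True):
    k = k_alpha(confidence, two_sided)
    half_width = k * math.sqrt(var_mu)
    mu_bounds = (mu_hat - half_width, mu_hat + half_width)
    # ln(sigma_hat) is treated as normally distributed
    factor = math.exp(k * math.sqrt(var_sigma) / sigma_hat)
    sigma_bounds = (sigma_hat / factor, sigma_hat * factor)
    return mu_bounds, sigma_bounds

# Hypothetical estimates and variances, for illustration only
(mu_l, mu_u), (s_l, s_u) = parameter_bounds(
    mu_hat=5.0, sigma_hat=0.5, var_mu=0.04, var_sigma=0.01, confidence=0.90)
```

Because the bounds on [math]\displaystyle{ \sigma \,\! }[/math] are built on the log scale, they are multiplicative rather than additive and always remain positive.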

Bounds on Reliability

The reliability of the loglogistic distribution is:

- [math]\displaystyle{ \widehat{R}=\frac{1}{1+\exp (\widehat{z})}\,\! }[/math]

where:

- [math]\displaystyle{ \widehat{z}=\frac{{t}'-\widehat{\mu }}{\widehat{\sigma }}\,\! }[/math]

Here [math]\displaystyle{ 0\lt t\lt \infty \,\! }[/math], [math]\displaystyle{ -\infty \lt \mu \lt \infty \,\! }[/math], [math]\displaystyle{ 0\lt \sigma \lt \infty \,\! }[/math]; therefore [math]\displaystyle{ -\infty \lt t'=\ln (t)\lt \infty \,\! }[/math] and [math]\displaystyle{ z\,\! }[/math] also ranges from [math]\displaystyle{ -\infty \,\! }[/math] to [math]\displaystyle{ +\infty \,\! }[/math].

The bounds on [math]\displaystyle{ z\,\! }[/math] are estimated from:

- [math]\displaystyle{ {{z}_{U}}=\widehat{z}+{{K}_{\alpha }}\sqrt{Var(\widehat{z})}\,\! }[/math]

- [math]\displaystyle{ {{z}_{L}}=\widehat{z}-{{K}_{\alpha }}\sqrt{Var(\widehat{z})\text{ }}\text{ }\,\! }[/math]

where:

- [math]\displaystyle{ Var(\widehat{z})={{(\frac{\partial z}{\partial \mu })}^{2}}Var(\widehat{\mu })+2(\frac{\partial z}{\partial \mu })(\frac{\partial z}{\partial \sigma })Cov(\widehat{\mu },\widehat{\sigma })+{{(\frac{\partial z}{\partial \sigma })}^{2}}Var(\widehat{\sigma })\,\! }[/math]

or:

- [math]\displaystyle{ Var(\widehat{z})=\frac{1}{{{\widehat{\sigma }}^{2}}}(Var(\widehat{\mu })+2\widehat{z}Cov(\widehat{\mu },\widehat{\sigma })+{{\widehat{z}}^{2}}Var(\widehat{\sigma }))\,\! }[/math]

The upper and lower bounds on reliability are:

- [math]\displaystyle{ {{R}_{U}}=\frac{1}{1+{{e}^{{{z}_{L}}}}}\text{(Upper bound)}\,\! }[/math]

- [math]\displaystyle{ {{R}_{L}}=\frac{1}{1+{{e}^{{{z}_{U}}}}}\text{(Lower bound)}\,\! }[/math]
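The reliability bounds can be sketched as follows. The function name and the numeric inputs are hypothetical; the variances and covariance are assumed to come from the inverted Fisher matrix, and two-sided bounds are assumed.

```python
import math
from statistics import NormalDist

def reliability_bounds(t, mu_hat, sigma_hat, var_mu, var_sigma, cov, confidence):
    """Two-sided bounds on loglogistic reliability at time t.
    Returns (R_L, R_hat, R_U)."""
    k = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_hat = (math.log(t) - mu_hat) / sigma_hat
    var_z = (var_mu + 2 * z_hat * cov + z_hat ** 2 * var_sigma) / sigma_hat ** 2
    half_width = k * math.sqrt(var_z)
    z_l, z_u = z_hat - half_width, z_hat + half_width

    def rel(z):
        return 1 / (1 + math.exp(z))

    # Reliability decreases in z, so z_U gives R_L and z_L gives R_U
    return rel(z_u), rel(z_hat), rel(z_l)

# Hypothetical inputs, for illustration only
r_l, r_hat, r_u = reliability_bounds(
    t=100.0, mu_hat=5.0, sigma_hat=0.5,
    var_mu=0.04, var_sigma=0.01, cov=0.002, confidence=0.90)
```

Working on the [math]\displaystyle{ z\,\! }[/math] scale and transforming back keeps both bounds inside the interval (0, 1).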

Bounds on Time

The bounds around time for a given loglogistic percentile, or unreliability, are estimated by first solving the reliability equation with respect to time, as follows:

- [math]\displaystyle{ \widehat{T}(\widehat{\mu },\widehat{\sigma })={{e}^{\widehat{\mu }+\widehat{\sigma }z}}\,\! }[/math]

where:

- [math]\displaystyle{ \begin{align} z=\ln (1-R)-\ln (R) \end{align}\,\! }[/math]

or:

- [math]\displaystyle{ \ln (\hat{T})=\widehat{\mu }+\widehat{\sigma }z\,\! }[/math]

Let:

- [math]\displaystyle{ {u}=\ln (\hat{T})=\widehat{\mu }+\widehat{\sigma }z\,\! }[/math]

then:

- [math]\displaystyle{ {u}_{U}=\widehat{u}+{{K}_{\alpha }}\sqrt{Var(\widehat{u})\text{ }}\text{ }\,\! }[/math]

- [math]\displaystyle{ {u}_{L}=\widehat{u}-{{K}_{\alpha }}\sqrt{Var(\widehat{u})\text{ }}\text{ }\,\! }[/math]

where:

- [math]\displaystyle{ Var(\widehat{u})={{(\frac{\partial u}{\partial \mu })}^{2}}Var(\widehat{\mu })+2(\frac{\partial u}{\partial \mu })(\frac{\partial u}{\partial \sigma })Cov(\widehat{\mu },\widehat{\sigma })+{{(\frac{\partial u}{\partial \sigma })}^{2}}Var(\widehat{\sigma })\,\! }[/math]

or:

- [math]\displaystyle{ Var(\widehat{u})=Var(\widehat{\mu })+2\widehat{z}Cov(\widehat{\mu },\widehat{\sigma })+{{\widehat{z}}^{2}}Var(\widehat{\sigma })\,\! }[/math]

The upper and lower bounds are then found by:

- [math]\displaystyle{ {{T}_{U}}={{e}^{{{u}_{U}}}}\text{ (upper bound)}\,\! }[/math]

- [math]\displaystyle{ {{T}_{L}}={{e}^{{{u}_{L}}}}\text{ (lower bound)}\,\! }[/math]
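The same pattern applies to the bounds on time. In the sketch below the function name and the inputs are hypothetical, two-sided bounds are assumed, and the variances and covariance are assumed to be already available.

```python
import math
from statistics import NormalDist

def time_bounds(R, mu_hat, sigma_hat, var_mu, var_sigma, cov, confidence):
    """Two-sided bounds on the time at which reliability equals R.
    Returns (T_L, T_hat, T_U)."""
    k = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z = math.log(1 - R) - math.log(R)
    u_hat = mu_hat + sigma_hat * z          # u = ln(T)
    var_u = var_mu + 2 * z * cov + z ** 2 * var_sigma
    half_width = k * math.sqrt(var_u)
    return (math.exp(u_hat - half_width),
            math.exp(u_hat),
            math.exp(u_hat + half_width))

# Hypothetical inputs, for illustration only
t_l, t_hat, t_u = time_bounds(
    R=0.90, mu_hat=5.0, sigma_hat=0.5,
    var_mu=0.04, var_sigma=0.01, cov=0.002, confidence=0.90)
```

Since the interval is symmetric in [math]\displaystyle{ u=\ln (T)\,\! }[/math], the point estimate is the geometric mean of the two bounds on the time scale.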

General Examples

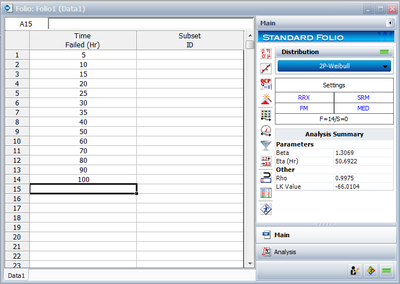

Determine the loglogistic parameter estimates for the data given in the following table.

Set up the folio for times-to-failure data that includes interval and left censored data, then enter the data. The computed parameters for maximum likelihood are calculated to be:

- [math]\displaystyle{ \begin{align} & \hat{\mu }= & 5.9772 \\ & \hat{\sigma }= & 0.3256 \end{align}\,\! }[/math]

For rank regression on [math]\displaystyle{ X\,\! }[/math]:

- [math]\displaystyle{ \begin{align} & \hat{\mu }= & 5.9281 \\ & \hat{\sigma }= & 0.3821 \end{align}\,\! }[/math]

For rank regression on [math]\displaystyle{ Y\,\! }[/math]:

- [math]\displaystyle{ \begin{align} & \hat{\mu }= & 5.9772 \\ & \hat{\sigma }= & 0.3256 \end{align}\,\! }[/math]

Rank Regression on Y

Performing a rank regression on Y requires that a straight line be fitted to a set of data points such that the sum of the squares of the vertical deviations from the points to the line is minimized.

The least squares parameter estimation method, or regression analysis, was discussed in Chapter 3 and the following equations for regression on Y were derived, and are again applicable:

- [math]\displaystyle{ \hat{a}=\bar{y}-\hat{b}\bar{x}=\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}}}{N}-\hat{b}\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}}{N} }[/math]

- and:

- [math]\displaystyle{ \hat{b}=\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}{{y}_{i}}-\tfrac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}}}{N}}{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,x_{i}^{2}-\tfrac{{{\left( \underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}} \right)}^{2}}}{N}} }[/math]

In our case the equations for [math]\displaystyle{ {{y}_{i}} }[/math] and [math]\displaystyle{ x_{i} }[/math] are:

- [math]\displaystyle{ {{y}_{i}}={{\Phi }^{-1}}\left[ F(T_{i}^{\prime }) \right] }[/math]

- and:

- [math]\displaystyle{ {{x}_{i}}=T_{i}^{\prime } }[/math]

where the [math]\displaystyle{ F(T_{i}^{\prime }) }[/math] is estimated from the median ranks. Once [math]\displaystyle{ \widehat{a} }[/math] and [math]\displaystyle{ \widehat{b} }[/math] are obtained, then [math]\displaystyle{ \widehat{\sigma } }[/math] and [math]\displaystyle{ \widehat{\mu } }[/math] can easily be obtained from Eqns. (aln) and (bln).

The Correlation Coefficient

The estimator of [math]\displaystyle{ \rho }[/math] is the sample correlation coefficient, [math]\displaystyle{ \hat{\rho } }[/math] , given by:

- [math]\displaystyle{ \hat{\rho }=\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,({{x}_{i}}-\overline{x})({{y}_{i}}-\overline{y})}{\sqrt{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{({{x}_{i}}-\overline{x})}^{2}}\cdot \underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{({{y}_{i}}-\overline{y})}^{2}}}} }[/math]

Example 2

Fourteen units were reliability tested and the following life test data were obtained:

| Table 9.1 - Life Test Data for Example 2 | |

| Data point index | Time-to-failure |

|---|---|

| 1 | 5 |

| 2 | 10 |

| 3 | 15 |

| 4 | 20 |

| 5 | 25 |

| 6 | 30 |

| 7 | 35 |

| 8 | 40 |

| 9 | 50 |

| 10 | 60 |

| 11 | 70 |

| 12 | 80 |

| 13 | 90 |

| 14 | 100 |

Assuming the data follow a lognormal distribution, estimate the parameters and the correlation coefficient, [math]\displaystyle{ \rho }[/math] , using rank regression on Y.

Solution to Example 2

Construct Table 9.2, as shown next.

The median rank values ( [math]\displaystyle{ F({{T}_{i}}) }[/math] ) can be found in rank tables or by using the Quick Statistical Reference in Weibull++ .

The [math]\displaystyle{ {{y}_{i}} }[/math] values were obtained from the standardized normal distribution's area tables by entering the median rank as [math]\displaystyle{ F(z) }[/math] and reading off the corresponding [math]\displaystyle{ z }[/math] value ( [math]\displaystyle{ {{y}_{i}} }[/math] ).

Given the values in the table above, calculate [math]\displaystyle{ \widehat{a} }[/math] and [math]\displaystyle{ \widehat{b} }[/math] using Eqns. (aaln) and (bbln):

- [math]\displaystyle{ \begin{align} & \widehat{b}= & \frac{\underset{i=1}{\overset{14}{\mathop{\sum }}}\,T_{i}^{\prime }{{y}_{i}}-(\underset{i=1}{\overset{14}{\mathop{\sum }}}\,T_{i}^{\prime })(\underset{i=1}{\overset{14}{\mathop{\sum }}}\,{{y}_{i}})/14}{\underset{i=1}{\overset{14}{\mathop{\sum }}}\,T_{i}^{\prime 2}-{{(\underset{i=1}{\overset{14}{\mathop{\sum }}}\,T_{i}^{\prime })}^{2}}/14} \\ & & \\ & \widehat{b}= & \frac{10.4473-(49.2220)(0)/14}{183.1530-{{(49.2220)}^{2}}/14} \end{align} }[/math]

- or:

- [math]\displaystyle{ \widehat{b}=1.0349 }[/math]

- and:

- [math]\displaystyle{ \widehat{a}=\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}}}{N}-\widehat{b}\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,T_{i}^{\prime }}{N} }[/math]

- or:

- [math]\displaystyle{ \widehat{a}=\frac{0}{14}-(1.0349)\frac{49.2220}{14}=-3.6386 }[/math]

- Therefore, from Eqn. (bln):

- [math]\displaystyle{ {{\sigma }_{{{T}'}}}=\frac{1}{\widehat{b}}=\frac{1}{1.0349}=0.9663 }[/math]

- and from Eqn. (aln):

- [math]\displaystyle{ {\mu }'=-\widehat{a}\cdot {{\sigma }_{{{T}'}}}=-(-3.6386)\cdot 0.9663 }[/math]

- or:

- [math]\displaystyle{ {\mu }'=3.516 }[/math]

The mean and the standard deviation of the lognormal distribution are obtained using Eqns. (mean) and (sdv):

- [math]\displaystyle{ \overline{T}=\mu ={{e}^{3.516+\tfrac{1}{2}{{0.9663}^{2}}}}=53.6707\text{ hours} }[/math]

- and:

- [math]\displaystyle{ {{\sigma }_{T}}=\sqrt{({{e}^{2\cdot 3.516+{{0.9663}^{2}}}})({{e}^{{{0.9663}^{2}}}}-1)}=66.69\text{ hours} }[/math]

The correlation coefficient can be estimated using Eqn. (RHOln):

- [math]\displaystyle{ \widehat{\rho }=0.9754 }[/math]

The above example can be repeated using Weibull++ , using RRY.

The mean can be obtained from the QCP and both the mean and the standard deviation can be obtained from the Function Wizard.
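The rank regression on Y steps of Example 2 can also be sketched in plain Python. This is an illustration under stated assumptions rather than the procedure used by any particular software: the median ranks are computed here by solving the cumulative binomial equation with bisection (an assumption about how the rank tables were produced), and all function and variable names are hypothetical.

```python
import math
from statistics import NormalDist

# Times-to-failure from Table 9.1
times = [5, 10, 15, 20, 25, 30, 35, 40, 50, 60, 70, 80, 90, 100]
n = len(times)

def median_rank(i, n):
    """Median rank of the i-th of n failures: the p for which the
    probability of at least i successes in n trials equals 0.5."""
    def tail(p):
        return sum(math.comb(n, k) * p ** k * (1 - p) ** (n - k)
                   for k in range(i, n + 1))
    lo, hi = 0.0, 1.0
    for _ in range(60):            # tail(p) is increasing in p
        mid = (lo + hi) / 2
        if tail(mid) < 0.5:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

x = [math.log(t) for t in times]                        # x_i = T'_i
y = [NormalDist().inv_cdf(median_rank(i, n))            # y_i = Phi^-1[F(T'_i)]
     for i in range(1, n + 1)]

sx, sy = sum(x), sum(y)
sxy = sum(a * b for a, b in zip(x, y))
sxx = sum(a * a for a in x)
syy = sum(b * b for b in y)

# Least squares fit of y on x
b_hat = (sxy - sx * sy / n) / (sxx - sx ** 2 / n)
a_hat = sy / n - b_hat * sx / n

sigma = 1 / b_hat          # standard deviation of ln(T)
mu = -a_hat * sigma        # mean of ln(T)
rho = (sxy - sx * sy / n) / math.sqrt((sxx - sx ** 2 / n) * (syy - sy ** 2 / n))

# Mean and standard deviation of T itself
mean_T = math.exp(mu + sigma ** 2 / 2)
std_T = math.sqrt(math.exp(2 * mu + sigma ** 2) * (math.exp(sigma ** 2) - 1))
```

If the computed median ranks match the tabulated ones, the results should land close to the values quoted in the example; small differences in the last digits can arise from rounding in the tables.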

Rank Regression on X

Performing a rank regression on X requires that a straight line be fitted to a set of data points such that the sum of the squares of the horizontal deviations from the points to the line is minimized.

Again, the first task is to bring our [math]\displaystyle{ cdf }[/math] function into a linear form. This step is exactly the same as in regression on Y analysis and Eqns. (lnorm), (yln), (aln) and (bln) apply in this case too. The deviation from the previous analysis begins on the least squares fit part, where in this case we treat [math]\displaystyle{ x }[/math] as the dependent variable and [math]\displaystyle{ y }[/math] as the independent variable. The best-fitting straight line to the data, for regression on X (see Chapter 3), is the straight line:

- [math]\displaystyle{ x=\widehat{a}+\widehat{b}y }[/math]

The corresponding equations for [math]\displaystyle{ \widehat{a} }[/math] and [math]\displaystyle{ \widehat{b} }[/math] are:

- [math]\displaystyle{ \hat{a}=\overline{x}-\hat{b}\overline{y}=\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}}{N}-\hat{b}\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}}}{N} }[/math]

- and:

- [math]\displaystyle{ \hat{b}=\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}{{y}_{i}}-\tfrac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}}}{N}}{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,y_{i}^{2}-\tfrac{{{\left( \underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}} \right)}^{2}}}{N}} }[/math]

- where:

- [math]\displaystyle{ {{y}_{i}}={{\Phi }^{-1}}\left[ F(T_{i}^{\prime }) \right] }[/math]

- and:

- [math]\displaystyle{ {{x}_{i}}=T_{i}^{\prime } }[/math]

and the [math]\displaystyle{ F(T_{i}^{\prime }) }[/math] is estimated from the median ranks. Once [math]\displaystyle{ \widehat{a} }[/math] and [math]\displaystyle{ \widehat{b} }[/math] are obtained, solve Eqn. (xlineln) for the unknown [math]\displaystyle{ y }[/math] , which corresponds to:

- [math]\displaystyle{ y=-\frac{\widehat{a}}{\widehat{b}}+\frac{1}{\widehat{b}}x }[/math]

Solving for the parameters from Eqns. (bln) and (aln) we get:

- [math]\displaystyle{ a=-\frac{\widehat{a}}{\widehat{b}}=-\frac{{{\mu }'}}{{{\sigma }_{{{T}'}}}} }[/math]

- and:

- [math]\displaystyle{ b=\frac{1}{\widehat{b}}=\frac{1}{{{\sigma }_{{{T}'}}}}\text{ } }[/math]

The correlation coefficient is evaluated as before using Eqn. (RHOln).

Example 3

Using the data of Example 2 and assuming a lognormal distribution, estimate the parameters and estimate the correlation coefficient, [math]\displaystyle{ \rho }[/math] , using rank regression on X.

Solution to Example 3

Table 9.2 constructed in Example 2 applies to this example as well. Using the values in this table we get:

- [math]\displaystyle{ \begin{align} & \hat{b}= & \frac{\underset{i=1}{\overset{14}{\mathop{\sum }}}\,T_{i}^{\prime }{{y}_{i}}-\tfrac{\underset{i=1}{\overset{14}{\mathop{\sum }}}\,T_{i}^{\prime }\underset{i=1}{\overset{14}{\mathop{\sum }}}\,{{y}_{i}}}{14}}{\underset{i=1}{\overset{14}{\mathop{\sum }}}\,y_{i}^{2}-\tfrac{{{\left( \underset{i=1}{\overset{14}{\mathop{\sum }}}\,{{y}_{i}} \right)}^{2}}}{14}} \\ & & \\ & \widehat{b}= & \frac{10.4473-(49.2220)(0)/14}{11.3646-{{(0)}^{2}}/14} \end{align} }[/math]

- or:

- [math]\displaystyle{ \widehat{b}=0.9193 }[/math]

- and:

- [math]\displaystyle{ \hat{a}=\overline{x}-\hat{b}\overline{y}=\frac{\underset{i=1}{\overset{14}{\mathop{\sum }}}\,T_{i}^{\prime }}{14}-\widehat{b}\frac{\underset{i=1}{\overset{14}{\mathop{\sum }}}\,{{y}_{i}}}{14} }[/math]

- or:

- [math]\displaystyle{ \widehat{a}=\frac{49.2220}{14}-(0.9193)\frac{(0)}{14}=3.5159 }[/math]

Therefore, from Eqn. (blnx):

- [math]\displaystyle{ {{\sigma }_{{{T}'}}}=\widehat{b}=0.9193 }[/math]

and from Eqn. (alnx):

- [math]\displaystyle{ {\mu }'=\frac{\widehat{a}}{\widehat{b}}{{\sigma }_{{{T}'}}}=\frac{3.5159}{0.9193}\cdot 0.9193=3.5159 }[/math]

Using Eqns. (mean) and (sdv) we get:

- [math]\displaystyle{ \overline{T}=\mu =51.3393\text{ hours} }[/math]

- and:

- [math]\displaystyle{ {{\sigma }_{T}}=59.1682\text{ hours}. }[/math]

The correlation coefficient is found using Eqn. (RHOln):

- [math]\displaystyle{ \widehat{\rho }=0.9754. }[/math]

Note that the regression on Y analysis is not necessarily the same as the regression on X. The only time when the results of the two regression types are the same (i.e. will yield the same equation for a line) is when the data lie perfectly on a line.
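The arithmetic of Example 3, and its relation to the regression on Y, can be reproduced from the sums quoted in the two examples. The sketch below uses only those quoted sums; the variable names are hypothetical.

```python
import math

# Sums quoted in Examples 2 and 3 (x_i = T'_i, y_i = Phi^-1[F(T'_i)])
N = 14
sum_x, sum_y = 49.2220, 0.0
sum_xy, sum_xx, sum_yy = 10.4473, 183.1530, 11.3646

# Centered sums of products and squares
sxy = sum_xy - sum_x * sum_y / N
sxx = sum_xx - sum_x ** 2 / N
syy = sum_yy - sum_y ** 2 / N

# Regression on X: x = a + b*y, so sigma = b and mu = a
b_x = sxy / syy
a_x = sum_x / N - b_x * sum_y / N
sigma_rrx, mu_rrx = b_x, a_x

# Regression on Y slope, for comparison: sigma = 1 / b
b_y = sxy / sxx
sigma_rry = 1 / b_y

# Sample correlation coefficient (the same for both regressions)
rho = sxy / math.sqrt(sxx * syy)

# Mean and standard deviation of T from the regression-on-X estimates
mean_T = math.exp(mu_rrx + sigma_rrx ** 2 / 2)
std_T = mean_T * math.sqrt(math.exp(sigma_rrx ** 2) - 1)
```

The product of the two slopes equals the squared correlation coefficient, [math]\displaystyle{ {{\widehat{b}}_{X}}{{\widehat{b}}_{Y}}={{\widehat{\rho }}^{2}} }[/math], which is why the two regressions give the same line only when [math]\displaystyle{ \widehat{\rho }=\pm 1 }[/math], that is, when the data lie perfectly on a line.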

Using Weibull++ , with the Rank Regression on X option, the results are:

Maximum Likelihood Estimation

As outlined in Chapter 3, maximum likelihood estimation works by developing a likelihood function based on the available data and finding the values of the parameter estimates that maximize the likelihood function. This can be achieved by using iterative methods to determine the parameter estimate values that maximize the likelihood function. However, this can be rather difficult and time-consuming, particularly when dealing with the three-parameter distribution. Another method of finding the parameter estimates involves taking the partial derivatives of the likelihood equation with respect to the parameters, setting the resulting equations equal to zero, and solving simultaneously to determine the values of the parameter estimates. The log-likelihood functions and associated partial derivatives used to determine maximum likelihood estimates for the lognormal distribution are covered in Appendix C.

Confidence Bounds

The method used by the application in estimating the different types of confidence bounds for lognormally distributed data is presented in this section. Note that there are closed-form solutions for both the normal and lognormal reliability that can be obtained without the use of the Fisher information matrix. However, these closed-form solutions only apply to complete data. To achieve consistent application across all possible data types, Weibull++ always uses the Fisher matrix in computing confidence intervals. The complete derivations were presented in detail for a general function in Chapter 5. For a discussion on exact confidence bounds for the normal and lognormal, see Chapter 8.

Fisher Matrix Bounds

Bounds on the Parameters

The lower and upper bounds on the mean, [math]\displaystyle{ {\mu }' }[/math] , are estimated from:

- [math]\displaystyle{ \begin{align} & \mu _{U}^{\prime }= & {{\widehat{\mu }}^{\prime }}+{{K}_{\alpha }}\sqrt{Var({{\widehat{\mu }}^{\prime }})}\text{ (upper bound),} \\ & \mu _{L}^{\prime }= & {{\widehat{\mu }}^{\prime }}-{{K}_{\alpha }}\sqrt{Var({{\widehat{\mu }}^{\prime }})}\text{ (lower bound)}\text{.} \end{align} }[/math]

For the standard deviation, [math]\displaystyle{ {{\widehat{\sigma }}_{{{T}'}}} }[/math] , [math]\displaystyle{ \ln ({{\widehat{\sigma }}_{{{T}'}}}) }[/math] is treated as normally distributed, and the bounds are estimated from:

- [math]\displaystyle{ \begin{align} & {{\sigma }_{U}}= & {{\widehat{\sigma }}_{{{T}'}}}\cdot {{e}^{\tfrac{{{K}_{\alpha }}\sqrt{Var({{\widehat{\sigma }}_{{{T}'}}})}}{{{\widehat{\sigma }}_{{{T}'}}}}}}\text{ (upper bound),} \\ & {{\sigma }_{L}}= & \frac{{{\widehat{\sigma }}_{{{T}'}}}}{{{e}^{\tfrac{{{K}_{\alpha }}\sqrt{Var({{\widehat{\sigma }}_{{{T}'}}})}}{{{\widehat{\sigma }}_{{{T}'}}}}}}}\text{ (lower bound),} \end{align} }[/math]

where [math]\displaystyle{ {{K}_{\alpha }} }[/math] is defined by:

- [math]\displaystyle{ \alpha =\frac{1}{\sqrt{2\pi }}\int_{{{K}_{\alpha }}}^{\infty }{{e}^{-\tfrac{{{t}^{2}}}{2}}}dt=1-\Phi ({{K}_{\alpha }}) }[/math]

If [math]\displaystyle{ \delta }[/math] is the confidence level, then [math]\displaystyle{ \alpha =\tfrac{1-\delta }{2} }[/math] for the two-sided bounds and [math]\displaystyle{ \alpha =1-\delta }[/math] for the one-sided bounds.

The variances and covariances of [math]\displaystyle{ {{\widehat{\mu }}^{\prime }} }[/math] and [math]\displaystyle{ {{\widehat{\sigma }}_{{{T}'}}} }[/math] are estimated as follows:

- [math]\displaystyle{ \left( \begin{matrix} \widehat{Var}\left( {{\widehat{\mu }}^{\prime }} \right) & \widehat{Cov}\left( {{\widehat{\mu }}^{\prime }},{{\widehat{\sigma }}_{{{T}'}}} \right) \\ \widehat{Cov}\left( {{\widehat{\mu }}^{\prime }},{{\widehat{\sigma }}_{{{T}'}}} \right) & \widehat{Var}\left( {{\widehat{\sigma }}_{{{T}'}}} \right) \\ \end{matrix} \right)=\left( \begin{matrix} -\tfrac{{{\partial }^{2}}\Lambda }{\partial {{({\mu }')}^{2}}} & -\tfrac{{{\partial }^{2}}\Lambda }{\partial {\mu }'\partial {{\sigma }_{{{T}'}}}} \\ {} & {} \\ -\tfrac{{{\partial }^{2}}\Lambda }{\partial {\mu }'\partial {{\sigma }_{{{T}'}}}} & -\tfrac{{{\partial }^{2}}\Lambda }{\partial \sigma _{{{T}'}}^{2}} \\ \end{matrix} \right)_{{\mu }'={{\widehat{\mu }}^{\prime }},{{\sigma }_{{{T}'}}}={{\widehat{\sigma }}_{{{T}'}}}}^{-1} }[/math]

where [math]\displaystyle{ \Lambda }[/math] is the log-likelihood function of the lognormal distribution.

Bounds on Reliability

The reliability of the lognormal distribution is:

- [math]\displaystyle{ \hat{R}({T}';{\mu }',{{\sigma }_{{{T}'}}})=\int_{{{T}'}}^{\infty }\frac{1}{{{\widehat{\sigma }}_{{{T}'}}}\sqrt{2\pi }}{{e}^{-\tfrac{1}{2}{{\left( \tfrac{t-{{\widehat{\mu }}^{\prime }}}{{{\widehat{\sigma }}_{{{T}'}}}} \right)}^{2}}}}dt }[/math]

Let [math]\displaystyle{ \widehat{z}(t;{{\hat{\mu }}^{\prime }},{{\hat{\sigma }}_{{{T}'}}})=\tfrac{t-{{\widehat{\mu }}^{\prime }}}{{{\widehat{\sigma }}_{{{T}'}}}}, }[/math] then [math]\displaystyle{ \tfrac{d\widehat{z}}{dt}=\tfrac{1}{{{\widehat{\sigma }}_{{{T}'}}}}. }[/math] For [math]\displaystyle{ t={T}' }[/math] , [math]\displaystyle{ \widehat{z}=\tfrac{{T}'-{{\widehat{\mu }}^{\prime }}}{{{\widehat{\sigma }}_{{{T}'}}}} }[/math] , and for [math]\displaystyle{ t=\infty , }[/math] [math]\displaystyle{ \widehat{z}=\infty . }[/math] The above equation then becomes:

- [math]\displaystyle{ \hat{R}(\widehat{z})=\int_{\widehat{z}({T}')}^{\infty }\frac{1}{\sqrt{2\pi }}{{e}^{-\tfrac{1}{2}{{z}^{2}}}}dz }[/math]

The bounds on [math]\displaystyle{ z }[/math] are estimated from:

- [math]\displaystyle{ \begin{align} & {{z}_{U}}= & \widehat{z}+{{K}_{\alpha }}\sqrt{Var(\widehat{z})} \\ & {{z}_{L}}= & \widehat{z}-{{K}_{\alpha }}\sqrt{Var(\widehat{z})} \end{align} }[/math]

- where:

- [math]\displaystyle{ \begin{align} & Var(\widehat{z})= & \left( \frac{\partial z}{\partial {\mu }'} \right)_{{{\widehat{\mu }}^{\prime }}}^{2}Var({{\widehat{\mu }}^{\prime }})+\left( \frac{\partial z}{\partial {{\sigma }_{{{T}'}}}} \right)_{{{\widehat{\sigma }}_{{{T}'}}}}^{2}Var({{\widehat{\sigma }}_{{{T}'}}}) \\ & & +2{{\left( \frac{\partial z}{\partial {\mu }'} \right)}_{{{\widehat{\mu }}^{\prime }}}}{{\left( \frac{\partial z}{\partial {{\sigma }_{{{T}'}}}} \right)}_{{{\widehat{\sigma }}_{{{T}'}}}}}Cov\left( {{\widehat{\mu }}^{\prime }},{{\widehat{\sigma }}_{{{T}'}}} \right) \end{align} }[/math]

- or:

- [math]\displaystyle{ Var(\widehat{z})=\frac{1}{\widehat{\sigma }_{{{T}'}}^{2}}\left[ Var({{\widehat{\mu }}^{\prime }})+{{\widehat{z}}^{2}}Var({{\widehat{\sigma }}_{{{T}'}}})+2\cdot \widehat{z}\cdot Cov\left( {{\widehat{\mu }}^{\prime }},{{\widehat{\sigma }}_{{{T}'}}} \right) \right] }[/math]

The upper and lower bounds on reliability are:

- [math]\displaystyle{ \begin{align} & {{R}_{U}}= & \int_{{{z}_{L}}}^{\infty }\frac{1}{\sqrt{2\pi }}{{e}^{-\tfrac{1}{2}{{z}^{2}}}}dz\text{ (Upper bound)} \\ & {{R}_{L}}= & \int_{{{z}_{U}}}^{\infty }\frac{1}{\sqrt{2\pi }}{{e}^{-\tfrac{1}{2}{{z}^{2}}}}dz\text{ (Lower bound)} \end{align} }[/math]

Bounds on Time

The bounds around time for a given lognormal percentile, or unreliability, are estimated by first solving the reliability equation with respect to time, as follows:

- [math]\displaystyle{ {T}'({{\widehat{\mu }}^{\prime }},{{\widehat{\sigma }}_{{{T}'}}})={{\widehat{\mu }}^{\prime }}+z\cdot {{\widehat{\sigma }}_{{{T}'}}} }[/math]

- where:

- [math]\displaystyle{ z={{\Phi }^{-1}}\left[ F({T}') \right] }[/math]

- and:

- [math]\displaystyle{ \Phi (z)=\frac{1}{\sqrt{2\pi }}\int_{-\infty }^{z({T}')}{{e}^{-\tfrac{1}{2}{{z}^{2}}}}dz }[/math]

The next step is to calculate the variance of [math]\displaystyle{ {T}'({{\widehat{\mu }}^{\prime }},{{\widehat{\sigma }}_{{{T}'}}}): }[/math]

- [math]\displaystyle{ \begin{align} & Var({{{\hat{T}}}^{\prime }})= & {{\left( \frac{\partial {T}'}{\partial {\mu }'} \right)}^{2}}Var({{\widehat{\mu }}^{\prime }})+{{\left( \frac{\partial {T}'}{\partial {{\sigma }_{{{T}'}}}} \right)}^{2}}Var({{\widehat{\sigma }}_{{{T}'}}}) \\ & & +2\left( \frac{\partial {T}'}{\partial {\mu }'} \right)\left( \frac{\partial {T}'}{\partial {{\sigma }_{{{T}'}}}} \right)Cov\left( {{\widehat{\mu }}^{\prime }},{{\widehat{\sigma }}_{{{T}'}}} \right) \\ & & \\ & Var({{{\hat{T}}}^{\prime }})= & Var({{\widehat{\mu }}^{\prime }})+{{\widehat{z}}^{2}}Var({{\widehat{\sigma }}_{{{T}'}}})+2\cdot \widehat{z}\cdot Cov\left( {{\widehat{\mu }}^{\prime }},{{\widehat{\sigma }}_{{{T}'}}} \right) \end{align} }[/math]

The upper and lower bounds are then found by:

- [math]\displaystyle{ \begin{align} & T_{U}^{\prime }= & \ln {{T}_{U}}={{{\hat{T}}}^{\prime }}+{{K}_{\alpha }}\sqrt{Var({{{\hat{T}}}^{\prime }})} \\ & T_{L}^{\prime }= & \ln {{T}_{L}}={{{\hat{T}}}^{\prime }}-{{K}_{\alpha }}\sqrt{Var({{{\hat{T}}}^{\prime }})} \end{align} }[/math]

Solving for [math]\displaystyle{ {{T}_{U}} }[/math] and [math]\displaystyle{ {{T}_{L}} }[/math] we get:

- [math]\displaystyle{ \begin{align} & {{T}_{U}}= & {{e}^{T_{U}^{\prime }}}\text{ (upper bound),} \\ & {{T}_{L}}= & {{e}^{T_{L}^{\prime }}}\text{ (lower bound)}\text{.} \end{align} }[/math]

Example 4

Using the data of Example 2 and assuming a lognormal distribution, estimate the parameters using the MLE method.

Solution to Example 4

In this example we have only complete data. Thus, the partials reduce to:

- [math]\displaystyle{ \begin{align} & \frac{\partial \Lambda }{\partial {\mu }'}= & \frac{1}{\sigma _{{{T}'}}^{2}}\cdot \underset{i=1}{\overset{14}{\mathop \sum }}\,\left( \ln ({{T}_{i}})-{\mu }' \right)=0 \\ & \frac{\partial \Lambda }{\partial {{\sigma }_{{{T}'}}}}= & \underset{i=1}{\overset{14}{\mathop \sum }}\,\left( \frac{{{\left( \ln ({{T}_{i}})-{\mu }' \right)}^{2}}}{\sigma _{{{T}'}}^{3}}-\frac{1}{{{\sigma }_{{{T}'}}}} \right)=0 \end{align} }[/math]

Substituting the values of [math]\displaystyle{ {{T}_{i}} }[/math] and solving the above system simultaneously, we get:

- [math]\displaystyle{ \begin{align} & {{{\hat{\sigma }}}_{{{T}'}}}= & 0.849 \\ & {{{\hat{\mu }}}^{\prime }}= & 3.516 \end{align} }[/math]

Using Eqns. (mean) and (sdv) we get:

- [math]\displaystyle{ \overline{T}=\hat{\mu }=48.25\text{ hours} }[/math]

- and:

- [math]\displaystyle{ {{\hat{\sigma }}_{T}}=49.61\text{ hours}. }[/math]

The variance/covariance matrix is given by:

- [math]\displaystyle{ \left[ \begin{matrix} \widehat{Var}\left( {{{\hat{\mu }}}^{\prime }} \right)=0.0515 & {} & \widehat{Cov}\left( {{{\hat{\mu }}}^{\prime }},{{{\hat{\sigma }}}_{{{T}'}}} \right)=0.0000 \\ {} & {} & {} \\ \widehat{Cov}\left( {{{\hat{\mu }}}^{\prime }},{{{\hat{\sigma }}}_{{{T}'}}} \right)=0.0000 & {} & \widehat{Var}\left( {{{\hat{\sigma }}}_{{{T}'}}} \right)=0.0258 \\ \end{matrix} \right] }[/math]
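For complete data the two likelihood equations have a closed-form solution, so Example 4 can be checked without an iterative solver. The sketch below assumes complete data only; the variable names are hypothetical, and the variance expressions are those obtained by inverting the local Fisher matrix of the normal log-likelihood in [math]\displaystyle{ \ln (T) }[/math] at the MLE.

```python
import math

# Times-to-failure from Table 9.1 (complete data)
times = [5, 10, 15, 20, 25, 30, 35, 40, 50, 60, 70, 80, 90, 100]
x = [math.log(t) for t in times]
n = len(x)

# Closed-form MLE for complete lognormal data
mu_hat = sum(x) / n
sigma_hat = math.sqrt(sum((xi - mu_hat) ** 2 for xi in x) / n)

# Both partial derivatives of the log-likelihood vanish at the MLE
score_mu = sum(xi - mu_hat for xi in x) / sigma_hat ** 2
score_sigma = sum((xi - mu_hat) ** 2 / sigma_hat ** 3 - 1 / sigma_hat
                  for xi in x)

# Inverse of the local Fisher matrix for complete data
var_mu = sigma_hat ** 2 / n
var_sigma = sigma_hat ** 2 / (2 * n)
cov = 0.0

# Mean and standard deviation of T
mean_T = math.exp(mu_hat + sigma_hat ** 2 / 2)
std_T = mean_T * math.sqrt(math.exp(sigma_hat ** 2) - 1)
```

Note that the MLE of the standard deviation divides by [math]\displaystyle{ N }[/math] rather than [math]\displaystyle{ N-1 }[/math], which is the source of the bias discussed in the next section.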

Note About Bias

See the discussion regarding bias with the normal distribution in Chapter 8 for information regarding parameter bias in the lognormal distribution.

Likelihood Ratio Confidence Bounds

Bounds on Parameters

As covered in Chapter 5, the likelihood ratio confidence bounds are calculated by finding values for [math]\displaystyle{ {{\theta }_{1}} }[/math] and [math]\displaystyle{ {{\theta }_{2}} }[/math] that satisfy:

- [math]\displaystyle{ -2\cdot \text{ln}\left( \frac{L({{\theta }_{1}},{{\theta }_{2}})}{L({{\widehat{\theta }}_{1}},{{\widehat{\theta }}_{2}})} \right)=\chi _{\alpha ;1}^{2} }[/math]

This equation can be rewritten as:

- [math]\displaystyle{ L({{\theta }_{1}},{{\theta }_{2}})=L({{\widehat{\theta }}_{1}},{{\widehat{\theta }}_{2}})\cdot {{e}^{\tfrac{-\chi _{\alpha ;1}^{2}}{2}}} }[/math]

For complete data, the likelihood formula for the lognormal distribution is given by:

- [math]\displaystyle{ L({\mu }',{{\sigma }_{{{T}'}}})=\underset{i=1}{\overset{N}{\mathop \prod }}\,f({{x}_{i}};{\mu }',{{\sigma }_{{{T}'}}})=\underset{i=1}{\overset{N}{\mathop \prod }}\,\frac{1}{{{x}_{i}}\cdot {{\sigma }_{{{T}'}}}\cdot \sqrt{2\pi }}\cdot {{e}^{-\tfrac{1}{2}{{\left( \tfrac{\text{ln}({{x}_{i}})-{\mu }'}{{{\sigma }_{{{T}'}}}} \right)}^{2}}}} }[/math]

where the [math]\displaystyle{ {{x}_{i}} }[/math] values represent the original time-to-failure data. For a given value of [math]\displaystyle{ \alpha }[/math], values for [math]\displaystyle{ {\mu }' }[/math] and [math]\displaystyle{ {{\sigma }_{{{T}'}}} }[/math] can be found which represent the maximum and minimum values that satisfy Eqn. (lratio3). These represent the confidence bounds for the parameters at a confidence level [math]\displaystyle{ \delta }[/math], where [math]\displaystyle{ \alpha =\delta }[/math] for two-sided bounds and [math]\displaystyle{ \alpha =2\delta -1 }[/math] for one-sided bounds.

Example 5

Five units are put on a reliability test and experience failures at 45, 60, 75, 90, and 115 hours. Assuming a lognormal distribution, the MLE parameter estimates are calculated to be [math]\displaystyle{ {{\widehat{\mu }}^{\prime }}=4.2926 }[/math] and [math]\displaystyle{ {{\widehat{\sigma }}_{{{T}'}}}=0.32361. }[/math] Calculate the two-sided 75% confidence bounds on these parameters using the likelihood ratio method.

Solution to Example 5

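The first step is to calculate the likelihood function for the parameter estimates:

- [math]\displaystyle{ \begin{align} L({{\widehat{\mu }}^{\prime }},{{\widehat{\sigma }}_{{{T}'}}})= & \underset{i=1}{\overset{5}{\mathop \prod }}\,\frac{1}{{{x}_{i}}\cdot {{\widehat{\sigma }}_{{{T}'}}}\cdot \sqrt{2\pi }}\cdot {{e}^{-\tfrac{1}{2}{{\left( \tfrac{\text{ln}({{x}_{i}})-{{\widehat{\mu }}^{\prime }}}{{{\widehat{\sigma }}_{{{T}'}}}} \right)}^{2}}}} \\ = & \underset{i=1}{\overset{5}{\mathop \prod }}\,\frac{1}{{{x}_{i}}\cdot 0.32361\cdot \sqrt{2\pi }}\cdot {{e}^{-\tfrac{1}{2}{{\left( \tfrac{\text{ln}({{x}_{i}})-4.2926}{0.32361} \right)}^{2}}}} \\ = & 1.115256\times {{10}^{-10}} \end{align} }[/math]

where [math]\displaystyle{ {{x}_{i}} }[/math] are the original time-to-failure data points. We can now rearrange Eqn. (lratio3) to the form: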

- [math]\displaystyle{ L({\mu }',{{\sigma }_{{{T}'}}})-L({{\widehat{\mu }}^{\prime }},{{\widehat{\sigma }}_{{{T}'}}})\cdot {{e}^{\tfrac{-\chi _{\alpha ;1}^{2}}{2}}}=0 }[/math]

Since our specified confidence level, [math]\displaystyle{ \delta }[/math], is 75%, we can calculate the value of the chi-squared statistic, [math]\displaystyle{ \chi _{0.75;1}^{2}=1.323303. }[/math] We can now substitute this information into the equation:

- [math]\displaystyle{ \begin{align} & L({\mu }',{{\sigma }_{{{T}'}}})-L({{\widehat{\mu }}^{\prime }},{{\widehat{\sigma }}_{{{T}'}}})\cdot {{e}^{\tfrac{-\chi _{\alpha ;1}^{2}}{2}}}= & 0 \\ & L({\mu }',{{\sigma }_{{{T}'}}})-1.115256\times {{10}^{-10}}\cdot {{e}^{\tfrac{-1.323303}{2}}}= & 0 \\ & L({\mu }',{{\sigma }_{{{T}'}}})-5.754703\times {{10}^{-11}}= & 0 \end{align} }[/math]
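The numbers in this substitution can be reproduced with a short script. This is a sketch under stated assumptions: the helper name `lognormal_log_likelihood` is hypothetical, and the chi-squared quantile with one degree of freedom is obtained from the standard normal quantile (the square of the normal quantile at probability (1 + confidence)/2) so that only the Python standard library is needed.

```python
import math
from statistics import NormalDist

def lognormal_log_likelihood(times, mu_log, sigma_log):
    """Log-likelihood of complete lognormal data; logs avoid underflow in the product."""
    total = 0.0
    for x in times:
        z = (math.log(x) - mu_log) / sigma_log
        total += -math.log(x * sigma_log * math.sqrt(2 * math.pi)) - 0.5 * z * z
    return total

times = [45, 60, 75, 90, 115]        # Example 5 failure times (hours)
mu_hat, sigma_hat = 4.2926, 0.32361  # MLE estimates quoted in Example 5
confidence = 0.75

# Chi-squared quantile with 1 degree of freedom, via the standard normal quantile.
chi2 = NormalDist().inv_cdf((1 + confidence) / 2) ** 2

l_hat = math.exp(lognormal_log_likelihood(times, mu_hat, sigma_hat))
threshold = l_hat * math.exp(-chi2 / 2)
print(chi2, l_hat, threshold)
```

The three printed values should be close to 1.323303, 1.115256e-10, and 5.754703e-11 respectively.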

It now remains to find the values of [math]\displaystyle{ {\mu }' }[/math] and [math]\displaystyle{ {{\sigma }_{{{T}'}}} }[/math] which satisfy this equation. This is an iterative process that requires setting the value of [math]\displaystyle{ {{\sigma }_{{{T}'}}} }[/math] and finding the appropriate values of [math]\displaystyle{ {\mu }' }[/math], and vice versa.

The following table gives the values of [math]\displaystyle{ {\mu }' }[/math] based on given values of [math]\displaystyle{ {{\sigma }_{{{T}'}}} }[/math].

These points are represented graphically in the following contour plot:

(Note that this plot is generated with degrees of freedom [math]\displaystyle{ k=1 }[/math], as we are only determining bounds on one parameter. The contour plots generated in Weibull++ are done with degrees of freedom [math]\displaystyle{ k=2 }[/math], for use in comparing both parameters simultaneously.)

As can be determined from the table, the lowest calculated value for [math]\displaystyle{ {\mu }' }[/math] is 4.1145, while the highest is 4.4708. These represent the two-sided 75% confidence limits on this parameter. Since solutions for the equation do not exist for values of [math]\displaystyle{ {{\sigma }_{{{T}'}}} }[/math] below 0.24 or above 0.48, these can be considered approximate two-sided 75% confidence limits for this parameter. In order to obtain more accurate values for the confidence limits on [math]\displaystyle{ {{\sigma }_{{{T}'}}} }[/math], we can perform the same procedure as before, but finding the two values of [math]\displaystyle{ {{\sigma }_{{{T}'}}} }[/math] that correspond with a given value of [math]\displaystyle{ {\mu }'. }[/math] Using this method, we find that the 75% confidence limits on [math]\displaystyle{ {{\sigma }_{{{T}'}}} }[/math] are 0.23405 and 0.48936, which are close to the initial estimates of 0.24 and 0.48.
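The limits quoted above can be approximated numerically without building the table. The sketch below is not the tabulation procedure described in the text: it swaps in a profile-likelihood shortcut, in which the extreme value of one parameter on the contour is found by maximizing the likelihood over the other parameter and then bisecting for the point where the likelihood ratio equation is satisfied. All function names are hypothetical.

```python
import math
from statistics import NormalDist

times = [45, 60, 75, 90, 115]                 # Example 5 failure times (hours)
logs = [math.log(x) for x in times]
n = len(logs)

def log_lik(mu, sigma):
    """Lognormal log-likelihood for complete data."""
    ss = sum((y - mu) ** 2 for y in logs)
    return -sum(logs) - n * math.log(sigma * math.sqrt(2 * math.pi)) - ss / (2 * sigma ** 2)

mu_hat = sum(logs) / n
sigma_hat = math.sqrt(sum((y - mu_hat) ** 2 for y in logs) / n)
chi2 = NormalDist().inv_cdf((1 + 0.75) / 2) ** 2   # chi-squared quantile, 1 dof
target = log_lik(mu_hat, sigma_hat) - chi2 / 2     # log of the right-hand side

def profile_mu(mu):
    # For a fixed mu', the likelihood is largest at this value of sigma.
    best_sigma = math.sqrt(sum((y - mu) ** 2 for y in logs) / n)
    return log_lik(mu, best_sigma) - target

def profile_sigma(sigma):
    # For a fixed sigma, the likelihood is largest at mu' = mu_hat.
    return log_lik(mu_hat, sigma) - target

def bisect(f, inside, outside, iterations=100):
    """Find the root of f between a point with f > 0 and a point with f < 0."""
    for _ in range(iterations):
        mid = (inside + outside) / 2
        if f(mid) > 0:
            inside = mid
        else:
            outside = mid
    return (inside + outside) / 2

mu_low = bisect(profile_mu, mu_hat, mu_hat - 2.0)
mu_high = bisect(profile_mu, mu_hat, mu_hat + 2.0)
sigma_low = bisect(profile_sigma, sigma_hat, sigma_hat / 10)
sigma_high = bisect(profile_sigma, sigma_hat, sigma_hat * 10)
print(mu_low, mu_high, sigma_low, sigma_high)
```

The results should agree with the limits stated in the text to about three decimal places; small differences in the last digits are expected from rounding and from the different search procedure.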

Bounds on Time and Reliability

In order to calculate the bounds on a time estimate for a given reliability, or on a reliability estimate for a given time, the likelihood function needs to be rewritten in terms of one parameter and time/reliability, so that the maximum and minimum values of the time can be observed as the parameter is varied. This can be accomplished by substituting a form of the lognormal reliability equation into the likelihood function. The lognormal reliability equation can be written as:

- [math]\displaystyle{ R=1-\Phi \left( \frac{\text{ln}(t)-{\mu }'}{{{\sigma }_{{{T}'}}}} \right) }[/math]

This can be rearranged to the form:

- [math]\displaystyle{ {\mu }'=\text{ln}(t)-{{\sigma }_{{{T}'}}}\cdot {{\Phi }^{-1}}(1-R) }[/math]

where [math]\displaystyle{ {{\Phi }^{-1}} }[/math] is the inverse of the standard normal cumulative distribution function. This equation can now be substituted into Eqn. (lognormlikelihood) to produce a likelihood equation in terms of [math]\displaystyle{ {{\sigma }_{{{T}'}}} }[/math], [math]\displaystyle{ t }[/math] and [math]\displaystyle{ R }[/math]:

- [math]\displaystyle{ L({{\sigma }_{{{T}'}}},t/R)=\underset{i=1}{\overset{N}{\mathop \prod }}\,\frac{1}{{{x}_{i}}\cdot {{\sigma }_{{{T}'}}}\cdot \sqrt{2\pi }}\cdot {{e}^{-\tfrac{1}{2}{{\left( \tfrac{\text{ln}({{x}_{i}})-\left( \text{ln}(t)-{{\sigma }_{{{T}'}}}\cdot {{\Phi }^{-1}}(1-R) \right)}{{{\sigma }_{{{T}'}}}} \right)}^{2}}}} }[/math]

The unknown variable [math]\displaystyle{ t/R }[/math] depends on what type of bounds are being determined. If one is trying to determine the bounds on time for a given reliability, then [math]\displaystyle{ R }[/math] is a known constant and [math]\displaystyle{ t }[/math] is the unknown variable. Conversely, if one is trying to determine the bounds on reliability for a given time, then [math]\displaystyle{ t }[/math] is a known constant and [math]\displaystyle{ R }[/math] is the unknown variable. Either way, Eqn. (lognormliketr) can be used to solve Eqn. (lratio3) for the values of interest.

Example 6

For the data given in Example 5, determine the two-sided 75% confidence bounds on the time estimate for a reliability of 80%. The ML estimate for the time at [math]\displaystyle{ R(t)=80\% }[/math] is 55.718 hours.

Solution to Example 6

In this example, we are trying to determine the two-sided 75% confidence bounds on the time estimate of 55.718. This is accomplished by substituting [math]\displaystyle{ R=0.80 }[/math] and [math]\displaystyle{ \alpha =0.75 }[/math] into Eqn. (lognormliketr), and varying [math]\displaystyle{ {{\sigma }_{{{T}'}}} }[/math] until the maximum and minimum values of [math]\displaystyle{ t }[/math] are found. The following table gives the values of [math]\displaystyle{ t }[/math] based on given values of [math]\displaystyle{ {{\sigma }_{{{T}'}}} }[/math].

This data set is represented graphically in the following contour plot:

As can be determined from the table, the lowest calculated value for [math]\displaystyle{ t }[/math] is 43.634, while the highest is 66.085. These represent the two-sided 75% confidence limits on the time at which reliability is equal to 80%.
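The search over the log-standard deviation described in this example can be sketched as a simple grid scan. For complete data the likelihood ratio equation can be solved for the log-mean in closed form at each value of the log-standard deviation, which is the shortcut assumed here; the grid range, the step size, and the helper name `mu_on_contour` are arbitrary illustrative choices.

```python
import math
from statistics import NormalDist

times = [45, 60, 75, 90, 115]                 # Example 5 failure times (hours)
logs = [math.log(x) for x in times]
n = len(logs)
mu_hat = sum(logs) / n
sigma_hat = math.sqrt(sum((y - mu_hat) ** 2 for y in logs) / n)
chi2 = NormalDist().inv_cdf((1 + 0.75) / 2) ** 2   # chi-squared quantile, 1 dof

def mu_on_contour(sigma):
    """Both mu' values on the likelihood ratio contour for this sigma, or None."""
    # From -2 ln(L / L_hat) = chi2 with complete data:
    # (mu' - mu_hat)^2 = sigma^2 (1 + chi2/n - 2 ln(sigma/sigma_hat)) - sigma_hat^2
    d2 = sigma ** 2 * (1 + chi2 / n - 2 * math.log(sigma / sigma_hat)) - sigma_hat ** 2
    if d2 < 0:
        return None                           # no solution for this sigma
    d = math.sqrt(d2)
    return mu_hat - d, mu_hat + d

z = NormalDist().inv_cdf(1 - 0.80)            # inverse normal at 1 - R, with R = 0.80
t_values = []
for i in range(2000, 5501):                   # sigma from 0.2000 to 0.5500
    sigma = i / 10000
    mus = mu_on_contour(sigma)
    if mus is not None:
        # ln(t) = mu' + sigma * inverse normal at (1 - R), rearranged from the text
        t_values.extend(math.exp(mu + sigma * z) for mu in mus)

t_low, t_high = min(t_values), max(t_values)
print(t_low, t_high)
```

The smallest and largest times found on the contour should be close to the limits of 43.634 and 66.085 quoted above.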

Example 7

For the data given in Example 5, determine the two-sided 75% confidence bounds on the reliability estimate for [math]\displaystyle{ t=65 }[/math]. The ML estimate for the reliability at [math]\displaystyle{ t=65 }[/math] is 64.261%.

Solution to Example 7

In this example, we are trying to determine the two-sided 75% confidence bounds on the reliability estimate of 64.261%. This is accomplished by substituting [math]\displaystyle{ t=65 }[/math] and [math]\displaystyle{ \alpha =0.75 }[/math] into Eqn. (lognormliketr), and varying [math]\displaystyle{ {{\sigma }_{{{T}'}}} }[/math] until the maximum and minimum values of [math]\displaystyle{ R }[/math] are found. The following table gives the values of [math]\displaystyle{ R }[/math] based on given values of [math]\displaystyle{ {{\sigma }_{{{T}'}}} }[/math].

This data set is represented graphically in the following contour plot:

As can be determined from the table, the lowest calculated value for [math]\displaystyle{ R }[/math] is 43.444%, while the highest is 81.508%. These represent the two-sided 75% confidence limits on the reliability at [math]\displaystyle{ t=65 }[/math].
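The reliability limits in this example can be approximated in the same way as the time limits: scan the log-standard deviation, solve the likelihood ratio equation for the log-mean at each value, and evaluate the lognormal reliability at t = 65. The closed-form contour solution for complete data, the grid, and the helper name `mu_on_contour` are assumptions of this sketch rather than the procedure used to build the table.

```python
import math
from statistics import NormalDist

std_normal = NormalDist()
times = [45, 60, 75, 90, 115]                 # Example 5 failure times (hours)
logs = [math.log(x) for x in times]
n = len(logs)
mu_hat = sum(logs) / n
sigma_hat = math.sqrt(sum((y - mu_hat) ** 2 for y in logs) / n)
chi2 = std_normal.inv_cdf((1 + 0.75) / 2) ** 2     # chi-squared quantile, 1 dof

def mu_on_contour(sigma):
    """Both mu' values on the likelihood ratio contour for this sigma, or None."""
    d2 = sigma ** 2 * (1 + chi2 / n - 2 * math.log(sigma / sigma_hat)) - sigma_hat ** 2
    if d2 < 0:
        return None                           # no solution for this sigma
    d = math.sqrt(d2)
    return mu_hat - d, mu_hat + d

log_t = math.log(65)                          # mission time of interest
r_values = []
for i in range(2000, 5501):                   # sigma from 0.2000 to 0.5500
    sigma = i / 10000
    mus = mu_on_contour(sigma)
    if mus is not None:
        # R = 1 - Phi((ln(t) - mu') / sigma), the lognormal reliability equation
        r_values.extend(1 - std_normal.cdf((log_t - mu) / sigma) for mu in mus)

r_low, r_high = min(r_values), max(r_values)
print(r_low, r_high)
```

The extremes should be close to 0.43444 and 0.81508, matching the limits quoted above.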