Template:Lognormal distributionrank regression on x: Difference between revisions


Revision as of 23:19, 10 February 2012

Rank Regression on X

Performing a rank regression on X requires that a straight line be fitted to a set of data points such that the sum of the squares of the horizontal deviations from the points to the line is minimized.

Again, the first task is to bring the [math]\displaystyle{ cdf }[/math] into a linear form. This step is exactly the same as in the regression on Y analysis, and Eqns. (lnorm), (yln), (aln) and (bln) apply in this case too. The two analyses diverge at the least squares fit, where in this case we treat [math]\displaystyle{ x }[/math] as the dependent variable and [math]\displaystyle{ y }[/math] as the independent variable. The best-fitting straight line to the data, for regression on X (see Chapter 3), is:

- [math]\displaystyle{ x=\widehat{a}+\widehat{b}y }[/math]

The corresponding equations for [math]\displaystyle{ \widehat{a} }[/math] and [math]\displaystyle{ \widehat{b} }[/math] are:

- [math]\displaystyle{ \hat{a}=\overline{x}-\hat{b}\overline{y}=\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}}{N}-\hat{b}\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}}}{N} }[/math]

- and:

- [math]\displaystyle{ \hat{b}=\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}{{y}_{i}}-\tfrac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}}}{N}}{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,y_{i}^{2}-\tfrac{{{\left( \underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}} \right)}^{2}}}{N}} }[/math]

- where:

- [math]\displaystyle{ {{y}_{i}}={{\Phi }^{-1}}\left[ F(T_{i}^{\prime }) \right] }[/math]

- and:

- [math]\displaystyle{ {{x}_{i}}=T_{i}^{\prime } }[/math]

and [math]\displaystyle{ F(T_{i}^{\prime }) }[/math] is estimated from the median ranks. Once [math]\displaystyle{ \widehat{a} }[/math] and [math]\displaystyle{ \widehat{b} }[/math] are obtained, solve Eqn. (xlineln) for [math]\displaystyle{ y }[/math], which gives:

- [math]\displaystyle{ y=-\frac{\widehat{a}}{\widehat{b}}+\frac{1}{\widehat{b}}x }[/math]

Solving for the parameters from Eqns. (bln) and (aln) we get:

- [math]\displaystyle{ a=-\frac{\widehat{a}}{\widehat{b}}=-\frac{{{\mu }'}}{{{\sigma }_{{{T}'}}}} }[/math]

- and:

- [math]\displaystyle{ b=\frac{1}{\widehat{b}}=\frac{1}{{{\sigma }_{{{T}'}}}} }[/math]

The correlation coefficient is evaluated as before using Eqn. (RHOln).
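The procedure above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the method used by any particular software package: the data set is complete (no suspensions), [math]\displaystyle{ T_{i}^{\prime }=\ln ({{T}_{i}}) }[/math] as in the earlier lognormal equations, and the median ranks are approximated with Benard's formula [math]\displaystyle{ (i-0.3)/(N+0.4) }[/math]. The function name `rank_regression_on_x` and the failure times are hypothetical.

```python
import math
from statistics import NormalDist


def rank_regression_on_x(times):
    """Return (a_hat, b_hat) for the line x = a_hat + b_hat * y.

    Sketch only: assumes complete data and Benard's approximation
    for the median ranks.
    """
    t = sorted(times)
    n = len(t)
    # Assumption: Benard's approximation to the median rank of the i-th failure.
    f = [(i - 0.3) / (n + 0.4) for i in range(1, n + 1)]
    x = [math.log(ti) for ti in t]               # x_i = T_i' = ln(T_i)
    y = [NormalDist().inv_cdf(fi) for fi in f]   # y_i = Phi^{-1}[F(T_i')]

    sum_x, sum_y = sum(x), sum(y)
    sum_xy = sum(xi * yi for xi, yi in zip(x, y))
    sum_yy = sum(yi * yi for yi in y)

    # Least squares with x as the dependent variable (horizontal deviations).
    b_hat = (sum_xy - sum_x * sum_y / n) / (sum_yy - sum_y ** 2 / n)
    a_hat = sum_x / n - b_hat * sum_y / n
    return a_hat, b_hat


# Hypothetical failure times in hours, for illustration only.
example_times = [12.0, 20.0, 33.0, 47.0, 81.0]
a_hat, b_hat = rank_regression_on_x(example_times)
mu_prime = a_hat        # since -a_hat/b_hat = -mu'/sigma_T' and 1/b_hat = 1/sigma_T'
sigma_t_prime = b_hat
```

Because Benard's ranks are symmetric about 0.5, the [math]\displaystyle{ {{y}_{i}} }[/math] sum to zero (up to rounding), so in this sketch [math]\displaystyle{ \widehat{a} }[/math] reduces to the mean of the [math]\displaystyle{ T_{i}^{\prime } }[/math].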

Example 3

Using the data of Example 2 and assuming a lognormal distribution, estimate the parameters and estimate the correlation coefficient, [math]\displaystyle{ \rho }[/math] , using rank regression on X.

Solution to Example 3

Table 9.2 constructed in Example 2 applies to this example as well. Using the values in this table we get:

- [math]\displaystyle{ \begin{align} \hat{b} &= \frac{\underset{i=1}{\overset{14}{\mathop{\sum }}}\,T_{i}^{\prime }{{y}_{i}}-\tfrac{\underset{i=1}{\overset{14}{\mathop{\sum }}}\,T_{i}^{\prime }\underset{i=1}{\overset{14}{\mathop{\sum }}}\,{{y}_{i}}}{14}}{\underset{i=1}{\overset{14}{\mathop{\sum }}}\,y_{i}^{2}-\tfrac{{{\left( \underset{i=1}{\overset{14}{\mathop{\sum }}}\,{{y}_{i}} \right)}^{2}}}{14}} \\ \hat{b} &= \frac{10.4473-(49.2220)(0)/14}{11.3646-{{(0)}^{2}}/14} \end{align} }[/math]

- or:

- [math]\displaystyle{ \widehat{b}=0.9193 }[/math]

- and:

- [math]\displaystyle{ \hat{a}=\overline{x}-\hat{b}\overline{y}=\frac{\underset{i=1}{\overset{14}{\mathop{\sum }}}\,T_{i}^{\prime }}{14}-\widehat{b}\frac{\underset{i=1}{\overset{14}{\mathop{\sum }}}\,{{y}_{i}}}{14} }[/math]

- or:

- [math]\displaystyle{ \widehat{a}=\frac{49.2220}{14}-(0.9193)\frac{(0)}{14}=3.5159 }[/math]

Therefore, from Eqn. (blnx):

- [math]\displaystyle{ {{\sigma }_{{{T}'}}}=\widehat{b}=0.9193 }[/math]

and from Eqn. (alnx):

- [math]\displaystyle{ {\mu }'=\frac{\widehat{a}}{\widehat{b}}{{\sigma }_{{{T}'}}}=\frac{3.5159}{0.9193}\cdot 0.9193=3.5159 }[/math]
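The arithmetic of this solution can be checked directly from the sums quoted above. The sketch below only re-evaluates the two least squares formulas with those values; the variable names are our own.

```python
n = 14
sum_x = 49.2220    # sum of T_i' (from the example)
sum_y = 0.0        # sum of y_i
sum_xy = 10.4473   # sum of T_i' * y_i
sum_yy = 11.3646   # sum of y_i^2

b_hat = (sum_xy - sum_x * sum_y / n) / (sum_yy - sum_y ** 2 / n)
a_hat = sum_x / n - b_hat * sum_y / n

sigma_t_prime = b_hat                       # sigma_T' = b_hat
mu_prime = (a_hat / b_hat) * sigma_t_prime  # mu' = (a_hat / b_hat) * sigma_T'

print(round(b_hat, 4), round(a_hat, 4))  # prints 0.9193 3.5159
```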

Using Eqns. (mean) and (sdv) we get:

- [math]\displaystyle{ \overline{T}=\mu =51.3393\text{ hours} }[/math]

- and:

- [math]\displaystyle{ {{\sigma }_{T}}=59.1682\text{ hours}. }[/math]
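Eqns. (mean) and (sdv) are not reproduced in this section. Assuming they are the standard lognormal relations [math]\displaystyle{ \mu ={{e}^{{\mu }'+\sigma _{{{T}'}}^{2}/2}} }[/math] and [math]\displaystyle{ {{\sigma }_{T}}=\sqrt{\left( {{e}^{\sigma _{{{T}'}}^{2}}}-1 \right){{e}^{2{\mu }'+\sigma _{{{T}'}}^{2}}}} }[/math], the conversion from the log-scale parameters can be sketched as:

```python
import math

mu_prime = 3.5159        # mean of ln(T), from the regression
sigma_t_prime = 0.9193   # standard deviation of ln(T), from the regression

# Assumption: standard lognormal mean and standard deviation formulas.
variance_log = sigma_t_prime ** 2
mean_t = math.exp(mu_prime + variance_log / 2)
sd_t = math.sqrt((math.exp(variance_log) - 1) * math.exp(2 * mu_prime + variance_log))

print(f"mean = {mean_t:.2f} hours, sd = {sd_t:.2f} hours")
```

With the rounded inputs above, the results agree with the values in the text to within rounding.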

The correlation coefficient is found using Eqn. (RHOln):

- [math]\displaystyle{ \widehat{\rho }=0.9754. }[/math]

Note that the results of the regression on Y analysis are not necessarily the same as those of the regression on X. The two regression types give the same results (i.e., yield the same equation for a line) only when the data lie perfectly on a line.
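This difference between the two regression types can be illustrated numerically. The sketch below fits both lines to a small set of points and expresses each as [math]\displaystyle{ y=a+bx }[/math]; the helper names and the data points are hypothetical and chosen only for illustration.

```python
def least_squares(u, v):
    """Fit v = a + b*u by least squares, with v as the dependent variable."""
    n = len(u)
    sum_u, sum_v = sum(u), sum(v)
    sum_uv = sum(ui * vi for ui, vi in zip(u, v))
    sum_uu = sum(ui * ui for ui in u)
    b = (sum_uv - sum_u * sum_v / n) / (sum_uu - sum_u ** 2 / n)
    a = sum_v / n - b * sum_u / n
    return a, b


def both_lines(x, y):
    """Return the RRY and RRX lines, each as (intercept, slope) of y = a + b*x."""
    rry = least_squares(x, y)              # minimizes vertical deviations
    a_hat, b_hat = least_squares(y, x)     # x = a_hat + b_hat*y, horizontal deviations
    rrx = (-a_hat / b_hat, 1.0 / b_hat)    # solved for y
    return rry, rrx


x = [1.0, 2.0, 3.0, 4.0]
exact = both_lines(x, [2.0 * xi + 1.0 for xi in x])  # points exactly on y = 1 + 2x
noisy = both_lines(x, [3.2, 4.7, 7.4, 8.6])          # same trend with scatter

print("exact:", exact)
print("noisy:", noisy)
```

For the points that lie exactly on a line, both fits return the same intercept and slope. For the scattered points, the two slopes differ.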

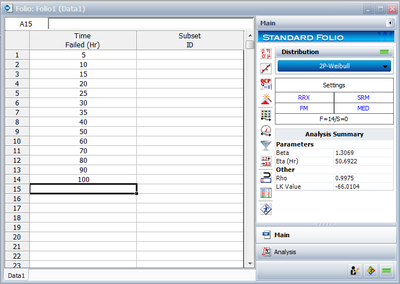

Using Weibull++ with the Rank Regression on X option, the results are: